aiden

Members-

Posts

951 -

Joined

-

Last visited

Converted

-

Gender

Undisclosed

aiden's Achievements

Collaborator (7/14)

1

Reputation

-

All the logs look like this...

Mar 15 07:12:05 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:12:05 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:14:17 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:14:17 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:16:29 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:16:29 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:18:41 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:18:41 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:20:51 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:20:51 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:23:03 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:23:03 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:25:15 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:25:15 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:27:28 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:27:28 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:29:39 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:29:39 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:31:49 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:31:49 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:34:01 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:34:01 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:36:13 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:36:13 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:38:26 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:38:26 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:40:38 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:40:38 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:42:49 preclear_disk_ZL2KAPEB_9509: cp: cannot create regular file '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory
Mar 15 07:42:49 preclear_disk_ZL2KAPEB_9509: mv: cannot stat '/boot/preclear_reports/ZL2KAPEB.resume.tmp': No such file or directory

-

Okay, I've been absent for a while, and suddenly we're on v 6.5.3 with 6.6 looming. The sys admin in me just doesn't trust the "Check for Updates" easy button in the unRAID gui, especially given how complicated past updates have been (I've been using unRAID since the 4.x days). I've been reading countless update threads for the past hour, trying to discern what the generally accepted stable version is currently (6.4.1, 6.5.0, 6.5.1..?), and I just can't get a good handle on what to upgrade to.

What is the recommended update procedure from v6.3? Which version should I go to?

System Config

- Single parity
- All drives are ReiserFS, including cache

I noted several variations of upgrade issues for different users depending on their configuration, so I'm listing my add-ons below in case anything stands out as a "definitely disable / delete and reinstall" situation.

Docker

- MySQL

Plugins

- Community Applications (Andrew Zawadzki) 2017.11.23
- CA Auto Update Applications (Andrew Zawadzki) 2017.10.28
- CA Backup/Restore App Data (Andrew Zawadzki) 2017.01.28
- CA Cleanup App Data (Andrew Zawadzki) 2017.11.24a
- Dynamix System Buttons (Bergware) 2017.06.07
- Dynamix System Information (Bergware) 2017.8.23
- Dynamix System Statistics (Bergware) 2017.10.02b
- Dynamix WebGUI (Bergware) 2017.03.30
- Fix Common Problems (Andrew Zawadzki) 2017.10.15a
- Nerd Tools (dmacias72) 2017.10.03a
- Plex Media Server (PhAzE) 2016.09.17.1
- SABnzbd (PhAzE) 2016.11.29.1
- Sick Beard (PhAzE) 2016.09.17.1
- Unassigned Devices (dlandon) 2017.10.17

I have been totally stable with this build, and the only reason I want to upgrade is because Community Applications will no longer update unless I'm at least on version 6.4. I appreciate any advice and experiences with these versions to help me formulate an upgrade path.

-

aiden started following Emulators??

-

I find myself in the position of needing to upgrade my hardware to a new chassis. I have been looking hard at 45 Drives, but they are spendy. I've always loved the look of the XServe design, and it's possible to purchase SEVERAL of them for the price of one empty Storinator case. I realize that it would take 10U (3 XServe RAIDs + 1 XServe) to equal the capacity of a 4U Storinator. But the aesthetics are a different story. I notice cooling is a concern, but that seems to depend on fan placement and channeling. But my question is, is it worth spending the time + money to buy some of these old girls and rip their guts out? Do all the status indicators still work for those of you who have embarked on this journey? Any other gotchas I should consider? Thanks!

-

The biggest advantage of ZFS to my mind is the self-healing properties, protecting against bit rot etc. That is ideal for a hard disk archive. But the downsides have already been mentioned: no mixed drives, multiple drives per pool means all drives spin up together, etc. The merits of ReiserFS have been discussed in ancient threads on here, but the primary reason it's still in use is its robust journaling. BTRFS is most likely the path forward, though there has been no mention of it beyond the cache drives.

-

You do realize that this is pre-1.0 software with only 2 point releases so far? Unfortunately, some people don't understand the concept of pre-releases, alpha testing, development cycles, proof-of-concept, etc. They just want it now. Typically, these are people who do not have the skills or experience necessary to truly appreciate what it takes to do all of this kind of work on your own time, by yourself, and with no compensation beyond gratitude. I look forward to your continued development on this project. It is indeed a lofty goal, though sorely needed. Tom has attempted to reboot the UI several times in the past, but this is much more advanced. Please don't feel discouraged by uninformed posts such as the one above, and realize that many of us respect the time and effort it takes simply to think about designing something as complex as this. When life gets out of your way, I hope you'll be able to dive back into this like you did during the summer. Good luck.

-

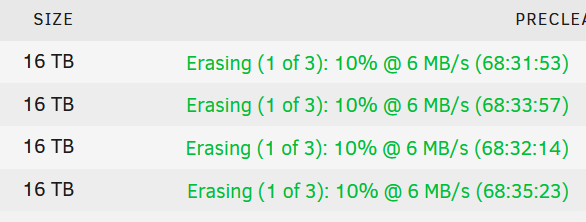

I would agree with you, if I hadn't detected errors in my third pass of 2 drives in the past few years. If I had only done 2 cycles, they would have passed. I know there's a point of diminishing returns with repeated cycles, and someone could argue that 4 cycles caught a failure. But after my experiences, 3 passes are worth the wait in my mind.

-

Very nice... thanks for posting this update.

-

C.O.R.P.S. – Centralized Operations in Rack Portable Storage

aiden replied to pyrater's topic in Unraid Compulsive Design

He listed the case he's using with the parts list -- HARDIGG CASES 413-665-2163 ... and a quick Google for this case shows they are available on e-bay for exactly what he's listed ($184.23), so I suspect that's where he purchased it. http://www.ebay.com/itm/HARDIGG-CASES-413-665-2163-SHIPPING-STORAGE-HARD-CASE-/190709478495

Interesting, because my "quick" Google search came back that 413-665-2163 is the TELEPHONE number for Hardigg. That's why I asked the question. My guess is that it is in fact NOT the part number for the actual item, and that the eBay listing is incorrect. I appreciate your attempt to answer for him, but you of all people should know I do plenty of my own research before I start asking questions. If that is where the OP found the item, then so be it. But I would rather have him tell me, just the same.

-

C.O.R.P.S. – Centralized Operations in Rack Portable Storage

aiden replied to pyrater's topic in Unraid Compulsive Design

More importantly how will he/she move it, wouldn't want that thing sitting in my living room lol.

This is no joke. With 24 hard drives in the unRAID server, another server, a rackmount UPS, and the weight of the case, this will weigh a LOT. Why one large case instead of several smaller ones? I would think when going mobile, that would help make it easier to move the whole system.

EDIT: Never mind. The cost of these kinds of cases is very prohibitive. Could you provide a link to where you are sourcing yours? I like the idea of a modular setup like this, because it's a completely self-contained, enterprise-quality infrastructure in a half-height rack. I will watch this DIY with interest.

-

That's definitely a proof of concept. The airflow is completely wrong, however. You need the fans to blow across ALL the drives, not hit the first one flat and disperse around the rest. If you follow that layout exactly, you would need to put a fan on the bottom and top, pulling air from the bottom and exhausting out the top. Whatever you decide to do, keep that in mind.

-

It IS available in the US, but it is out of stock right now. http://www.u-nas.com/xcart/product.php?productid=17617&cat=249

-

ASRock debuts Z87 motherboard with 22 SATA-ports

aiden replied to greenythebeast's topic in Motherboards and CPUs

I agree. Plus, redundancy would make things more redundant, which is always more durable on dedicated systems.

-

4TB Hitachi Deskstar Internal Desktop Hard Drive $152.67

aiden replied to Mailman74's topic in Good Deals!

Love my Coolspins. They are also the drives of choice for Backblaze.

-

There's something to be said for having some extra power on board if you need it. When the 5TB drives come along and you get that upgrade itch, the drives may be more power hungry than your current drives. Then you'd have to upgrade the PSU as well. Just more food for your brain.