Posts posted by TimTheSettler

-

I have this problem on one of my servers, but only the first docker app is affected. All the other apps are OK.

I didn't bother to do anything, and now, a couple of months later, on a completely different server, I have the same problem. Only the first docker app is affected (note that krusader is the second app on this other server and it's OK there).

So I looked at the template file and found that it was corrupted. That's just weird.

-

On 3/11/2024 at 7:55 AM, Kiara_taylor said:

Do we need a NAS? Does it have to run on Windows? What about SAS drives?

Our needs are simple:

- Not a lot of storage required (even 10TB would do)

- Protection against drive failures (daily backups are a must)

- Files need to be accessible on Windows via UNC Path

First of all, I completely agree with @Miss_Sissy's post. She gives good advice. I would add that it might be a good idea to hire one of the paid support people to help you through the process (https://unraid.net/blog/unraid-paid-support).

I was a Windows guy, but Windows was getting too expensive for me, and all the software that ran on Windows was just as expensive. I needed a more reliable but cheaper option that had a good GUI (I'm not a Linux guy). I tried TrueNAS; it's good and powerful but complicated. I tried unRAID and I really like it. I think you will too.

-


Sorry, a bit late to the game here.

When I hear of HA I think of clustering/replication and load balancing. You should read the following articles:

https://www.ituonline.com/blogs/achieving-high-availability/

https://mariadb.com/kb/en/kubernetes-overview-for-mariadb-users/

Proxmox, VMware, Hyper-V, and unRAID are all VMEs, but to different degrees. These alone don't give you High Availability; it's the apps inside that need to be designed for it. But if you have multiple VMEs and, of course, multiple VMs (dockers) inside them, then you can set up the apps with the proper clustering (vertical/horizontal), replication, and load balancing, assuming the app supports these strategies.

On 12/23/2022 at 3:31 PM, Jclendineng said:

that’s true, I could do that, I was more thinking about ha within unraid so running 2 instances of the sql docker so I can patch one and not take all the apps relying on it down.

But in your case the goal is to minimize downtime when you're doing an upgrade, and you've hit upon the solution:

- Copy/back up the original container (Container A) to another container (Container B) and then point the app to that backup.

- Upgrade the original container (Container A).

- Copy/back up Container B (the backup) to the original container (Container A) and then point the app to the original again.

-

5 hours ago, paokkerkir said:

I have seen multiple reports of corruption with btrfs for cache; granted these were from 2-3 years back.

3 minutes ago, JonathanM said:

b is by far the most common thing we see, improper settings can fill a volume very quickly.

Good to know. I guess I've been lucky or prudent with my settings.

Many people like to use the Mover but I actually turned it off. My array is used for all my data and my cache is used for appdata.

The beauty of unRAID is that you can play around with stuff. Get started with some simple, basic settings and then tweak things as you go.

-

3 hours ago, paokkerkir said:

First of all, I understand that ECC RAM is vastly preferred, especially for ZFS. Should I not use the hardware I plan at all and opt for an ECC-based solution, even if I'm using XFS?

ECC is quite expensive and you already have the hardware. It's also one of those one-in-a-million situations that isn't worth worrying about. Will you be using a parity HDD?

3 hours ago, paokkerkir said:

Planning on using XFS for the array - this is still what is recommended from what I understand.

Use XFS. Wait for ZFS to mature and then maybe use it later (you can switch over at a later time). There is a whole list of benefits and disadvantages, so it's better to keep it simple for now and use XFS.

3 hours ago, paokkerkir said:

Especially for the cache I have some questions. I'm planning on using the 512gb nvme as cache for appdata/docker etc (with backups daily) and the 240gb ssd for plex cache only. This is still possible with XFS? Is it not the recommended way?

It's a good idea to double up the NVMe and SSD so that they are mirrored. Use two NVMe drives as Cache1 and two SSDs as Cache2, or use the NVMe and SSD you have today together as Cache1. Use btrfs as the file system (keep it simple and avoid ZFS for now). With btrfs, all cache devices in the same pool are mirrored.

3 hours ago, paokkerkir said:

I'm planning on using the 512gb nvme as cache for appdata/docker etc (with backups daily) and the 240gb ssd for plex cache only.

Use one cache for the appdata (docker). Be sure to use the Appdata Backup plugin to back that up. You don't need daily backups unless the apps you're using change each day. Use the other cache for the Mover. You don't need to do anything special for Plex; it should be fast enough reading from the HDDs (I've never had problems streaming Plex from my server). Use the NVMe cache for the Mover and the SSD for the apps; that way file transfers are nice and fast, and the SSD will be fast enough for the apps.

3 hours ago, paokkerkir said:

I get that with Btrfs there are some issues for cache, not sure if this is still the case.

Not sure what issues you are talking about. Been using this for a few years now.

-

Just so that I've got it right, you back up the photos folder on the file server to the photos folder on the backup server using Duplicacy. I would then copy that Duplicacy backup folder/file to the cloud. If the photos folder on the file server is lost you restore from the backup server and if the photos folder on the backup server is lost then you can rebuild from the photos folder on the file server or from the Duplicacy folder/file in the cloud. If both your file server and backup server are lost then you restore from the cloud.

-

18 hours ago, Big Mike said:

Maybe if I had let it do the initial copy I wouldn’t have had this problem.

I see what you're saying. I generally copy the data so that my two folders are identical and then I point syncthing to those folders and from that point on let it manage the synchronization.

You might want to give it a try again because it's constantly updated and maybe there was a flaw when you first used it.

One last note is that I've come across a couple of odd-ball cases where the folder/device gets confused. It's usually because I tried to do something I shouldn't have (as Rysz points out), like pausing the folder for too long. If something looks weird, you can recreate the folder (delete it and create it again) or reset the folder using the following curl API call.

-

13 hours ago, Big Mike said:

huge amount of data

Define "huge amount of data". Large number of files/folders or the size of the files?

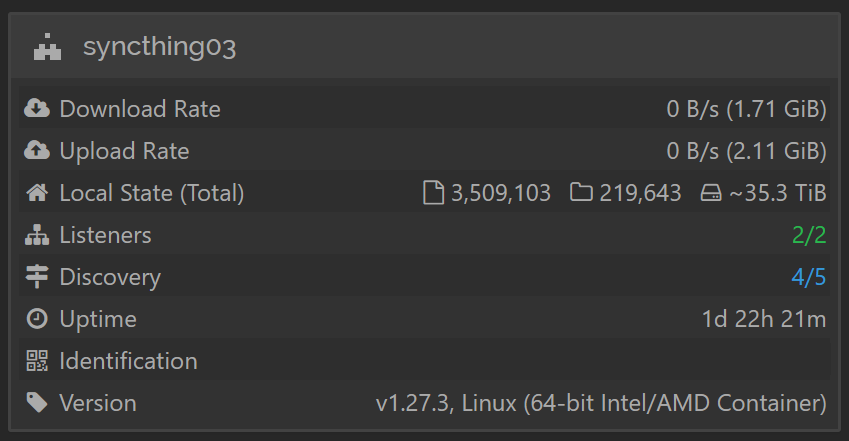

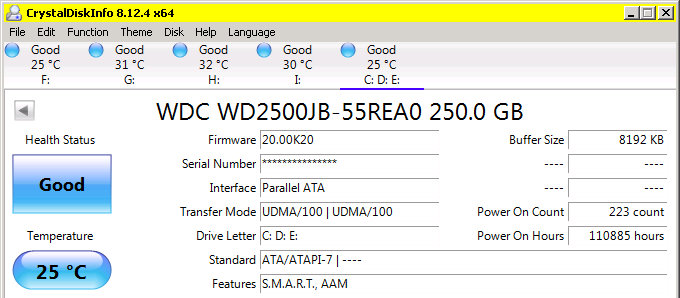

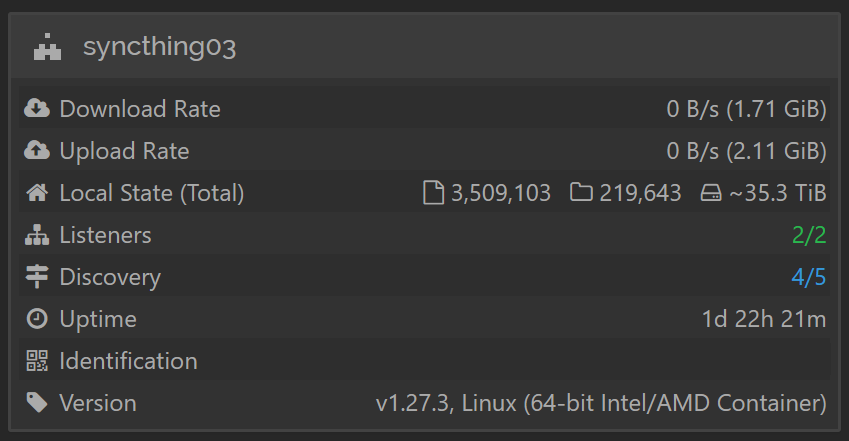

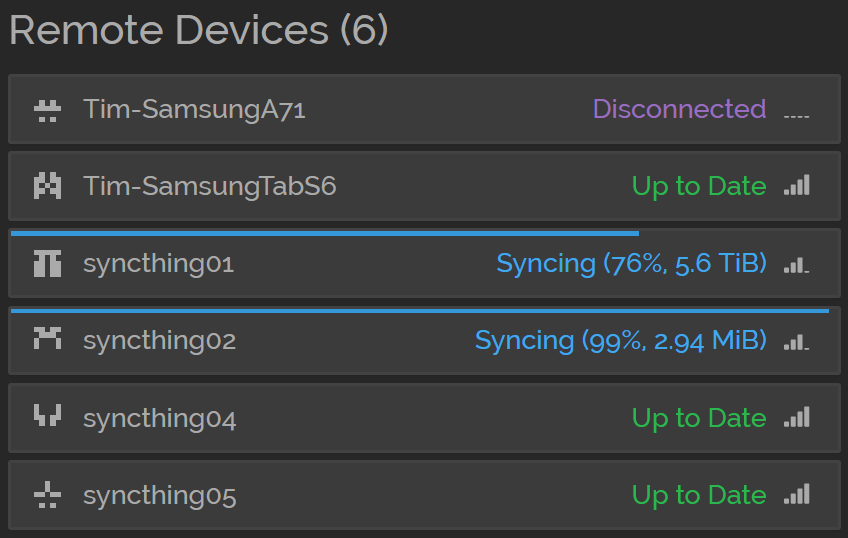

I use syncthing to sync data across four of my servers at different locations. No problems in general, but I have noticed that large files (8GB+) tend to take a while to sync. For example, a single 8GB file will take much longer than 100 files totalling 8GB.

-

12 hours ago, ConnerVT said:

I have a keyring of flash drives.

I'm imagining this

and this

and this

-


On 2/11/2024 at 11:17 PM, sonofdbn said:

All of this makes me think that I might surrender to our Google overlords and just use Chrome on everything.

Please don't surrender. I've used Firefox for about 16 years now. Back then it was far superior; now the major browsers seem to be roughly equal, although some have features that others don't. My favourite feature (which all browsers have now) is the password saver, but if you use it you should set a master password. Firefox is open source (always has been) and not linked to those big tech companies.

On 2/11/2024 at 11:17 PM, sonofdbn said:

Firefox ... doesn't play nicely with unRAID sometimes

I also had some problems and switched to Edge for about 3 months (only for unRAID) but I haven't noticed anything lately so I switched back.

-

I originally tried TrueNAS too. I found it confusing and I had lots of trouble with the permissions/ACL. I felt that it was an advanced product requiring advanced knowledge. I needed something simple but powerful and I think unRAID is it. Don't get me wrong, unRAID is advanced too but it simplifies that complexity. I wish TrueNAS the best because you need competitive products in the marketplace to keep things interesting.

You mentioned 3x4TB HDDs and then a single 8TB SSD. Did one of those 4TB HDDs fail, so you decided to scrap the other two and use a single 8TB? I assume you have a backup strategy in place in case that 8TB bites the dust?

-

On 2/10/2024 at 2:09 PM, Hotsammysliz said:

So essentially I want to have a different backup method outside of duplicati that I can rely on to restore duplicati (along with the other docker apps) appdata config and then once that's been restored proceed with duplicati restoration.

Yup!

-

On 1/15/2024 at 9:10 AM, Hotsammysliz said:

If I'm backing up all my data with duplicati how can I restore that duplicati data for itself before installing duplicati? Hopefully that question makes sense.

Yes, I can see how this part seems confusing. What you need to do is back up the docker config somewhere else. The appdata directory (where the docker config is stored) is on my cache. I then use the Appdata Backup plugin (from Robin Kluth) to back up the config to the array. That config is then synchronized to another server using syncthing. If I lose the cache I have the backup on the array. If I lose the array then I have the docker still on the cache. If you lose both the cache and array (the server is fried, burnt, or stolen) then you have the backup somewhere else. You don't need to use syncthing (or the plugin), you can back up the cache to a flash drive. Did I miss anything?

-

On 1/2/2024 at 1:36 PM, Hotsammysliz said:

How would I access and actually restore my duplicati backups if the machine I am backing up is gone?

Are you running duplicati as a docker app? You should have all your docker apps backed up regularly.

On 1/2/2024 at 1:36 PM, Hotsammysliz said:

I'm curious what the process would look like if I completely lost my server (i.e fire, flood, etc).

The server is gone so what do you do? The following list is a very basic process. Maybe someone else here has a better list.

- Buy new hardware including new hard drives.

- Restore the flash backup to a new USB (re-assign the license key to the new USB).

- Boot to unRAID using the restored flash backup.

- Assign the new hard drives to the array.

- Install the docker apps you used to have but then replace the config folders with your backups.

- Using the restored Duplicati docker app, retrieve your data.

-

On 1/10/2024 at 10:32 AM, neomac3444 said:

Thanks for the reply.

Any thoughts on which is better? AMP or Pterodactyl?

I'm not sure you need these unless you're running a lot of servers like Newtious does. I run a Minecraft server and a couple other game servers for friends and family. I don't need to manage them in any special way.

On 1/9/2024 at 1:35 PM, neomac3444 said:

My first question is not sure what the better methods are for setting up communications to the open world. It used to be you just open a port on your router and direct traffic to that port and done. However, was reading many different sets of advice about setting up a reverse proxy with using a platform like Cloudflare and Nginx. Is that really the recommended method even though I will not be "Hosting" a website on my server?

This is what I do. I run DuckDNS so that my friends can find my server easily, and I use port forwarding to the game's port.

Here's some additional info about security (beyond the link that trurl has):

- If you expose a port on your router pointing to your unRAID server then keep in mind that someone will find out that the port is open and "listening". This will happen regardless of you having a host name or not (since people port scan IP addresses all the time).

- If someone finds an open port, the first assumption is that it's being used by whatever app typically uses that port. So one way to hide the purpose of a port is to use an unconventional port number for that app (or game). The drawback is that some services or games expect a specific port number.

- Trust the app or game to have decent security to block bad requests to the port (this is debatable).

- The docker app is self-contained. If someone gains control of the app then they can only see the data that the docker app has access to. Be aware of this and limit what the docker has access to (this is one reason why using the "privileged" flag is a bad idea). This is why I like dockers. They are like a VM but for a very specific purpose with a small footprint.
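The point about open ports being found applies to everyone: a scanner simply tries to connect and sees what answers. Below is a minimal Python sketch of that check, using only the standard library `socket` module. The function name `is_port_open` is illustrative, not part of any unRAID or router tool.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds, i.e. something is listening."""
    try:
        # create_connection resolves the host name and attempts a TCP handshake
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # refused, timed out, or unreachable: treat all of these as "not open"
        return False
```

For example, running `is_port_open("yourname.duckdns.org", 1234)` from a machine outside your home network (host name and port are hypothetical) shows roughly what a stranger scanning your IP would see.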

-

On 12/12/2023 at 3:54 PM, -Daedalus said:

Have a look at AMP. There's a docker image for it on Community Applications.

That's cool but you need the tower defence and puzzle games first. Too bad there wasn't a Wordle docker.

-

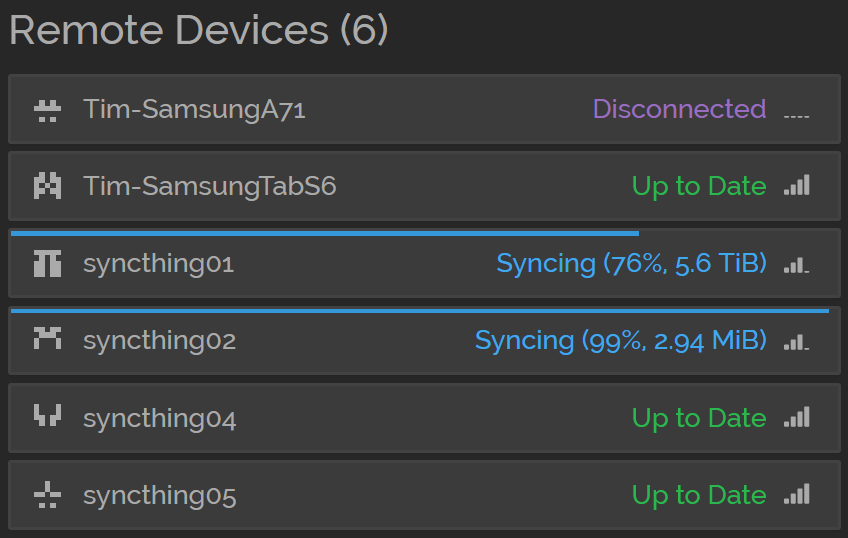

On 12/25/2023 at 12:24 AM, Gizmotoy said:

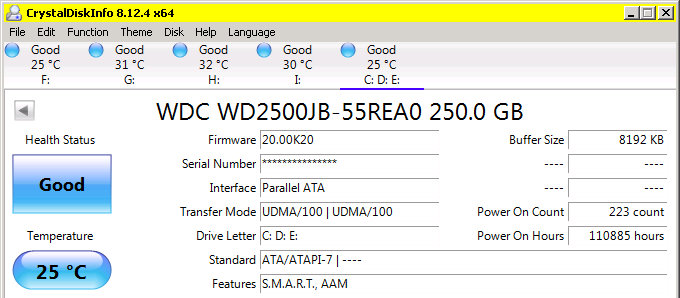

Power on hours: 109250

Oh man. You are incredibly close to my long-serving HDD with 110885 hours. See the post below. Mine is still in use but not as much as it used to be (it still sits in an old Windows Server 2008 machine that I use as a backup machine).

https://hardforum.com/threads/post-your-hard-drive-power-on-hours.1915865/post-1045205453

-

Your signature is out of date now. 😀

I always find it cool to add more drives to my system. I guess your friends just don't appreciate it. Might have to get new friends. 😄

Careful though. When you add more space it's tempting to fill it up and then realize that you need more...

-


Docker is the way to go. If you find a game, then you'll also need the DuckDNS docker. There are YouTube videos that talk about "Dynamic DNS" (DDNS). Basically you register an internet host name like RichB.duckdns.org and the DuckDNS app keeps that host name synchronized with your home IP address. The next step is to set up port forwarding on your home router so that it points to your unRAID server and the port that your game is using. If the game is using port 1234, then your friends would type in RichB.duckdns.org:1234 and they could play your game.
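For the curious, the DuckDNS docker's job boils down to periodically requesting DuckDNS's update URL with your subdomain and token; as I understand DuckDNS's documented format, if no IP is given it uses the address the request came from. The Python sketch below only builds that URL (no network call) so you can see its shape. The endpoint and parameter names are my reading of DuckDNS's spec, the token is a placeholder, and the helper names are hypothetical.

```python
from typing import Optional
from urllib.parse import urlencode

# Assumption: DuckDNS's documented update endpoint
DUCKDNS_UPDATE_URL = "https://www.duckdns.org/update"


def duckdns_update_url(subdomain: str, token: str, ip: Optional[str] = None) -> str:
    """Build the URL an updater would request; omitting ip lets DuckDNS use the caller's address."""
    params = {"domains": subdomain, "token": token}
    if ip is not None:
        params["ip"] = ip
    return f"{DUCKDNS_UPDATE_URL}?{urlencode(params)}"


def game_address(subdomain: str, port: int) -> str:
    """What your friends type in: the DuckDNS host name plus the forwarded game port."""
    return f"{subdomain}.duckdns.org:{port}"


# Hypothetical values; never share a real token.
print(duckdns_update_url("richb", "YOUR-TOKEN"))
print(game_address("richb", 1234))
```

The port forwarding on the router is what makes `game_address` actually reach the game; DuckDNS only keeps the name pointing at your current IP.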

Let me know what games you find. I like tower defence and puzzle games too but I haven't really found any good ones. Maybe I haven't looked hard enough.

-

On 11/19/2023 at 6:46 AM, Michael_P said:

This discussion is about backup servers. Unless you're restoring backups of your backups to your backup server, I'm not sure what case you're trying to make.

If a drive on your backup server dies, then you need to restore the contents of that drive. If it's a single drive, that's easy to do. But if you have multiple drives in an array, the data is spread across them, and one drive fails, will you know what you've lost? In that case you will need to back up all that data again. In my case that's a lot of data, and I'd rather not waste time backing up 30TB of data. Instead, I buy a new drive and let unRAID rebuild the failed 10TB drive from parity in less than a day.
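The "less than a day" figure is easy to sanity-check with back-of-the-envelope arithmetic. The sketch below assumes an average sustained speed of 150 MB/s across the whole job; that number is an assumption (real rebuilds vary with drive model, controller, and array activity), not a measurement from my servers. Sizes are in decimal terabytes, as drive makers quote them.

```python
def transfer_hours(size_tb: float, avg_mb_per_s: float) -> float:
    """Hours to read or write size_tb terabytes at a sustained avg_mb_per_s (decimal units)."""
    total_bytes = size_tb * 1e12
    seconds = total_bytes / (avg_mb_per_s * 1e6)
    return seconds / 3600


# Assumed 150 MB/s average: rebuilding one 10TB drive vs. re-copying 30TB of backups
print(f"rebuild 10TB: {transfer_hours(10, 150):.1f} h")  # about 18.5 h
print(f"re-copy 30TB: {transfer_hours(30, 150):.1f} h")  # about 55.6 h
```

Even at the same speed, re-copying 30TB takes roughly three times as long, and over a gigabit network (about 125 MB/s at best) it would be slower still.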

One last point is that my backup server uses snapshots so losing that server would suck. I could restore the data from the source but I would lose the history.

I guess it just depends on how the backup server is set up. My post above and here is simply meant as another perspective.

-

I'm guessing that most of you have had the good fortune to never need to restore because it's a pain in the royal butt. I've since learned to buy good reliable drives and all my servers have dual parity. If money is a problem then I can understand but keep in mind that time is money.

-

Thanks @JonathanM. I figured that it was something like that.

-

On 9/14/2023 at 11:10 AM, buster84 said:

1) if I go back to XFS is there a plugin that snapshots the files and folder systems that way if I lose my parity or drives get disabled again I can recover the data exactly how it was originally? Or a way to snapshot with xfs?

Use Vorta (Borg) or Duplicati. I like GUI apps better than CLI, but with Vorta (Borg) you get both, because Vorta is the GUI and Borg is the backend CLI that Vorta uses. To me, a GUI makes it clearer what's going on.

MrGrey alludes to the fact that your backup should be somewhere else, preferably in another location and at the very least outside of the server holding all your data. It could be another server or just a bunch of hard drives that you update from time to time.

In my setup I have two kinds of servers: file servers (three of them) and one backup server. The data on the file servers is backed up to the backup server with daily snapshots. Vorta (Borg) and Duplicati use deduplication, which is much easier on the computer (less strain) than ZFS deduplication: ZFS dedupes in real time, on every write, while backup apps only need to dedupe files when they are backed up.
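To illustrate why deduplication at backup time is cheap, here is a toy Python sketch: data is cut into chunks, each chunk is identified by its SHA-256 hash, and only chunks the store hasn't seen before are saved. This is a simplification; Borg actually uses variable-size, content-defined chunking rather than the fixed-size chunks used here, and the function names are hypothetical. The hashing work happens only when `backup` runs, rather than on every write as with ZFS dedup.

```python
import hashlib

# Tiny chunks so the example is easy to follow; real tools use much larger chunks.
CHUNK_SIZE = 4


def backup(data: bytes, store: dict[str, bytes], chunk_size: int = CHUNK_SIZE) -> list[str]:
    """Store only previously unseen chunks; return the list of chunk hashes (the "recipe")."""
    recipe = []
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        if digest not in store:  # duplicate chunks are skipped, not stored again
            store[digest] = chunk
        recipe.append(digest)
    return recipe


def restore(recipe: list[str], store: dict[str, bytes]) -> bytes:
    """Rebuild the original bytes by concatenating chunks in recipe order."""
    return b"".join(store[digest] for digest in recipe)


store: dict[str, bytes] = {}
monday = backup(b"AAAABBBBAAAA", store)   # 3 chunks, only 2 unique
tuesday = backup(b"AAAABBBBCCCC", store)  # only the new "CCCC" chunk is added
print(len(store), restore(tuesday, store) == b"AAAABBBBCCCC")
```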


-

Configuration not found. Was this container created using this plugin? (in General Support)

Here's a trick if you don't have a backup or just as another way to solve this problem.

Go to the Apps tab and install a second instance of the same app.

Go to the console and navigate to "config/plugins/DockerMan/templates-user" (as mentioned by trurl above).

Copy/replace the old, broken template file with the new template from your new instance.

Edit the file that you just replaced using the vi editor and change the name of the container to match the name of the old container.

In the Docker tab the broken app won't have an "Edit" menu item, but once you've followed the steps above and refreshed the Docker tab, the "Edit" button will show up and you will be able to update the app again.

You can now remove the second instance since you don't need it anymore.
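If you'd rather script the copy-and-rename than do it in vi, here's a minimal Python sketch. It assumes the dockerMan user templates are XML files whose root element has a direct `<Name>` child holding the container name (that's what you change by hand in the steps above); check your own template first and keep a copy of the broken file. Both function names are hypothetical, and note that ElementTree drops any XML comments in the file.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def renamed_template(template_xml: str, container_name: str) -> str:
    """Return template_xml with its <Name> element set to container_name."""
    root = ET.fromstring(template_xml)
    name_el = root.find("Name")  # assumption: <Name> is a direct child of the root element
    if name_el is None:
        raise ValueError("template has no <Name> element")
    name_el.text = container_name
    return ET.tostring(root, encoding="unicode")


def repair_template(working: Path, broken: Path, container_name: str) -> None:
    """Overwrite the broken template with the working one, renamed to the old container."""
    fixed = renamed_template(working.read_text(encoding="utf-8"), container_name)
    broken.write_text('<?xml version="1.0"?>\n' + fixed + "\n", encoding="utf-8")
```

For example, `repair_template(Path("my-app-2.xml"), Path("my-app.xml"), "app")` run from inside the templates-user folder; these file and container names are made up.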