Report Comments posted by Pri

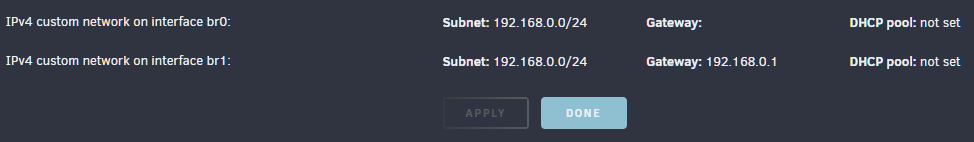

I have them setup in my Docker but only br0 shows not br1 in the dropdown. But both of those work fine in VM's.

This is what I see on my Docker settings page:

This isn't a bug?

-

I don't have logs from my last parity check (it finished on November 26th), but I have the same issue. I'm also using EPYC (Gen 3, Milan), combined with an LSI 9500-8i HBA (SAS3) connected to a SAS3 expander. I have 11 drives attached (a mix of 10TB and 18TB) with dual parity configured.

In the past (6.9.x etc.), a parity check would start at 220MB/s and bottom out at about 140MB/s, which is normal for my disks.

Under the current version (v6.11.5), it starts off fast as normal, but after a few hours I'm down to 40MB/s. If I then pause the parity check and resume it, it jumps back to full speed, i.e. a speed appropriate for that position in the check, somewhere between the 220MB/s and 140MB/s mentioned above.

Nothing is writing to the disks when this occurs (no shares, VMs, or Docker containers).
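For a sense of scale, here is a rough sketch of what those speeds mean for total check time. It assumes the check has to cover a full 18TB (decimal) drive and that the speed stays constant for the whole run, which is a simplification since real checks slow down toward the inner tracks. The function name `hours_to_check` is made up for illustration.

```python
def hours_to_check(size_tb: float, speed_mb_s: float) -> float:
    """Hours needed to read size_tb (decimal TB) at a constant speed_mb_s (decimal MB/s)."""
    size_mb = size_tb * 1_000_000  # 1 TB = 1,000,000 MB in decimal units
    return size_mb / speed_mb_s / 3600  # seconds -> hours


if __name__ == "__main__":
    # Assumed: an 18 TB drive read end to end at each of the speeds from the post.
    for speed in (220, 140, 40):
        print(f"{speed} MB/s -> {hours_to_check(18, speed):.1f} h")
```

Under those assumptions, a check held at 40MB/s would take about 125 hours, compared with roughly 23 to 36 hours at 220MB/s to 140MB/s.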

[6.11.5] Docker "Network Type" selection in the GUI omits custom bridges beyond br0

in Stable Releases

I see, so this is a limitation of Docker and not unRAID. The reason I did it this way (two bridges that go to the same network) is performance. If my VMs are hammering the bridge, Docker containers can lose connectivity, so I moved them to separate bridges and gave each bridge dedicated physical ports on my server.

I'm running all VMs on br1 and Docker on br0, and now they no longer contend with each other. I could create a bond instead, but my VMs could just eat all of that bandwidth too, hence the separation.

This isn't really a problem, since unRAID's VM manager lets me select br0 or br1 for my VMs, so as I said above I just used br1 for VMs. I only thought it was a bug because of the discrepancy between what the VM manager lets me select and what Docker lets me select.

Thank you for the clarification.