njm5785


Everything posted by njm5785

-

Over the weekend I decided to move one of my disks to the XFS filesystem. I have a total of 5 disks (3 data disks, 1 parity, 1 cache). Two of the 3 data disks were in RFS format (disk1, disk2) and 1 was in XFS format (disk3). Disk3 (XFS) has been sitting empty in my array for several months, waiting to be used to convert my RFS disks. So I moved disk1 onto disk3, and it seems to have worked.

The problem I am running into is that my user shares are not showing the files after the move. For example, if I open the Movies share in the unraid GUI, it shows that the files that were on disk2 are still there, but all the files that were on disk1 are missing and there is no mention of disk3. I know the files exist because if I use the command line to view the files directly on disk3, they are there. I am not sure what to do next. Below are the steps I followed.

1. Installed a new empty drive (disk3 (XFS), months ago)
2. Turned off all dockers, turned off the mover and the parity checker/builder thing
3. Opened up a screen session
4. Started the copy using rsync (took ~20 hours total, no errors that I know of)
   rsync -av --progress /mnt/disk1/ /mnt/disk3/ (~15 hours)
   rsync -avc --progress --remove-source-files /mnt/disk1/ /mnt/disk3/ (~5 hours)
5. Checked a handful of files on disk3 (seemed good). Disk1 was left with empty folders (just rsync)
6. Checked the unraid main page GUI. Disk1 showed empty (basically 33 MB of folders). Disk3 showed about the size that disk1 was before the copy (~2.5TB)
7. Turned off the array
8. Changed the filesystem on disk1 to XFS
9. Started the array and disk1 showed as unformatted, so I formatted it. (Disk1 was the only one that showed as unformatted)
10. After the format I looked at the main page GUI. I had two XFS disks now (disk1 - empty, disk3 - ~2.5TB)
11. I looked in the different shares that used to be on disk1. They still exist, but the files that were on disk1 are missing
12. Logged in on the command line, and disk3 still has the files even though they are missing from the shares.

Will this fix itself, or do I need to do something to let the shares know about the move? Right now my array is in the off state. I don't want unraid wiping disk3 for some odd reason, because it looks like it still has all the files. I am running unraid version 6.1.7.
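The checks in steps 5, 6 and 12 can also be scripted. Below is a minimal sketch, assuming the disks are mounted at /mnt/disk1 and /mnt/disk3 as in the rsync commands above. The function names are hypothetical helpers made up for illustration, not Unraid tools.

```shell
#!/bin/bash
# Sketch only: sanity checks after moving one disk's contents to another.
# count_files, leftover_files and top_level_dirs are hypothetical helper
# names, not part of Unraid or rsync.

# Count the regular files under a directory tree.
count_files() {
    find "$1" -type f | wc -l | tr -d ' '
}

# Print any regular files still present under the source tree.
# After an rsync pass with --remove-source-files this should print nothing.
leftover_files() {
    find "$1" -type f
}

# Print the top-level folder names on a disk, one per line, sorted.
top_level_dirs() {
    find "$1" -mindepth 1 -maxdepth 1 -type d -exec basename {} \; | sort
}
```

Possible usage: `count_files /mnt/disk3` to see how many files landed on the target, `leftover_files /mnt/disk1` to confirm the source only holds empty folders, and `top_level_dirs /mnt/disk3` to compare the folder names on the disk against the share names shown in the GUI.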

-

Did this and got zero errors. Guess I am good to go. Thank you all for the help. I am back up and running.

-


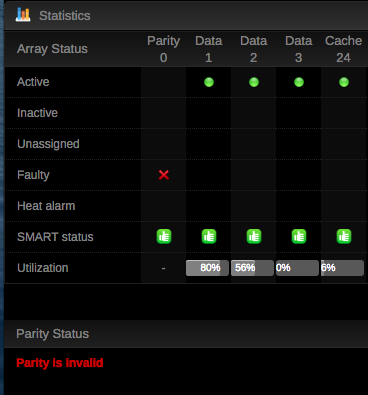

I will check this tonight when I get off work. What does that mean if it does say that? Or if it doesn't. I think it is just the legend which is a bit misleading, but not the actual text which goes with the drive. @bonienl I looked at what you said and I can confirm that it says "Parity device is disabled". Should I follow the steps given by itimpi?

-

I will check this tonight when I get off work. What does that mean if it does say that? Or if it doesn't.

-

Makes sense. I had never seen anything like that before so I was just using the key to help. So do you think itimpi's idea is the best to follow?

-

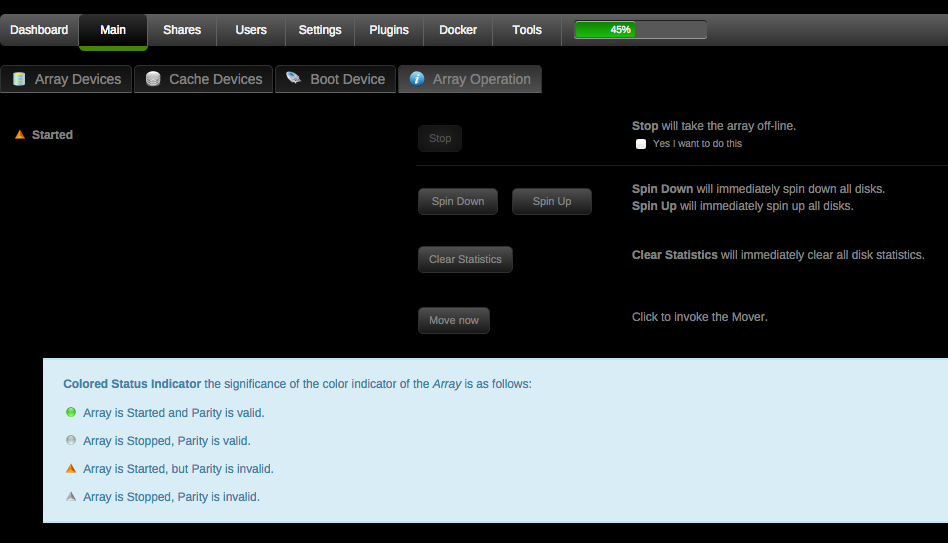

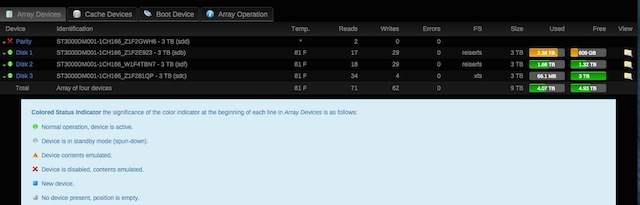

Yeah, I will wait a little bit before I do anything and see what others have to say. Thanks for the ideas though. I almost forgot: I made those changes Friday and didn't notice any issues until yesterday. I do have a parity check that is scheduled every Sunday, though. Not sure whether that matters or not. Here are a few screenshots of my web GUI.

-

I am getting "Device is disabled, contents emulated" on my parity drive. I got this for the first time last week after adding another cache drive. That SSD had errors, so I removed it. In turn, that meant I had to format my other cache drive, but I have a backup of that so it was no big deal (I have not yet restored it). I have since checked the cables. I restarted and ran a SMART test. The short and extended tests passed. I am not sure how to fix the issue or even what next steps I need to take. I am running 6.1.0. The device that is getting the error is ST3000DM001-1CH166_Z1F2GWH6. I attached my diagnostics report. Thanks for any help. tower-diagnostics-20150909-0841.zip

-

Thanks, that helps, I just figured there was an issue. If I delete one of my containers and tell it to delete the image too does it clean up all the images associated with it or does it leave behind all the junk images? So the My-templates doesn't get deleted when you delete the docker.img file? Where is the My-templates info stored?

-

Well that sorta worked, but I still have a bunch of images with no containers. I ran the docker info command and it shows how many I have. Is it normal to have this many images?

root@Tower:~# docker info
Containers: 7
Images: 172
Storage Driver: btrfs
Build Version: Btrfs v4.0.1
Library Version: 101
Execution Driver: native-0.2
Kernel Version: 4.0.4-unRAID
Operating System: Slackware 14.1
CPUs: 4
Total Memory: 7.723 GiB
Name: Tower
ID: 364Z:CZMD:C3MW:BVPY:GMIR:Y4HS:XMAF:JHWJ:K25T:X6CL:3HJ4:IHV6
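To compare the container and image counts without reading the whole report, the `docker info` text can be filtered. This is a rough sketch, assuming the "Key: value" layout shown above. `docker_info_field` is a hypothetical helper name, not a Docker command.

```shell
#!/bin/bash
# Sketch only: pull a single field out of `docker info` text.
# docker_info_field is a hypothetical helper; it reads the report on stdin
# and assumes "Key: value" lines like the output quoted in this post.
docker_info_field() {
    awk -F': ' -v key="$1" '
        { sub(/^[ \t]+/, "") }          # tolerate indented lines
        $1 == key { print $2; exit }    # print the first matching value
    '
}
```

Possible usage: `docker info | docker_info_field Images` and `docker info | docker_info_field Containers`.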

-

Yeah I know it is only the applications but I always forget the settings I use for the containers, but that isn't that hard. I don't quite understand what "it will do already quite a cleanup" means. I understand how to delete the 4 orphans but what about the huge list of images from the command line?

-

Doing that shows me 4 orphan images (screenshot) and it looks like they are the images I am missing. That still leaves a couple of questions though.

* How do I get them so they are not orphans anymore without deleting them?
* That list of images I posted was huge and each one of those images was huge. Can I delete those, and how do I do it if I can?

-

I somewhat got this working. Here are the steps I took.

First I ran this on the command line:

sudo docker version

I got this error on the last line (I still don't know what this error really means, but I no longer get it):

FATA[0000] Get http:///var/run/docker.sock/v1.18/version: dial unix /var/run/docker.sock: no such file or directory. Are you trying to connect to a TLS-enabled daemon without TLS?

Then I ran this:

docker -D -d

I got this error on the last line:

FATA[0000] Shutting down daemon due to errors: Insertion failed because database is full: database or disk is full

The last error gave me the idea that maybe I should increase the size of my docker image. I had it at 10G and I made it 15G. Then I disabled docker in the Settings->Docker section and applied. Then I re-enabled it and applied. This got my docker tab back with some of my containers.

I still have an issue, though. When I go to the docker tab I only have 4 containers (screenshot attached). But when I go to the command line and run the docker images command, I get a huge list of images. Some of them are my missing ones, and for some I have no idea why they are there.
docker images -a

I get this:

REPOSITORY                  TAG     IMAGE ID      CREATED             VIRTUAL SIZE
gfjardim/owncloud           latest  2ce688ba14e6  7 weeks ago         513.9 MB
<none>                      <none>  641f45a9debf  7 weeks ago         513.9 MB
<none>                      <none>  265d0beb4e95  7 weeks ago         513.9 MB
<none>                      <none>  abf4df89fb88  7 weeks ago         289 MB
<none>                      <none>  7e22f9c56f5e  7 weeks ago         288.9 MB
<none>                      <none>  2dee791751b5  7 weeks ago         288.9 MB
<none>                      <none>  ad858df1f413  7 weeks ago         288.9 MB
needo/couchpotato           latest  196d8d33f934  7 weeks ago         338 MB
<none>                      <none>  6844ec3597d4  7 weeks ago         288.9 MB
<none>                      <none>  65685e623e7e  7 weeks ago         288.9 MB
<none>                      <none>  b1b7f1c2986d  7 weeks ago         288.9 MB
<none>                      <none>  e2c2eaa1385d  7 weeks ago         288.9 MB
<none>                      <none>  721c309d7a01  7 weeks ago         288.9 MB
<none>                      <none>  8f2da4b4533c  7 weeks ago         288.9 MB
<none>                      <none>  411368ab3dc4  7 weeks ago         288.9 MB
<none>                      <none>  af865190d427  7 weeks ago         288.9 MB
<none>                      <none>  0ded2369145d  7 weeks ago         288.9 MB
<none>                      <none>  cea12b865f8b  7 weeks ago         288.9 MB
<none>                      <none>  a5475b7bc3b7  7 weeks ago         288.9 MB
<none>                      <none>  2598883ac20a  7 weeks ago         288.9 MB
<none>                      <none>  bc0df8505206  7 weeks ago         288.9 MB
needo/plex                  latest  8906416ebf13  8 weeks ago         603.9 MB
<none>                      <none>  e05c4967b07b  8 weeks ago         603.9 MB
<none>                      <none>  4d44b1a1dd5f  8 weeks ago         603.9 MB
<none>                      <none>  e8780cda3d60  8 weeks ago         603.9 MB
<none>                      <none>  9a4b68e056f1  8 weeks ago         603.9 MB
<none>                      <none>  c67ec4dd661d  8 weeks ago         603.9 MB
<none>                      <none>  a31e68d0daa2  8 weeks ago         603.9 MB
<none>                      <none>  635e31957bb8  8 weeks ago         603.9 MB
<none>                      <none>  4760b39cedac  8 weeks ago         603.9 MB
<none>                      <none>  fbbc715c40a8  8 weeks ago         603.9 MB
<none>                      <none>  34829843a5cd  8 weeks ago         603.9 MB
<none>                      <none>  183ce8f863bd  8 weeks ago         603.9 MB
<none>                      <none>  c8b463b2746e  8 weeks ago         603.6 MB
<none>                      <none>  04ff2d4ecf0a  8 weeks ago         313.5 MB
<none>                      <none>  2db14fff5bfe  8 weeks ago         313.5 MB
<none>                      <none>  0ad36e2b047a  8 weeks ago         310.2 MB
<none>                      <none>  40724c4075a2  8 weeks ago         288.9 MB
<none>                      <none>  f67106a1e349  8 weeks ago         288.9 MB
<none>                      <none>  10ed5bddf71e  8 weeks ago         288.9 MB
<none>                      <none>  b142ca11d3ed  8 weeks ago         288.9 MB
<none>                      <none>  fa3cb62b0a51  8 weeks ago         288.9 MB
captinsano/ddclient         latest  1e7faf00a4fb  10 weeks ago        371.9 MB
<none>                      <none>  3f4f4efea86d  10 weeks ago        371.9 MB
<none>                      <none>  ba3bae26a9a7  10 weeks ago        371.9 MB
<none>                      <none>  a0825cae9c38  10 weeks ago        371.9 MB
<none>                      <none>  be15f8784644  10 weeks ago        371.9 MB
<none>                      <none>  4b6fa4bbc28e  10 weeks ago        371.9 MB
<none>                      <none>  a1c44adfc8bb  10 weeks ago        371.9 MB
<none>                      <none>  288708abb6a3  10 weeks ago        371.9 MB
<none>                      <none>  b868c5599efe  10 weeks ago        371.9 MB
<none>                      <none>  24f99a93159f  10 weeks ago        371.9 MB
<none>                      <none>  e8e5b9953ae8  10 weeks ago        371.3 MB
<none>                      <none>  e398507f096d  10 weeks ago        279.7 MB
<none>                      <none>  f6dbdbe9413c  10 weeks ago        279.7 MB
<none>                      <none>  b0b18af94ec5  10 weeks ago        279.6 MB
<none>                      <none>  90f4aed24972  10 weeks ago        279.6 MB
<none>                      <none>  295f38a63786  10 weeks ago        279.6 MB
<none>                      <none>  46d2aa519d62  10 weeks ago        279.6 MB
<none>                      <none>  e43cbc29d2ad  10 weeks ago        279.6 MB
<none>                      <none>  22066176f7d5  10 weeks ago        279.6 MB
<none>                      <none>  559ec1f5c454  10 weeks ago        279.6 MB
captinsano/tonido           latest  f735ea48629e  12 weeks ago        380.7 MB
<none>                      <none>  b30da7b6ec5b  12 weeks ago        380.7 MB
<none>                      <none>  b90fdf18fe61  12 weeks ago        380.7 MB
<none>                      <none>  cbf8034174a8  12 weeks ago        380.7 MB
<none>                      <none>  e45ecafea9c7  12 weeks ago        380.7 MB
<none>                      <none>  82fc70f9cd65  12 weeks ago        380.7 MB
<none>                      <none>  426fc20cd498  12 weeks ago        380.7 MB
<none>                      <none>  d6330720cac1  12 weeks ago        380.7 MB
<none>                      <none>  67dc745cb248  12 weeks ago        380.7 MB
<none>                      <none>  929105d21390  12 weeks ago        380.7 MB
<none>                      <none>  38ca06afe477  12 weeks ago        279.7 MB
<none>                      <none>  d310d7969f73  12 weeks ago        279.7 MB
<none>                      <none>  b51485f1d192  12 weeks ago        279.7 MB
<none>                      <none>  1daaed38e2e0  12 weeks ago        279.6 MB
<none>                      <none>  09312975adb1  12 weeks ago        279.6 MB
<none>                      <none>  a659e96a22d5  12 weeks ago        279.6 MB
<none>                      <none>  f594ebc36632  12 weeks ago        279.6 MB
<none>                      <none>  1c527c7826a5  12 weeks ago        279.6 MB
<none>                      <none>  933f6e28ea21  12 weeks ago        279.6 MB
<none>                      <none>  09c5a8e871a7  12 weeks ago        279.6 MB
needo/sabnzbd               latest  29e60047211d  4 months ago        447.4 MB
<none>                      <none>  09fc099a7b28  4 months ago        447.4 MB
<none>                      <none>  8995cefc285b  4 months ago        447.4 MB
<none>                      <none>  ab069957577e  4 months ago        447.4 MB
<none>                      <none>  a03f4be615c6  4 months ago        447.4 MB
<none>                      <none>  cc33cd17a400  4 months ago        447.4 MB
<none>                      <none>  18d9594ac4a6  4 months ago        447.4 MB
<none>                      <none>  2b61957ab1c7  4 months ago        446.8 MB
<none>                      <none>  472490b62272  4 months ago        446.6 MB
<none>                      <none>  f2e6c6d43b75  4 months ago        445.5 MB
<none>                      <none>  c040abbde224  4 months ago        309.3 MB
<none>                      <none>  538ac87a7548  4 months ago        280.5 MB
<none>                      <none>  9ebfd9f7714f  4 months ago        280.5 MB
<none>                      <none>  9d013f4e4cbf  4 months ago        280.5 MB
<none>                      <none>  0ad809410ba7  4 months ago        280.5 MB
<none>                      <none>  3c198a7af20e  4 months ago        279.7 MB
<none>                      <none>  f06590329411  4 months ago        279.7 MB
<none>                      <none>  595ce1a858bf  4 months ago        279.6 MB
<none>                      <none>  5e32fbc5478b  4 months ago        279.6 MB
<none>                      <none>  5537eae430ad  4 months ago        279.6 MB
<none>                      <none>  f74d3918f34c  4 months ago        279.6 MB
<none>                      <none>  5a14c1498ff4  5 months ago        279.6 MB
<none>                      <none>  2eccda511755  5 months ago        279.6 MB
<none>                      <none>  2ce4ac388730  5 months ago        188.3 MB
<none>                      <none>  ec5f59360a64  5 months ago        188.3 MB
<none>                      <none>  8254ff58b098  5 months ago        188.3 MB
<none>                      <none>  b39b81afc8ca  5 months ago        188.3 MB
<none>                      <none>  615c102e2290  5 months ago        188.3 MB
<none>                      <none>  837339b91538  5 months ago        188.3 MB
<none>                      <none>  53f858aaaf03  5 months ago        188.1 MB
smdion/docker-upstatsboard  latest  c3c7aed20973  6 months ago        540.1 MB
<none>                      <none>  687a9fa2ce65  6 months ago        540.1 MB
<none>                      <none>  686878649dfc  6 months ago        540.1 MB
<none>                      <none>  5418d882f12e  6 months ago        540.1 MB
<none>                      <none>  c16a5e90212c  6 months ago        540.1 MB
<none>                      <none>  f4d867e4f947  6 months ago        540.1 MB
<none>                      <none>  ddd3d27dfcdd  6 months ago        540.1 MB
<none>                      <none>  1c69777f6ce9  6 months ago        540.1 MB
<none>                      <none>  21266eaa9eeb  6 months ago        540.1 MB
<none>                      <none>  0166e97a69e0  6 months ago        540.1 MB
<none>                      <none>  fcff37e91ef6  6 months ago        540.1 MB
<none>                      <none>  6116aed6f4c0  6 months ago        540.1 MB
<none>                      <none>  b891f675f3b8  6 months ago        540.1 MB
<none>                      <none>  56703520a894  6 months ago        540.1 MB
<none>                      <none>  b4cf48407e75  6 months ago        540.1 MB
<none>                      <none>  41b5028bea8c  6 months ago        540.1 MB
<none>                      <none>  82bc976ed326  6 months ago        540.1 MB
<none>                      <none>  d66db4f6017a  6 months ago        495.5 MB
<none>                      <none>  58ae0d4d5ec5  6 months ago        289 MB
<none>                      <none>  39f00020c970  6 months ago        289 MB
<none>                      <none>  e6b6f98619ac  6 months ago        288.9 MB
<none>                      <none>  865404e6d2e6  6 months ago        288.9 MB
<none>                      <none>  0b828c10ab60  6 months ago        288.9 MB
<none>                      <none>  8eb7d3b88715  6 months ago        288.9 MB
<none>                      <none>  c97a2e9a8962  6 months ago        288.9 MB
<none>                      <none>  919db1524250  6 months ago        288.9 MB
<none>                      <none>  9028400d0323  6 months ago        288.9 MB
<none>                      <none>  cf39b476aeec  9 months ago        288.9 MB
<none>                      <none>  64463062ff22  9 months ago        288.9 MB
<none>                      <none>  d5199f68b2fe  9 months ago        194.8 MB
<none>                      <none>  c27763e1f3e5  9 months ago        194.8 MB
<none>                      <none>  bad562ead0dc  9 months ago        194.8 MB
<none>                      <none>  9d9561782335  9 months ago        194.8 MB
<none>                      <none>  6b4e8a7373fe  9 months ago        194.8 MB
<none>                      <none>  c900195dcbf3  9 months ago        194.8 MB
<none>                      <none>  8b1c48305638  9 months ago        192.7 MB
<none>                      <none>  67b66f26d423  9 months ago        192.7 MB
<none>                      <none>  25c4824a5268  9 months ago        192.7 MB
<none>                      <none>  b18d0a2076a1  9 months ago        192.5 MB
needo/mariadb               latest  566c91aa7b1e  11 months ago       590.6 MB
<none>                      <none>  fede856341ad  11 months ago       590.6 MB
<none>                      <none>  864b9f0054e7  11 months ago       590.6 MB
<none>                      <none>  23c87de41786  11 months ago       590.6 MB
<none>                      <none>  5d7fe409bfe4  11 months ago       590.6 MB
<none>                      <none>  da7bfdf0e1b6  11 months ago       590.6 MB
<none>                      <none>  6470f811e73f  11 months ago       590.6 MB
<none>                      <none>  594f8b77e732  11 months ago       590.6 MB
<none>                      <none>  9243c9adab8b  11 months ago       590.6 MB
<none>                      <none>  ee0b1941d320  11 months ago       590.6 MB
<none>                      <none>  4fe585a340e7  11 months ago       590.5 MB
<none>                      <none>  31bfb552afaf  11 months ago       468.3 MB
<none>                      <none>  56b9543fe345  11 months ago       391 MB
<none>                      <none>  776eac27ad9d  11 months ago       391 MB
<none>                      <none>  4132f6005419  11 months ago       390.9 MB
<none>                      <none>  7418ba5f7369  11 months ago       390.9 MB
<none>                      <none>  30867c4ff0fd  11 months ago       390.9 MB
<none>                      <none>  dabfc8a44cb5  12 months ago       390.9 MB
<none>                      <none>  37fd06241067  12 months ago       390.9 MB
<none>                      <none>  68bde466ffab  12 months ago       266 MB
<none>                      <none>  5cbfee875b7b  13 months ago       266 MB
<none>                      <none>  82c9a6741336  13 months ago       266 MB
<none>                      <none>  afc7fc2f17c1  13 months ago       266 MB
<none>                      <none>  99ec81b80c55  14 months ago       266 MB
<none>                      <none>  d4010efcfd86  14 months ago       192.6 MB
<none>                      <none>  4d26dd3ebc1c  14 months ago       192.6 MB
<none>                      <none>  5e66087f3ffe  14 months ago       192.5 MB
<none>                      <none>  511136ea3c5a  2.045643 years ago  0 B

Does anyone have ideas on how I can get my missing ones (the ones that have a repository name) back in the web GUI, and how I can delete all the others? Plus, I am not sure how I ended up with all those junk ones. I always use the web GUI to create and delete docker images. Am I missing something when I delete them?
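A list this long is easier to judge as a summary. The following is a small sketch that counts tagged rows versus `<none>` rows, assuming the column layout of the `docker images -a` output in this post. `summarize_images` is a hypothetical helper name.

```shell
#!/bin/bash
# Sketch only: summarize `docker images -a` text read from stdin.
# summarize_images is a hypothetical helper; it skips the header row and
# treats any row whose REPOSITORY column is "<none>" as untagged.
summarize_images() {
    awk '
        NR > 1 && NF { if ($1 == "<none>") untagged++; else tagged++ }
        END { printf "tagged=%d untagged=%d\n", tagged, untagged }
    '
}
```

Possible usage: `docker images -a | summarize_images`. Note that the `-a` flag also lists intermediate layers, so many `<none>` rows may simply be parent layers of the tagged images rather than junk. Running `docker images -f dangling=true` should narrow the list to images that no tag refers to anymore, which are the usual candidates for cleanup.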

-

I only have three items in the installed plugins page. They are all up to date.

sickbeard.64bit
Dynamix webGui
unRAID Server OS

Why is this info needed?

-

To uninstall it, do I just disable docker and re-enable it, or is there some other process to uninstall it? Also, does that mean I will have to set up all my containers again?

-

I updated unraid to version 6.0.0 from version 6.0-beta15, then it told me to reboot my system. So I spun down the array, shut it off and rebooted. My docker containers are now missing and my docker tab is gone from my menu. I have docker at the same path you mention, /mnt/cache/docker.img, so it should not be getting messed up by the mover. What else might be causing this?

-

Yes, it is an SSD. Should I get a new cable? I will try to run the manufacturer's diagnostics tonight.

-

Thanks for the info. I had no idea how to get the complete syslog. I thought the log link to the right on all the pages was it. Here is the complete one based on your instructions. syslog.zip

-

Here is my syslog

/usr/bin/tail -n 42 -f /var/log/syslog 2>&1
Apr 20 12:43:22 Tower emhttp: shcmd (47): cp /etc/avahi/services/afp.service- /etc/avahi/services/afp.service
Apr 20 12:43:22 Tower avahi-daemon[3444]: Files changed, reloading.
Apr 20 12:43:22 Tower avahi-daemon[3444]: Service group file /services/afp.service changed, reloading.
Apr 20 12:43:23 Tower avahi-daemon[3444]: Service "Tower" (/services/smb.service) successfully established.
Apr 20 12:43:23 Tower avahi-daemon[3444]: Service "Tower-AFP" (/services/afp.service) successfully established.
Apr 20 13:13:04 Tower kernel: mdcmd (35): spindown 0
Apr 20 13:18:22 Tower kernel: mdcmd (36): spindown 1
Apr 20 13:18:22 Tower kernel: mdcmd (37): spindown 2
Apr 20 22:17:43 Tower kernel: ata2: exception Emask 0x50 SAct 0x0 SErr 0x4090800 action 0xe frozen
Apr 20 22:17:43 Tower kernel: ata2: irq_stat 0x00400040, connection status changed
Apr 20 22:17:43 Tower kernel: ata2: SError: { HostInt PHYRdyChg 10B8B DevExch }
Apr 20 22:17:43 Tower kernel: ata2: hard resetting link
Apr 20 22:17:49 Tower kernel: ata2: link is slow to respond, please be patient (ready=0)
Apr 20 22:17:53 Tower kernel: ata2: SATA link up 6.0 Gbps (SStatus 133 SControl 300)
Apr 20 22:17:53 Tower kernel: ata2.00: configured for UDMA/133
Apr 20 22:17:53 Tower kernel: ata2: EH complete
Apr 21 00:20:01 Tower sSMTP[27690]: Creating SSL connection to host
Apr 21 00:20:02 Tower sSMTP[27690]: SSL connection using ECDHE-RSA-AES128-GCM-SHA256
Apr 21 00:20:04 Tower sSMTP[27690]: Sent mail for unraid@*****.me (221 2.0.0 closing connection f1sm863160pdp.24 - gsmtp) uid=0 username=root outbytes=628
Apr 21 03:40:01 Tower logger: mover started
Apr 21 03:40:01 Tower logger: skipping "applications"
Apr 21 03:40:01 Tower logger: skipping "downloads"
Apr 21 03:40:01 Tower logger: mover finished
Apr 21 04:20:45 Tower kernel: mdcmd (38): spindown 2
Apr 21 04:20:51 Tower kernel: mdcmd (39): spindown 1
Apr 21 07:59:01 Tower sSMTP[23288]: Creating SSL connection to host
Apr 21 07:59:01 Tower sSMTP[23288]: SSL connection using ECDHE-RSA-AES128-GCM-SHA256
Apr 21 07:59:04 Tower sSMTP[23288]: Sent mail for unraid@*****.me (221 2.0.0 closing connection m8sm2201318pdn.5 - gsmtp) uid=0 username=root outbytes=731
Apr 21 07:59:04 Tower sSMTP[23308]: Creating SSL connection to host
Apr 21 07:59:05 Tower sSMTP[23308]: SSL connection using ECDHE-RSA-AES128-GCM-SHA256
Apr 21 07:59:07 Tower sSMTP[23308]: Sent mail for unraid@*****.me (221 2.0.0 closing connection vu7sm2156902pbc.39 - gsmtp) uid=0 username=root outbytes=701
Apr 21 13:15:49 Tower sshd[10417]: Accepted password for root from 10.10.0.6 port 56606 ssh2
Apr 21 17:48:36 Tower emhttp: read_line: client closed the connection
Apr 22 00:20:02 Tower sSMTP[12388]: Creating SSL connection to host
Apr 22 00:20:02 Tower sSMTP[12388]: SSL connection using ECDHE-RSA-AES128-GCM-SHA256
Apr 22 00:20:06 Tower sSMTP[12388]: Sent mail for unraid@*****.me (221 2.0.0 closing connection x1sm3914625pdp.1 - gsmtp) uid=0 username=root outbytes=910
Apr 22 03:40:01 Tower logger: mover started
Apr 22 03:40:01 Tower logger: skipping "applications"
Apr 22 03:40:01 Tower logger: skipping "downloads"
Apr 22 03:40:01 Tower logger: mover finished
Apr 22 07:34:55 Tower emhttp: read_line: client closed the connection
Apr 22 07:59:58 Tower sshd[17175]: Accepted password for root from 10.10.0.6 port 57446 ssh2
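The interesting entries in this log are the kernel's ata2 messages around 22:17:43. Here is a minimal sketch for pulling those out of a longer syslog, assuming the kernel message wording seen above. `ata_events` is a hypothetical helper name and the pattern list is only a guess at what is worth flagging.

```shell
#!/bin/bash
# Sketch only: keep just the SATA link error/reset lines from syslog text
# read on stdin. ata_events is a hypothetical helper; the alternatives in
# the pattern are taken from the kernel messages quoted in this post.
ata_events() {
    grep -E 'kernel: ata[0-9]+(\.[0-9]+)?: (exception|SError|hard resetting link|link is slow|SATA link up)'
}
```

Possible usage: `ata_events < /var/log/syslog`, or append `| wc -l` to see how often the link has been reset.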

-

I thought maybe I should also attach a SMART log from the drive. Here it is.

Warning: ATA error count 0 inconsistent with error log pointer 1

ATA Error Count: 0
CR = Command Register [HEX]
FR = Features Register [HEX]
SC = Sector Count Register [HEX]
SN = Sector Number Register [HEX]
CL = Cylinder Low Register [HEX]
CH = Cylinder High Register [HEX]
DH = Device/Head Register [HEX]
DC = Device Command Register [HEX]
ER = Error register [HEX]
ST = Status register [HEX]
Powered_Up_Time is measured from power on, and printed as
DDd+hh:mm:SS.sss where DD=days, hh=hours, mm=minutes,
SS=sec, and sss=millisec. It "wraps" after 49.710 days.

Error 0 occurred at disk power-on lifetime: 13273 hours (553 days + 1 hours)
When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:
ER ST SC SN CL CH DH
-- -- -- -- -- -- --
00 50 08 78 b9 8f 40 at LBA = 0x008fb978 = 9419128

Commands leading to the command that caused the error were:
CR FR SC SN CL CH DH DC  Powered_Up_Time   Command/Feature_Name
-- -- -- -- -- -- -- --  ----------------  --------------------
60 00 08 78 d8 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 00 90 72 05 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 78 78 b9 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 18 e0 b8 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 28 78 d2 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED

Error -1 occurred at disk power-on lifetime: 13273 hours (553 days + 1 hours)
When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:
ER ST SC SN CL CH DH
-- -- -- -- -- -- --
00 50 20 98 b7 8f 40 at LBA = 0x008fb798 = 9418648

Commands leading to the command that caused the error were:
CR FR SC SN CL CH DH DC  Powered_Up_Time   Command/Feature_Name
-- -- -- -- -- -- -- --  ----------------  --------------------
60 00 20 80 b7 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 08 78 b7 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 08 18 70 05 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 40 a0 d7 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED
60 00 20 80 d7 8f 40 00  6d+06:21:19.744   READ FPDMA QUEUED

Error -2 occurred at disk power-on lifetime: 13204 hours (550 days + 4 hours)
When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:
ER ST SC SN CL CH DH
-- -- -- -- -- -- --
00 50 08 78 b9 8f 40 at LBA = 0x008fb978 = 9419128

Commands leading to the command that caused the error were:
CR FR SC SN CL CH DH DC  Powered_Up_Time   Command/Feature_Name
-- -- -- -- -- -- -- --  ----------------  --------------------
60 03 08 a8 70 05 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 00 78 78 b9 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 00 18 e0 b8 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 00 08 a0 70 05 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 10 28 78 d2 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED

Error -3 occurred at disk power-on lifetime: 13204 hours (550 days + 4 hours)
When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:
ER ST SC SN CL CH DH
-- -- -- -- -- -- --
00 50 20 98 b7 8f 40 at LBA = 0x008fb798 = 9418648

Commands leading to the command that caused the error were:
CR FR SC SN CL CH DH DC  Powered_Up_Time   Command/Feature_Name
-- -- -- -- -- -- -- --  ----------------  --------------------
60 00 20 80 b7 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 00 08 78 b7 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 03 08 08 8f 05 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 00 40 a0 d7 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED
60 00 20 80 d7 8f 40 00  3d+09:25:19.744   READ FPDMA QUEUED

Error -4 occurred at disk power-on lifetime: 13204 hours (550 days + 4 hours)
When the command that caused the error occurred, the device was active or idle.

After command completion occurred, registers were:
ER ST SC SN CL CH DH
-- -- -- -- -- -- --
00 50 08 78 b9 8f 40 at LBA = 0x008fb978 = 9419128

Commands leading to the command that caused the error were:
CR FR SC SN CL CH DH DC  Powered_Up_Time   Command/Feature_Name
-- -- -- -- -- -- -- --  ----------------  --------------------
60 00 08 78 d8 8f 40 00  3d+09:01:19.744   READ FPDMA QUEUED
60 00 20 c8 70 05 40 00  3d+09:01:19.744   READ FPDMA QUEUED
60 00 78 78 b9 8f 40 00  3d+09:01:19.744   READ FPDMA QUEUED
60 00 18 e0 b8 8f 40 00  3d+09:01:19.744   READ FPDMA QUEUED
60 00 18 b0 70 05 40 00  3d+09:01:19.744   READ FPDMA QUEUED
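The log prints each failing address in both hex and decimal, and each power-on time in both hours and days. Here is a quick sketch for reproducing those conversions when comparing entries. Both function names are hypothetical helpers, not part of smartctl.

```shell
#!/bin/bash
# Sketch only: reproduce the conversions shown in the SMART error log.
# lba_to_dec and hours_to_days are hypothetical helper names.

# Convert a hex LBA such as 0x008fb978 to decimal.
lba_to_dec() {
    printf '%d\n' "$1"
}

# Convert power-on hours to the "D days + H hours" form used in the log.
hours_to_days() {
    echo "$(( $1 / 24 )) days + $(( $1 % 24 )) hours"
}
```

Possible usage: `lba_to_dec 0x008fb978` and `hours_to_days 13273`. The five errors in this log repeat the same two LBAs, and the two timestamps are 69 power-on hours apart (13273 - 13204).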

-

I had a drive on my unraid server fail last week. I ordered a new drive and put it in. Unraid rebuilt the new drive and all my files seemed fine. Then I tried to start my docker image back up and it doesn't seem to start. I also got notifications about a 187 error on my cache drive. I started digging around the menus and I found a screen that seems to highlight the issue (I attached a screenshot). I have no idea what it means and wondered if anyone could help out. Do I need to replace the drive? Is there something I can do to get my docker back up and running? One more thing that might relate is that when I try and turn off the array I have to ssh in and force unmount the cache drive because it says it is busy. I know this is sorta random information but I am pretty new to problems with unraid. I set my server up almost 2 years ago and haven't had any issues until last week when one of my drives failed. I am running unraid version 6.0-beta14b. Thanks for any help someone can offer.

-

Not sure if this has been mentioned or not in the 39 pages, but BitTorrent Sync would be awesome to have. http://www.bittorrent.com/sync/how-it-works http://www.bittorrent.com/sync/download

-

@PhAzE I love your plugins, they work great. I started with Plex, then added sickbeard and sabnzbd. Still working on setting up others. I would like to put in a request for CrashPlan. I have been using unraid for 2 years but have never known a good way to do backups to it, and CrashPlan seems like a perfect option. I don't know the challenges involved, but thank you for the hard work and great job.