Kallb123

Posts posted by Kallb123

-

I might have managed it... though I can't see any data yet, maybe it'll show up in an hour (my timezone offset).

I firstly ran:

sudo docker run --name cacti -d -p 9123:80 -p 161:161 --restart always -e TZ=Europe/London \
    -v /home/x/cacti/cacti:/data/cacti -v /home/x/cacti/mysql:/data/mysql quantumobject/docker-cacti

Then access the container terminal with:

sudo docker exec -it cacti bash

I gave it ~10 minutes to start and gather some data. I then transferred all the files:

rsync -a /var/lib/mysql/* /data/mysql/
rsync -a /opt/cacti/* /data/cacti/

I then removed the container and started it with the new volumes:

sudo docker run --name cacti -d -p 9123:80 -p 161:161 --restart always -e TZ=Europe/London \
    -v /home/x/cacti/cacti:/opt/cacti -v /home/x/cacti/mysql:/var/lib/mysql quantumobject/docker-cacti

It seems to have booted and there are no errors. But I'll have to wait for data to show up. You could do the same with more volumes for maximum customisability I suppose. I haven't tested multiple restarts; I'll wait for data first.

EDIT: Folder ownership seems to have been messed up, but the files seem ok. Too early to tell whether the permissions are going to screw it up.
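For anyone repeating this, here is a rough sketch of the same seed-then-remount steps as a script. It only reuses the image name, ports and paths from the commands above; `DRY_RUN`, `HOST_DIR`, `run`, `start_cacti` and `seed_cacti_volumes` are names made up for this sketch, and `sudo` is left out. By default it only prints the commands, since copying a live MySQL directory may not give a consistent copy and I haven't verified this end to end.

```shell
#!/usr/bin/env bash
# Sketch only: seed host folders from a fresh container, then remount them.
IMAGE="quantumobject/docker-cacti"
HOST_DIR="${HOST_DIR:-/home/x/cacti}"   # host path used in the post
DRY_RUN="${DRY_RUN:-1}"                 # hypothetical switch: 1 = print only

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "$DRY_RUN" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Start the container with the two host folders mounted at the given
# container paths ($1 for the cacti folder, $2 for the mysql folder).
start_cacti() {
  run docker run --name cacti -d -p 9123:80 -p 161:161 --restart always \
    -e TZ=Europe/London \
    -v "$HOST_DIR/cacti:$1" -v "$HOST_DIR/mysql:$2" "$IMAGE"
}

seed_cacti_volumes() {
  # 1. Mount the host folders at scratch paths so the image's data stays visible.
  start_cacti /data/cacti /data/mysql
  # 2. Give Cacti time to initialise (the post waited about 10 minutes).
  run sleep 600
  # 3. Copy the initialised data out to the host folders.
  #    (Trailing slashes replace the /* globs, which need a shell to expand.)
  run docker exec cacti rsync -a /var/lib/mysql/ /data/mysql/
  run docker exec cacti rsync -a /opt/cacti/ /data/cacti/
  # 4. Recreate the container with the seeded folders at the real paths.
  run docker rm -f cacti
  start_cacti /opt/cacti /var/lib/mysql
}
```

Calling `seed_cacti_volumes` prints the six commands for review; setting `DRY_RUN=0` first would actually run them.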

-

1 hour ago, koyaanisqatsi said:

If you run it as documented, it's completely volatile, and you lose everything if you delete the container. unRAID always deletes containers when they are stopped. I'm still working (slowly) on addressing that in my installation. Last night I discovered the backup and restore commands are robust, though not enough to establish a persistent storage set up. But they do allow for easily not losing your data history. I've set up a cron task inside the container to run a backup every hour. And the backups are kept on persistent storage. Then when I have to restart the container or reboot, I just run the restore command and the new container comes back to life. Next step for me is to pick apart the backups and see what is in them, which will tell me what I need to capture and mount as external volumes, for a truly persistent and self-healing configuration.

Here's how I'm starting (actually unRAID is starting) the container right now, which just uses the backup/restore commands:

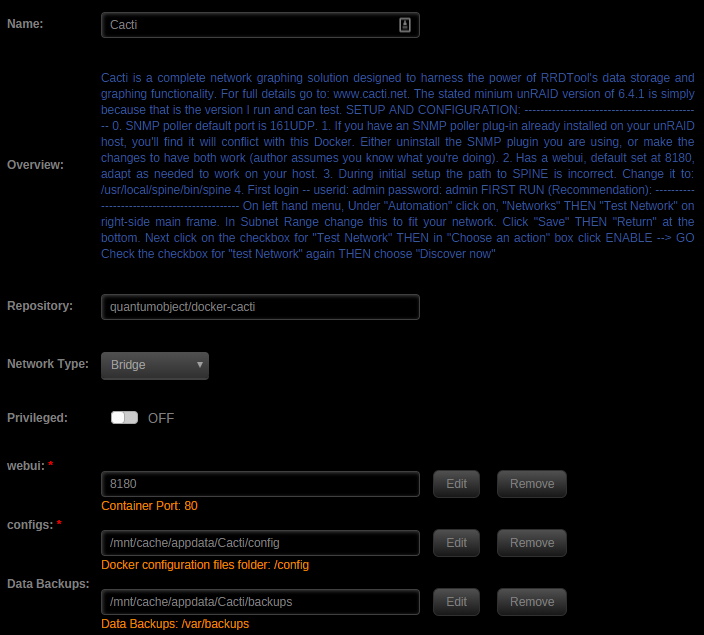

docker run -d \ --name='Cacti' \ --net='bridge' \ -e TZ="America/Los_Angeles" \ -e HOST_OS="unRAID" \ -p '8180:80/tcp' \ -v '/mnt/cache/appdata/Cacti/config':'/config':'rw' \ -v '/mnt/cache/appdata/Cacti/backups':'/var/backups':'rw' \ 'quantumobject/docker-cacti'What this looks like in unRAID:Then in an unRAID terminal, run:docker exec -it Cacti /sbin/restoreLog into the container:docker exec -it Cacti /bin/bashEdit the crontab:crontab -eAnd paste in the schedule:4 * * * * /sbin/backupThis runs a backup at :04 minutes after the hour, every hour.I'll post the final setup once I get fully persistent storage working.EDIT: Regarding the time zone edit to /opt/cacti/graph_image.php; I haven't addressed that yet, and it's apparently not always necessary. You'll need to edit the file every time you start a new container, if you're seeing the issue. I needed it on one container, but not another, even though both were running on the same host.That's odd about the timezone. I actually found that my host was on UTC rather than London time, so that might help my situation. Haven't tested yet.

I'm struggling with persistence too. I found that /var/lib/mysql and /opt/cacti are where the configs are stored and if they can be passed as a volume then that would work. However, when I pass them through as volumes the container stops working. The following command, in my eyes, should start the container with persisted volumes:

docker run --name cacti -d -p 9123:80 -p 161:161 --restart always -e TZ=Europe/London \
    -v /home/x/cacti/cacti:/opt/cacti -v /home/x/cacti/mysql:/var/lib/mysql quantumobject/docker-cacti

I must be missing something about the writing of the volumes as it just stops working with this change. I found https://hub.docker.com/r/idle/cacti-1-minute/ which should have persistence, but this also fails to start up. Note that the cacti config here is said to be /var/lib/cacti.

Let us know if you figure out persistence. I'll update you too if anything works for me.
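On the hourly backup from the quoted post: a small sketch of adding that crontab line without opening an editor, in case it has to be redone each time a new container is created. The schedule and the /sbin/backup path come from the quote; the function name `add_backup_job` is made up here, and it assumes the container's `crontab` accepts `-` to read from stdin (common, but not checked on this image).

```shell
#!/usr/bin/env bash
# Sketch only: read a crontab on stdin, write it back out with the
# hourly backup entry appended exactly once.
add_backup_job() {
  local entry='4 * * * * /sbin/backup'
  local current
  current="$(cat)"
  if printf '%s\n' "$current" | grep -qxF "$entry"; then
    # Entry already present: pass the crontab through unchanged.
    printf '%s\n' "$current"
  else
    # Keep any existing lines, then add the backup entry.
    if [ -n "$current" ]; then
      printf '%s\n' "$current"
    fi
    printf '%s\n' "$entry"
  fi
}
```

Inside the container it could be used as `crontab -l 2>/dev/null | add_backup_job | crontab -`. Running it twice leaves a single entry.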

-

On 8/4/2018 at 8:04 AM, koyaanisqatsi said:

Huh, OK. Data started showing up in my graphs at 9:40 this morning, which is 7 hours after I posted above, or the same offset as my time zone. So I seem to have a TZ issue. I did set -e TZ=America/Los_Angeles (In fact I just saw that it's set twice, and removed one.). But the graphs are slid off the data itself. So it seems like the TZ isn't getting to all the components.

From php.ini (both):

[Date]

; Defines the default timezone used by the date functions

; http://php.net/date.timezone

date.timezone = "America/Los_Angeles"

System time, according to the container, is correct:

sh-4.3# date

Fri Aug 3 23:46:43 PDT 2018

sh-4.3#

my.cnf:

default-time-zone = America/Los_Angeles

Container startup:

root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name='Cacti' --net='bridge' -e TZ="America/Los_Angeles" -e HOST_OS="unRAID" -p '8180:80/tcp' -v '/mnt/cache/appdata/Cacti':'/config':'rw' 'quantumobject/docker-cacti'

ccddc228f9e6c3c454c8197a797d32d292ad2a2f3b9fe6e0306adc9f17432e2a

The command finished successfully!

What else can I check?

Thanks!

On 8/4/2018 at 8:35 AM, koyaanisqatsi said:

K, looks like adding:

putenv('TZ=America/Los_Angeles');

just beneath the <?php line in /opt/cacti/graph_image.php has resolved the issue. (https://forums.cacti.net/viewtopic.php?f=2&t=47931)

Now I just need to figure out the persistent storage issue. If anyone is working on that, I'd love to see your notes!

Thank you for updating and posting this!! I'm trying to get this same docker running (quantumobject/docker-cacti) and I have the same timezone issue. I'm not within unRaid, just bare Ubuntu. This was the only result that showed up though.

Could you explain how you fixed the timezone issue? I'm unsure how to put

putenv('TZ=America/Los_Angeles');

into the docker system (persistently).

I've also been wondering how this system copes with a reboot. I've not specified any persistent volumes, so do all the stats wipe when the container restarts?
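A possible way to reapply that putenv edit without doing it by hand each time is sketched below. It inserts the line just beneath the first `<?php` line and does nothing if the line is already there. The function name `patch_tz` is made up for this sketch, and it assumes the file starts with a plain `<?php` line; whether the edit actually fixes the graphs is a separate question.

```shell
#!/usr/bin/env bash
# Sketch only: insert putenv('TZ=...'); beneath the first <?php line.
# Usage: patch_tz FILE TIMEZONE
patch_tz() {
  local file="$1" tz="$2"
  local line="putenv('TZ=$tz');"
  local tmp

  # Already patched: leave the file untouched.
  if grep -qF "$line" "$file"; then
    return 0
  fi

  tmp="$(mktemp)"
  # Print every line; after the first line starting with <?php, add ours.
  awk -v line="$line" '
    { print }
    !done && /^<\?php/ { print line; done = 1 }
  ' "$file" > "$tmp" && cat "$tmp" > "$file"   # cat keeps the file's owner and mode
  rm -f "$tmp"
}
```

Run inside the container it would be `patch_tz /opt/cacti/graph_image.php America/Los_Angeles`. If /opt/cacti is mounted from the host the change should survive a new container; otherwise it has to be rerun after each start.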

-

I'm looking to start using Docker soon and your DelugeVPN container looks like what I need, but I also need a VPN for SickRage. Unfortunately my ISP blocks most torrenting websites so SickRage can't find anything without a VPN. Since you've already got Deluge+OpenVPN (maybe not finished, but close), is it easy to create other combinations?

EDIT: An alternative would be to keep using the OpenVPN plugin in Unraid and switch everything else to Docker. Would all the Docker traffic still pass through the VPN if it was just a plugin? I'd rather just have Transmission/Deluge and SickRage on VPN, but all apps would be a partial victory.

-

I'm trying to install btsync_x64_overbyrn.plg but the plugin doesn't show up on the settings page. My command line after "installplg /boot/config/plugins/btsync_x64_overbyrn.plg" shows things like:

....
Warning: simplexml_load_file(): /boot/config/plugins/btsync_x64_overbyrn.plg:1978: parser error : Premature end of data in tag head line 8 in /usr/local/emhttp/plugins/plgMan/plugin on line 125
Warning: simplexml_load_file(): in /usr/local/emhttp/plugins/plgMan/plugin on line 125
Warning: simplexml_load_file(): ^ in /usr/local/emhttp/plugins/plgMan/plugin on line 125
Warning: simplexml_load_file(): /boot/config/plugins/btsync_x64_overbyrn.plg:1978: parser error : Premature end of data in tag html line 7 in /usr/local/emhttp/plugins/plgMan/plugin on line 125
Warning: simplexml_load_file(): in /usr/local/emhttp/plugins/plgMan/plugin on line 125
Warning: simplexml_load_file(): ^ in /usr/local/emhttp/plugins/plgMan/plugin on line 125
plugin: xml parse error

Any ideas?

EDIT: Re-downloaded the file and got it installed alright. Problem solved

-

Would it be possible to set up a VPN connection (OpenVPN to something like PrivateInternetAccess) for certain (or all) containers to use? Ideally I'd like to say "this container and that container should go through VPN connection, whilst the rest go through the normal connection", but all containers going through the VPN would be good too.

-

I've just run into an issue where I lost access to the WebGUI. I rebooted from telnet, several times, and even rebooted it at the machine, but I still can't access the GUI.

I can still access the server via Telnet, and I can see the flash share over the network.

Does anyone know what's happened? Is there a log file somewhere that I should post?

EDIT: Access is back now, but only at //tower. For some reason the IP won't work. Yet Plex etc are available at IP:Port.

-

Is there any way to add new providers (mainly torrents) to SickBeard? I've tried copying the provider files from other builds (junalmeida's) and then updating the list of providers in __init__.py but then SickBeard fails to start. It seems there are many other places that would need updating.

I've also tried installing the other build, but can't get that to start either. I would really like some added torrent providers

EDIT:

Wow, I actually managed it. I'm going to provide a list of files that I had to update:

There are many lines that need copying in these files and the provider .py files need copying to \sickbeard\providers\

Everything seems to be working fine. Hope this can help someone.

NOPE NOPE NOPE

-

I've run into an issue after following this post. After starting up Deluge via:

/etc/rc.d/rc.deluged start

The web client doesn't connect to the Daemon though. Whenever I browse to the client at port 8112, the connection manager appears and it seems offline.

I get the following messages in the log:

[ERROR ] 22:29:44 main:233 /usr/lib/libstdc++.so.6: version `GLIBCXX_3.4.14' not found (required by /usr/lib/python2.6/site-packages/libtorrent.so)
Traceback (most recent call last):
  File "/usr/lib/python2.6/site-packages/deluge/main.py", line 226, in start_daemon
    Daemon(options, args)
  File "/usr/lib/python2.6/site-packages/deluge/core/daemon.py", line 141, in __init__
    from deluge.core.core import Core
  File "/usr/lib/python2.6/site-packages/deluge/core/core.py", line 36, in <module>
    from deluge._libtorrent import lt
  File "/usr/lib/python2.6/site-packages/deluge/_libtorrent.py", line 59, in <module>
    import libtorrent as lt
ImportError: /usr/lib/libstdc++.so.6: version `GLIBCXX_3.4.14' not found (required by /usr/lib/python2.6/site-packages/libtorrent.so)

Is there any way to get the Daemon up and running?
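The error means the installed libstdc++ is older than the one libtorrent.so was built against. A quick sketch for checking which GLIBCXX versions a given libstdc++ file provides is below. The function names `list_glibcxx` and `has_glibcxx` are made up here, and it greps the binary for version strings rather than parsing it properly, so treat the result as a hint.

```shell
#!/usr/bin/env bash
# Sketch only: list the GLIBCXX_* version tags embedded in a library file.
list_glibcxx() {
  # -a treats the binary as text, -o prints only the matching part.
  grep -a -o 'GLIBCXX_[0-9][0-9.]*' "$1" | sort -u
}

# Succeeds if the library provides the given version, e.g. 3.4.14.
has_glibcxx() {
  list_glibcxx "$1" | grep -qxF "GLIBCXX_$2"
}
```

For example, `has_glibcxx /usr/lib/libstdc++.so.6 3.4.14 || echo "libstdc++ too old for this libtorrent build"` would confirm the mismatch reported in the log.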

[Support] Cacti (in Docker Containers)

It works! The data showed up (an hour late), so I removed the container and ran it again. The same data is still visible.

Thank you for your help koyaanisqatsi, your idea of creating a starter database is spot on!!

The timezone issue doesn't seem to be fixed, even after editing that PHP file.