bkastner

Group: Members
Posts: 1198

About bkastner

- Birthday: 08/07/1971
- Gender: Male
- Location: Canada


bkastner's Achievements

- Collaborator (7/14)
- Reputation: 4

-

Perfect. Thank you for your help, Jorge.

-

I have two drives on that particular backplane: the one with 130,000 CRC errors (disk3), and a second drive that has logged a single random one (disk8). Disk3 isn't in the array anymore and is being emulated. I had to do a parity check over the weekend because I inadvertently caused an unclean shutdown, and Disk8 didn't log any CRC errors during the check. The 130,000 CRC errors on Disk3 occurred while UnRAID was rebuilding the previously failed drive, whereas disk8 just had that one minor hiccup. Is a parity check still worthwhile in this scenario? I'm guessing not, but I want to confirm in case I'm missing something.

-

Okay, so I've flashed the firmware (what a pain in the ass that was), replaced all my SFF-8643 cables, and brought the system back up. Everything seems better. The last drive that was screaming at me has 130,050 UDMA CRC errors, but the count no longer seems to be climbing. When I started rebuilding the last failed drive, the CRC errors on this drive were skyrocketing, so I'm guessing the count holding steady now is a good sign. Is there an easy way to test? I've browsed the drive through the GUI, figuring that would trigger a read operation that might make the CRC errors climb, but the count is still the same. I also ran a short SMART test and it came back without error. Is it fairly safe to assume it was a controller/cable issue and I shouldn't have any more drive failures? Or is there another test I should run before considering this case closed?
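One way to answer the "easy way to test" question is to snapshot SMART attribute 199 (UDMA_CRC_Error_Count) before and after a long, sustained read such as a parity check, and see whether it moves. The raw value is a cumulative lifetime counter, so only an increase matters. The Python sketch below assumes smartmontools is installed, that `smartctl -A` prints the usual ATA attribute table with the raw value in the tenth column, and that `/dev/sdX` is a placeholder for the real device; the helper names are hypothetical. A count that stays flat through a full read is consistent with a fixed cable/controller problem, but it is not proof.

```python
import subprocess
from typing import Optional

UDMA_CRC_ID = "199"  # SMART attribute ID for UDMA_CRC_Error_Count on ATA drives


def udma_crc_count(smartctl_output: str) -> Optional[int]:
    """Return the raw UDMA CRC error count from `smartctl -A` text, or None if absent."""
    for line in smartctl_output.splitlines():
        parts = line.split()
        # Attribute rows: ID, name, flag, value, worst, thresh, type,
        # updated, when_failed, raw_value (raw value is the 10th column)
        if len(parts) >= 10 and parts[0] == UDMA_CRC_ID:
            return int(parts[9])
    return None


def read_smart(device: str) -> str:
    """Run `smartctl -A` on a device path such as /dev/sdX (placeholder) and return its text."""
    # smartctl uses non-zero exit bits for drive status, so don't treat them as errors here
    result = subprocess.run(["smartctl", "-A", device],
                            capture_output=True, text=True, check=False)
    return result.stdout


def crc_increase(before: str, after: str) -> int:
    """How many new UDMA CRC errors appeared between two smartctl snapshots."""
    b, a = udma_crc_count(before), udma_crc_count(after)
    if b is None or a is None:
        raise ValueError("attribute 199 not found in smartctl output")
    return a - b
```

Usage: take `before = read_smart("/dev/sdX")`, run a parity check (or another full-disk read), take `after = read_smart("/dev/sdX")`, and check that `crc_increase(before, after)` is 0.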

-

lol... sorry. I didn't realize I'd sent that. I was going to ask about the LSI flash. I had the files on the flash drive but couldn't make them executable, so I was going to ask you, but figured I could create an MS-DOS boot USB with the files and do it that way.

-

@jorgeb

-

Thank you. I've ordered replacement cables and will check the LSI firmware and see how things progress.

-

bkastner started following Continuous Drive Failures

-

For some reason I've been having an issue since May or so. I had a drive fail and got an RMA replacement sent from WD. I did a preclear on another machine, shut down UnRAID, swapped the drive, and brought UnRAID back up to start the rebuild. However, as soon as the rebuild started I began getting UDMA CRC errors on a drive that had previously reported no issues. I figured it was just a fluke that a second drive failed while rebuilding, but once the rebuild was done I RMA'd the newly failed drive and repeated the process. Then another drive failed during that rebuild in the same way, and this just keeps happening over and over.

I am now rebuilding my 5th or 6th drive, and again I am getting a ton of UDMA CRC errors (Disk 3 (disk dsbl) in the logs). While all my previously failed drives were bought a couple of years ago, the new Disk3 drive with errors is a WD Gold I bought back in May (just before all this started), and I precleared it with no issue originally. I don't understand what's going on, but I'm hoping someone can take a look at my diagnostics and provide some insight. This is happening far too frequently for me to think it's genuinely drive failure after failure, but I could be wrong. I know rebooting will sometimes clear the CRC errors, but I'd like to understand the root cause and see if I can do something more permanent to fix it.

cydstorage-diagnostics-20221015-1520.zip

-

I do understand what you are saying, and I believe it makes sense. It's just the odds that so few disks would be hammered that confuse me. Regardless, I will just let it go. I am almost done with the refresh and will manually move files around to balance things out and update my split settings. On the split level question: is it recommended to just let it split any directory as required, or to select a specific level?

-

That all makes sense, and yes... it's OCD behaviour around the TV shows. I know it really doesn't matter, and I should likely get over it. Even with this explanation, I don't understand why the 3 disks I mentioned are being targeted so heavily. I understand and expect some data to be written there, but not to the extent it has been. Also, I have a 500GB minimum free space set on those disks. Even if it's writing to movie folders that already exist there, I could see it using an extra 25-50GB or even 100GB, but disk10 is down to 552MB free and disk5 to 193GB, both well under that threshold. That still confuses me.

-

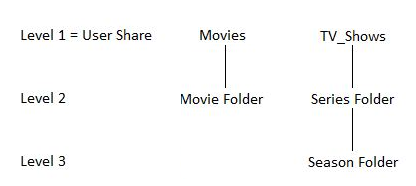

Here is the diagram, as well as a screenshot of my Movies share settings. The TV share is set up identically.

-

So... this server has been running for a decade with these exact same share settings, there are Movies folders on each of the 12 data disks, and this has never been an issue before. When I manually copy to the Movies share, the files can go to any disk. As a test, I just disabled the cache on my Movies share so it writes directly to the array. I picked a movie at random that was on disk8, moved it to my local machine, and then moved it back... and it ended up on disk9. So, is it fair to say that share settings/split level are not the issue I'm having right now?

-

While I do see that a number of disks have been written to, it's interesting that I've gained almost half a TB on Disk4, presumably because the old version of a movie was deleted and the new version went to another disk (other than mover, I don't think I've touched any files). I'm only guessing, but I can't think of another reason space would have gone up rather than down. I do look at the cache disk; I'm constantly monitoring it to see what's been completed, what's still showing incomplete, etc. Right now I have 173 movies in the Movies folder on the cache drive. Because the file names are different, I don't think anything is being overwritten; they would be treated as separate files (I've accidentally ended up with movie duplicates this way before).
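To check the assumption that differently named files end up side by side rather than overwriting each other, a quick scan can list movie folders that hold more than one video file, merging the same folder name across disks. This is a hypothetical Python sketch: it assumes the usual Unraid per-disk mounts such as `/mnt/disk2/Movies` and `/mnt/cache/Movies`, one movie per folder, and an extension list you would adjust to your library.

```python
import os
from collections import defaultdict
from typing import Dict, Iterable, List

VIDEO_EXTENSIONS = {".mkv", ".mp4", ".avi", ".m4v"}  # assumption: adjust to your library


def find_duplicate_movies(share_roots: Iterable[str]) -> Dict[str, List[str]]:
    """Map each movie folder name to its video files when more than one exists.

    The same folder name found under several roots (e.g. disk2 and disk9) is
    merged, so a movie whose old and new copies sit on different disks is caught too.
    """
    videos: Dict[str, List[str]] = defaultdict(list)
    for root in share_roots:
        if not os.path.isdir(root):
            continue  # skip roots that don't exist (e.g. a disk without a Movies folder)
        for folder in sorted(os.listdir(root)):
            folder_path = os.path.join(root, folder)
            if not os.path.isdir(folder_path):
                continue
            for name in sorted(os.listdir(folder_path)):
                if os.path.splitext(name)[1].lower() in VIDEO_EXTENSIONS:
                    videos[folder].append(os.path.join(folder_path, name))
    return {folder: files for folder, files in videos.items() if len(files) > 1}
```

Usage: `find_duplicate_movies(["/mnt/cache/Movies"] + [f"/mnt/disk{n}/Movies" for n in range(1, 13)])` for 12 data disks plus the cache.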

-

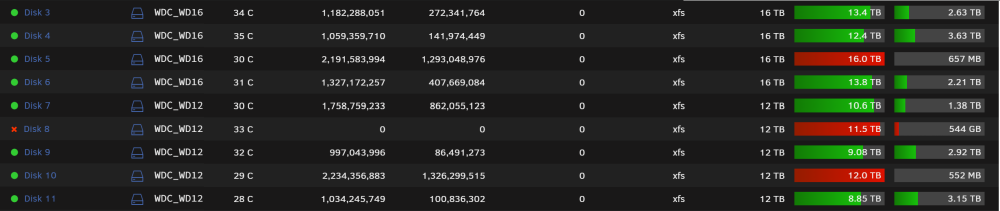

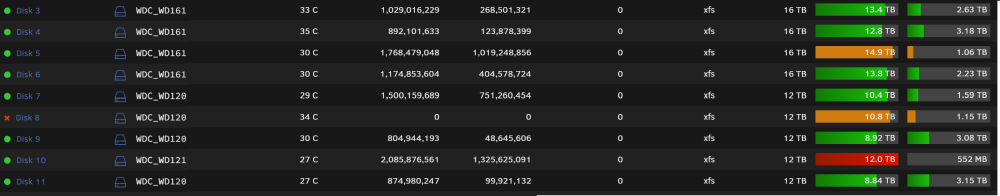

So, I haven't done the one off test yet, but here is the picture I posted yesterday of the drives, and then again from just now. Not absoutely everything uses those 3 disks, but it looks like the vast majority does. Yesterday: Today:

-

I still tend to leave TV at split level 1, as I'd prefer to have everything related to a show in a single folder on one disk, but there is no real reason for it, I suppose. My brain just likes things ordered like that. Everything is structured as Movies\Movie Name (year)\Movie Name, and since I'm not downloading metadata there's only a single file in a given movie folder, so there is really nothing to split beyond the first level.
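Under a simplified reading of split levels (an assumption, not Unraid's actual code), split level N means the first N directory levels below the share must stay together on one disk, so at level 1 the `Movie Name (year)` or show folder pins every file inside it. The hypothetical helper below shows which directory does the pinning; it uses forward slashes because the array disks are Linux paths, whereas the `Movies\...` form above is the Windows share view.

```python
from pathlib import PurePosixPath


def pinning_directory(relative_path: str, split_level: int) -> str:
    """Return the share-relative directory that pins a file to one disk.

    Hypothetical model: with split level N, the first N directory levels
    below the share cannot be split across disks.
    """
    if split_level < 1:
        raise ValueError("split_level must be at least 1 in this model")
    directories = PurePosixPath(relative_path).parent.parts
    return "/".join(directories[:split_level])
```

With level 1, a TV episode is pinned by its show folder, which is why all seasons stay together; level 2 would pin by `Show/Season` instead.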

-

This only applies if the file name is exactly the same, though, correct? As mentioned in my original post, there is zero chance of that. Up until this refresh project I just used movie.mkv, but now I use the movie name, quality, audio setup, IMDb link, release group, etc. Before this project I didn't use Radarr; I manually cleaned up movies, fixed metadata with Media Companion, and then moved them to Unraid. I am now trying to automate this and narrow my focus to finding the best-quality version that meets my criteria. So the folder would already exist, but the movie file name wouldn't, as the old file seems to get deleted once the new file lands in the Movies folder on my cache drive. I would still sort of expect that if the folder exists on disk2, for example, the new movie would be put into that same folder, but that doesn't appear to be what's happening. As I think more about this, I likely need to test with a specific movie and follow it through the process. Right now I've just been searching all movies in a given year and letting the 300-400 movies update as a single batch.
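The placement expected here can be written down as a small model to compare against when following a single movie through the process. This is a hypothetical Python sketch, not Unraid's allocation code: it assumes an existing pinning folder wins even over the minimum free space setting, and it uses "most free space" as a stand-in for whatever allocation method the share is actually configured with.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Disk:
    name: str
    free_bytes: int
    directories: Set[str] = field(default_factory=set)  # share-relative folders on this disk


def expected_disk(disks: List[Disk], pinning_dir: str, min_free_bytes: int) -> Optional[str]:
    """Hypothetical model of which disk a new file 'should' land on."""
    # Step 1 (assumption): an existing pinning folder wins, even below minimum free space.
    # If the folder exists on several disks, the first one listed is chosen.
    for disk in disks:
        if pinning_dir in disk.directories:
            return disk.name
    # Step 2: a brand-new folder may only go to disks above the minimum free space.
    eligible = [d for d in disks if d.free_bytes >= min_free_bytes]
    if not eligible:
        return None
    # Stand-in for the share's real allocation method: the disk with the most free space.
    return max(eligible, key=lambda d: d.free_bytes).name
```

If a tracked movie whose folder lives on disk2 ends up somewhere else, step 1 of this model does not match what the server is doing, which narrows down where to look.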