5hurb

-

Posts

15 -


Posts posted by 5hurb

-

-

On 10/5/2021 at 4:38 PM, Walter S said:

Hi All, I've checked-out Spaceinvader Ones' Video on setting up Tdarr. I've been playing with this app/docker for a couple days now after seeing the vid and it seems pretty useful. I was wondering how to setup CPU=Health checking, GPU=Transcoding? any help would be great. ty.. Are these the right settings? I just don't see any change in the Node Overview (Active workers) Thanks..

Health checks are quick, so they have most likely all finished already. It only took an hour or two to scan 20 TB.

-

12 hours ago, djblu said:

Maybe use a VM with the 2nd GPU passed through, then set up a Tdarr node on the VM.

Make sure you pass through the shares (Tdarr transcode/Media) too.

That's definitely an option.

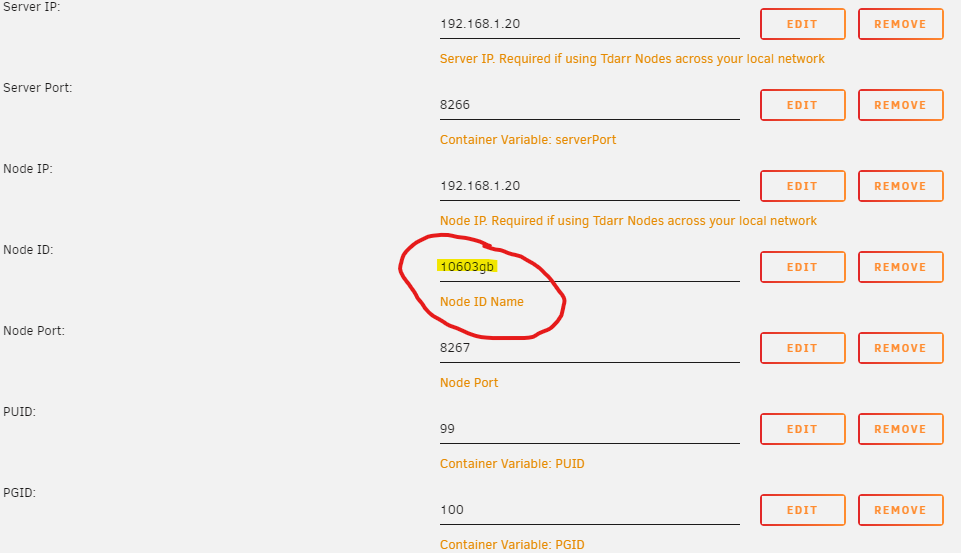

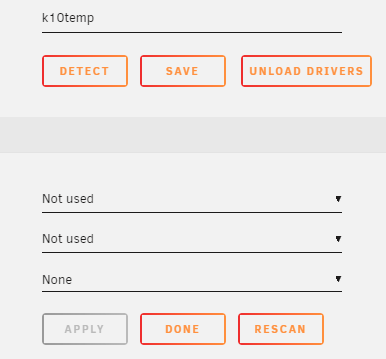

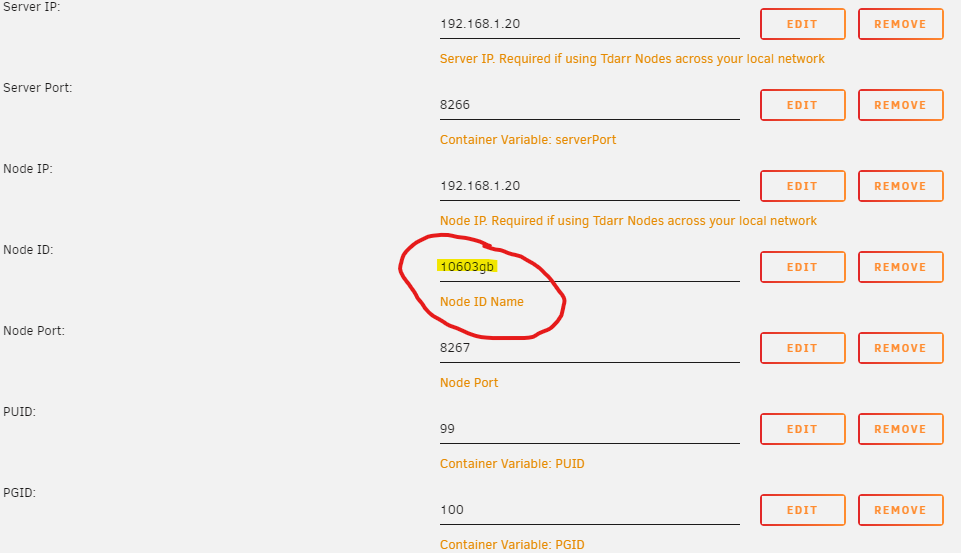

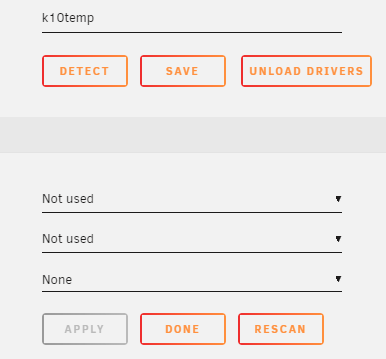

I had another play around today and checked the node logs. If you change the port of the 2nd Docker container to 8268, the container still thinks it is on 8267. This looks to be a bug in tdarr_node.

I get the blinking node in the web interface every time the node registers:

[2021-10-02T10:33:14.191] [INFO] Tdarr_Node - Starting Tdarr_Node

Preparing environment

[2021-10-02T10:33:14.208] [INFO] Tdarr_Node - Updating plugins

[2021-10-02T10:33:14.213] [INFO] Tdarr_Node - Tdarr_Node listening at http://localhost:8267

[2021-10-02T10:33:14.300] [INFO] Tdarr_Node - ---------------Binary tests start----------------

[2021-10-02T10:33:14.308] [INFO] Tdarr_Node - handbrakePath:HandBrakeCLI

[2021-10-02T10:33:14.317] [INFO] Tdarr_Node - ffmpegPath:ffmpeg

[2021-10-02T10:33:14.326] [INFO] Tdarr_Node - mkvpropedit:mkvpropedit

[2021-10-02T10:33:14.326] [INFO] Tdarr_Node - Binary test 1: handbrakePath working

[2021-10-02T10:33:14.327] [INFO] Tdarr_Node - Binary test 2: ffmpegPath working

[2021-10-02T10:33:14.327] [INFO] Tdarr_Node - Binary test 3: mkvpropeditPath working

[2021-10-02T10:33:14.327] [INFO] Tdarr_Node - ---------------Binary tests end-------------------

[2021-10-02T10:33:14.626] [INFO] Tdarr_Node - Cloning plugins

[2021-10-02T10:33:15.327] [INFO] Tdarr_Node - Finished downloading plugins!

[2021-10-02T10:33:16.221] [INFO] Tdarr_Node - Node registered

[2021-10-02T10:33:17.516] [INFO] Tdarr_Node - [2.891s]Plugin update finished

[2021-10-02T10:33:20.490] [INFO] Tdarr_Node - Node registered

[2021-10-02T10:33:24.539] [INFO] Tdarr_Node - Node registered

[2021-10-02T10:33:28.541] [INFO] Tdarr_Node - Node registered
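If anyone wants to check their own node log for the same symptom, here is a rough Python sketch. It only assumes the line format shown in the log above; the function name `summarize_node_log` and the sample constant are made up for illustration and are not part of Tdarr. It reports the port the node says it is listening on and how often "Node registered" repeats.

```python
import re
from datetime import datetime

# Timestamp as it appears in the node log, e.g. [2021-10-02T10:33:16.221]
STAMP = re.compile(r"\[(\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}\.\d{3})\]")
# Port from "Tdarr_Node listening at http://localhost:8267"
PORT = re.compile(r"listening at http://[^:\s]+:(\d+)")

# A few lines copied from the log in this post.
SAMPLE_LOG = """\
[2021-10-02T10:33:14.213] [INFO] Tdarr_Node - Tdarr_Node listening at http://localhost:8267
[2021-10-02T10:33:16.221] [INFO] Tdarr_Node - Node registered
[2021-10-02T10:33:20.490] [INFO] Tdarr_Node - Node registered
[2021-10-02T10:33:24.539] [INFO] Tdarr_Node - Node registered
[2021-10-02T10:33:28.541] [INFO] Tdarr_Node - Node registered
"""


def summarize_node_log(text):
    """Return the reported listening port and the gaps between registrations."""
    port = None
    registered_at = []
    for line in text.splitlines():
        port_match = PORT.search(line)
        if port_match:
            port = int(port_match.group(1))
        if "Node registered" in line:
            stamp = STAMP.search(line)
            if stamp:
                registered_at.append(
                    datetime.strptime(stamp.group(1), "%Y-%m-%dT%H:%M:%S.%f")
                )
    # Seconds between consecutive "Node registered" lines.
    gaps = [
        (later - earlier).total_seconds()
        for earlier, later in zip(registered_at, registered_at[1:])
    ]
    return {"port": port, "registrations": len(registered_at), "gaps_seconds": gaps}


if __name__ == "__main__":
    print(summarize_node_log(SAMPLE_LOG))
```

If the reported port is still 8267 after you changed the container's port, or the node re-registers every few seconds, you are probably seeing the same thing I am.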

I also found another wee problem. If you give the node an ID made up of only numbers, you get the same behaviour and it just blinks in the web interface. The ID needs to contain letters, so you can't just call it 1060.

-

11 hours ago, mike2246 said:

I've got it working as well with 1 Docker node. I tried to make a second Docker node keeping all settings the same except Name/Node Port/GPU, but the server doesn't detect it unless I use node port 8267, which I can't do on both at the same time. Is there a way around this? Or can 2 GPUs be added to 1 node?

I've tried adding the 2nd GPU to the node, but unfortunately it only uses one. Anyone else got ideas?

-

14 hours ago, surferjsmc said:

Hi ppl!

I've followed Spaceinvader One's guide to set up Tdarr using the Nvidia GTX 970 in my server. Everything looks correct, but I can't set anything on the node part of the main page: it keeps blinking in a loop, showing the GPU node for about 2 seconds and then changing back to "no Tdarr nodes detected".

The Nvidia driver installed is the latest one, v470.74.

Anyone having this issue ?

Double-check that you have --runtime=nvidia in the Extra Parameters field (advanced view) on the node.

Now for the issue I have: I have 2 GPUs, so how do I get both working? It will take months to get through my database at the rate it is going with just 1.
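For reference, this is roughly what I am attempting, written out as a Python sketch that only builds and prints the `docker run` commands (it does not run them). It is one node container per GPU, not a confirmed fix. `--runtime=nvidia` and the two `NVIDIA_*` variables come from the Nvidia container runtime; the image name `haveagitgat/tdarr_node`, the `nodeID`/`nodePort`/`serverIP`/`serverPort` variable names and the default server port 8266 are my assumptions about the Tdarr template, so check them against your own. The GPU UUIDs and IP address are placeholders, and volume mappings are left out.

```python
import shlex


def tdarr_node_args(name, gpu_uuid, node_port, server_ip, server_port=8266):
    """Build a docker run argument list for one Tdarr node pinned to one GPU."""
    if name.isdigit():
        # An all-numeric node ID is reported elsewhere in this thread to make
        # the node blink in the web interface.
        raise ValueError("node ID must contain letters, not just numbers")
    return [
        "docker", "run", "-d",
        "--name", name,
        "--runtime=nvidia",                          # needed for GPU access
        "-e", f"NVIDIA_VISIBLE_DEVICES={gpu_uuid}",  # expose only this GPU
        "-e", "NVIDIA_DRIVER_CAPABILITIES=all",
        "-e", f"nodeID={name}",                      # assumed Tdarr variable name
        "-e", f"nodePort={node_port}",               # assumed Tdarr variable name
        "-e", f"serverIP={server_ip}",               # assumed Tdarr variable name
        "-e", f"serverPort={server_port}",           # assumed Tdarr variable name
        "-p", f"{node_port}:{node_port}",
        "haveagitgat/tdarr_node",                    # assumed image name
    ]


if __name__ == "__main__":
    # Placeholder UUIDs; list the real ones with `nvidia-smi -L`.
    nodes = [
        ("gpu1060", "GPU-aaaaaaaa", 8267),
        ("gpu1070", "GPU-bbbbbbbb", 8268),
    ]
    for name, uuid, port in nodes:
        print(shlex.join(tdarr_node_args(name, uuid, port, "192.168.1.10")))
```

Each container only sees the one GPU named in `NVIDIA_VISIBLE_DEVICES`, so in theory each node transcodes on its own card.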

-

Yeah, it's not working on a Ryzen 3700X with an ASUS ROG STRIX B450-F Gaming.

-


-

-

So I am looking at changing up my hardware. Currently I have an i7 3770K, 16 GB RAM, no GPU, 10x 4 TB drives, etc.

My server is mainly used for Plex and a Windows 10 VM for ISO torrents and Blue Iris, and it is starting to show its age. A new Intel CPU just does not make sense when AMD offers many more cores for less money, so I'm looking at going with a Ryzen 3700X and 32 GB RAM on a B450 board. This way I can isolate/allocate cores for the VM, Plex and Unraid, and I can play with other VMs/Dockers without slowdowns or running out of RAM.

iGPU transcoding with Plex is obviously not going to work anymore. Can Plex transcode 1080p on the Ryzen CPU, or do I need to throw an Nvidia GPU at it?

If I do put an Nvidia GPU (1070 or 2060) in, can I pass it through to the Windows 10 VM for some light gaming while still being able to hardware transcode in Plex?

Are there any other pinch points you guys can think of before I do this?

-

You made that seem like a simple task...

Three motherboards later, I got it to update to 20.00.07.00 on an old AM3 board with FreeDOS.

It used to throw the error pretty much straight away. Ten minutes in there is nothing yet, so fingers crossed. Speeds still look the same as before, so it is looking like a win.

Thank you for pointing me in the right direction.

-

My system has been running perfectly fine without errors using the motherboard SATA ports and a cheap PCIe 5-port add-in card, but I am looking to expand, so I am trying these SFF-8087 to 4x SATA cards and cables, and they are just not happy.

I am getting UDMA CRC errors quite often. From what I have read, cables are normally the cause.

I have tried 4 different cables and 2 different PCIe cards and am still getting these errors.

LSI SAS 9207-8i 7.39.00.00 (2014.09.18)

IOCREST PCIe to 8 internal ports SATA 6G (I do not recommend it; I just got it for testing)

1st set of cables are the generic eBay light blue ones. I got a lot more errors with these than with the 2nd set.

2nd set of cables are from StarTech at 23x the eBay cost (the only ones available locally).

Does anyone know if there is a firmware/BIOS update that might solve this? Or how bad is it if I ignore these and just turn off error reporting for attribute 199?
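Since the CRC counter is cumulative and never resets, what matters is whether it keeps growing after a cable or card swap. Here is a small Python sketch of how I compare two readings. It assumes the usual ten-column attribute table printed by `smartctl -A`; the two sample rows are made-up numbers for illustration, not readings from my drives.

```python
def udma_crc_count(smartctl_output):
    """Return the raw value of SMART attribute 199, or None if it is absent."""
    for line in smartctl_output.splitlines():
        fields = line.split()
        # Attribute rows have ten columns: ID, name, flag, value, worst,
        # threshold, type, updated, when_failed, raw_value.
        if len(fields) >= 10 and fields[0] == "199":
            return int(fields[9])
    return None


def crc_growth(before, after):
    """Number of new CRC errors between two smartctl -A captures."""
    first, second = udma_crc_count(before), udma_crc_count(after)
    if first is None or second is None:
        return None
    return second - first


# Hypothetical captures taken before and after a cable swap.
SAMPLE_BEFORE = """\
ID# ATTRIBUTE_NAME          FLAG     VALUE WORST THRESH TYPE      UPDATED  WHEN_FAILED RAW_VALUE
  9 Power_On_Hours          0x0032   095   095   000    Old_age   Always       -       4012
199 UDMA_CRC_Error_Count    0x003e   200   200   000    Old_age   Always       -       12
"""
SAMPLE_AFTER = SAMPLE_BEFORE.replace("       12\n", "       15\n")

if __name__ == "__main__":
    print("new CRC errors:", crc_growth(SAMPLE_BEFORE, SAMPLE_AFTER))
```

A growth of zero over a few days of normal load would suggest the new cable or card is fine, even though the old count stays on the drive.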

-

Nope still the same. Will start swapping systems soon.

-

Cooling is good, with the CPU sitting at 47°C at 50% load. I've just noticed that I'm down to 6 cores on the CPU: Unraid has shut down cores 3 and 7. I will try reseating the CPU and RAM first.

-

Hey, I just checked my server and the system log is full of errors. It had been up for 35 days, so I tried a reboot, which did not help. I am hoping someone can have a look at the logs and see if it is terminal.

Server still seems to be running ok and the drives are ok.

eg:

Nov 9 22:21:11 Tower root: <26>Nov 9 22:21:10 mcelog: Offlining CPU 7 due to cache error threshold

Nov 9 22:21:11 Tower root: MCA: Instruction CACHE Level-1 Instruction-Fetch Error

Nov 9 22:21:10 Tower root: <26>Nov 9 22:21:10 mcelog: Offlining CPU 3 due to cache error threshold
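To see at a glance which cores have been taken offline, the syslog can be filtered with a few lines of Python. This sketch only assumes the "Offlining CPU N due to ..." wording shown in the lines above; the function name `offlined_cpus` is made up for illustration.

```python
import re

# Matches e.g. "mcelog: Offlining CPU 7 due to cache error threshold"
OFFLINE = re.compile(r"mcelog: Offlining CPU (\d+) due to (.+)$")

# The two mcelog lines quoted in this post.
SAMPLE_SYSLOG = """\
Nov 9 22:21:11 Tower root: <26>Nov 9 22:21:10 mcelog: Offlining CPU 7 due to cache error threshold
Nov 9 22:21:11 Tower root: MCA: Instruction CACHE Level-1 Instruction-Fetch Error
Nov 9 22:21:10 Tower root: <26>Nov 9 22:21:10 mcelog: Offlining CPU 3 due to cache error threshold
"""


def offlined_cpus(log_text):
    """Map each offlined CPU number to the reason mcelog gave."""
    reasons = {}
    for line in log_text.splitlines():
        match = OFFLINE.search(line)
        if match:
            reasons[int(match.group(1))] = match.group(2).strip()
    return reasons


if __name__ == "__main__":
    for cpu, reason in sorted(offlined_cpus(SAMPLE_SYSLOG).items()):
        print(f"CPU {cpu}: {reason}")
```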

-

36 minutes ago, jonathanm said:

Any changes made in XML view are removed if you modify anything in form view. So, once you start modifying the XML, don't switch the view back.

Thank you for getting back to me. Sorry, I only included the XML to see if something in there was not right.

I'm using the GUI to change the Hyper-V setting to No, but it does nothing for me. When I go back in to edit the VM, Hyper-V is always set to Yes.

I'm getting Code 43 on an old Nvidia 9800 GT card that I'm trying to pass through to the Windows 10 VM for CUDA support in Blue Iris.

-

I seem to have an odd one where I change Hyper-V to No and update, but it automagically changes back to Yes.

I'm no good with XML, but this is what it looks like:

<?xml version='1.0' encoding='UTF-8'?>

<domain type='kvm'>

<name>Windows 10 VM</name>

<uuid>acdfb713-0f86-d6ac-f3a2-3d58a29e8e67</uuid>

<metadata>

<vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>

</metadata>

<memory unit='KiB'>8388608</memory>

<currentMemory unit='KiB'>8388608</currentMemory>

<memoryBacking>

<nosharepages/>

</memoryBacking>

<vcpu placement='static'>3</vcpu>

<cputune>

<vcpupin vcpu='0' cpuset='1'/>

<vcpupin vcpu='1' cpuset='2'/>

<vcpupin vcpu='2' cpuset='3'/>

</cputune>

<os>

<type arch='x86_64' machine='pc-i440fx-3.1'>hvm</type>

<loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>

<nvram>/etc/libvirt/qemu/nvram/acdfb713-0f86-d6ac-f3a2-3d58a29e8e67_VARS-pure-efi.fd</nvram>

</os>

<features>

<acpi/>

<apic/>

<hyperv>

<relaxed state='on'/>

<vapic state='on'/>

<spinlocks state='on' retries='8191'/>

<vendor_id state='on' value='none'/>

</hyperv>

</features>

<cpu mode='host-passthrough' check='none'>

<topology sockets='1' cores='3' threads='1'/>

</cpu>

<clock offset='localtime'>

<timer name='hypervclock' present='yes'/>

<timer name='hpet' present='no'/>

</clock>

<on_poweroff>destroy</on_poweroff>

<on_reboot>restart</on_reboot>

<on_crash>restart</on_crash>

<devices>

<emulator>/usr/local/sbin/qemu</emulator>

<disk type='file' device='disk'>

<driver name='qemu' type='raw' cache='writeback'/>

<source file='/mnt/user/domains/Windows 10 VM/vdisk1.img'/>

<target dev='hdc' bus='virtio'/>

<boot order='1'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>

</disk>

<disk type='file' device='cdrom'>

<driver name='qemu' type='raw'/>

<source file='/mnt/user/isos/Windows.iso'/>

<target dev='hda' bus='ide'/>

<readonly/>

<boot order='2'/>

<address type='drive' controller='0' bus='0' target='0' unit='0'/>

</disk>

<disk type='file' device='cdrom'>

<driver name='qemu' type='raw'/>

<source file='/mnt/user/isos/virtio-win-0.1.160-1.iso'/>

<target dev='hdb' bus='ide'/>

<readonly/>

<address type='drive' controller='0' bus='0' target='0' unit='1'/>

</disk>

<controller type='pci' index='0' model='pci-root'/>

<controller type='ide' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>

</controller>

<controller type='virtio-serial' index='0'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>

</controller>

<controller type='usb' index='0' model='ich9-ehci1'>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci1'>

<master startport='0'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci2'>

<master startport='2'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>

</controller>

<controller type='usb' index='0' model='ich9-uhci3'>

<master startport='4'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>

</controller>

<interface type='bridge'>

<mac address='52:54:00:fd:4e:d6'/>

<source bridge='br0'/>

<model type='virtio'/>

<address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>

</interface>

<serial type='pty'>

<target type='isa-serial' port='0'>

<model name='isa-serial'/>

</target>

</serial>

<console type='pty'>

<target type='serial' port='0'/>

</console>

<channel type='unix'>

<target type='virtio' name='org.qemu.guest_agent.0'/>

<address type='virtio-serial' controller='0' bus='0' port='1'/>

</channel>

<input type='tablet' bus='usb'>

<address type='usb' bus='0' port='1'/>

</input>

<input type='mouse' bus='ps2'/>

<input type='keyboard' bus='ps2'/>

<hostdev mode='subsystem' type='pci' managed='yes'>

<driver name='vfio'/>

<source>

<address domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>

</source>

<address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>

</hostdev>

<hostdev mode='subsystem' type='usb' managed='no'>

<source>

<vendor id='0x046d'/>

<product id='0xc077'/>

</source>

<address type='usb' bus='0' port='2'/>

</hostdev>

<hostdev mode='subsystem' type='usb' managed='no'>

<source>

<vendor id='0x046d'/>

<product id='0xc31c'/>

</source>

<address type='usb' bus='0' port='3'/>

</hostdev>

<memballoon model='none'/>

</devices>

</domain>
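For anyone who, like me, finds it hard to eyeball this XML, a short Python sketch can report the parts that matter for Code 43. It checks whether the `<hyperv>` block is present, what `vendor_id` is set to, and whether the `<kvm><hidden state='on'/></kvm>` feature (a libvirt option often suggested for Nvidia passthrough) exists. The function name is made up, and the sample is a cut-down copy of the XML above.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of the domain XML from this post.
SAMPLE_XML = """\
<domain type='kvm'>
  <name>Windows 10 VM</name>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state='on'/>
      <vapic state='on'/>
      <spinlocks state='on' retries='8191'/>
      <vendor_id state='on' value='none'/>
    </hyperv>
  </features>
  <devices>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
    </hostdev>
    <hostdev mode='subsystem' type='usb' managed='no'/>
  </devices>
</domain>
"""


def passthrough_report(domain_xml):
    """Summarise the Hyper-V and KVM-hidden settings of a libvirt domain."""
    root = ET.fromstring(domain_xml)
    features = root.find("features")
    hyperv = features.find("hyperv") if features is not None else None
    vendor = hyperv.find("vendor_id") if hyperv is not None else None
    hidden = features.find("kvm/hidden") if features is not None else None
    return {
        "hyperv_enabled": hyperv is not None,
        "vendor_id": vendor.get("value") if vendor is not None else None,
        "kvm_hidden": hidden is not None and hidden.get("state") == "on",
        "pci_hostdevs": len(root.findall("devices/hostdev[@type='pci']")),
    }


if __name__ == "__main__":
    print(passthrough_report(SAMPLE_XML))
```

Run against the XML above, it shows Hyper-V still enabled and no hidden-KVM entry, which matches what the form view keeps showing me.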

[Support] HaveAGitGat - Tdarr: Audio/Video Library Analytics & Transcode Automation

in Docker Containers


It looks like the share for your transcode folder is set to Prefer for the cache drive. Try changing this to Only so that the mover won't touch the files.