Posts posted by benfishbus

-

The virtiofs support is working great with my Ubuntu VM. I just had one small problem, which I managed to solve with a workaround: Ubuntu could not automount an Unraid share with the tag "media" via fstab. I don't know exactly why. dmesg in the VM looked like this:

    [ 24.644594] systemd[1]: Mounting /mnt/unraid/ben...
    [ 24.647784] systemd[1]: Mounting /mnt/unraid/media...
    [ 24.669726] virtiofs virtio0: virtio_fs_setup_dax: No cache capability
    [ 24.679994] virtiofs virtio1: virtio_fs_setup_dax: No cache capability
    [ 24.685471] virtio-fs: tag </media> not found

I tried additional shares, and all of them worked - but not the "media" share. I tried changing the order of the shares in fstab - same result. If I ran "sudo mount -av" at a command prompt, Ubuntu would mount the "media" share no problem - but never at bootup.

The workaround was to specify the "media" share manually in the VM config, using some other tag like "xmedia" - and suddenly Ubuntu had no problem automounting the share.
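One detail worth noticing in the dmesg output above: the kernel reports the missing tag as "/media", with a leading slash, rather than "media". Here is a minimal Python sketch for pulling the failing tag names out of a dmesg dump so that kind of mismatch is easier to spot. The function name and the sample text are only for illustration, and the regex assumes the message format shown in the log above.

```python
import re

# Matches kernel lines like: "virtio-fs: tag </media> not found"
# (message format assumed from the dmesg excerpt in this post).
TAG_NOT_FOUND = re.compile(r"virtio-fs: tag <([^>]*)> not found")


def missing_virtiofs_tags(dmesg_text):
    """Return the virtiofs tags the guest kernel could not find, in order."""
    return TAG_NOT_FOUND.findall(dmesg_text)


# Two lines copied from the log above, used as sample input.
sample = (
    "[ 24.679994] virtiofs virtio1: virtio_fs_setup_dax: No cache capability\n"
    "[ 24.685471] virtio-fs: tag </media> not found\n"
)

for tag in missing_virtiofs_tags(sample):
    # A leading slash means the kernel was handed a path-like string,
    # not the bare tag name configured on the host.
    note = " (looks like a path, not a bare tag)" if tag.startswith("/") else ""
    print(f"tag not found: {tag}{note}")
```

In a guest you could feed it the real output, e.g. by piping `dmesg` into a file and reading that. It only reports what the kernel saw; it does not explain what rewrote the tag.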

Now if only I didn't have to resort to XML or virt-manager to add a virtual sound card to a VM in Unraid...

-

4 hours ago, primeval_god said:

@benfishbus A new version of the plugin is available which I believe will solve your issue.

Text is all legible now, thanks!

-

3 hours ago, primeval_god said:

@benfishbus Do you happen to have the unassigned devices plugin installed?

No. Do you want a list of the plugins I do have installed?

-

12 hours ago, primeval_god said:

I am unable to reproduce this issue locally. It is true that the popups are not themed, but they look fine to me when running the black theme. What version of unRAID are you using?

6.11.0 release.

Tried resetting Firefox theme in case it might be interfering - no change. Behaves the same for me in Edge.

Just tried changing Unraid themes - "Gray" renders the same popup this way:

Solves legibility for me. Unfortunately I also hate the layout of that theme, so would still love to figure this out. (If only Unraid made color scheme and layout separate options...)

In experimenting with the different Unraid themes, I thought this seemed strange with the "White" theme:

Guessing that Unraid's theming function is not as robust as it could be.

-

-

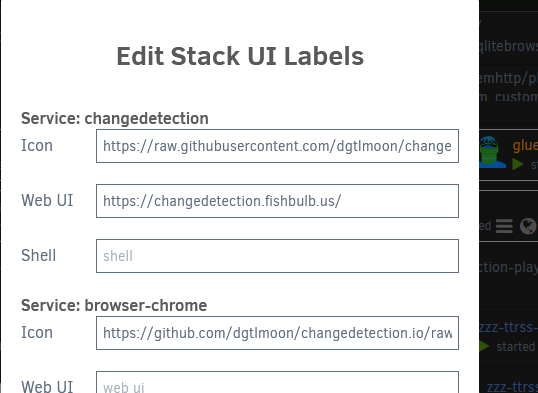

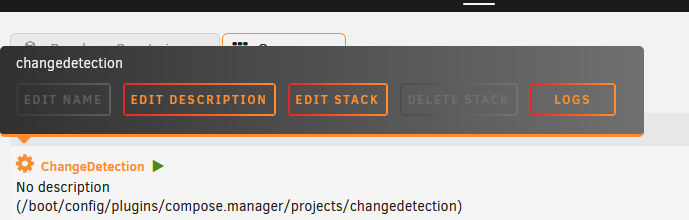

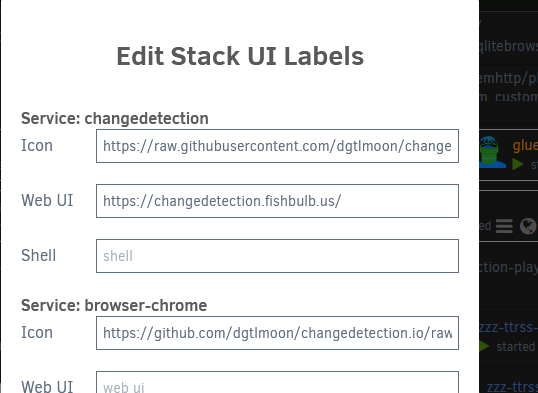

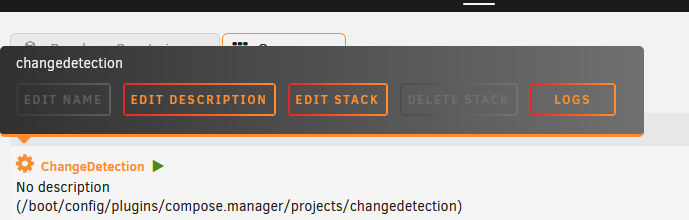

Thanks for making this. While we wait for whatever Docker changes await in 6.10, this is fun to play with.

How about adding a Compose Logs button?

Also, the plugin allows you to rename a running stack, but then it can't control it - i.e., you hit the Compose Down button and it says no container with the new name is running.

-

I've been getting this too on 6.9.2 - the Docker page was basically unusable - and finally pulled diagnostics (attached). Docker.log was full of...something. Uptime on all my containers looked fine, though. Well, for some reason I decided to run

    docker container prune

which cleared out all the stopped containers...and stopped the refreshing. I added back all the stopped containers I still need, which was easy because the templates were still there.
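Since the prune removes every stopped container in one go, it can help to list them first. A minimal Python sketch, assuming the docker CLI is on the PATH; the function names are made up for illustration, and filtering on `status=exited` is only an approximation of what the prune would remove.

```python
import shutil
import subprocess


def stopped_containers_cmd():
    """Build the docker CLI call that lists exited containers by name."""
    return ["docker", "ps", "-a", "--filter", "status=exited",
            "--format", "{{.Names}}"]


def list_stopped_containers():
    """Return names of exited containers, or [] if docker is not installed."""
    if shutil.which("docker") is None:
        return []
    result = subprocess.run(stopped_containers_cmd(),
                            capture_output=True, text=True, check=False)
    return [name for name in result.stdout.splitlines() if name]


if __name__ == "__main__":
    # Review this list before running "docker container prune".
    for name in list_stopped_containers():
        print(name)
```

Nothing here deletes anything; it only shows which containers would need to be re-added from their templates afterwards.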

-

I am able to get virtio-fs working on bare metal Ubuntu Server 21.04 (libvirt 7.0.0, qemu 5.2.0), but not Unraid 6.9.2 (libvirt 6.5.0, qemu 5.1.0).

For my (Windows 10) VM domain, I used Virt-manager to insert the following in XML:

    <memoryBacking>
      <access mode="shared"/>
    </memoryBacking>
    ...
    <cpu ...>
      ...
      <numa>
        <cell id="0" cpus="0-7" memory="8388608" unit="KiB" memAccess="shared"/>
      </numa>
    </cpu>

Then added a Filesystem using the following XML:

    <filesystem type="mount" accessmode="passthrough">
      <driver type="virtiofs"/>
      <source dir="/mnt/user"/>
      <target dir="unraid"/>
    </filesystem>

Virt-manager/libvirt assigned the filesystem the following hardware address:

<address type="pci" domain="0x0000" bus="0x00" slot="0x0b" function="0x0"/>

Everything adds fine, but the VM won't start. Log is as follows:

    -rtc base=localtime \
    -no-hpet \
    -no-shutdown \
    -boot strict=on \
    -device pci-bridge,chassis_nr=1,id=pci.1,bus=pci.0,addr=0x3 \
    -device ich9-usb-ehci1,id=usb,bus=pci.0,addr=0x7.0x7 \
    -device ich9-usb-uhci1,masterbus=usb.0,firstport=0,bus=pci.0,multifunction=on,addr=0x7 \
    -device ich9-usb-uhci2,masterbus=usb.0,firstport=2,bus=pci.0,addr=0x7.0x1 \
    -device ich9-usb-uhci3,masterbus=usb.0,firstport=4,bus=pci.0,addr=0x7.0x2 \
    -device ahci,id=sata0,bus=pci.0,addr=0xa \
    -device virtio-serial-pci,id=virtio-serial0,bus=pci.0,addr=0x5 \
    -blockdev '{"driver":"file","filename":"/mnt/cache/domains/Windows-10/vdisk1.img","node-name":"libvirt-2-storage","cache":{"direct":false,"no-flush":false},"auto-read-only":true,"discard":"unmap"}' \
    -blockdev '{"node-name":"libvirt-2-format","read-only":false,"cache":{"direct":false,"no-flush":false},"driver":"raw","file":"libvirt-2-storage"}' \
    -device virtio-blk-pci,bus=pci.0,addr=0x9,drive=libvirt-2-format,id=virtio-disk2,bootindex=1,write-cache=on \
    -blockdev '{"driver":"file","filename":"/mnt/user/isos/virtio-win-0.1.190.iso","node-name":"libvirt-1-storage","auto-read-only":true,"discard":"unmap"}' \
    -blockdev '{"node-name":"libvirt-1-format","read-only":true,"driver":"raw","file":"libvirt-1-storage"}' \
    -device ide-cd,bus=sata0.0,drive=libvirt-1-format,id=sata0-0-0 \
    -chardev socket,id=chr-vu-fs0,path=/var/lib/libvirt/qemu/domain-4-Windows-10/fs0-fs.sock \
    -device vhost-user-fs-pci,chardev=chr-vu-fs0,tag=unraid,bus=pci.0,addr=0xb \
    -netdev tap,fd=34,id=hostnet0 \
    -device virtio-net,netdev=hostnet0,id=net0,mac=52:54:00:7c:b2:7b,bus=pci.0,addr=0x4 \
    -chardev pty,id=charserial0 \
    -device isa-serial,chardev=charserial0,id=serial0 \
    -chardev socket,id=charchannel0,fd=36,server,nowait \
    -device virtserialport,bus=virtio-serial0.0,nr=1,chardev=charchannel0,id=channel0,name=org.qemu.guest_agent.0 \
    -device usb-tablet,id=input0,bus=usb.0,port=1 \
    -vnc 0.0.0.0:0,websocket=5700 \
    -k en-us \
    -device qxl-vga,id=video0,ram_size=67108864,vram_size=67108864,vram64_size_mb=0,vgamem_mb=16,max_outputs=1,bus=pci.0,addr=0x2 \
    -device ich9-intel-hda,id=sound0,bus=pci.0,addr=0x8 \
    -device hda-duplex,id=sound0-codec0,bus=sound0.0,cad=0 \
    -device virtio-balloon-pci,id=balloon0,bus=pci.0,addr=0x6 \
    -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny \
    -msg timestamp=on
    2021-05-03 12:49:46.800+0000: Domain id=4 is tainted: high-privileges
    2021-05-03 12:49:46.800+0000: Domain id=4 is tainted: host-cpu
    char device redirected to /dev/pts/0 (label charserial0)
    2021-05-03T12:49:46.936396Z qemu-system-x86_64: -device vhost-user-fs-pci,chardev=chr-vu-fs0,tag=unraid,bus=pci.0,addr=0xb: Failed to write msg. Wrote -1 instead of 12.
    2021-05-03T12:49:46.936455Z qemu-system-x86_64: -device vhost-user-fs-pci,chardev=chr-vu-fs0,tag=unraid,bus=pci.0,addr=0xb: vhost_dev_init failed: Operation not permitted
    2021-05-03 12:49:46.971+0000: shutting down, reason=failed

Obviously there is a difference in version of qemu+libvirt between the two systems. Hoping these packages will be updated to at least 5.2.0+7.0.0 respectively in the next release of Unraid. I think they're up to at least release 6.0.0+7.2.0.

That aside, anyone else pounding away at this?

-

Seeing that we are now at 6.9.1, I would like to have this working, too. I've not gone as far as either of you. I'm accustomed to setting up 9p, which just isn't cutting it, and am very interested in the more direct(?) virtio-fs driver.

-

Fix Common Problems told me to run diagnostics because my server has "Machine Check Events". I did that, and here's what I found in syslog:

    Mar 14 04:30:11 Jimbob root: Fix Common Problems: Error: Machine Check Events detected on your server
    Mar 14 04:30:11 Jimbob root: Hardware event. This is not a software error.
    Mar 14 04:30:11 Jimbob root: MCE 0
    Mar 14 04:30:11 Jimbob root: CPU 0 BANK 7 TSC d21975bc9ab2a
    Mar 14 04:30:11 Jimbob root: MISC 2140160286 ADDR 4e2af9f80
    Mar 14 04:30:11 Jimbob root: TIME 1615419381 Wed Mar 10 18:36:21 2021
    Mar 14 04:30:11 Jimbob root: MCG status:
    Mar 14 04:30:11 Jimbob root: MCi status:
    Mar 14 04:30:12 Jimbob root: Corrected error
    Mar 14 04:30:12 Jimbob root: MCi_MISC register valid
    Mar 14 04:30:12 Jimbob root: MCi_ADDR register valid
    Mar 14 04:30:12 Jimbob root: MCA: MEMORY CONTROLLER RD_CHANNEL3_ERR
    Mar 14 04:30:12 Jimbob root: Transaction: Memory read error
    Mar 14 04:30:12 Jimbob root: STATUS 8c00004000010093 MCGSTATUS 0
    Mar 14 04:30:12 Jimbob root: MCGCAP 1000c19 APICID 0 SOCKETID 0
    Mar 14 04:30:12 Jimbob root: MICROCODE 42e
    Mar 14 04:30:12 Jimbob root: CPUID Vendor Intel Family 6 Model 62
    Mar 14 04:30:12 Jimbob root: Hardware event. This is not a software error.
    Mar 14 04:30:12 Jimbob root: MCE 1
    Mar 14 04:30:12 Jimbob root: CPU 0 BANK 7 TSC e02778c981cdc
    Mar 14 04:30:12 Jimbob root: MISC 214016d486 ADDR 4e2af9f80
    Mar 14 04:30:12 Jimbob root: TIME 1615514478 Thu Mar 11 21:01:18 2021
    Mar 14 04:30:12 Jimbob root: MCG status:
    Mar 14 04:30:12 Jimbob root: MCi status:
    Mar 14 04:30:12 Jimbob root: Corrected error
    Mar 14 04:30:12 Jimbob root: MCi_MISC register valid
    Mar 14 04:30:12 Jimbob root: MCi_ADDR register valid
    Mar 14 04:30:12 Jimbob root: MCA: MEMORY CONTROLLER RD_CHANNEL3_ERR
    Mar 14 04:30:12 Jimbob root: Transaction: Memory read error
    Mar 14 04:30:12 Jimbob root: STATUS 8c00004000010093 MCGSTATUS 0
    Mar 14 04:30:12 Jimbob root: MCGCAP 1000c19 APICID 0 SOCKETID 0
    Mar 14 04:30:12 Jimbob root: MICROCODE 42e
    Mar 14 04:30:12 Jimbob root: CPUID Vendor Intel Family 6 Model 62
    Mar 14 04:30:12 Jimbob root: mcelog: warning: 8 bytes ignored in each record
    Mar 14 04:30:12 Jimbob root: mcelog: consider an update

Are these read errors something to be concerned about? This is ECC RAM.
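For anyone who wants to read the STATUS value by hand, here is a minimal Python sketch that unpacks the flag bits of the STATUS word from the log above. The bit positions follow the IA32_MCi_STATUS layout in Intel's Software Developer's Manual as I understand it. The function name and dictionary keys are made up for illustration, and the corrected-error count field is only meaningful on CPUs that report it.

```python
# Transaction types for memory-controller errors (the MMM field).
MEMORY_TRANSACTIONS = {
    0b000: "generic undefined request",
    0b001: "memory read",
    0b010: "memory write",
    0b011: "address/command",
    0b100: "memory scrubbing",
}


def decode_mci_status(status):
    """Unpack selected fields of a 64-bit IA32_MCi_STATUS value."""
    code = status & 0xFFFF  # architectural MCA error code, bits 15:0
    fields = {
        "valid": bool((status >> 63) & 1),            # VAL
        "overflow": bool((status >> 62) & 1),         # OVER
        "uncorrected": bool((status >> 61) & 1),      # UC
        "misc_valid": bool((status >> 59) & 1),       # MISCV
        "addr_valid": bool((status >> 58) & 1),       # ADDRV
        "context_corrupt": bool((status >> 57) & 1),  # PCC
        # Bits 52:38; assumed to be reported on this CPU.
        "corrected_count": (status >> 38) & 0x7FFF,
        "mca_error_code": code,
    }
    # Memory controller errors are encoded as 000F 0000 1MMM CCCC.
    if (code & 0xEF80) == 0x0080:
        fields["memory_transaction"] = MEMORY_TRANSACTIONS.get(
            (code >> 4) & 0x7, "reserved")
        fields["channel"] = code & 0xF  # 0xF means "channel not specified"
    return fields


# STATUS value copied from both MCE records in the syslog above.
for key, value in decode_mci_status(0x8C00004000010093).items():
    print(f"{key}: {value}")
```

The decoded flags line up with mcelog's own text (corrected, MISC and ADDR valid, memory read on channel 3). They don't say whether a DIMM is on its way out.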

-

Agreed, it’s night and day. I had already enabled turbo, so the improvement for me was all down to CPU scaling. Would like to understand why this needed to be changed and what the other consequences will be.

-

I have noticed the same thing. My Win10 VM ran like a top on 6.8.3, and since 6.9.0 it's unbearably slow. I regularly watched streaming video in this VM, and now it struggles even to load the smallest applications. I haven't run numbers on the performance, and would have no baseline to compare them to if I did. Unless some solution turns up, I'll have to go back to 6.8.3.

-

On 12/27/2020 at 4:59 PM, hdlineage said:

I think I know exactly what is happening here.

If there is no port mapping that binds from the container to host, the web ui link doesn't show.

I have a custom bridge network that doesn't require port mapping, and WEB UI link doesn't show no matter what I put in the WEBUI field.

As soon as I map even one port to host the webui appears.

It's kind of annoying, is there a way to force WEB UI link setting?

Yep, I have the same problem.

VirtioFS Support Page

in VM Engine (KVM)

Regarding sound? Not sure if this is what you're asking for, but virt-manager lists 3 models for me to choose from - "AC97", "HDA (ICH6)", and "HDA (ICH9)". I don't know if these are the options everyone sees, or just me based on my host hardware.

I have always had success with HDA (ICH9). Virt-manager adds this to the XML:

Can't remember whether sound actually worked in a SPICE session, or if I had to turn on/install RDP in the guest and connect that way...