MacDaddy

Group: Members | Posts: 50 | Gender: Undisclosed

MacDaddy's Achievements: Rookie (2/14) | Reputation: 3

[solved] Does a Memory Check Error contribute to a HDD read error?

MacDaddy replied to MacDaddy's topic in General Support


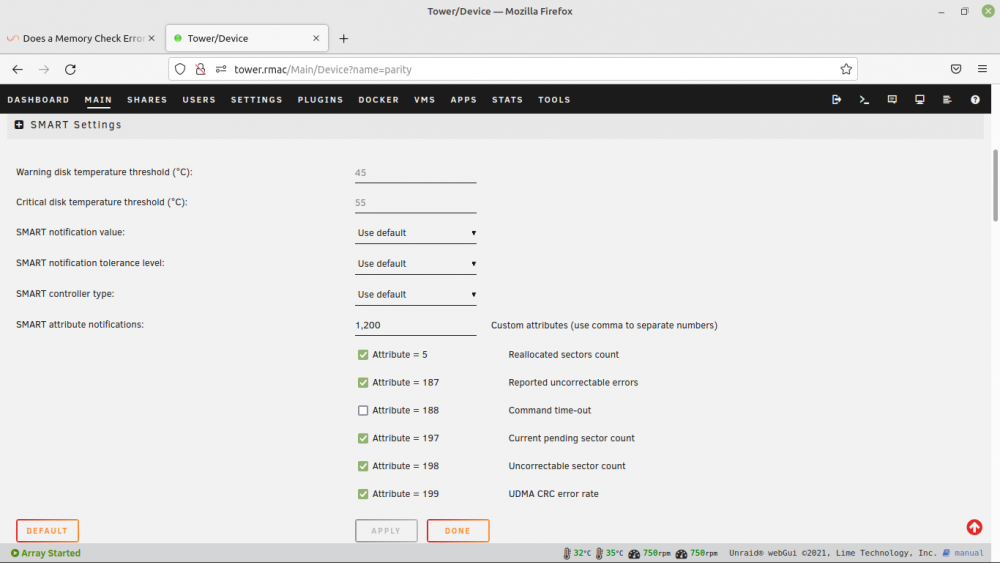

Thanks for the suggestion. I will add SMART attributes 1 and 200 to the monitored list as you suggest. The parity check completed with no errors. The main screen shows 56 errors on the parity disk alone; all other disks show 0. I'm assuming these are all correctable read errors. I believe I will use this opportunity to replace the parity drive with an 8TB model. This will give me the latitude to start moving the data drives to 8TB as the storage is consumed. Unless I'm just being hyper-paranoid, I won't add the former parity drive back to the disk pool. If the risk is low to the point of non-existent, I'll be willing to add it back in.
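A minimal sketch for eyeballing those two attributes from the console. It assumes smartmontools' standard ATA attribute table (ID in column 1, name in column 2, raw value in column 10); attribute 200 is labelled Multi_Zone_Error_Rate on WD drives, and other vendors may name it differently. The function name and the device in the usage comment are only examples.

```bash
#!/bin/bash
# Print "ID NAME RAW_VALUE" for SMART attributes 1 and 200.
# Input: the text output of `smartctl -A <device>` on stdin.
# Assumes the standard ATA attribute table layout, where the raw
# value is the 10th whitespace-separated field.
smart_watch() {
  awk '$1 == "1" || $1 == "200" { print $1, $2, $10 }'
}

# Example (device name is illustrative):
#   smartctl -A /dev/sdb | smart_watch
```

Running it before and after the memory swap gives two raw values per drive that are easy to compare.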

I have had an MCE occurring since mid-December. I've ordered replacement memory, which has been delivered, and I plan to install it tomorrow. While I was waiting for the replacement memory, the parity drive alerted for read errors. Before I reboot after installing the new memory, I wanted to add the current diagnostic information. It appears that the HDD read errors were corrected, but I'd like help determining whether the HDD read errors might be a false positive influenced by the memory errors. If the drive is truly failing, I have no problem replacing it. In that case, would adding the old parity drive back to the data pool be an unreasonable risk? Thanks in advance for your advice. tower-diagnostics-20220110-1653.zip tower-smart-20220110-1648.zip tower-syslog-20220110-2300.zip

-

Fix Common Problems alerted me to a previous MCE. I'm seeing about 5 seconds of repeating entries like:

    Oct 18 22:34:33 Tower kernel: mce: [Hardware Error]: Machine check events logged
    Oct 18 22:34:33 Tower kernel: EDAC sbridge MC1: HANDLING MCE MEMORY ERROR
    Oct 18 22:34:33 Tower kernel: EDAC MC1: 1 CE memory read error on CPU_SrcID#1_Ha#0_Chan#3_DIMM#0 (channel:3 slot:0 page:0x5ff304 offset:0xec0 grain:32 syndrome:0x0 - area:DRAM err_code:0001:0091 socket:1 ha:0 channel_mask:8 rank:0)

The error was corrected, and I have not seen a repeat event. Is this a one-time event that only needs attention if it happens again, or do I need to start looking for new memory? Thanks in advance for any advice you can offer. tower-diagnostics-20211024-1411.zip
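One way to tell a one-off event from a recurring one is to total the corrected (CE) errors per DIMM location in the syslog. A rough sketch, assuming the EDAC sbridge message format quoted above and that the live log is /var/log/syslog; pass another file (for example the syslog from a diagnostics zip) as the first argument. The function name is illustrative.

```bash
#!/bin/bash
# Sum corrected memory errors per DIMM location, highest count first.
# Matches lines such as:
#   EDAC MC1: 1 CE memory read error on CPU_SrcID#1_Ha#0_Chan#3_DIMM#0 (...)
# The leading number on each line (errors in that event) is added up.
ce_per_dimm() {
  local log="${1:-/var/log/syslog}"
  grep -o '[0-9][0-9]* CE memory read error on [^ ]*' "$log" \
    | awk '{ n[$NF] += $1 } END { for (d in n) print n[d], d }' \
    | sort -rn
}

# Example: ce_per_dimm /var/log/syslog
```

A count that keeps climbing on the same location over days or weeks would suggest that module (or its slot); a single entry that never returns looks more like a one-off.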

-

unRAID 6 NerdPack - CLI tools (iftop, iotop, screen, kbd, etc.)

MacDaddy replied to jonp's topic in Plugin Support

Any possibility of adding sshpass? In conjunction with user.scripts, I'm hoping to implement something like:

    #!/bin/bash
    #argumentDescription=Enter password and box name (mypass pihole)
    sshpass -p "$1" ssh "pi@$2.rmac" "sudo dd bs=4M if=/dev/mmcblk0 status=progress | gzip -1 -" | dd of="/mnt/user/Backups/$2/$(date +%Y%m%d_%H%M%S)_$2.gz"

I run four different Raspberry Pi boxes. They run for a good long time, but I've just had the third SD card fail. I would like to keep an image so I can recover quickly with minimal pain.
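If sshpass does become available, here is a hedged variant of the job above, split into functions. It assumes the Pis accept password logins for user pi, resolve as <box>.rmac, and already have their host keys in known_hosts (sshpass will not accept a new host key for you). PI_PASSWORD, backup_path and pi_backup are hypothetical names. `sshpass -e` reads the password from the SSHPASS environment variable, which keeps it off the command line, and `gzip -t` checks that the finished image decompresses cleanly.

```bash
#!/bin/bash
# Hypothetical helpers; PI_PASSWORD, backup_path and pi_backup are illustrative names.

# Destination: /mnt/user/Backups/<box>/<YYYYmmdd_HHMMSS>_<box>.gz
backup_path() {
  local box="$1" stamp="$2"
  printf '/mnt/user/Backups/%s/%s_%s.gz\n' "$box" "$stamp" "$box"
}

# Stream the Pi's SD card over SSH into a gzip image, then verify it.
pi_backup() {
  local box="$1" dest
  dest="$(backup_path "$box" "$(date +%Y%m%d_%H%M%S)")"
  mkdir -p "$(dirname "$dest")" || return 1
  # sshpass -e takes the password from $SSHPASS rather than the command line.
  if ! SSHPASS="$PI_PASSWORD" sshpass -e ssh "pi@${box}.rmac" \
      "sudo dd bs=4M if=/dev/mmcblk0 status=progress | gzip -1 -" > "$dest"; then
    rm -f "$dest"          # drop a partial image
    return 1
  fi
  gzip -t "$dest"          # non-zero exit if the archive is damaged
}

# User Scripts usage, passing "mypass pihole" as arguments:
#   PI_PASSWORD="$1" pi_backup "$2"
```

Restoring would be the reverse direction (decompress and dd onto a new card), which is worth rehearsing once before it is needed.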

MacDaddy started following [SOLVED] Error upgrading 6.3.5 to 6.5.3, Silencing the Hoover and Advice needed: Rebuild disk or xfs_repair

-

Thanks for the info. The 5400 RPM drives should, in theory, be quieter than their 7200 RPM counterparts. Good points on the airflow; noise and airflow go hand in hand. I'll look into active cooling for the CPUs. Sent from my iPhone using Tapatalk

-

I have a Supermicro X9DRi-LN4+/X9DR3-LN4+ based server with dual Xeon® E5-2630L v2 CPUs for my unRaid build. It is a surplus server in a Supermicro CSE-835TQ-R920B case. In my prior residence, I had the luxury of converting one of the closets to house all my equipment; it was designed for power, ventilation, and noise. I'm now in a place where I can't modify any rooms, and the only location to house the equipment is a closet in the master bedroom. Needless to say, the server sounds like a Hoover vacuum with asthma on steroids. It has served me well, and I am thinking of transferring the motherboard and 5x WD40EFRX drives to a silenced case. I'm thinking something like the be quiet! Dark Base 900 (https://www.bequiet.com/en/case/697) might work. The CPUs are rated at 60W TDP. Currently they have passive coolers with the custom air shroud from Supermicro, and I intend to keep them configured that way. I would have to move away from the redundant power supplies in the current chassis, so I would appreciate any suggestions in that area. What is your advice on the noise footprint after the conversion? Any experience with this case, or any other that might accommodate the motherboard? Any thoughts on potential roadblocks I might run into? Thanks in advance for any input.
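Since the plan keeps the passive coolers in a quieter (and likely lower-airflow) case, it may be worth watching temperatures for a while after the move. A rough sketch that reads the kernel's hwmon sensors, whose temp*_input files report millidegrees Celsius, and prints any at or above a limit. The function name is illustrative, and the 70 C default is an arbitrary example, not a Supermicro or Intel figure.

```bash
#!/bin/bash
# List hwmon temperature inputs at or above a limit (whole degrees C).
# Arguments: [hwmon root, default /sys/class/hwmon] [limit in C, default 70]
hot_sensors() {
  local root="${1:-/sys/class/hwmon}" limit="${2:-70}" f milli
  for f in "$root"/hwmon*/temp*_input; do
    [ -r "$f" ] || continue          # skip the literal pattern if nothing matched
    milli="$(cat "$f")"              # kernel reports millidegrees Celsius
    if [ $((milli / 1000)) -ge "$limit" ]; then
      printf '%s %d\n' "$f" $((milli / 1000))
    fi
  done
}

# Example: hot_sensors /sys/class/hwmon 70
```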

-

Thanks for your response. I had a feeling it would go that way. This is my first encounter with corruption. When I run the XFS repair, it will prune data (according to the dry run output). Is that data lost for good, or will unRaid recognize it and let parity reconstruct it?
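Whatever the answer on parity, it is worth checking what xfs_repair set aside after it runs: inodes it finds disconnected from the directory tree are moved into lost+found at the root of the repaired filesystem. A small sketch that reports how many files landed there; the function name is illustrative, and the /mnt/disk2 mount point in the usage line is an assumption based on the md2 device in this thread.

```bash
#!/bin/bash
# Report how many files xfs_repair left in <mountpoint>/lost+found.
lost_found_report() {
  local lf="${1%/}/lost+found" n
  if [ ! -d "$lf" ]; then
    echo "no lost+found in ${1%/}"
    return 0
  fi
  n="$(find "$lf" -type f | wc -l)"
  echo "$((n)) files in $lf"       # $(( )) strips any padding from wc
}

# Example, after the repair and a normal array start:
#   lost_found_report /mnt/disk2
```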

-

I'm currently using a MakeMKV Docker container to write cloned DVD structures into MKV containers. I've noticed that the share I'm using for the output keeps dropping. I can reboot, and the array will start with all drives green and the share restored. A snippet from the log follows. I can start in maintenance mode and dry-run xfs_repair on all the hard drives. All are clean except md2. Is it better to xfs_repair the md2 drive, or to replace it with a new drive and let it rebuild? Note: while parity shows valid, it has been more than 700 days since the last check.

    Oct 13 18:36:15 Tower kernel: XFS (md2): Metadata CRC error detected at xfs_inobt_read_verify+0xd/0x3a [xfs], xfs_inobt block 0x19f754db8
    Oct 13 18:36:15 Tower kernel: XFS (md2): Unmount and run xfs_repair
    Oct 13 18:36:15 Tower kernel: XFS (md2): First 128 bytes of corrupted metadata buffer:
    Oct 13 18:36:15 Tower kernel: 0000000095cfb836: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 000000001de8c0f3: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 00000000c8d99f19: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 00000000a9a413e7: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 000000003c326670: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 000000005abd08ab: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 000000003867ab1f: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: 0000000085cdd1ba: 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 ................
    Oct 13 18:36:15 Tower kernel: XFS (md2): metadata I/O error in "xfs_trans_read_buf_map" at daddr 0x19f754db8 len 8 error 74
    Oct 13 18:36:15 Tower kernel: XFS (md2): xfs_do_force_shutdown(0x1) called from line 300 of file fs/xfs/xfs_trans_buf.c. Return address = 000000007c1ff77b
    Oct 13 18:36:15 Tower kernel: XFS (md2): I/O Error Detected. Shutting down filesystem
    Oct 13 18:36:15 Tower kernel: XFS (md2): Please umount the filesystem and rectify the problem(s)

tower-diagnostics-20201013-2150.zip
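For the maintenance-mode dry runs, a loop like the sketch below checks several disks in one pass. It relies on `xfs_repair -n` (no-modify mode) exiting 0 when no corruption is found and non-zero otherwise (1 when corruption is detected). The function name is illustrative, and the /dev/mdN names in the usage line are examples that may differ between unRAID releases.

```bash
#!/bin/bash
# Dry-run xfs_repair on each device given and summarise the result.
# -n = no-modify mode: nothing is written to the disk.
xfs_dry_run_all() {
  local dev rc
  for dev in "$@"; do
    xfs_repair -n "$dev" >/dev/null 2>&1
    rc=$?
    if [ "$rc" -eq 0 ]; then
      echo "$dev: clean"
    else
      echo "$dev: needs attention (exit $rc)"
    fi
  done
}

# Example, with the array started in maintenance mode:
#   xfs_dry_run_all /dev/md1 /dev/md2 /dev/md3 /dev/md4
```

Rerunning a flagged device without the redirect shows the full dry-run report before deciding between repair and rebuild.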

-

Some people should learn to search the forum before posting redundant issues. My apologies.

-

I'm resurrecting my unRaid box. It shows that the latest update, v6.5.3, is available. When I initiate the upgrade, it throws an invalid URL/server error message. Sorry for the pic; I'm on the direct terminal. Is it possible that Amazon is down? Or maybe I need an intermediate upgrade step?

-

First off, a big shout-out to the entire unRaid community. It's funny how much more stuff beyond my media library was stored away; being able to recover it was a lifesaver. I sincerely appreciate everyone who took the time to give me actionable suggestions and thoughtful approaches.

One thing I did when loading the drives was to put an Avery sticker with a "row column" indicator on each one (e.g. A1 was the uppermost left slot, B1 the uppermost right slot). I knew A1 was parity and had high (but not absolute) confidence that A2 was disk1, A3 was disk2, etc. I used the plugin to document the disk layout, but the copy I saved on my desktop machine was also destroyed by the flood. Using the instructions provided, I was able to get the "swamp" drives to reconstruct the dead drive.

While it's fresh in my mind, here are a few random thoughts that may be useful to others pondering disaster recovery:

1) Make a backup of your machine. Parity helps with fault tolerance, but it's not the same as a full backup. I used my old unRaid server to back up my production server. I also had a mutual protection agreement with a buddy to swap hard drives with selected shares backed up. Where I went wrong was mounting my old unRaid server in an unused closet. While it was higher than my production server, it wasn't high enough to escape destruction. I still think mutual protection is the right way to go; just choose a partner who is not in the same flood plain.
2) Label the disks as they go in. Be deliberate about how you assign them so you can document the disk assignment on new drives.
3) Email yourself a copy of the output from the drive layout plugin. It will lower the stress level.
4) When you do your monthly parity check, email yourself a copy of the USB flash drive backup.
5) If you have a friend in the medical equipment field, the CFC bath is a great way to go for cleanup. Full disclosure: I'm not sure this is a "true" CFC bath like those used on circuit boards back in the day (since outlawed by the EPA), but I think it is a close approximation that does wonders for electronics.
6) The rice baggie approach to drying may not be optimal, but rice is ubiquitous and will buy you time while you deal with the 1001 simultaneous crises that happen in a disaster.
7) In Texas there are DryBox kiosks that provide self-serve drying for electronics. While they are targeted at phones, a hard drive will fit. I wish I had tried the DryBox on the failing drive at the very beginning. It helped get the drive working well enough to get many files off, but I imagine I contributed to its demise by trying to spin it up directly out of the rice bag.
8) If you can plan your disasters and select for flood, then helium drives would be a good preventative measure :-). However, with my luck the next disaster will be an earthquake.

It's been said before but bears repeating: don't panic. Take a deep breath and reach out to the fantastic people on this forum. Take your time and be deliberate.
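Tips 3 and 4 can be partly automated. A hedged sketch, assuming the flash drive is mounted at /boot (as on unRAID, where its config folder holds the array configuration): it writes a dated list of whole-disk /dev/disk/by-id entries (serial number to sdX mapping) plus a tarball of the flash drive into a folder of your choice, which you can then email or copy off-site. The function name and destination are hypothetical; the roots are parameters so it can be pointed elsewhere.

```bash
#!/bin/bash
# Save a dated disk-ID listing and a flash-drive archive into <dest>.
# Arguments: <dest> [flash root, default /boot] [by-id dir, default /dev/disk/by-id]
snapshot_config() {
  local dest="$1" flash="${2:-/boot}" byid="${3:-/dev/disk/by-id}" stamp
  stamp="$(date +%Y%m%d)"
  mkdir -p "$dest" || return 1
  # Whole-disk entries only: partition links end in -partN.
  ls -l "$byid" | grep -v -- '-part[0-9]' > "$dest/disk-ids-$stamp.txt"
  # Archive the whole flash drive, config folder included.
  tar -czf "$dest/flash-$stamp.tgz" -C "$flash" .
}

# Example (hypothetical share): snapshot_config /mnt/user/Backups/flash
```

The by-id listing complements the physical labels from tip 2: it ties each serial number to the device name the system saw on that date.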

-

Thanks, guys. I truly appreciate all the advice and recipes; it is helping me move forward. Here is where I'm at the moment:

- I found a new self-service kiosk at my local HEB called DryBox. The basic idea is to pull a vacuum so the moisture can be driven out with gentler heat. It's targeted at cell phones, but a drive fits. I ran the failed drive through this process.
- I was able to mount the failed drive and get some files off before it degraded. I let it cool down, rebooted, and got another set of files off. Repeat: got a smaller set of files. Repeat: fatal I/O error.
- I followed your instructions to bring up the array to the point where the failed disk was emulated. I mounted the recovery drive and was able to rsync files from the emulated drive to fill in the blanks of missing files. I think I've got a good backup of the failed drive.
- I bought another 4TB drive and ran it through preclear. I'm in the process of letting the array rebuild this drive.

Thinking of second parity. Thinking of helium drives. Certainly moving the hell away from this area of Houston.
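The fill-in-the-blanks step maps naturally onto rsync's `--ignore-existing` option, which skips any file already present on the destination, so copies salvaged earlier from the failing drive are not overwritten by the emulated versions. A minimal sketch; the function name is illustrative, and the source and destination are whatever the emulated disk and recovery drive are mounted as on your system.

```bash
#!/bin/bash
# Copy only files that are missing from <dst>; existing files are left alone.
fill_missing() {
  local src="${1%/}" dst="${2%/}"
  # -a: recurse and preserve permissions, times, symlinks, etc.
  # --ignore-existing: never touch a file that already exists in $dst.
  rsync -a --ignore-existing "$src/" "$dst/"
}

# Example (paths are placeholders): fill_missing /mnt/diskN /path/to/recovery
```

Adding `--dry-run -v` to the rsync line first shows which files would be copied without writing anything.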

-

Apologies. I was too quick to reply and probably didn't spell things out in enough detail to get good advice. Here is what I intend to try:

1) I have five existing flood-damaged disks. The parity disk appears to be operational. Three of the data disks appear to work well enough for me to rsync their contents to a fresh disk. One data disk had I/O errors and is undergoing a second pass at the drying process.
2) Once I get an additional controller card installed, I will have enough ports to attach the four operational flood-damaged disks.
3) I'll use johnnie.black's advice on bringing an array online with flood-damaged disks in a new order (understanding the caution required).
4) Once the array of flood-damaged disks (one parity, three data, one emulated) is active, I will attempt to copy the data to a new, precleared, unassigned disk.
5) I would like to perform the copy locally (as opposed to a network copy). I'm fuzzy about where the emulated drive will appear. Let's say that disk3 is the emulated disk. Let's also say that the fresh drive is not in the array but mounts as disk6. Can I bring up a terminal window on the local box and use my prior rsync command with /mnt/disk3/ as the source and /mnt/disk6/ as the target?
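One precaution worth scripting for step 5 is to confirm that both paths are real mount points before copying: unRAID runs from RAM, so if the target is not mounted, writes to that path fill the RAM-based root filesystem instead of the disk. A hedged sketch using util-linux's `mountpoint`; the function name is illustrative, /mnt/disk3 and /mnt/disk6 are the hypothetical paths from the post, and a drive mounted by the Unassigned Devices plugin would normally appear under /mnt/disks/ instead.

```bash
#!/bin/bash
# Refuse to rsync unless both source and destination are mounted filesystems.
safe_copy() {
  local src="${1%/}" dst="${2%/}" d
  for d in "$src" "$dst"; do
    if ! mountpoint -q "$d"; then
      echo "refusing to copy: $d is not a mount point" >&2
      return 1
    fi
  done
  # -a preserves attributes; -v lists each file as it is copied.
  rsync -av "$src/" "$dst/"
}

# Example: safe_copy /mnt/disk3 /mnt/disk6
```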

-

Thanks, that's good advice. So far, 7 individual files didn't copy cleanly. I've been able to retry and get three of them to copy, and after a reboot I got two more. So far only one has permanent I/O errors. I like the idea of pulling the files off individually from an emulated drive. I need to do this "locally" if possible, with an unassigned device. I'm assuming there is a mount point in Linux that represents the emulated disk (is it /mnt/user?). Or does a /mnt/diskX show up for the emulated disk?
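For files that only copy on a second or third attempt, a small retry loop saves some typing. A rough sketch, assuming a text file listing one absolute source path per line and GNU cp, whose `--parents` option recreates each file's source path under the destination. Files that still fail after the given number of tries are appended to failed-files.txt in the destination. The function name, retry count, and pause length are illustrative.

```bash
#!/bin/bash
# Usage: copy_with_retries <list-file> <dest-dir> [tries, default 3]
copy_with_retries() {
  local list="$1" dst="${2%/}" tries="${3:-3}" f i ok
  while IFS= read -r f; do
    [ -n "$f" ] || continue
    ok=0
    for ((i = 1; i <= tries; i++)); do
      # -p keeps mode, ownership and timestamps; --parents keeps the directory path.
      if cp -p --parents -- "$f" "$dst/"; then
        ok=1
        break
      fi
      # Short pause before the next attempt on a flaky read.
      if [ "$i" -lt "$tries" ]; then sleep 2; fi
    done
    [ "$ok" -eq 1 ] || printf '%s\n' "$f" >> "$dst/failed-files.txt"
  done < "$list"
}
```

The failed-files.txt list then becomes the input for the next pass, for example after a reboot or against the emulated copy.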