Posts posted by nearcatch

-

On 4/16/2024 at 4:10 AM, unraid_fk34 said:

So I'm having issues displaying the custom network name in the docker containers tab. It will only show me the ID. I found a thread discussing this particular issue on the forums. Is that something that has to do with unraid or the plugin? Is it something that could be fixed in the future or is there a solution maybe?

I was able to fix this on my unRAID server. IIRC the fix was to not use external networks and instead let docker-compose create the networks my stack needed. My networks block:

```yaml
########################### NETWORKS
networks:
  default:
    driver: bridge
  reverse_proxy:
    external: false
    name: reverse_proxy
    ipam:
      config:
        - subnet: ${REVERSE_PROXY_SUBNET}
          gateway: ${REVERSE_PROXY_GATEWAY}
  socket_proxy:
    external: false
    name: socket_proxy
    ipam:
      config:
        - subnet: ${SOCKET_PROXY_SUBNET}
          gateway: ${SOCKET_PROXY_GATEWAY}
  lan_ipvlan:
    external: false
    name: lan_ipvlan
    driver: ipvlan
    driver_opts:
      parent: br0
    ipam:
      config:
        - subnet: ${LAN_IPVLAN_SUBNET}
          gateway: ${LAN_IPVLAN_GATEWAY}
          ip_range: ${LAN_IPVLAN_IP_RANGE}
```

-

For anyone interested, below is the minimum required to run this container with docker-compose, based on the example docker command from the repo. You can easily modify it to use Traefik or another reverse proxy instead of accessing it directly by port. Change `$CONTDIR` to wherever you want to store the logs.
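Several volumes in the service definition for this container bind-mount unRAID system files read-only. With the short volume syntax, Docker creates a missing host path as an empty directory instead of failing, so a quick check before `docker compose up` can save some confusion. A minimal sketch with a hypothetical `check_paths` helper (not part of the container or of Compose):

```bash
# Hypothetical preflight helper: print each host path that does not exist
# and return 1 if any are missing, 0 if all are present.
check_paths() {
  local missing=0 p
  for p in "$@"; do
    if [ ! -e "$p" ]; then
      echo "missing: $p" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example use on the unRAID host, before starting the service:
#   check_paths /boot/config/disk.cfg /boot/config/super.dat \
#     /var/local/emhttp/disks.ini /usr/local/sbin/mdcmd /dev/disk/by-id \
#     /boot/config/plugins/dynamix/dynamix.cfg /etc/ssmtp/ssmtp.conf \
#     && docker compose up -d preclear
```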

```yaml
preclear:
  container_name: preclear
  image: ghcr.io/binhex/arch-preclear:latest
  restart: unless-stopped
  privileged: true
  ports:
    - 5900:5900
    - 6080:6080
  environment:
    - WEBPAGE_TITLE=Preclear
    - VNC_PASSWORD=mypassword
    - ENABLE_STARTUP_SCRIPTS=yes
    - UMASK=000
    - PUID=0
    - PGID=0
  volumes:
    - $CONTDIR/preclear/config:/config
    - /boot/config/disk.cfg:/unraid/config/disk.cfg:ro
    - /boot/config/super.dat:/unraid/config/super.dat:ro
    - /var/local/emhttp/disks.ini:/unraid/emhttp/disks.ini:ro
    - /usr/local/sbin/mdcmd:/unraid/mdcmd:ro
    - /dev/disk/by-id:/unraid/disk/by-id:ro
    - /boot/config/plugins/dynamix/dynamix.cfg:/unraid/config/plugins/dynamix/dynamix.cfg:ro
    - /etc/ssmtp/ssmtp.conf:/unraid/ssmtp/ssmtp.conf:ro
    - /etc/localtime:/etc/localtime:ro
```

-

2 hours ago, primeval_god said:

No, the "Compose Up" button in the webui executes `docker compose up`. Images are pulled as part of the up command, not with a separate command.

Ah, sorry, I misunderstood. I thought the other person was asking for the CLI analogue, not the plugin analogue.

-

On 1/16/2024 at 6:41 AM, L0rdRaiden said:

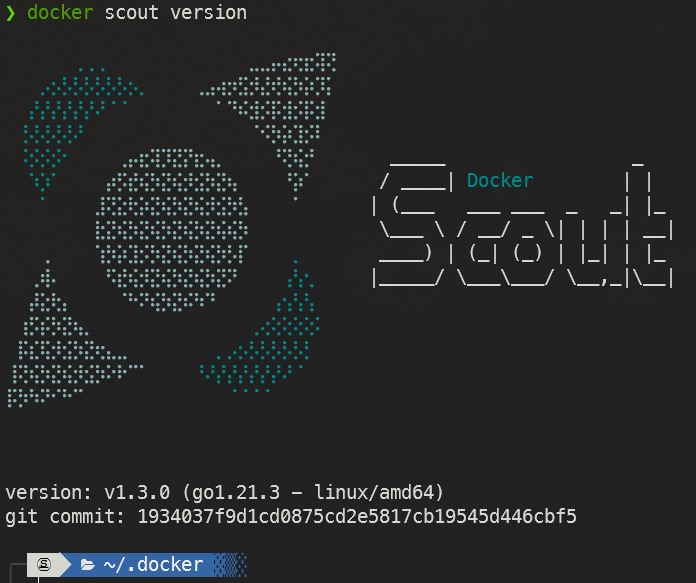

Could you please include docker scout cli binaries as part of compose manager?

I adapted my docker compose update script into one that installs docker scout on unRAID. Save the script somewhere, source it in your profile.sh with `source /YOURPATHTOSCRIPT/dsupdate.source`, and then run it with `dsupdate` or `dsupdate check`.

This works for me on a Linux x86 system. If your system is different, you may need to edit line 12 to pull the proper filename from the release page.

```bash
#!/bin/bash
alias notify='/usr/local/emhttp/webGui/scripts/notify'
dsupdate() {
  SCOUT_LOCAL=$(docker scout version 2>/dev/null | grep version | cut -d " " -f2)
  SCOUT_LOCAL=${SCOUT_LOCAL:-"none"}
  echo Current: ${SCOUT_LOCAL}
  SCOUT_REPO=$(curl -s https://api.github.com/repos/docker/scout-cli/releases/latest | grep 'tag_name' | cut -d '"' -f4)
  if [ "${SCOUT_LOCAL}" != "${SCOUT_REPO}" ]; then
    dsdownload() {
      echo Repo: ${SCOUT_REPO}
      # curl -L "https://github.com/docker/scout-cli/releases/download/${SCOUT_REPO}/docker-scout_${SCOUT_REPO/v/}_$(uname -s)_$(uname -m).tar.gz" --create-dirs -o /tmp/docker-scout/docker-scout.tar.gz
      curl -L "https://github.com/docker/scout-cli/releases/download/${SCOUT_REPO}/docker-scout_${SCOUT_REPO/v/}_linux_amd64.tar.gz" --create-dirs -o /tmp/docker-scout/docker-scout.tar.gz
      # "${_}" is the last argument of the previous command (the downloaded tarball)
      tar -xf "${_}" -C /tmp/docker-scout/ --no-same-owner
      mkdir -p /usr/local/lib/docker/scout
      mv -T /tmp/docker-scout/docker-scout /usr/local/lib/docker/scout/docker-scout && chmod +x "${_}"
      rm -r /tmp/docker-scout
      # register the plugin directory in ~/.docker/config.json if it is not there yet
      cat "$HOME/.docker/config.json" | jq '.cliPluginsExtraDirs[]' 2>/dev/null | grep -qs /usr/local/lib/docker/scout 2>/dev/null
      if [ $? -eq 1 ]; then
        echo "Scout entry not found in .docker/config.json. Creating a backup and adding the scout entry."
        cp -vnT "$HOME/.docker/config.json" "$HOME/.docker/config.json.bak"
        cat "$HOME/.docker/config.json" | jq '.cliPluginsExtraDirs[.cliPluginsExtraDirs| length] |= . + "/usr/local/lib/docker/scout"' >"$HOME/.docker/config.json.tmp"
        mv -vT "$HOME/.docker/config.json.tmp" "$HOME/.docker/config.json"
      fi
      echo "Installed: $(docker scout version | grep version | cut -d " " -f2)"
      notify -e "docker-scout updater" -s "Update Complete" -d "New version: $(docker scout version | grep version | cut -d " " -f2)<br>Previous version: ${SCOUT_LOCAL}" -i "normal"
    }
    if [ -n "${1}" ]; then
      if [ "${1}" = "check" ]; then
        echo "Update available: ${SCOUT_REPO}"
        notify -e "docker-scout updater" -s "Update Available" -d "Repo version: ${SCOUT_REPO}<br>Local version: ${SCOUT_LOCAL}" -i "normal"
      else
        dsdownload
      fi
    else
      dsdownload
    fi
  else
    echo Repo: ${SCOUT_REPO}
    echo "Versions match, no update needed"
  fi
  unset SCOUT_LOCAL
  unset SCOUT_REPO
}
```
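The part that changes between systems is the hardcoded `linux_amd64` in the download URL: `uname` reports `Linux` and `x86_64`, while the asset name uses `linux` and `amd64`, which is presumably why the `uname` variant is commented out. A minimal sketch of a hypothetical helper that builds the name from `uname`, assuming the scout-cli assets follow the `docker-scout_<version>_<os>_<arch>.tar.gz` pattern used in the script and that ARM builds are named `arm64` (check the release page):

```bash
# Hypothetical helper: build the docker-scout release asset name for a machine.
# Arguments: TAG [OS] [ARCH]; OS and ARCH default to uname output.
# The arm64 mapping is an assumption about the release naming.
scout_asset_name() {
  local version="${1#v}"   # tag "v1.2.0" -> "1.2.0"
  local os arch
  # uname -s prints "Linux"; the asset name uses lowercase "linux"
  os=$(printf '%s' "${2:-$(uname -s)}" | tr '[:upper:]' '[:lower:]')
  case "${3:-$(uname -m)}" in
    x86_64 | amd64) arch=amd64 ;;
    aarch64 | arm64) arch=arm64 ;;
    *)
      echo "unsupported architecture: ${3:-$(uname -m)}" >&2
      return 1
      ;;
  esac
  echo "docker-scout_${version}_${os}_${arch}.tar.gz"
}

# The download line in dsupdate could then use:
#   .../releases/download/${SCOUT_REPO}/$(scout_asset_name "${SCOUT_REPO}")
```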

-

On 1/16/2024 at 7:26 PM, primeval_god said:

The cli equivalent is docker compose up, not docker pull. The images are pulled as part of the up process.

Wouldn't the equivalent be `docker compose pull SERVICENAME`? I always get extraction progress when pulling via docker compose.

-

@jbrodriguez If you take PRs, I sent one on GitHub that losslessly compresses the PNG images.

-

On 12/24/2023 at 2:13 PM, Caleb Bassham said:

Sorry to be a nuisance, but I can't find a lot of info for this plugin. Hoping for an update soon to get docker compose up to > v2.22 for the compose file extending/merging/importing new hotness. Any idea of when this may be?

Also, is there some sort of repo/homepage for this project besides this thread?

If you don't want to wait for the plugin to update, you can update docker-compose with this function I wrote and shared earlier in this topic. You can add it to your profile.sh.

-

On 11/2/2023 at 1:32 AM, alturismo said:

1/ did you check if your script works when run natively from the terminal? install a gfx driver?

2/ as you see it is actually executed, but failing ...

so your case looks like a different one, I would say, at least from what I see ...

1. The scripts work fine if I click "Run Script" in the UserScripts plugin options. For now, I have just been clicking "Run Script" on all these scripts manually when the server restarts. These are old scripts that I have not changed. Something in the plugin is not working. The graphics driver is unrelated. I just mentioned it because it was the reason I had to restart.

2. Only the "Run Script" button works. I don't believe the scripts run successfully in the background, which is how they run when the server restarts. The "Run in Background" button from the User Scripts plugin options produces the log I shared: the script logline followed by several atd[3975] loglines.
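Read literally, the atd lines in that log say that `/lib64/libcrypt.so.1` requires `GLIBC_2.38`, which the installed `/lib64/libc.so.6` does not provide, so PAM cannot load `pam_unix.so`; that would be consistent with atd-based background runs failing. A minimal sketch of a hypothetical helper for pulling just those lines out of the system log, assuming unRAID writes it to `/var/log/syslog`:

```bash
# Hypothetical helper: show only the atd/PAM failure lines from a syslog file.
# The default path /var/log/syslog is an assumption about where unRAID logs.
atd_pam_errors() {
  grep -E 'atd\[[0-9]+\]: (PAM |Module is unknown)' "${1:-/var/log/syslog}"
}

# Example: atd_pam_errors | tail -n 20
```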

-

On 10/22/2023 at 12:17 AM, alturismo said:

I restarted today to install a gfx driver. The scripts that run in the background during server restart redirect their output to /dev/null, so there are no logs, but I found these when I tried to manually run a script in the background:

```
Nov 1 14:49:10 unRAID emhttpd: cmd: /usr/local/emhttp/plugins/user.scripts/backgroundScript.sh /tmp/user.scripts/tmpScripts/jellyfin_mergerfs/script
Nov 1 14:49:10 unRAID atd[3975]: PAM unable to dlopen(/lib64/security/pam_unix.so): /lib64/libc.so.6: version `GLIBC_2.38' not found (required by /lib64/libcrypt.so.1)
Nov 1 14:49:10 unRAID atd[3975]: PAM adding faulty module: /lib64/security/pam_unix.so
Nov 1 14:49:10 unRAID atd[3975]: Module is unknown
Nov 1 14:49:16 unRAID emhttpd: cmd: /usr/local/emhttp/plugins/user.scripts/backgroundScript.sh /tmp/user.scripts/tmpScripts/zsh/script
Nov 1 14:49:16 unRAID atd[4052]: PAM unable to dlopen(/lib64/security/pam_unix.so): /lib64/libc.so.6: version `GLIBC_2.38' not found (required by /lib64/libcrypt.so.1)
Nov 1 14:49:16 unRAID atd[4052]: PAM adding faulty module: /lib64/security/pam_unix.so
Nov 1 14:49:16 unRAID atd[4052]: Module is unknown
```

-

On 10/9/2023 at 2:37 PM, jit-010101 said:

At Startup of Array and At First Start of Array Only are not working for me on two machines with 6.12.4.

If you use either, then trying to run a script in the background simply won't do anything.

For example, try a simple

```bash
echo "hello world" 1> /var/log/hello.log
```

in a new script, and then run `cat /var/log/hello.log` in the terminal afterwards. Whether you run it in the background or via array start, nothing is produced. You have to actually run the user script manually in the foreground, otherwise it won't work.

I reckon this has something to do with scheduling? It happens for me on two systems, both on 6.12.4 ...

One of them is brand new, with very few plugins besides User Scripts. No clue, maybe it's just me or I'm missing something very basic, but neither option seems to do anything at all ... even on freshly created scripts of any name.

I have since moved this to my /boot/config/go file, where it works just fine (albeit before the array starts):

```bash
# wait 30 seconds then enable the crond jobs ...
/bin/bash -c 'sleep 30; /usr/bin/resticprofile --log /var/log/resticprofile schedule --all' &
```

Edit, forgot to mention:

If I run the same script for example with a custom scheduler every 5 minutes it works just fine.

Did you ever find a fix for this? I'm finding today that "on array start" scripts aren't running for me either. I'm not sure when the issue started since I don't often reboot my server, but I'm also on 6.12.4.
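The go-file workaround quoted above starts its command after a fixed `sleep 30`, which still runs before the array is up. A minimal sketch of a hypothetical alternative that polls for a path instead, assuming a directory inside a user share (such as `/mnt/user/appdata`) only exists once the array has started:

```bash
# Hypothetical alternative to a fixed "sleep 30": poll once a second until
# PATH exists, giving up after TIMEOUT seconds (default 300).
wait_for_path() {
  local path="$1" timeout="${2:-300}" waited=0
  while [ ! -e "$path" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $path" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  return 0
}

# In /boot/config/go (with the function defined above this line), assuming
# /mnt/user/appdata only appears once the array has started:
#   ( wait_for_path /mnt/user/appdata 900 &&
#     /usr/bin/resticprofile --log /var/log/resticprofile schedule --all ) &
```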

-

21 hours ago, hasown said:

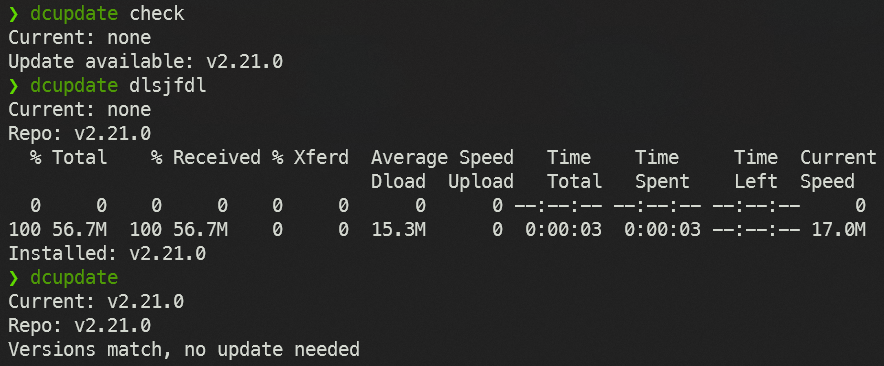

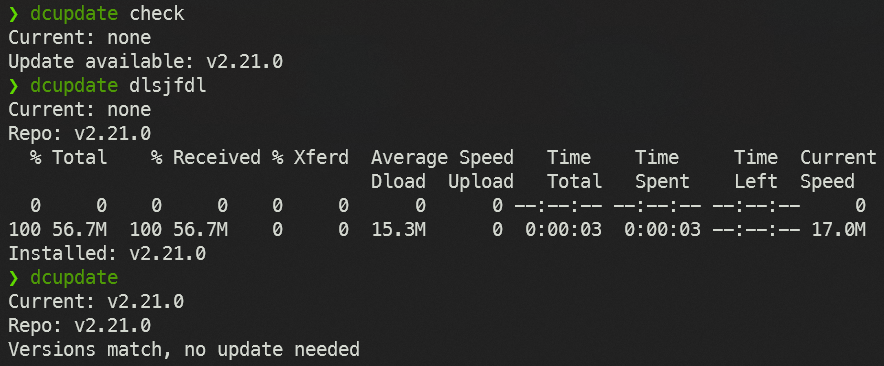

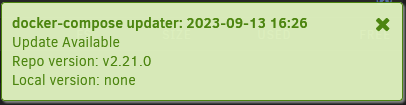

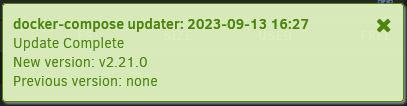

I have this function in my profile.sh to update docker-compose without waiting for the plugin to be updated. This function works even if the plugin isn't installed, so if you only use docker-compose from the command-line, this is all you need.

New version of this function. Now it checks your local version and only downloads a new one if the github repo version is different or if docker-compose is missing entirely. It also sends an unRAID notification when a download happens, so you can run this function daily using cron or a userscript and get notified when an update happens.

EDIT: new-new version. Now if you pass "check" when calling the function, it only notifies of new versions instead of downloading. Passing anything else or nothing will download a new version if available.

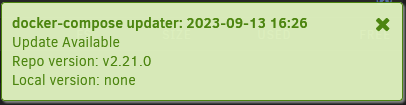

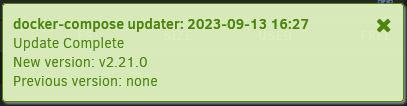

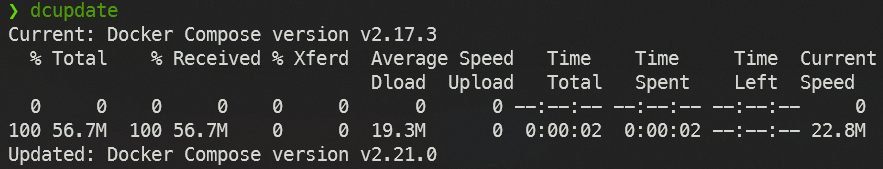

HELP: If anyone knows how to print newlines in an unRAID notification without using `<br>`, please let me know. `<br>` works fine for dashboard notifications, but the notifications look weird in Discord when sent through a Slack webhook.

```bash
# notify [-e "event"] [-s "subject"] [-d "description"] [-i "normal|warning|alert"] [-m "message"] [-x] [-t] [-b] [add]
alias notify='/usr/local/emhttp/webGui/scripts/notify'

# dc update
dcupdate() {
  COMPOSE_LOCAL=$(docker compose version 2>/dev/null | cut -d " " -f4)
  COMPOSE_LOCAL=${COMPOSE_LOCAL:-"none"}
  COMPOSE_REPO=$(curl -s https://api.github.com/repos/docker/compose/releases/latest | grep 'tag_name' | cut -d '"' -f4)
  echo Current: ${COMPOSE_LOCAL}
  if [ "${COMPOSE_LOCAL}" != "${COMPOSE_REPO}" ]; then
    dcdownload() {
      echo Repo: ${COMPOSE_REPO}
      curl -L "https://github.com/docker/compose/releases/download/${COMPOSE_REPO}/docker-compose-$(uname -s)-$(uname -m)" --create-dirs -o /usr/local/lib/docker/cli-plugins/docker-compose && chmod +x "${_}"
      echo "Installed: $(docker compose version | cut -d ' ' -f4)"
      notify -e "docker-compose updater" -s "Update Complete" -d "New version: $(docker compose version | cut -d ' ' -f4)<br>Previous version: ${COMPOSE_LOCAL}" -i "normal"
    }
    if [ -n "${1}" ]; then
      if [ "${1}" = "check" ]; then
        echo "Update available: ${COMPOSE_REPO}"
        notify -e "docker-compose updater" -s "Update Available" -d "Repo version: ${COMPOSE_REPO}<br>Local version: ${COMPOSE_LOCAL}" -i "normal"
      else
        dcdownload
      fi
    else
      dcdownload
    fi
  else
    echo Repo: ${COMPOSE_REPO}
    echo "Versions match, no update needed"
  fi
  unset COMPOSE_LOCAL
  unset COMPOSE_REPO
}
```

The first run passes "check" as an argument, which checks for updates without downloading. The second passes an argument other than "check". The third passes no argument:

Notification for check only:

Notification for completed update:
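Because `dcupdate` compares versions with `!=`, any mismatch triggers a download, even when the local copy happens to be newer than the latest release. A minimal sketch of a hypothetical `version_gt` helper that only succeeds when the first version is strictly newer, assuming GNU `sort` with `-V` (version sort) is available:

```bash
# Hypothetical helper: succeed only if version $1 is strictly newer than $2.
# "none" (nothing installed locally) counts as older than any real version.
version_gt() {
  [ -n "$1" ] || return 1          # no remote version (e.g. API call failed)
  [ "$2" = "none" ] && return 0    # nothing installed locally
  [ "$1" != "$2" ] &&
    [ "$(printf '%s\n' "$1" "$2" | sort -V | tail -n 1)" = "$1" ]
}
```

In `dcupdate`, the test could then become `if version_gt "${COMPOSE_REPO}" "${COMPOSE_LOCAL}"; then`. An empty `COMPOSE_REPO`, for example after a failed GitHub API call, never counts as newer, so nothing is downloaded in that case.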

-

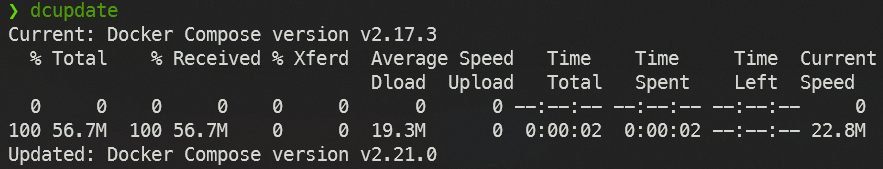

See this post for an updated version of this function.

I have this function in my profile.sh to update docker-compose without waiting for the plugin to be updated. This function works even if the plugin isn't installed, so if you only use docker-compose from the command-line, this is all you need.

You'll need to re-run the function after a reboot. If you have the plugin installed, a reboot will reset docker-compose to the plugin's version. If you don't have the plugin, a reboot will remove docker-compose entirely.

You could probably add the function to the go file to install docker-compose on every reboot, but I haven't tried. EDIT: I tried it in my go file, and it installed docker-compose on server start.

```bash
dcupdate() {
  echo Current: $(docker compose version)
  COMPOSE_VERSION=$(curl -s https://api.github.com/repos/docker/compose/releases/latest | grep 'tag_name' | cut -d\" -f4)
  curl -L "https://github.com/docker/compose/releases/download/${COMPOSE_VERSION}/docker-compose-$(uname -s)-$(uname -m)" --create-dirs -o /usr/local/lib/docker/cli-plugins/docker-compose && sudo chmod +x "${_}"
  unset COMPOSE_VERSION
  echo Updated: $(docker compose version)
}
```

It looks like this when you run it:

-

Just following up to close out this thread: the second parity check just finished with 0 errors corrected. Hopefully this helps someone in the future who searches for this issue.

-

I precleared the parity drives to stress test them before doing the swaps, so I thought they would've been zeroed anyway. But I'll run a second parity check and see how it goes.

-

1 hour ago, JorgeB said:

Did you do a parity swap in the recent past?

Yes, I've done a couple of parity swaps to get the 20TB drives in. My previous parity drives are data drives now.

-

I'm running a correcting parity check, and the "sync errors corrected" number is increasing a lot, but the check is in the space *after* the data. My biggest data drive is 18TB and the parity drives are both 20TB. The check is currently at 18.3 TB. My understanding of parity is that if the parity drive is bigger than the largest data drive, all the *extra* parity should just be 0? No drives are reporting any SMART errors, and the syslog seems clean other than an odd cron error that has to be unrelated.
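The expectation in that question can be written out, assuming the first parity is a bytewise XOR across the data drives (as in RAID-5) and that a data drive shorter than the parity drive contributes zeros past its end. For byte offset $i$, with data drives $D_1, \dots, D_n$ of sizes $s_1, \dots, s_n$:

$$
P_i = \bigoplus_{k=1}^{n} D_{k,i}, \qquad D_{k,i} = 0 \ \text{for}\ i \ge s_k ,
$$

so for every offset past the largest data drive, $i \ge \max_k s_k$ (here, past 18TB on the 20TB parity drives),

$$
P_i = \bigoplus_{k=1}^{n} 0 = 0 .
$$

The same holds for a second parity drive if it is computed RAID-6 style as $Q_i = \bigoplus_{k} g^{k} \cdot D_{k,i}$ over $\mathrm{GF}(2^8)$, since every term is again zero. Under these assumptions, a correction in that region means the parity drive itself held nonzero bytes there.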

-

Feature request described below. TL;DR: a way to provide exclusions for extra files.

I have a folder in my appdata folder called "mergerfs" where my Jellyfin NFOs and images are stored. This is also where jellyscrub stores thumbnails for scrubbing, using "trickplay" folders in each media item's directory under "mergerfs". These trickplay folders greatly bloat the size of the "mergerfs" folder, and I would like to exclude them but otherwise back up everything in mergerfs.
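Outside the plugin, the behaviour the request describes can be sketched with GNU tar, whose `--exclude` patterns are unanchored by default and therefore match a `trickplay` folder at any depth. This is a hypothetical helper, not a feature of any backup plugin, and the paths in the example are placeholders:

```bash
# Hypothetical helper (not a feature of any backup plugin): archive SRC into
# DEST with GNU tar, skipping every file or folder whose name matches PATTERN.
backup_without() {
  local src="$1" dest="$2" pattern="$3"
  # GNU tar matches --exclude patterns unanchored by default, so "trickplay"
  # catches a folder of that name at any depth. Options go before the ".".
  tar -C "$src" --exclude="$pattern" -cf "$dest" .
}

# Example with placeholder paths:
#   backup_without /mnt/user/appdata/mergerfs /mnt/user/backups/mergerfs.tar trickplay
```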

-

On 8/7/2023 at 3:50 PM, Sanches said:

Was the hidden Windows share thing ever resolved? I have the same issue. A share shows "-- Invalid Configuration - Remove and Re-add --" when the $ is at the end. If that's removed in Windows sharing options, the exact same share mounts fine with UD.

-

4 hours ago, Presjar said:

That is for updating containers. The previous poster was asking for the docker compose binary to be updated. It is pretty out of date right now. The plugin is 2.11.2 but the current release is 2.17.3. It is 15 versions older, which will be a huge version jump when it finally upgrades.

-

I found that a change to one of my Docker containers was spawning a bunch of zombie processes, so I've reverted that change, which will hopefully fix this. The process list is much shorter afterwards, so I'm hopeful.
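Each zombie process keeps its PID until its parent reaps it, so a container that keeps spawning them can eventually push the system into its process limits; that would be consistent with the `fork failed: resource temporarily unavailable` errors further down this page. A minimal sketch of two hypothetical helpers that read `ps` output on stdin (procps `-o` column syntax assumed), so they also work on a saved listing:

```bash
# Hypothetical helper: count zombies from one STAT column per line.
#   ps -eo stat= | count_zombies
count_zombies() {
  awk '$1 ~ /^Z/ { n++ } END { print n + 0 }'
}

# Hypothetical helper: read "pid ppid stat comm" lines and print
# "count ppid" for zombie parents, busiest parent first. That parent is
# usually the process that is not reaping its children.
#   ps -eo pid=,ppid=,stat=,comm= | zombie_parents
zombie_parents() {
  awk '$3 ~ /^Z/ { print $2 }' | sort | uniq -c | sort -rn
}
```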

-

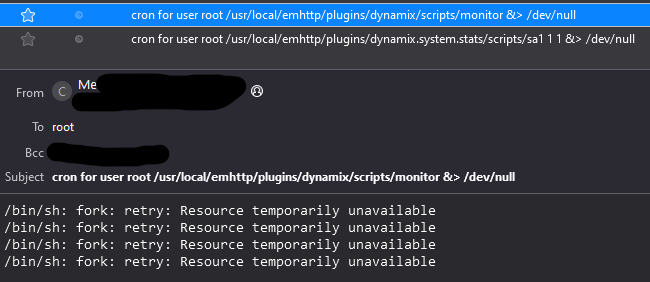

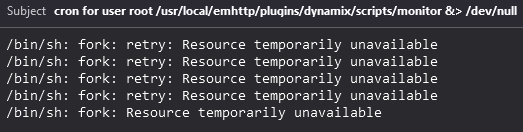

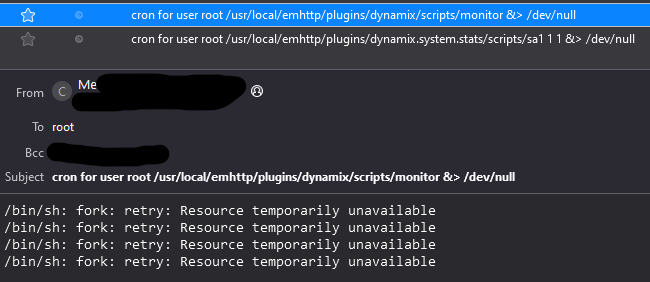

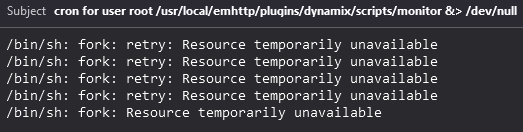

I shut down the server and moved the USB boot drive to a different port. I haven't seen any warnings about the boot device yet, but I got two more of the cron emails today.

EDIT: Also got this just now:

I'm very confused about what could be causing this: I haven't changed anything major on the server in months, and I rolled back the changes I made in the last couple of weeks. I thought the boot drive was loaded into memory when the server started, so even if it *is* going bad, I don't know why it would be the source of this issue.

-

I've recently had a lot of instability. My Jellyfin container has crashed multiple times a day due to Out of Memory errors, and I'm sporadically getting errors in the terminal like the ones below (I was trying to run `docker compose down`). I managed to download diagnostics, although that failed several times.

I also got a notification about a physical problem with the USB boot drive, and then the notification changed to say the drive was blacklisted. After a server reboot in which I moved the drive to another USB slot, the server came up without any errors or warning notifications. Can someone take a look at the diagnostics and tell me what exactly is happening?

EDIT: I've also been getting emails like this:

```
❯ dc down
_check_global_aliases:15: fork failed: resource temporarily unavailable
zsh: fork failed: resource temporarily unavailable
_flush_ysu_buffer:3: fork failed: resource temporarily unavailable
❯ dc down
runtime/cgo: pthread_create failed: Resource temporarily unavailable
SIGABRT: abort
PC=0x175b73a m=10 sigcode=18446744073709551610

goroutine 0 [idle]:
runtime: unknown pc 0x175b73a
stack: frame={sp:0x15045891f948, fp:0x0} stack=[0x150458900248,0x15045891fe48)
0x000015045891f848: 0x0000000000000037 0x0000000000000000
0x000015045891f858: 0x0000000000000000 0x00000000001062f3
0x000015045891f868: 0x0000000000000001 0x000000000284f160
0x000015045891f878: 0x0000000000000037 0x00000000028ff888
0x000015045891f888: 0x000015045891f8c0 0x000015045891f950
0x000015045891f898: 0x000000000175e58b 0x0000000000040000
0x000015045891f8a8: 0x00000000004b0d6f 0x0000150400000000
0x000015045891f8b8: 0x000015045891f8d8 0x0000003000000010
0x000015045891f8c8: 0x000015045891fb20 0x000015045891fa50
0x000015045891f8d8: 0x0000000000000000 0x0000000000000000
0x000015045891f8e8: 0x0000000000000000 0x0000000000000000
0x000015045891f8f8: 0x0000000000000000 0x5f64616572687470
0x000015045891f908: 0x6620657461657263 0x52203a64656c6961
0x000015045891f918: 0x20656372756f7365 0x7261726f706d6574
0x000015045891f928: 0x76616e7520796c69 0x00656c62616c6961
0x000015045891f938: 0x00000000004c3885 <runtime.persistentalloc+0x0000000000000065> 0x0000000000203000
0x000015045891f948: <0x000000000175b77b 0x0000000000000000
0x000015045891f958: 0x0000000000003fc0 0x0000000000000000
0x000015045891f968: 0x00000000017548ba 0x00000000fffffff5
0x000015045891f978: 0x0000000001756edf 0x0000000000000001
0x000015045891f988: 0x000000000175bd45 0x00000000028ff888
0x000015045891f998: 0x0000000000000000 0x000015045891f9ff
0x000015045891f9a8: 0x0000000000000001 0x000015045891fb28
0x000015045891f9b8: 0x000000000284f160 0x000000000000000a
0x000015045891f9c8: 0x000000000284f1ec 0x0000000000000178
0x000015045891f9d8: 0x000000000284f160 0x000015045891fd08
0x000015045891f9e8: 0x0000000001754e86 0x0000000000000000
0x000015045891f9f8: 0x0a0000000284f160 0x000000000284f160
0x000015045891fa08: 0x000000000175c56a 0x000000000284f160
0x000015045891fa18: 0x000015045891fd08 0x0000000000203000
0x000015045891fa28: 0x00000000017507f9 0x0000003000000008
0x000015045891fa38: 0x000015045891fb20 0x000015045891fa50
runtime: unknown pc 0x175b73a
stack: frame={sp:0x15045891f948, fp:0x0} stack=[0x150458900248,0x15045891fe48)
0x000015045891f848: 0x0000000000000037 0x0000000000000000
0x000015045891f858: 0x0000000000000000 0x00000000001062f3
0x000015045891f868: 0x0000000000000001 0x000000000284f160
0x000015045891f878: 0x0000000000000037 0x00000000028ff888
0x000015045891f888: 0x000015045891f8c0 0x000015045891f950
0x000015045891f898: 0x000000000175e58b 0x0000000000040000
0x000015045891f8a8: 0x00000000004b0d6f 0x0000150400000000
0x000015045891f8b8: 0x000015045891f8d8 0x0000003000000010
0x000015045891f8c8: 0x000015045891fb20 0x000015045891fa50
0x000015045891f8d8: 0x0000000000000000 0x0000000000000000
0x000015045891f8e8: 0x0000000000000000 0x0000000000000000
0x000015045891f8f8: 0x0000000000000000 0x5f64616572687470
0x000015045891f908: 0x6620657461657263 0x52203a64656c6961
0x000015045891f918: 0x20656372756f7365 0x7261726f706d6574
0x000015045891f928: 0x76616e7520796c69 0x00656c62616c6961
0x000015045891f938: 0x00000000004c3885 <runtime.persistentalloc+0x0000000000000065> 0x0000000000203000
0x000015045891f948: <0x000000000175b77b 0x0000000000000000
0x000015045891f958: 0x0000000000003fc0 0x0000000000000000
0x000015045891f968: 0x00000000017548ba 0x00000000fffffff5
0x000015045891f978: 0x0000000001756edf 0x0000000000000001
0x000015045891f988: 0x000000000175bd45 0x00000000028ff888
0x000015045891f998: 0x0000000000000000 0x000015045891f9ff
0x000015045891f9a8: 0x0000000000000001 0x000015045891fb28
0x000015045891f9b8: 0x000000000284f160 0x000000000000000a
0x000015045891f9c8: 0x000000000284f1ec 0x0000000000000178
0x000015045891f9d8: 0x000000000284f160 0x000015045891fd08
0x000015045891f9e8: 0x0000000001754e86 0x0000000000000000
0x000015045891f9f8: 0x0a0000000284f160 0x000000000284f160
0x000015045891fa08: 0x000000000175c56a 0x000000000284f160
0x000015045891fa18: 0x000015045891fd08 0x0000000000203000
0x000015045891fa28: 0x00000000017507f9 0x0000003000000008
0x000015045891fa38: 0x000015045891fb20 0x000015045891fa50

goroutine 1 [semacquire, locked to thread]:
github.com/docker/cli/vendor/k8s.io/api/core/v1.init()
	/go/src/github.com/docker/cli/vendor/k8s.io/api/core/v1/types_swagger_doc_generated.go:316 +0x25cc

goroutine 34 [chan receive]:
github.com/docker/cli/vendor/k8s.io/klog.(*loggingT).flushDaemon(0xc0000c2060?)
	/go/src/github.com/docker/cli/vendor/k8s.io/klog/klog.go:1010 +0x6a
created by github.com/docker/cli/vendor/k8s.io/klog.init.0
	/go/src/github.com/docker/cli/vendor/k8s.io/klog/klog.go:411 +0xef

rax 0x0
rbx 0x0
rcx 0x175b73a
rdx 0x0
rdi 0x2
rsi 0x15045891f950
rbp 0x15045891f950
rsp 0x15045891f948
r8 0xa
r9 0x4b0cce
r10 0x8
r11 0x246
r12 0x203000
r13 0x178
r14 0x4b0d6f
r15 0x15045891fb30
rip 0x175b73a
rflags 0x246
cs 0x33
fs 0x0
gs 0x0
❯ dc down
_check_global_aliases:15: fork failed: resource temporarily unavailable
zsh: fork failed: resource temporarily unavailable
_flush_ysu_buffer:3: fork failed: resource temporarily unavailable
❯ dc down
runtime/cgo: pthread_create failed: Resource temporarily unavailable
runtime/cgo: pthread_create failed: Resource temporarily unavailable
SIGABRT: abort
PC=0x175b73a m=4 sigcode=18446744073709551610

goroutine 0 [idle]:
runtime: unknown pc 0x175b73a
stack: frame={sp:0x151f8605d938, fp:0x0} stack=[0x151f8603e248,0x151f8605de48)
0x0000151f8605d838: 0x0000000000000037 0x0000000000000000
0x0000151f8605d848: 0x0000000000000000 0x0000151f8605d890
0x0000151f8605d858: 0x0000000000000001 0x000000000284f160
0x0000151f8605d868: 0x0000000000000037 0x00000000028ff888
0x0000151f8605d878: 0x0000151f8605d8b0 0x0000151f8605d940
0x0000151f8605d888: 0x000000000175e58b 0x0000151f8605d908
0x0000151f8605d898: 0x00000000004b0d6f 0x0000151f00000000
0x0000151f8605d8a8: 0x0000151f8605d8c8 0x0000003000000010
0x0000151f8605d8b8: 0x0000151f8605db10 0x0000151f8605da40
0x0000151f8605d8c8: 0x0000000000000000 0x0000000000000000
0x0000151f8605d8d8: 0x0000000000000000 0x0000000000000000
0x0000151f8605d8e8: 0x0000000000000000 0x5f64616572687470
0x0000151f8605d8f8: 0x6620657461657263 0x52203a64656c6961
0x0000151f8605d908: 0x20656372756f7365 0x7261726f706d6574
0x0000151f8605d918: 0x76616e7520796c69 0x00656c62616c6961
0x0000151f8605d928: 0x00000000004c3885 <runtime.persistentalloc+0x0000000000000065> 0x0000000000203000
0x0000151f8605d938: <0x000000000175b77b 0x0000000000000000
0x0000151f8605d948: 0x0000151f85f42000 0x0000000002904af8
0x0000151f8605d958: 0x00000000017548ba 0x00000000fffffff5
0x0000151f8605d968: 0x0000000001756edf 0x0000000000000001
0x0000151f8605d978: 0x000000000175bd45 0x00000000028ff888
0x0000151f8605d988: 0x0000000000000000 0x0000151f8605d9ef
0x0000151f8605d998: 0x0000000000000001 0x0000151f8605db18
0x0000151f8605d9a8: 0x000000000284f160 0x000000000000000a
0x0000151f8605d9b8: 0x000000000284f1ec 0x0000000000000178
0x0000151f8605d9c8: 0x000000000284f160 0x0000151f8605dcf8
0x0000151f8605d9d8: 0x0000000001754e86 0x0000000000000000
0x0000151f8605d9e8: 0x0a0000000284f160 0x000000000284f160
0x0000151f8605d9f8: 0x000000000175c56a 0x000000000284f160
0x0000151f8605da08: 0x0000151f8605dcf8 0x0000000000203000
0x0000151f8605da18: 0x00000000017507f9 0x0000003000000008
0x0000151f8605da28: 0x0000151f8605db10 0x0000151f8605da40
runtime: unknown pc 0x175b73a
stack: frame={sp:0x151f8605d938, fp:0x0} stack=[0x151f8603e248,0x151f8605de48)
0x0000151f8605d838: 0x0000000000000037 0x0000000000000000
0x0000151f8605d848: 0x0000000000000000 0x0000151f8605d890
0x0000151f8605d858: 0x0000000000000001 0x000000000284f160
0x0000151f8605d868: 0x0000000000000037 0x00000000028ff888
0x0000151f8605d878: 0x0000151f8605d8b0 0x0000151f8605d940
0x0000151f8605d888: 0x000000000175e58b 0x0000151f8605d908
0x0000151f8605d898: 0x00000000004b0d6f 0x0000151f00000000
0x0000151f8605d8a8: 0x0000151f8605d8c8 0x0000003000000010
0x0000151f8605d8b8: 0x0000151f8605db10 0x0000151f8605da40
0x0000151f8605d8c8: 0x0000000000000000 0x0000000000000000
0x0000151f8605d8d8: 0x0000000000000000 0x0000000000000000
0x0000151f8605d8e8: 0x0000000000000000 0x5f64616572687470
0x0000151f8605d8f8: 0x6620657461657263 0x52203a64656c6961
0x0000151f8605d908: 0x20656372756f7365 0x7261726f706d6574
0x0000151f8605d918: 0x76616e7520796c69 0x00656c62616c6961
0x0000151f8605d928: 0x00000000004c3885 <runtime.persistentalloc+0x0000000000000065> 0x0000000000203000
0x0000151f8605d938: <0x000000000175b77b 0x0000000000000000
0x0000151f8605d948: 0x0000151f85f42000 0x0000000002904af8
0x0000151f8605d958: 0x00000000017548ba 0x00000000fffffff5
0x0000151f8605d968: 0x0000000001756edf 0x0000000000000001
0x0000151f8605d978: 0x000000000175bd45 0x00000000028ff888
0x0000151f8605d988: 0x0000000000000000 0x0000151f8605d9ef
0x0000151f8605d998: 0x0000000000000001 0x0000151f8605db18
0x0000151f8605d9a8: 0x000000000284f160 0x000000000000000a
0x0000151f8605d9b8: 0x000000000284f1ec 0x0000000000000178
0x0000151f8605d9c8: 0x000000000284f160 0x0000151f8605dcf8
0x0000151f8605d9d8: 0x0000000001754e86 0x0000000000000000
0x0000151f8605d9e8: 0x0a0000000284f160 0x000000000284f160
0x0000151f8605d9f8: 0x000000000175c56a 0x000000000284f160
0x0000151f8605da08: 0x0000151f8605dcf8 0x0000000000203000
0x0000151f8605da18: 0x00000000017507f9 0x0000003000000008
0x0000151f8605da28: 0x0000151f8605db10 0x0000151f8605da40

goroutine 1 [runnable, locked to thread]:
github.com/docker/cli/vendor/k8s.io/apimachinery/pkg/runtime.(*Scheme).AddKnownTypeWithName(0xc000511b20, {{0x0, 0x0}, {0x200529, 0x2}, {0x17865ef, 0xc}}, {0x1b1a938?, 0xc0004219f0?})
	/go/src/github.com/docker/cli/vendor/k8s.io/apimachinery/pkg/runtime/scheme.go:213 +0x47a
github.com/docker/cli/vendor/k8s.io/apimachinery/pkg/runtime.(*Scheme).AddKnownTypes(0xc000511b20, {{0x0?, 0x0?}, {0x200529?, 0xa?}}, {0xc00061f418?, 0x7, 0x1b1a870?})
	/go/src/github.com/docker/cli/vendor/k8s.io/apimachinery/pkg/runtime/scheme.go:180 +0x237
github.com/docker/cli/vendor/k8s.io/apimachinery/pkg/apis/meta/v1.AddToGroupVersion(0x26ca5e?, {{0x0?, 0x0?}, {0x200529?, 0x0?}})
	/go/src/github.com/docker/cli/vendor/k8s.io/apimachinery/pkg/apis/meta/v1/register.go:51 +0x385
github.com/docker/cli/vendor/k8s.io/client-go/plugin/pkg/client/auth/exec.init.0()
	/go/src/github.com/docker/cli/vendor/k8s.io/client-go/plugin/pkg/client/auth/exec/exec.go:56 +0x34

goroutine 34 [chan receive]:
github.com/docker/cli/vendor/k8s.io/klog.(*loggingT).flushDaemon(0xc0000c2060?)
	/go/src/github.com/docker/cli/vendor/k8s.io/klog/klog.go:1010 +0x6a
created by github.com/docker/cli/vendor/k8s.io/klog.init.0
	/go/src/github.com/docker/cli/vendor/k8s.io/klog/klog.go:411 +0xef

rax 0x0
rbx 0x0
rcx 0x175b73a
rdx 0x0
rdi 0x2
rsi 0x151f8605d940
rbp 0x151f8605d940
rsp 0x151f8605d938
r8 0xa
r9 0x4b0cce
r10 0x8
r11 0x246
r12 0x203000
r13 0x178
r14 0x4b0d6f
r15 0x151f8605db20
rip 0x175b73a
rflags 0x246
cs 0x33
fs 0x0
gs 0x0
```

-

On 12/11/2022 at 4:07 AM, Squid said:

One suggestion found via Google would be to run the memory at its stock speed (2133) instead of underclocking it to 1866. Look in the BIOS for various settings.

Does it say in the diagnostics somewhere that my memory is being underclocked? When I check System Profiler it says all the memory is 2666 MT/s, which matches the purchased sticks.
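To see what the memory is actually configured to run at, `dmidecode -t 17` (memory devices) lists both a rated `Speed` and a `Configured Memory Speed` for each module; older dmidecode versions call the latter `Configured Clock Speed`. A minimal sketch of a hypothetical parser that pairs the two per module, assuming `Speed` appears before the configured line in each entry:

```bash
# Hypothetical parser for "dmidecode -t 17" output read on stdin: prints the
# rated and configured speed of each memory device, one line per module.
mem_speeds() {
  awk -F': ' '
    /^[ \t]*Speed:/ { rated = $2 }
    /^[ \t]*Configured (Memory|Clock) Speed:/ { print "rated: " rated ", configured: " $2 }
  '
}

# Example (run as root on the server): dmidecode -t 17 | mem_speeds
```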

[Plugin] unbalanced

in Plugin Support

Posted · Edited by nearcatch

Restrict unbalanced LAN Access

I haven't liked that unbalanced is available to anyone on my LAN once it's started, but I have figured out a solution for myself. I'm sharing the steps here for anyone else who is curious and, like me, uses a reverse proxy for other things.

How-to

1. Set up your reverse proxy to have an authenticated subdomain for unbalanced. I use Traefik and Authelia. You will have to do something specific to your setup, but this is what I added to my Traefik config file:

```yaml
http:
  routers:
    unbalanced-rtr:
      rule: "Host(`unbalanced.unraid.lan`)"
      entryPoints:
        - websecure
      middlewares:
        - chain-authelia-lan
        - error-pages@docker
      service: unbalanced-svc
  services:
    unbalanced-svc:
      loadBalancer:
        servers:
          - url: "http://192.168.1.10:7090" # substitute your unraid server's IP address and unbalanced port
```

2. Run these iptables rules in a console session so that any request to unbalanced's port is rejected unless it comes from Unraid's own IP or from your reverse proxy's network. Substitute the correct IP addresses and ports for your network. You can also add these rules to your go file so they are applied every time Unraid reboots.

```bash
iptables -A INPUT -p tcp --dport 7090 -s 10.10.1.0/24 -j ACCEPT # substitute the subnet your reverse proxy uses. you can also limit this to the exact IP of your reverse proxy docker container if you want
iptables -A INPUT -p tcp --dport 7090 -s 192.168.1.10 -j ACCEPT
iptables -A INPUT -p tcp --dport 7090 -j REJECT --reject-with tcp-reset
```

Result

After these two steps, unbalanced cannot be accessed by the ip:port of my Unraid server. It can only be accessed using https://unbalanced.unraid.lan, and because I add Authelia using a Traefik middleware, it requires authentication instead of being freely accessible.

No reverse proxy?

If you don't use a reverse proxy, you can still do step 2: change the source address in the first iptables rule to the IP of one specific computer on your LAN, so that unbalanced's port rejects requests from everything else. That should still help limit access.

EDIT: For those who don't know, you can remove the iptables rules by running them again with `-D` instead of `-A`. Restarting your Unraid server will also reset your iptables rules, as long as you haven't added them to your go file.
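Since the same three rules are used to add (`-A`) and remove (`-D`), a small hypothetical helper can print them for either action, using the example port and addresses from the steps above as placeholders. It only echoes the commands, so you can review them before piping them to `sh`:

```bash
# Hypothetical helper: print the three rules from step 2 for ACTION
# (-A to add, -D to delete). It only echoes them; pipe to "sh" to apply.
unbalanced_rules() {
  local action="$1" port="$2" proxy_src="$3" host_ip="$4"
  echo "iptables $action INPUT -p tcp --dport $port -s $proxy_src -j ACCEPT"
  echo "iptables $action INPUT -p tcp --dport $port -s $host_ip -j ACCEPT"
  echo "iptables $action INPUT -p tcp --dport $port -j REJECT --reject-with tcp-reset"
}

# Review, then apply or remove (values are the example ones from above):
#   unbalanced_rules -A 7090 10.10.1.0/24 192.168.1.10
#   unbalanced_rules -D 7090 10.10.1.0/24 192.168.1.10 | sh
```

If the go file might add the rules twice, `iptables -C` with the same arguments checks whether a rule already exists before you append it again.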