PeterDB

Posts posted by PeterDB

-

-

It seems it cannot load correctly because the drivers for the Kraken are not part of the OS. Looking at others online, Kraken AIOs seem to be only sporadically supported on Linux, with many opting for Windows VMs to control them. It seems there is a driver, but I cannot find any details on how to actually install it. It might be an idea to reach out to the dev, Jonas Malaco (https://jonasmalaco.com/), who created it and also wrote liquidctl.

Some links:

https://www.kernel.org/doc/html/latest/hwmon/nzxt-kraken2.html

https://github.com/liquidctl/liquidctl

-

32 minutes ago, JorgeB said:

You can still use XFS for single device pools and/or an unRAID array.

That will depend on the filesystem you choose for the pool, you can with btrfs, not with zfs raidz, until/if raidz expansion gets implemented.

Pools are currently limited to 30 devices, there's no size limit for the disks used.

Here's the thing: the comment here alludes to the fact that in the future there will be no array, just pools, which could imply XFS is going away.

As for scalability, each time I added a drive to my btrfs pools I had to reformat. Would that also be the case in the future if XFS goes away?

-

On 1/11/2023 at 10:39 PM, limetech said:

With future release:

- The "pool" concept will be generalized. Instead of having an "unRAID" array, you can create a pool and designate it as an "unRAID" pool. Hence you could have unRAID pools, btrfs pools, zfs pools. Of course individual devices within an unRAID pool have their own file system type. (BTW we could add ext4 but no one has really asked for that).

This is quite interesting! So for the future would a pool still be able to use XFS or will you be limiting it to btrfs and zfs? What about the ability to scale pools one drive at a time (major reason for many who use Unraid) and will there be a pool disk size limit?

-

On 1/11/2023 at 10:41 PM, limetech said:

You really want more than 30 devices in a single array?

In a single array, yes, it should be 32. Why? For good reason: if you have HBA controllers that support 16 drives each and you have two in your system, as I and many others do, then the maximum drive count is 32. With Unraid only supporting 30 drives, you're left with two drives you cannot add to the array.

As for multiple arrays, I'm actually fine with a 32 drive limit, but it would be nice to have more arrays, not more cache pools.

-

Awesome!

But it would be great to have array sizes beyond the 30 drive limit, or to have multiple arrays.

-

23 minutes ago, ich777 said:

I would rather call this a workaround than a FIX, since adding acpi_enforce_resources=lax can lead to system instability, other weird behavior and can in certain cases damage your hardware.

You can find a good write up about what this option does here: Click

Please also keep in mind that this is only a friendly reminder and nothing that I want to criticize.

@ich777 Considering this is an issue for several people here, and considering your wealth of knowledge, do you have another suggestion?

-

Sadly, I read it already, but it's what is being advised for the nct6775 driver when there are detection issues, and sadly it seems no one is doing any more development on it.

I'll correct the screenshots and add a disclaimer.

-

On 8/5/2022 at 8:40 PM, MaX93 said:

Same Error here. Unraid 6.10.3 with coretemp nct6775 sensors.

I had issues too! Check this post I made

-

I'm writing this not only for myself, as I had to spend a couple of hours getting this to work, but also because I see others still having issues getting temperature and fan readings when they use Dynamix System Temperature or Dynamix Auto Fan Control Support together with the NCT6775 driver for Nuvoton NCT5572D/NCT6771F/NCT6772F/NCT6775F/W83677HG-I and other chips.

The issue most will experience is that only CPU temps show up, but none of the fan or other temperature sensors do. There are lots of instructions for solving the individual issues, such as sensor detection, adding acpi_enforce_resources=lax to syslinux.cfg, or just getting the driver to load, but there is no end-to-end guide!

Newer Asus motherboards, which are notorious for using Nuvoton sensors, will in the future have a different driver, read more here https://www.phoronix.com/forums/forum/hardware/motherboards-chipsets/1304134-new-asus-sensor-driver-for-linux-aims-for-greater-flexibility-faster-sensor-reading. However, for older boards such as my Asus Sabertooth X99 with the Nuvoton thermal sensors, enabling acpi_enforce_resources=lax has been advised for several years.

This guide assumes you have a bit of experience with Unraid, but if you don't just post here and I'll try to help!

DISCLAIMER: Adding acpi_enforce_resources=lax to syslinux.cfg can cause adverse effects, read more here: https://bugzilla.kernel.org/show_bug.cgi?id=204807#c37 It is strongly advised that, should you need to do any firmware updates, you boot into the regular Unraid OS entry from the boot menu rather than the default Unraid OS NCT6775 Workaround option.
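Before a firmware update it can help to confirm which entry the server actually booted with. This is only a minimal sketch, assuming the standard Linux /proc/cmdline file; the helper name has_boot_param is made up for illustration.

```bash
#!/bin/bash
# Hypothetical helper: succeeds if the kernel command line contains the given
# parameter as a whole word. Reads /proc/cmdline unless another file is given.
has_boot_param() {
    local param="$1"
    local cmdline_file="${2:-/proc/cmdline}"
    local -a words=()
    local word
    [[ -r "$cmdline_file" ]] || return 1
    read -r -a words < "$cmdline_file" || true
    for word in "${words[@]}"; do
        [[ "$word" == "$param" ]] && return 0
    done
    return 1
}

if has_boot_param "acpi_enforce_resources=lax"; then
    echo "Workaround entry is active: boot regular Unraid OS before firmware updates"
else
    echo "acpi_enforce_resources=lax is not set on this boot"
fi
```

The second argument only exists so the check can be tried against a saved copy of a command line instead of the live system.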

Steps for workaround

- Open the Unraid terminal

- Type sensors-detect and accept everything

- At the end of sensors-detect it will show you a list of the found drivers. Write these down; in the case below it's "nct6775" and "coretemp"

- On the Unraid flash drive, edit the go file found in /boot/config (make sure to make a backup!), add the lines referring to the drivers, and save the file

modprobe nct6775

modprobe coretemp

/usr/bin/sensors -s

- Now go to the Main page of the Unraid dashboard and click on your flash drive

- Scroll down to the section Syslinux Configuration and on the right side click on "Menu View"

- Add the label Unraid OS NCT6775 WORKAROUND under the label Unraid OS. The text you need to add is shown below.

label Unraid OS NCT6775 WORKAROUND

kernel /bzimage

append initrd=/bzroot acpi_enforce_resources=lax

- Once you've added the label, click on the "Raw View" option on the right side and select the new Unraid OS NCT6775 WORKAROUND as default by clicking the little round radio button, and then click "Apply"

- Now reboot

All done and it should work, and you'll be able to configure the Dynamix System Temperature and Dynamix Auto Fan Control Support plugins
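If you prefer to make the go file change from the terminal, the edit step above could be sketched roughly like this. The helper name add_line_once is hypothetical, and the driver names are the ones sensors-detect reported on my board, so yours may differ. It keeps a one-time backup and only appends lines that are not already there.

```bash
#!/bin/bash
# Hypothetical helper: append a line to a file only if that exact line is not
# already present, keeping a one-time backup copy next to the file.
add_line_once() {
    local file="$1" line="$2"
    [[ -f "$file" ]] || return 1
    # Keep the first backup only, so reruns never overwrite the original copy.
    [[ -f "$file.bak" ]] || cp "$file" "$file.bak"
    # Make sure the file ends with a newline before appending.
    if [[ -n "$(tail -c1 "$file")" ]]; then
        echo >> "$file"
    fi
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Intended use on Unraid (commented out so nothing is changed by accident):
# for line in "modprobe nct6775" "modprobe coretemp" "/usr/bin/sensors -s"; do
#     add_line_once /boot/config/go "$line"
# done
```

Running it twice leaves the file unchanged the second time, which is handy if you are not sure whether you already added the lines.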

Proof that it does:

-

Interesting! But honestly, why not just use the Add2PSU and a molex connector between the cases?

-

Yeah, I spent a couple of hours looking at how to get this done correctly, even custom ordering the cables so they are made properly... the cost is more than a second PSU. It's easier to get an Add2PSU and use a second PSU.

Thanks again for the help!

Last question: You mention you run a 3U and 2U as a diskshelf, how do you ensure the power-on between the two?

-

I managed to find some, even higher rated 12V to 5V 10A converters, and coincidentally also some chia miners that use the same method to split the power off EPS and PCI-E.

-

Ah, I get it. In essence I could go from an EPS or PCI-E 8pin to a step-down 12V>5V and 12V>3.3V and then to SATA, or just 5V and Molex.

However, I seem to be unable to find one in Europe.

-

Could you clarify what you mean by the DC-DC module and that there's already one inside?

-

I'm starting to run out of space in my rack for drives. I currently have an Inter-Tech 4U 4129L with an array of 14 drives (incl. one for parity), plus an NVMe, an SSD cache and an HDD cache, all working quite well with a Zalman ZM750-ARX PSU. The drives are a mix of shucked 8TB WDCs, 16TB Seagate DC and WDC DC drives totalling 152TB, and yes, I'm running out of space.

I bought the Inter-Tech 4F28 (imgur), as it can hold 28 3.5" plus 6 2.5" drives (two on either side of the drive cages and two side mounted in the rear compartment) without thinking of PSU, but just needing more drive space. I plan to populate it with WDC 16tb or 18tb DC drives, which usually pull operationally 5V/0.5A and 12V/0.57A, but would like to port over my existing drives which based on the drive spec pull 9.2A on 5V and 9.8A on 12V.

Yes, I have been reading a lot here, on Reddit, and in many other communities, and reached out via DM to a lot of OPs to figure out their setups. I found that most only look at the PSU wattage, not amps, or plainly ignore amps, staggered spin-up and max connections on SATA cables, and far too many seem to underpower their systems.

Personally, I don't need a high-spec'd CPU or motherboard. I currently use a Gigabyte H97-D3H-CF and an Intel i7-4770 with 32GB, which is more than enough for storage and running a few dockers. The controller is an Adaptec 71605 in HBA mode, and I don't see any options in the controller BIOS for staggered spin-up.

The short question(s):

- How many drives do you have and what's your cabling/PSU?

- My Zalman ZM750-ARX delivers 5V rail 22A 120W and 12V rail 62A 744W (80% load would be 5V/17.6A/96W and 12V/49.6A/595W). Is it enough?

- Should I get a 2nd PSU, just to be safe?

- What are the best mounting options for a 2nd PSU in this case, or in any regular single-PSU ATX/4U case?

Am I overthinking this? Did my Googling just make the simple decision a lot more complicated?
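To put rough numbers on it, here is a small sketch of the per-rail math using the figures above. The assumptions are mine: all 28 bays filled with drives at the quoted operational draw (5V/0.5A and 12V/0.57A each), the 80% load target, and nothing else on the rails. Spin-up peaks are higher than operational draw and are not covered here. The helper name rail_budget is made up.

```bash
#!/bin/bash
# Hypothetical helper: prints "<drive load A> <usable rail A> <headroom A>".
#   $1 = rail rating in amps, $2 = derating factor (0.8 = 80% load),
#   $3 = amps per drive,      $4 = number of drives
rail_budget() {
    awk -v rail="$1" -v derate="$2" -v per="$3" -v n="$4" 'BEGIN {
        load = per * n
        usable = rail * derate
        printf "%.2f %.2f %.2f\n", load, usable, usable - load
    }'
}

echo "5V rail:  $(rail_budget 22 0.8 0.5 28)"
echo "12V rail: $(rail_budget 62 0.8 0.57 28)"
```

With those assumptions the 5V rail comes out at 14.00A of drive load against 17.60A usable, so 3.60A of headroom, while the 12V rail has 15.96A of load against 49.60A usable. The 5V rail is the tight one, at least for steady-state draw.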

-

-

Thanks for the response. This is good info! I actually started to do some test prints and glue tests, and also started to search for eva foam strips. First test prints were promising!

However, while doing my research for eva foam, I discovered that one of the case manufacturers actually started producing a 28 vertical bay rack again, and I just ordered one of those. Only downside is that the rack uses 120mm fans instead of 140mm.

Try searching for 4u 28 drives, and the case manufacturer pops up on Alibaba and a few sellers on AliExpress. Inter-Tech is selling them under the model 4F28 https://inter-tech.de/en/products/ipc/server-cases/4f28-mining-rack

-

On 9/27/2021 at 12:42 PM, tjb_altf4 said:

So I needed a good base to move forward with, so I've got my existing drives moved into the new caddy system... success!

Post fitment, there are definitely a number of things to fix in a v2 design, but my god it is so much quieter... and I've got 13 extra bays to work with! WIN-WIN.

For all headaches, this has been a great upgrade so far.

Spaghetti test run:

Wow, this is a really elegant solution and just what I have been looking for. Any chance you can share the STLs or upload them to Thingiverse?

So many questions also, like:

- What does the bottom part look like?

- How much tolerance do you have on the drives?

- Why not 15 drives instead of 14?

- Any vibration issues?

- How is the airflow and drive temps?

- On the rear, I see two squares, are these just there to hold up the mount?

- How did you glue/stick/mount them together?

- Did you screw the cage to the rack, or is it just sitting there loose?

-

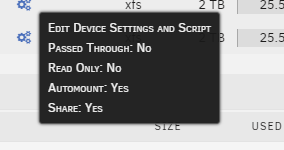

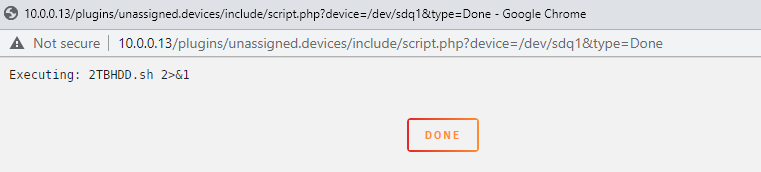

On 7/27/2021 at 11:06 AM, dlandon said:

Click on the three gears icon under the 'Settings' column and turn off the 'Show Partitions' switch. That will collapse the partition.

That solved it. Thanks so much!

-

On 7/24/2021 at 1:03 PM, dlandon said:

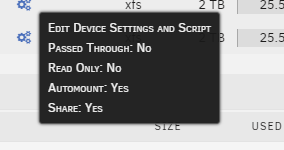

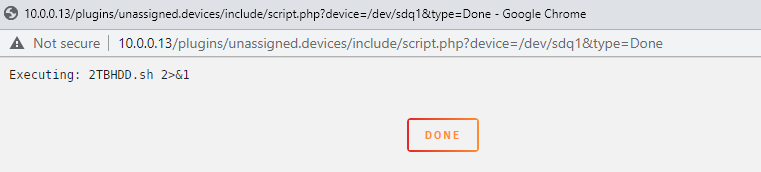

You can set up a script to run when the drive is installed by clicking on the three gears icon. The lightning icon runs that script just like the drive was first plugged in.

Thanks for the clarification. Unfortunately, I don't think that's the issue here, as there is no script set to run and the option to collapse the tree is greyed out. It's like Unassigned Devices is "asking me" to run the script, while there shouldn't be a reason for it.

-

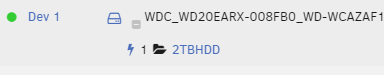

In the last few days, I've noticed the drive tree is expanded and a lightning icon is shown next to one of my drives in Unassigned Devices. I can't find any real info about why this is, except that it's there to execute a script. However, when I click it, I get the prompt that it's completed, but the lightning icon doesn't go away.

Any ideas?

-

That is the error: /tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

Script location: /tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script

Note that closing this window will abort the execution of this script

Container's CPU load exceeded threshold

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

Container's CPU load exceeded threshold

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

/tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

The only difference to your original script is that I changed it to monitor Emby rather than Plex.

#!/bin/bash
# make script race condition safe
if [[ -d "/tmp/${0///}" ]] || ! mkdir "/tmp/${0///}"; then exit 1; fi
trap 'rmdir "/tmp/${0///}"' EXIT

# ######### Settings ##################
spinup_disks='1,2,3,4,5,6,7' # Note: Usually parity disks aren't needed for Plex
cpu_threshold=1 # Disks spin up if Plex container's CPU load exceeds this value
# #####################################
#
# ######### Script ####################
while true; do
    plex_cpu_load=$(docker stats --no-stream | grep -i emby | awk '{sub(/%/, "");print $3}')
    if awk 'BEGIN {exit !('$plex_cpu_load' > '$cpu_threshold')}'; then
        echo "Container's CPU load exceeded threshold"
        for i in ${spinup_disks//,/ }; do
            disk_status=$(mdcmd status | grep "rdevLastIO.${i}=" | cut -d '=' -f 2 | tr -d '\n')
            if [[ $disk_status == "0" ]]; then
                echo "Spin up disk ${i}"
                mdcmd spinup "$i"
            fi
        done
    fi
done

-

-

I'm trying to use this script to spin up the drives to avoid the Emby coverart loading time. However, when I try to run the script, I get the following error: /tmp/user.scripts/tmpScripts/emby_monitoring_spinup/script: line 18: mdcmd: command not found

Right now, I'm just running it to test using the CA User Scripts "Run Script" option.

Any ideas why it's reporting mdcmd as not found? I did try to run mdcmd status | grep "rdevLastIO.1=" | cut -d '=' -f 2 | tr -d '\n' from the web terminal and that worked fine.
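One guess, not a confirmed diagnosis: since the same command works in the web terminal, the script may be running with a narrower PATH than the terminal has. A minimal sketch to check that follows. The helper name find_cmd is made up, and the sbin directories listed are assumptions about where mdcmd might live, not something I have confirmed.

```bash
#!/bin/bash
# Hypothetical helper: print the full path of a command, looking first in PATH
# and then in any extra directories passed as further arguments.
find_cmd() {
    local name="$1" dir
    shift
    if command -v "$name" >/dev/null 2>&1; then
        command -v "$name"
        return 0
    fi
    for dir in "$@"; do
        if [[ -x "$dir/$name" ]]; then
            printf '%s\n' "$dir/$name"
            return 0
        fi
    done
    return 1
}

# Assumption: mdcmd sits in one of the usual sbin directories.
if mdcmd_bin=$(find_cmd mdcmd /usr/local/sbin /usr/sbin /sbin); then
    echo "mdcmd found at: $mdcmd_bin"
else
    echo "mdcmd not found; current PATH is: $PATH"
fi
```

If this prints a full path when run from User Scripts, calling mdcmd by that full path inside the spin-up script would be the obvious thing to try.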

-

Just to give an update here:

- I'm back online! YaY

- Flashed the USB drive again

- Copied over super.dat and the drive assignments were found

- Copied over the Shares folder and got my shares back. Still had to do some configuration, as the shares weren't set up to use cache.

- Copied over the Plugins folder and got my dockers back. Some of them had broken templates as the xml file in flash\config\plugins\dockerMan\templates-user was lost. Turns out that if you uninstall a docker, leave the image, and then reinstall it with the same paths and ports, it fixes the missing template. I wish I had known this before I deleted some of my dockers. Fortunately, the most critical ones were recovered

- Had to reinstall lots of plugins

- Had to set up all my settings again

- I only lost a few files that were in the process of being copied when I did the hard shutdown, and of course spent my Saturday fixing my mistake

Lessons Learned:

- Don't write scripts after a bottle of wine

- Test and QA your scripts before using them

- Backup

- Backup

- Backup

- Unraid works a lot better than I expected!!

Thanks for the help here and on Discord!
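For the "Backup" lesson, something as simple as the sketch below would have saved me most of the Saturday. It is only an illustration: the helper name backup_config is made up, and the paths in the usage comment are assumptions you would need to adjust to your own shares.

```bash
#!/bin/bash
# Hypothetical helper: archive a config directory into a dated tarball and
# print the archive path. $1 = directory to back up, $2 = destination directory.
backup_config() {
    local src="$1" dest="$2" archive
    [[ -d "$src" && -d "$dest" ]] || return 1
    archive="$dest/flash-config-$(date +%Y%m%d-%H%M%S).tar.gz"
    # Store paths relative to the parent so the archive unpacks as one folder.
    tar -czf "$archive" -C "$(dirname "$src")" "$(basename "$src")" || return 1
    printf '%s\n' "$archive"
}

# Intended use (paths are assumptions, adjust to your setup):
# backup_config /boot/config /mnt/user/backups
```

The archive then contains super.dat, the shares folder and the plugins folder in one place, which are exactly the pieces I had to copy back by hand.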

NCT6775 & Dynamix System Temperature + Dynamix Auto Fan Control Support

in Plugins and Apps

Posted

I doubt your issue has anything with the NCT6775 driver.

If you have multiple PWM controllers, you need to test each one to find out which is the main PWM controller, although most of the time it's the one ending in pwm1.
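To see which PWM controls the kernel exposes in the first place, a sketch like this lists them together with the chip that owns them. It assumes the standard Linux hwmon sysfs layout under /sys/class/hwmon; the helper name list_pwm is made up.

```bash
#!/bin/bash
# Hypothetical helper: list PWM controls as "<chip name> <path> <current value>".
# $1 = hwmon root, defaulting to the kernel's sysfs location.
list_pwm() {
    local root="${1:-/sys/class/hwmon}" pwm chip
    # pwm[0-9] matches pwm1, pwm2, ... but not pwm1_enable and friends.
    for pwm in "$root"/hwmon*/pwm[0-9]; do
        [[ -e "$pwm" ]] || continue
        chip="unknown"
        [[ -r "${pwm%/*}/name" ]] && chip=$(<"${pwm%/*}/name")
        printf '%s %s %s\n' "$chip" "$pwm" "$(<"$pwm")"
    done
}

list_pwm
```

If nothing is printed, no loaded driver is exposing a PWM control, and it is a driver problem rather than a question of which controller to pick.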

Unless you connect the NORCO s500 fans to the motherboard PWM/fan headers, you won't be able to get any readouts from them. From what I read, the NORCO s500 fan is internal to the unit and you only connect power and SATA, so unless you open it and connect the fan cable to the motherboard, it's not going to be read out in Unraid. That means it's the internal temp sensor in the NORCO s500 that controls the fan.

As for why the CPU fan isn't found, it's most likely the IT8718 controller. If not, you'd need to use sensors-detect to find which driver is missing.

However, I'll be honest, the GA-G33-DS3R is quite old and you might not be able to find a lot of information out there.