a12vman

-

Posts

137 -

Joined

-

Last visited

Content Type

Profiles

Forums

Downloads

Store

Gallery

Bug Reports

Documentation

Landing

Posts posted by a12vman

-

-

I am seeing this daily in my syslog:

kernel: traps: lsof[13954] general protection fault ip:14b81e3ccc6e sp:9e3ce931b6829a07 error:0 in libc-2.37.so[14b81e3b4000+169000]

How do I isolate?

-

Ok my biggest concern is preserving my plex metadata. I changed the docker vdisk and appdata location as below. I enabled docker. Plex now shows as empty. In looking at the filesystem the original plex appdata on the array is unchanged at 4.5GB. It re-created the appdata on cache_nvme as a new install only 4 MB. The other Dockers like Emby & Sonarr have the same issue but others like Deluge & Duplicacy are ok. What did I do wrong?

-

-

I noticed a few errors on my NVME drive(holds System,Docker,AppData,Domains) after a recent 6.12.6 upgrade.. I picked up a new SSD, formatted it & added as a Cache Drive.

Last night I stopped docker & VM services. Went to System,Appdata,Docker,Domains shares & changed secondary storage to Array, Mover action set to Cache-->Array. Kicked off the Mover & let it run overnight.

This morning I confirmed the mover wasnt running. Went to the same shares, changed primary storage to Crucial_Cache, set Mover Action to Array-->Cache. Started the Mover. Within minutes it failed. sdi is the new cache drive and filled the system log.

When I look at Shares, Appdata and System are Green and living on Array. Docker and Domains show that files are unprotected. Looking at MC I have Docker.img on Mnt\User\Docker. Domains is still on the NVME Cache even though I set action to NVME-->Array.

Am I incredibly unlucky with a bad SSD or could I have a problem with the controller? What are my next steps? I don't want to make the problem worse. I do have a full backup of AppData that I took before I started all of this.

Dec 30 06:12:07 MediaTower kernel: BTRFS error (device sdi1): bdev /dev/sdi1 errs: wr 6, rd 0, flush 0, corrupt 0, gen 0 Dec 30 06:12:07 MediaTower kernel: BTRFS error (device sdi1): bdev /dev/sdi1 errs: wr 7, rd 0, flush 0, corrupt 0, gen 0 Dec 30 06:12:07 MediaTower kernel: BTRFS error (device sdi1): bdev /dev/sdi1 errs: wr 8, rd 0, flush 0, corrupt 0, gen 0 Dec 30 06:12:07 MediaTower kernel: BTRFS error (device sdi1):

-

-

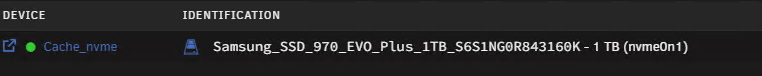

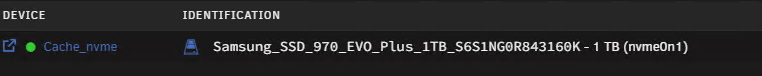

I upgraded to 6.12.6 yesterday. The process of starting the Docker Service is generating the error below on this device. It happened yesterday right after the upgrade and again today when I stopped & started the Docker Engine. I reviewed SysLogs from the last 2 weeks, there were no errors on this drive until I upgraded to 6.12.6

Dec 26 13:33:45 MediaTower kernel: nvme0n1: p1

Dec 26 13:33:45 MediaTower kernel: BTRFS: device label cache_nvme devid 1 transid 6847202 /dev/nvme0n1p1 scanned by udevd (1121)

Dec 26 13:34:23 MediaTower emhttpd: Samsung_SSD_970_EVO_Plus_1TB_S6S1NG0R843160K (nvme0n1) 512 1953525168

Dec 26 13:34:23 MediaTower emhttpd: import 30 cache device: (nvme0n1) Samsung_SSD_970_EVO_Plus_1TB_S6S1NG0R843160K

Dec 26 13:34:23 MediaTower emhttpd: read SMART /dev/nvme0n1

Dec 26 13:36:12 MediaTower emhttpd: shcmd (147): mount -t btrfs -o noatime,space_cache=v2 /dev/nvme0n1p1 /mnt/cache_nvme

Dec 26 13:36:12 MediaTower kernel: BTRFS info (device nvme0n1p1): using crc32c (crc32c-intel) checksum algorithm

Dec 26 13:36:12 MediaTower kernel: BTRFS info (device nvme0n1p1): using free space tree

Dec 26 13:36:12 MediaTower kernel: BTRFS info (device nvme0n1p1): enabling ssd optimizations

Dec 26 13:36:12 MediaTower kernel: BTRFS info (device nvme0n1p1: state M): turning on async discard

Dec 26 14:53:55 MediaTower kernel: nvme0n1: I/O Cmd(0x2) @ LBA 294821792, 32 blocks, I/O Error (sct 0x2 / sc 0x81) MORE DNR

Dec 26 14:53:55 MediaTower kernel: critical medium error, dev nvme0n1, sector 294821792 op 0x0:(READ) flags 0x1000 phys_seg 1 prio class 2

Dec 27 01:01:39 MediaTower root: /mnt/cache_nvme: 718.8 GiB (771819671552 bytes) trimmed on /dev/nvme0n1p1

Dec 27 06:48:35 MediaTower kernel: nvme0n1: I/O Cmd(0x2) @ LBA 294821792, 32 blocks, I/O Error (sct 0x2 / sc 0x81) MORE DNR

Dec 27 06:48:35 MediaTower kernel: critical medium error, dev nvme0n1, sector 294821792 op 0x0:(READ) flags 0x1000 phys_seg 1 prio class 2

-

I removed the drive from historical devices. Plugged it back in and set the Mount Share=Yes. It shows up correctly and I can access the drive.

-

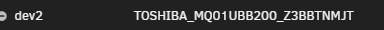

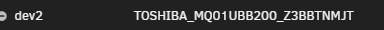

I just finished upgrading from 6.11 --> 6.12.6 and have UD 2023.12.15 Plugin.

Everything is good with one exception. I have an unassigned device, it's a portable hard drive connected to the Server's USB Port.

This is the volume before the upgrade:

This is the volume after the upgrade:

It will mount but I cannot access the share. I took the drive an plugged in into my laptop's USB Port, I am able to view the contents without issue.

Is there anything I should be looking at? "New_Volume" is the name of share.

-

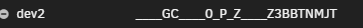

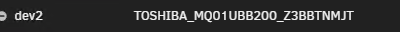

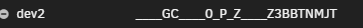

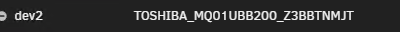

I just finished upgrading from 6.11 --> 6.12.6

Everything is good with one exception. I have an unassigned device, it's a portable hard drive connected to the Server's USB Port.

This is the volume before the upgrade:

This is the volume after the upgrade:

It will mount but I cannot access the share. I took the drive an plugged in into my laptop's USB Port, I am able to view the contents without issue.

Is there anything I should be looking at? "New_Volume" is the name of share.

-

Was using an SSD as a download drive(lots of write/read/delete cycles) a bad choice?

-

I pulled the drive and replaced it with a spinning drive.

All is well now.

Interesting though that even after the drive was unmounted from UD

that syslog filled up with this line(over & over) until I did a re-boot.

SMARTCTL IS USING A DEPRECATED SCSI IOCTL, PLEASE CONVERT IT TO SG_IO

-

This device(Lexar SSD), sdg, has failed 32 days after purchase. I have it installed as an unassigned device.

Is there any diagnostic(chkdsk) tools to examine the drive within UnRaid?

Here is an Excerpt from the System Log. When I un-mount & re-mount the drive there are no files or folders present.

This drive just serves as a download location for Sonarr, then files are moved to cache and then to the array(overnight).

The drive is a Lexar NQ100 SSD 1.92TB 2.5” SATA III Internal Solid State Drive, Up to 550MB/s Read (LNQ100X1920-RNNNU),

did I pick the wrong SSD to serve as a scratch drive? I tried to do the "Check File System" in Unassigned Devices, but Unraid tells

me that there are I/O Errors and it can't be read.

15:38:51 MediaTower kernel: I/O error, dev sdg, sector 357171856 op 0x0:(READ) flags 0x0 phys_seg 1 prio class 0 Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#7 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=DRIVER_OK cmd_age=0s Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#7 CDB: opcode=0x2a 2a 00 38 53 c4 08 00 04 68 00 Sep 6 15:38:51 MediaTower kernel: I/O error, dev sdg, sector 945013768 op 0x1:(WRITE) flags 0x100000 phys_seg 88 prio class 0 Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#8 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=DRIVER_OK cmd_age=0s Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#8 CDB: opcode=0x2a 2a 00 38 53 c8 70 00 07 a8 00 Sep 6 15:38:51 MediaTower kernel: I/O error, dev sdg, sector 945014896 op 0x1:(WRITE) flags 0x104000 phys_seg 168 prio class 0 Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#9 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=DRIVER_OK cmd_age=0s Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#9 CDB: opcode=0x2a 2a 00 38 53 d0 18 00 04 08 00 Sep 6 15:38:51 MediaTower kernel: I/O error, dev sdg, sector 945016856 op 0x1:(WRITE) flags 0x100000 phys_seg 88 prio class 0 Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#10 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=DRIVER_OK cmd_age=0s Sep 6 15:38:51 MediaTower kernel: sd 5:0:0:0: [sdg] tag#10 CDB: opcode=0x2a 2a 00 38 53 d4 20 00 08 18 00 Sep 6 15:38:51 MediaTower kernel: I/O error, dev sdg, sector 945017888 op 0x1:(WRITE) flags 0x104000 phys_seg 168 prio class 0 Sep 6 15:38:51 MediaTower kernel: sdg1: writeback error on inode 4609739, offset 0, sector 9922400 Sep 6 15:38:51 MediaTower kernel: XFS (sdg1): log I/O error -5 Sep 6 15:38:51 MediaTower kernel: XFS (sdg1): metadata I/O error in "xfs_buf_ioend+0x113/0x386 [xfs]" at daddr 0xa7abe630 len 32 error 5 Sep 6 15:38:51 MediaTower kernel: XFS (sdg1): Filesystem has been shut down due to log error (0x2).

-

I pulled the 2023.08.18a version - all good now. Thank You!

-

No I have not removed this disk. It is plugged in to one of the internal sata ports, inside the server.

-

root@MediaTower:~# cat /proc/mounts

rootfs / rootfs rw,size=32892692k,nr_inodes=8223173,inode64 0 0

proc /proc proc rw,relatime 0 0

sysfs /sys sysfs rw,relatime 0 0

tmpfs /run tmpfs rw,nosuid,nodev,noexec,relatime,size=32768k,mode=755,inode64 0 0

/dev/sda1 /boot vfat rw,noatime,nodiratime,fmask=0177,dmask=0077,codepage=437,iocharset=iso8859-1,shortname=mixed,flush,errors=remount-ro 0 0

/dev/loop0 /lib/firmware squashfs ro,relatime,errors=continue 0 0

overlay /lib/firmware overlay rw,relatime,lowerdir=/lib/firmware,upperdir=/var/local/overlay/lib/firmware,workdir=/var/local/overlay-work/lib/firmware 0 0

/dev/loop1 /lib/modules squashfs ro,relatime,errors=continue 0 0

overlay /lib/modules overlay rw,relatime,lowerdir=/lib/modules,upperdir=/var/local/overlay/lib/modules,workdir=/var/local/overlay-work/lib/modules 0 0

hugetlbfs /hugetlbfs hugetlbfs rw,relatime,pagesize=2M 0 0

devtmpfs /dev devtmpfs rw,relatime,size=8192k,nr_inodes=8223176,mode=755,inode64 0 0

devpts /dev/pts devpts rw,relatime,gid=5,mode=620,ptmxmode=000 0 0

tmpfs /dev/shm tmpfs rw,relatime,inode64 0 0

fusectl /sys/fs/fuse/connections fusectl rw,relatime 0 0

cgroup_root /sys/fs/cgroup tmpfs rw,relatime,size=8192k,mode=755,inode64 0 0

cpuset /sys/fs/cgroup/cpuset cgroup rw,relatime,cpuset 0 0

cpu /sys/fs/cgroup/cpu cgroup rw,relatime,cpu 0 0

cpuacct /sys/fs/cgroup/cpuacct cgroup rw,relatime,cpuacct 0 0

blkio /sys/fs/cgroup/blkio cgroup rw,relatime,blkio 0 0

memory /sys/fs/cgroup/memory cgroup rw,relatime,memory 0 0

devices /sys/fs/cgroup/devices cgroup rw,relatime,devices 0 0

freezer /sys/fs/cgroup/freezer cgroup rw,relatime,freezer 0 0

net_cls /sys/fs/cgroup/net_cls cgroup rw,relatime,net_cls 0 0

perf_event /sys/fs/cgroup/perf_event cgroup rw,relatime,perf_event 0 0

net_prio /sys/fs/cgroup/net_prio cgroup rw,relatime,net_prio 0 0

hugetlb /sys/fs/cgroup/hugetlb cgroup rw,relatime,hugetlb 0 0

pids /sys/fs/cgroup/pids cgroup rw,relatime,pids 0 0

tmpfs /var/log tmpfs rw,relatime,size=131072k,mode=755,inode64 0 0

cgroup /sys/fs/cgroup/elogind cgroup rw,nosuid,nodev,noexec,relatime,xattr,release_agent=/lib64/elogind/elogind-cgroups-agent,name=elogind 0 0

rootfs /mnt rootfs rw,size=32892692k,nr_inodes=8223173,inode64 0 0

tmpfs /mnt/disks tmpfs rw,relatime,size=1024k,inode64 0 0

tmpfs /mnt/remotes tmpfs rw,relatime,size=1024k,inode64 0 0

tmpfs /mnt/addons tmpfs rw,relatime,size=1024k,inode64 0 0

tmpfs /mnt/rootshare tmpfs rw,relatime,size=1024k,inode64 0 0

/dev/md1 /mnt/disk1 xfs rw,noatime,nouuid,attr2,inode64,logbufs=8,logbsize=32k,noquota 0 0

/dev/md2 /mnt/disk2 xfs rw,noatime,nouuid,attr2,inode64,logbufs=8,logbsize=32k,noquota 0 0

/dev/md3 /mnt/disk3 xfs rw,noatime,nouuid,attr2,inode64,logbufs=8,logbsize=32k,noquota 0 0

/dev/md4 /mnt/disk4 xfs rw,noatime,nouuid,attr2,inode64,logbufs=8,logbsize=32k,noquota 0 0

/dev/nvme0n1p1 /mnt/cache_nvme btrfs rw,noatime,ssd,space_cache=v2,subvolid=5,subvol=/ 0 0

/dev/sdh1 /mnt/cache_ssd btrfs rw,noatime,ssd,space_cache=v2,subvolid=5,subvol=/ 0 0

shfs /mnt/user0 fuse.shfs rw,nosuid,nodev,noatime,user_id=0,group_id=0,default_permissions,allow_other 0 0

shfs /mnt/user fuse.shfs rw,nosuid,nodev,noatime,user_id=0,group_id=0,default_permissions,allow_other 0 0

/dev/loop2 /var/lib/docker btrfs rw,noatime,ssd,space_cache=v2,subvolid=5,subvol=/ 0 0

/dev/loop2 /var/lib/docker/btrfs btrfs rw,noatime,ssd,space_cache=v2,subvolid=5,subvol=/ 0 0

/dev/loop3 /etc/libvirt btrfs rw,noatime,ssd,space_cache=v2,subvolid=5,subvol=/ 0 0

nsfs /run/docker/netns/default nsfs rw 0 0

//BACKUPBOB/Backup /mnt/remotes/BACKUPBOB_Backup cifs rw,relatime,vers=2.0,cache=strict,username=admin,uid=99,noforceuid,gid=100,noforcegid,addr=192.168.1.175,file_mode=0777,dir_mode=0777,iocharset=utf8,soft,nounix,mapposix,rsize=65536,wsize=65536,bsize=1048576,echo_interval=60,actimeo=1 0 0

//BACKUPBOB/Backup2 /mnt/remotes/BACKUPBOB_Backup2 cifs rw,relatime,vers=2.0,cache=strict,username=admin,uid=99,noforceuid,gid=100,noforcegid,addr=192.168.1.175,file_mode=0777,dir_mode=0777,iocharset=utf8,soft,nounix,mapposix,rsize=65536,wsize=65536,bsize=1048576,echo_interval=60,actimeo=1 0 0

/dev/sdg1 /mnt/disks/Download xfs rw,noatime,nodiratime,attr2,discard,inode64,logbufs=8,logbsize=32k,noquota 0 0

nsfs /run/docker/netns/ac6da8cf6195 nsfs rw 0 0

nsfs /run/docker/netns/df5c982cb68a nsfs rw 0 0

nsfs /run/docker/netns/336ac711bd2c nsfs rw 0 0

nsfs /run/docker/netns/e9fd8399113c nsfs rw 0 0

nsfs /run/docker/netns/b5bbea8aac8c nsfs rw 0 0

nsfs /run/docker/netns/2ac487c7cb94 nsfs rw 0 0

nsfs /run/docker/netns/7b212e614d43 nsfs rw 0 0

nsfs /run/docker/netns/3f3fbcc97452 nsfs rw 0 0

nsfs /run/docker/netns/9ec74d042238 nsfs rw 0 0

nsfs /run/docker/netns/263f36e72df0 nsfs rw 0 0

nsfs /run/docker/netns/6d8c0e2fb8c1 nsfs rw 0 0

nsfs /run/docker/netns/f8d0a384f778 nsfs rw 0 0

nsfs /run/docker/netns/c4ff2e1eabd8 nsfs rw 0 0

nsfs /run/docker/netns/e8e81a7281b3 nsfs rw 0 0 -

-

-

I updated UD to 2023.08.17a, I see the same faded "Reboot" symbol even after stopping unraid & full re-boot.

My scenario is a little different as the share is still accessible via SMB.

I like the ability to move, delete, rename files in this UD share from the Unraid UI. How do I get that feature back?

-

My array won't stop. Looking at the syslog I see this over and over. Disk 4 is completely empty, is excluded from all my shares, docker service is stopped, and VM Service is stopped.

What can I do?

Jul 25 11:02:24 MediaTower emhttpd: Retry unmounting disk share(s)...

Jul 25 11:02:29 MediaTower emhttpd: Unmounting disks...

Jul 25 11:02:29 MediaTower emhttpd: shcmd (21716): umount /mnt/disk4

Jul 25 11:02:29 MediaTower root: umount: /mnt/disk4: target is busy.

Jul 25 11:02:29 MediaTower emhttpd: shcmd (21716): exit status: 32

After 45 minutes the array finally stopped. False Alarm.

-

Same issue here, running "unraid-api restart" does not fix the problem for me.

-

I too would like to hear about plug-in vs baked-in functionality.

I am amazed that something like Unassigned Devices is not Native Functionality.

Also, any plans to add guardrails for long-time users like me?

I have been a user since 2009 starting with 1 TB drives.

I started with ReiserFS and never paid attention.

I've done numerous hardware and drive upgrades over the years with no issues.

Recently I picked up an 18TB drive. I had no idea that ReiserFS was being deprecated.

Pre-Cleared the Drive and all is good. Replaced an aging 8TB data drive with the 18TB Drive.

Started having issue shortly after the re-build was complete. I ended up putting the 8 TB Drive

back in using the "New Config" Method. Formatted the 18TB Drive as XFS then followed the guide to

migrate my data off of each drive using unBalance.I've already learned my lesson and suffered the pain of converting my data drives from Reiser to XFS.

I didn't lose any data but there was no warning or error message when trying to add an 18TB data drive

to the array with an invalid FS. What made it worse was the fact that I had already been running with an

18TB drive(in the Parity Slot) for months with no issues at all. In my mind I had no cause for concern when it

came time to replace 8TB with 18TB.-

1

1

-

1

1

-

-

After days of clearing data from Reiser Drives to XFS Drives i am finally finished.

All of my drives are formatted as XFS. Sigh of relief that I didn't lose any data.

There were a few things that confused me.

In summary

1. Pre-cleared an 18TB drive.

2. I stopped the array and replaced the 10TB drive with the 18TB drive.

3. Started the array to re-build the 18TB from parity.

i started getting errors shortly after the re-build completed. Soon after that the drive was marked as umountable.

4. Stopped the array, pulled the failed 18TB Drive.

5. New config--> Preserve All.

6. Put the 10TB drive back where the failed 18TB was located.

7. Mark parity as valid and start the array.

At this point I am back to original state.

I took the failed(by failed I mean drive was making all kinds of clicking sounds that were not normal) 18TB drive back to Best Buy for an Exchange.

New 18TB drive into the server for Pre-Clear.

Formatted Drive XFS.

Followed the process in the "Upgrade Fliesystem" document - emptied each Reiser drive, format, then fill with data.

i am a little suprised that i never got a warning when i attempted to re-build in steps 3 above.

i don't remember the format procedure the first time, i don't remember being asked for a File Syste Type.

-

Got it, thank you for clarification!

-

1

1

-

-

I am working my way thru upgrading my server from ReiserFS to XFS, following the steps in the "File System Conversion" Document.

I have 3 data drives(14/12/10) and a new 18TB drive formatted as XFS.

So far I have

1. Emptied the 14TB Drive using UnBalance

2. Stopped the Array

3. New Config to Preserve

4. Changed positions of the swap drive and the Reiser data drive I emptied.

5. Start Array with the "Parity is Valid" option.

Now I am ready to start emptying the 12TB Data Drive to the 14TB Swap Drive.

At this point I need to format the Empty 14TB Drive as XFS. How do I do this while it's already in the array?

Help interpret SysLog Entry

in General Support

Posted

Anyone have any ideas? This is showing up every day around the same time. I am not seeing any issues from anything else.

Can any of these identifiers be used to tie back to a specific Docker or Process that is running?

kernel: traps: unrar7[5211] trap invalid opcode ip:562a90ff41a1 sp:7fffe2a97c48 error:0 in unrar7[562a90ff1000+4b000]