Freddie

Members, 99 posts


Everything posted by Freddie

-

Yes, those are the instructions, but the formatting on the wiki makes it easy to misread them and try to delete a single file called "config/shadow config/smbpasswd". The OP said "Shadow config folder didn't exist".

-

Perhaps the password reset instructions need an edit. @Kastro, you seem to have tried to delete a single file called "config/shadow config/smbpasswd". I think the instruction should be to delete two files: "config/shadow" and "config/smbpasswd".
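To make the two-file reading concrete, here is a minimal sketch (not the wiki's own procedure). It assumes you pass the root of the flash drive, which is /boot on a running server, or wherever the drive is mounted when plugged into another machine. The function name is hypothetical.

```python
import os

# Two separate files, relative to the root of the unRAID flash drive.
RESET_FILES = ("config/shadow", "config/smbpasswd")

def reset_password_files(flash_root):
    """Delete config/shadow and config/smbpasswd if present; return the ones removed."""
    removed = []
    for rel in RESET_FILES:
        # Build the path from its components so each file is handled on its own.
        path = os.path.join(flash_root, *rel.split("/"))
        if os.path.isfile(path):
            os.remove(path)
            removed.append(rel)
    return removed
```

On the server itself that would be `reset_password_files("/boot")`. Any other files in config/ are left alone.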

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

The file modification timestamps do not change, but the directory timestamps do. The consld8 script uses diskmv, which in turn uses rsync with the -t option to copy files. The -t option preserves the modification times on all files. However, when files are copied to the destination disk and removed from the source disk, the filesystem updates the parent directory's modification time.

-
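Here is a small local demonstration of the timestamp behavior described in the diskmv reply above. It uses Python's `shutil.copy2` in place of rsync, since, like `rsync -t`, it carries over the file's modification time. The directory and file names are made up.

```python
import os
import shutil
import tempfile

OLD = 1_000_000_000  # an arbitrary fixed timestamp

def demo_move_times(base):
    """Copy a file with its mtime preserved, delete the source, and report both times."""
    src_dir = os.path.join(base, "disk1", "Movies")
    dst_dir = os.path.join(base, "disk5", "Movies")
    os.makedirs(src_dir)
    os.makedirs(dst_dir)
    src_file = os.path.join(src_dir, "film.mkv")
    with open(src_file, "wb") as f:
        f.write(b"data")
    # Give both the file and its parent directory an old, known mtime.
    os.utime(src_file, (OLD, OLD))
    os.utime(src_dir, (OLD, OLD))
    # copy2 copies the data plus metadata, including the file's mtime.
    dst_file = shutil.copy2(src_file, dst_dir)
    # Removing a directory entry modifies the directory, so its mtime is bumped.
    os.remove(src_file)
    return {
        "dst_file_mtime": os.stat(dst_file).st_mtime,
        "src_dir_mtime": os.stat(src_dir).st_mtime,
    }

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        times = demo_move_times(tmp)
        print("file mtime preserved:", times["dst_file_mtime"] == OLD)
        print("source directory mtime changed:", times["src_dir_mtime"] != OLD)
```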

It breaks down at the first requirement: in unRAID, the virtual device (/dev/md#) is underneath the filesystem, and the virtual device does not support FITRIM. unRAID would need to update the parity drive whenever the actual data drive (/dev/sdX) is trimmed. There was a little discussion about a year ago in this very thread if you want to think more about the details: http://lime-technology.com/forum/index.php?topic=34434.msg382654#msg382654

This Wikipedia article helped me differentiate between TRIM and garbage collection: https://en.wikipedia.org/wiki/Write_amplification#BG-GC

The only part that concerns me is the section about filesystem-aware garbage collection. From the referenced paper (pdf) and another one (pdf), it appears that some SSD devices did garbage collection after a Windows XP format operation. Windows XP did not have TRIM support, but it appears some devices were aware of the format operation anyway and proceeded to do garbage collection. So the question remains: are there any SSD devices that will do garbage collection on data that is still valid in unRAID? I think it is very unlikely, but impossible to prove.

My little piece of anecdotal evidence: I've been running for over a year with an SSD accelerator drive (Silicon Power 240GB S60). I've run monthly parity checks, once did checksums on parity-reconstructed data, and found no issues. Some data has been cycled off and on, and the drive has generally been over 95% full. I have no plans to stop using the SSD, but I also have full backups.
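The following toy model shows why parity would have to be updated when a data drive is trimmed. It treats single parity as a byte-wise XOR across data disks and uses 4-byte "disks" with arbitrary values. It also assumes that a trimmed SSD sector reads back as zeros while parity is left untouched.

```python
from functools import reduce

def parity(disks):
    """Single (XOR) parity: byte i of parity is the XOR of byte i on every disk."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*disks))

def reconstruct(disks, parity_block, missing):
    """Rebuild the disk at index `missing` from the remaining disks and parity."""
    survivors = [d for i, d in enumerate(disks) if i != missing]
    return parity(survivors + [parity_block])

if __name__ == "__main__":
    ssd = b"\x11\x22\x33\x44"  # data on the SSD that the filesystem no longer uses
    hdd = b"\xaa\xbb\xcc\xdd"  # data on another array disk
    p = parity([ssd, hdd])
    # The SSD now returns zeros for the trimmed sectors, but parity was never updated.
    trimmed = bytes(len(ssd))
    print("parity check passes:", parity([trimmed, hdd]) == p)
    print("rebuilt hdd is correct:", reconstruct([trimmed, hdd], p, 1) == hdd)
```

Both checks fail. The sync error is only an annoyance, but the wrong rebuild means a later disk failure would be reconstructed from bad parity.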

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

> So my question is what if there isn't enough space on disk8? I have a couple of tasks I would like to perform.
>
> * Move a bunch of TV shows I have spread across disks 4, 5, 8 & 10 to disks 1, 2 & 3, according to the allocation method I have chosen (high-water).
> * Move a bunch of movies from disks 4, 5, 6 & 7 to disks 5 & 6, again according to the high-water allocation method.
>
> Is there a way to achieve this using your tools? Or is there a better way?

Sorry, no. My tools completely bypass the allocation method, and you have to specify a single destination disk.

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

I'm sorry you are having trouble, Dave. I read through your thread and it looks painful. The next time I mess with consld8, I will consider adding an option to keep the source files.

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

> Thanks Freddie, I really appreciate the info. My 5TB drive should accommodate all of my Movies (at the moment) and then some. As presently configured w/o "user" directories/shares, would the command be "consld8 -f /mnt/Movies disk5" or do I need the "/user/" in the command line? Thanks so much for your response and wonderful work!
> Dave

Both diskmv and consld8 are designed to act on user share directories, so you must specify a valid user share path. I expect you can simply enable user shares. Then /mnt/user/Movies will be a valid user share path, and you should be able to run `diskmv -f -k /mnt/user/Movies disk1 disk5`.

-
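This sketch illustrates what "a valid user share path" means for the diskmv reply above. On unRAID, /mnt/user/<share> is the merged view of the same-named top-level directory on each disk, /mnt/diskN/<share>. The helper checks that a path is under /mnt/user and maps it to the two per-disk paths a move between disks would touch. It is hypothetical and is not diskmv's actual code.

```python
from pathlib import PurePosixPath

USER_ROOT = PurePosixPath("/mnt/user")

def disk_paths(user_path, src_disk, dest_disk):
    """Map a user share path to the matching source and destination disk paths."""
    p = PurePosixPath(user_path)
    try:
        rel = p.relative_to(USER_ROOT)
    except ValueError:
        raise ValueError(f"{user_path} is not under {USER_ROOT}") from None
    if not rel.parts:
        raise ValueError("path must name a user share, e.g. /mnt/user/Movies")
    return (str(PurePosixPath("/mnt") / src_disk / rel),
            str(PurePosixPath("/mnt") / dest_disk / rel))
```

For example, `disk_paths("/mnt/user/Movies", "disk1", "disk5")` gives `("/mnt/disk1/Movies", "/mnt/disk5/Movies")`. By contrast, `/mnt/Movies` from the question is rejected.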

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

No need to run diskmv first. When you run consld8, it will call diskmv as many times as needed to consolidate your source directory onto the destination disk. For example, `consld8 -f /mnt/user/Movies disk8` will move the Movies user share from all other disks to disk8 (if there is enough space).

-
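Here is a rough sketch of the consolidation idea from the consld8 reply above. It assumes array data disks are mounted at /mnt/diskN, finds every other data disk holding part of the share, and plans one diskmv run per disk. This is not consld8's actual code. It only builds command lists, omits diskmv's options, and does not check free space.

```python
import os

def consolidation_plan(share, dest_disk, mnt="/mnt"):
    """Return one hypothetical diskmv command per array disk holding part of `share`."""
    plan = []
    for disk in sorted(os.listdir(mnt)):
        # Only array data disks (disk1, disk2, ...); skips /mnt/user, /mnt/cache, /mnt/disks.
        if not (disk.startswith("disk") and disk[4:].isdigit()):
            continue
        if disk == dest_disk:
            continue
        if os.path.isdir(os.path.join(mnt, disk, share)):
            plan.append(["diskmv", f"/mnt/user/{share}", disk, dest_disk])
    return plan
```

`consolidation_plan("Movies", "disk8")` would list one command for each disk other than disk8 that has a Movies directory.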

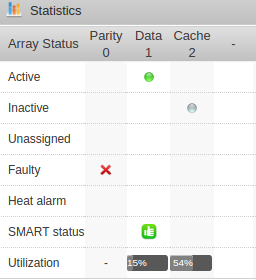

One of my drives has an increasing Current_Pending_Sector count, and it also failed the short SMART test. The Dashboard page still shows a green thumbs-up for the SMART status. I would expect to see a red or orange thumbs-down icon for a drive in this state. Is this a defect that should be changed? This is a test system with no parity, one cache SSD, and a single data drive with problems. iwer-diagnostics-20150921-1415.zip
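This sketches the check I would expect, under some assumptions. The input is the attribute table printed by `smartctl -A`, with RAW_VALUE as the tenth column, plus whether the last self-test passed. The rule and the names are hypothetical, not how the Dashboard actually computes its icon.

```python
WATCHED = {"Current_Pending_Sector"}  # attributes whose raw value should stay at zero

def smart_warnings(attribute_table, self_test_passed):
    """Return reasons a drive should not get a green thumbs-up (hypothetical rule)."""
    reasons = []
    for line in attribute_table.splitlines():
        fields = line.split()
        # Attribute rows start with a numeric ID; RAW_VALUE is the 10th column.
        if len(fields) >= 10 and fields[0].isdigit() and fields[1] in WATCHED:
            raw = fields[9]
            if raw.isdigit() and int(raw) > 0:
                reasons.append(f"{fields[1]} raw value is {raw}")
    if not self_test_passed:
        reasons.append("last SMART self-test failed")
    return reasons
```

An empty list would mean thumbs-up. Anything else would warrant the red or orange icon.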

-

The email account used to log in to Windows 10 is not the same as the Windows 10 username. To get your Windows username, enter `whoami` at the Windows command prompt. That should print your computername\username.

-

Definitely related. You need valid ssh host keys to do anything over ssh. Did you rename or remove the config/ssh directory on the flash drive and then reboot? No. Maybe. Especially if you continue to see problems with files stored on the usb stick.

-

SSH is a way to log in to the server from another computer (client) and get a command prompt. Like telnet but newer and more secure. If you haven't used ssh then deleting the directory will not matter. If you have used ssh you might get some warnings when you try to login over ssh again.

-

These key files are copied from the flash drive during startup. You can rename or remove the config/ssh directory on the flash drive, then reboot, and unRAID will generate new key files. This should take care of these error messages, but it will also make the server look different to any SSH clients. I don't know how the files became invalid; it may point to a corrupt flash drive.

-

[unRAID 6 beta14+] Unassigned Devices [former Auto Mount USB]

Freddie replied to gfjardim's topic in Plugin Support

I had the same symptoms after changing the cache drive from one SSD to another. It seems unRAID doesn't want to forget about the first cache device. I had to stop the array, unassign the cache device, start the array, stop the array, assign the new cache device, and start the array again. I don't think I had this plugin installed, but I'm not absolutely sure.

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

I came to the conclusion they are totally harmless. See here for discussion: https://github.com/trinapicot/unraid-diskmv/issues/2

-

Not exactly. If in use, diskmv won't do anything with a file. So duplicate files will not result from in use files. Killing the rsync will likely result in a duplicate file and the file on the destination disk might be a partial copy.
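After an interrupted move, for example one where rsync was killed, a sketch like this can list share files present on more than one data disk. It reports the size of each copy so a partial copy stands out. It assumes array disks are mounted at /mnt/disk1, /mnt/disk2, and so on. It is a hypothetical helper, not part of diskmv.

```python
import os
from collections import defaultdict

def find_duplicates(share, mnt="/mnt"):
    """Report files of `share` that exist on more than one array disk, with their sizes."""
    copies = defaultdict(list)
    for disk in sorted(os.listdir(mnt)):
        if not (disk.startswith("disk") and disk[4:].isdigit()):
            continue  # array data disks only
        root = os.path.join(mnt, disk, share)
        # os.walk yields nothing if this disk has no copy of the share.
        for dirpath, _dirnames, filenames in os.walk(root):
            for name in filenames:
                path = os.path.join(dirpath, name)
                copies[os.path.relpath(path, root)].append((disk, os.path.getsize(path)))
    return {rel: found for rel, found in copies.items() if len(found) > 1}
```

A duplicate whose sizes differ is the likely partial copy. Check the result before deleting anything.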

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

Yes, file attributes are preserved. That syntax should work.

-

[SOLVED] Windows 10 insider issues after build 10130

Freddie replied to danioj's topic in VM Engine (KVM)

Thanks gwl. I was seeing the same problem updating from build 10130. I changed from 2 CPU cores to 1, and the update to 10240 is now progressing. I also did some testing before starting the update. I had noticed that a recovery/reset produces the same symptoms: a few seconds after restart, a light-blue screen is displayed with a sad face and an error message. The VM then reboots and no changes have been made. I was able to use this to test various VM settings, and the only thing that avoided the light-blue sad face was using a single CPU core instead of 2.

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

There is no built-in log file mechanism in diskmv. You can redirect standard output to a file:

`diskmv /mnt/user/video disk1 disk2 > logfile.log`

Or use tee to keep standard output on your terminal and also get a log file:

`diskmv /mnt/user/video disk1 disk2 | tee logfile.log`

-

diskmv -- A set of utilities to move files between disks

Freddie replied to Freddie's topic in User Customizations

diskmv will skip any files that are in use. Is there any chance the left-behind files were being accessed by something else when you were trying to move them?

-

[unRAID 6 beta14+] Unassigned Devices [former Auto Mount USB]

Freddie replied to gfjardim's topic in Plugin Support

A precleared disk larger than 2.2TB doesn't really have a partition on it. It has a "GPT Protective MBR", but it doesn't have a GPT. The block tools interpret it as an MBR partition of maximum size. In reviewing this plugin's code, I see the partition size is reported in the disk row when there is only one partition. I would change it so that the disk row always reports the disk size. This would fix the disk size, but it would leave a confusing 2.2TB partition listed. You could also get really fancy: detect a valid preclear signature, note it in the FS field, and not show any partition.

-
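The 2.2TB figure in the reply about precleared disks falls straight out of the MBR format. Each partition entry stores its start and length as 32-bit counts of 512-byte sectors, so the largest partition is 2^32 - 1 sectors, i.e. 4294967295 * 512 = 2199023255040 bytes. Below is a minimal decoder for the first partition entry, following the standard MBR layout. The function name is hypothetical.

```python
import struct

SECTOR = 512

def first_partition(mbr):
    """Decode partition entry 1 of a 512-byte MBR as (type, start LBA, sector count)."""
    if len(mbr) != SECTOR or mbr[510:512] != b"\x55\xaa":
        raise ValueError("not a valid MBR sector")
    entry = mbr[446:462]
    # Entry layout: status, CHS start (3 bytes), type, CHS end (3 bytes),
    # start LBA (u32 little-endian), sector count (u32 little-endian).
    ptype = entry[4]
    start, count = struct.unpack_from("<II", entry, 8)
    return ptype, start, count

if __name__ == "__main__":
    # Rebuild an entry like the one fdisk shows for a precleared drive: type 0, start 1, 0xFFFFFFFF sectors.
    sample = bytearray(SECTOR)
    sample[510:512] = b"\x55\xaa"
    struct.pack_into("<II", sample, 446 + 8, 1, 0xFFFFFFFF)
    ptype, start, count = first_partition(bytes(sample))
    print(f"type=0x{ptype:02x} start={start} sectors={count} bytes={count * SECTOR}")
```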

[unRAID 6 beta14+] Unassigned Devices [former Auto Mount USB]

Freddie replied to gfjardim's topic in Plugin Support

> Now I want to nail this. Does df -h show the right size?
>
> Out of town for the weekend. Will let you know asap.
>
> Only waiting for you....
>
> Right... sorry, I already added the drive to my array

I have a precleared 4TB drive that also shows a disk size of 2.2TB. Like mr-hexen wrote in another post, df -h does not show any information on the disk because it is not mounted. Here is some other information on the disk:

    root@uwer:~# lsblk /dev/sdd
    NAME   MAJ:MIN RM  SIZE RO TYPE MOUNTPOINT
    sdd      8:48   0  3.7T  0 disk
    └─sdd1   8:49   0    2T  0 part
    root@uwer:~# blockdev --getsize64 /dev/sdd
    4000787030016
    root@uwer:~# blockdev --getsize64 /dev/sdd1
    2199023255040
    root@uwer:~# /boot/custom/preclear_disk.sh -t /dev/sdd
    Pre-Clear unRAID Disk /dev/sdd
    ################################################################## 1.15
    Model Family:     Seagate Desktop HDD.15
    Device Model:     ST4000DM000-1CD168
    Serial Number:    Z300119S
    LU WWN Device Id: 5 000c50 04f1e88ef
    Firmware Version: CC43
    User Capacity:    4,000,787,030,016 bytes [4.00 TB]

    Disk /dev/sdd: 4000.8 GB, 4000787030016 bytes
    255 heads, 63 sectors/track, 486401 cylinders, total 7814037168 sectors
    Units = sectors of 1 * 512 = 512 bytes
    Sector size (logical/physical): 512 bytes / 4096 bytes
    I/O size (minimum/optimal): 4096 bytes / 4096 bytes
    Disk identifier: 0x00000000

       Device Boot      Start         End      Blocks   Id  System
    /dev/sdd1               1  4294967295  2147483647+   0  Empty
    Partition 1 does not start on physical sector boundary.
    ########################################################################
    ========================================================================1.15
    ==
    == DISK /dev/sdd IS PRECLEARED with a GPT Protective MBR
    ==
    ============================================================================

I also saw some strange behavior when testing the preclear signature. The disk was in standby when the preclear test command was issued. While the drive was spinning up, the Unassigned Devices tab was dimmed except for a spinning wheel. Then the Unassigned Devices tab refreshed with the correct drive size. After another refresh, the Unassigned Devices tab again showed an incorrect drive size.

-
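To have the disk row always report the whole-disk size, one option on Linux is to read the whole device's sysfs `size` entry. It counts 512-byte sectors regardless of the drive's physical sector size. For the 4TB drive in the preclear output, 7814037168 sectors * 512 = 4000787030016 bytes, which matches `blockdev --getsize64 /dev/sdd`. This is a sketch only; the function name is hypothetical, and the plugin's own code may obtain the size differently.

```python
import os

def disk_size_bytes(dev, sysfs_block="/sys/block"):
    """Whole-disk size from sysfs; the 'size' file counts 512-byte sectors."""
    with open(os.path.join(sysfs_block, dev, "size")) as f:
        return int(f.read().strip()) * 512
```

`disk_size_bytes("sdd")` reads /sys/block/sdd/size, so the first partition's 2.2TB never enters the calculation.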

A single slash can make a big difference. It should be:

`rsync -nrcv /mnt/disk1/ /mnt/disk10/t >/boot/verify.txt`

Without the trailing slash on the source, rsync would copy the directory itself. With the slash, rsync copies the contents of the directory. @bjp999: perhaps your instruction post should be edited to include the slash.
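This small model of the trailing-slash rule, as described in the rsync man page, shows why the verify command in the rsync reply above goes wrong without the slash. `rsync_target` is a hypothetical helper that only computes destination paths and never calls rsync. The file name is made up.

```python
import posixpath

def rsync_target(src, dst, rel):
    """Destination of file `rel` (relative to src) for: rsync -r src dst."""
    if src.endswith("/"):
        # Trailing slash: the *contents* of src go directly into dst.
        return posixpath.join(dst, rel)
    # No trailing slash: the directory itself is recreated inside dst.
    return posixpath.join(dst, posixpath.basename(src), rel)

print(rsync_target("/mnt/disk1/", "/mnt/disk10/t", "Movies/a.mkv"))  # /mnt/disk10/t/Movies/a.mkv
print(rsync_target("/mnt/disk1", "/mnt/disk10/t", "Movies/a.mkv"))   # /mnt/disk10/t/disk1/Movies/a.mkv
```

Without the slash, the dry run compares against paths under /mnt/disk10/t/disk1/, which were never copied there, so every file would be listed as different.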

-

> Nope, it really depends on how the TRIM support is implemented, and it's really a situation-by-situation thing. Imagine the following: you have a data file written to sectors 1 through 100. You delete the file. Normally, on a regular drive, the delete simply does a write to the directory sectors (say sector 2000) to mark sectors 1 through 100 as available to store new data. This write is done through the OS, so the change to sector 2000 is covered as part of the parity update, and there is no change at all to sectors 1 through 100. They still contain the file data; it just doesn't show up in the directory listings.
>
> Now, on an OS with built-in TRIM support for SSDs, the OS sends an additional, different command indicating that sectors 1 through 100 can be trimmed, but it does not write zeros to those sectors. It's up to the drive at this point how to handle things. Some drives will erase those flash sectors; some might do nothing until a later point. There are no writes of zeros to sectors 1 through 100 from the OS perspective, so parity still has your original data file in the calculated parity. If the drive erases those sectors, then your parity is now incorrect.

I went through a similar thought process trying to answer my own question, but my thoughts included one key difference. When a sector of data is trimmed, the SSD firmware can do whatever it wants to the associated memory cells, but a read of the trimmed device sector MUST return zeros. That is a big assumption on my part, but it is the only thing that makes sense to me. From an unRAID perspective, if a TRIM command to an md device is successful in reaching the SSD, then parity must be updated as well. I assume there is no mechanism to update parity with TRIM, so I would hope that any TRIM command to an md device is blocked.

In my experience, an SSD works fine as a data drive. I recently had my SSD data drive disabled (I think due to a bad SATA cable going to a cache drive on the same controller). I ended up copying data from the emulated SSD, and a verification of md5 sums after the fact showed no problems. Keep in mind that when adding an SSD data drive, the clear or pre-clear process will fill the entire device with data, so write performance could be affected depending on how the firmware handles a full device. An SSD parity drive will always be full. But we are talking about accelerator drives here, and I think the idea is to accelerate reads, so write performance shouldn't be a big factor.

It is working great for me also. I'm guessing for some people it is not worth the effort or risk of moving data around, but it just feels good to consume media with no disks spinning. I spent a bunch of time tracking what was spinning up my disks unexpectedly. It was almost always access to the artwork I have stored next to video files. I export my Kodi database to single files, and whenever a new thumbnail or fanart file shows up on disk, each Kodi client will access that file when it is needed for the GUI. So when browsing through TV shows or movies, disks would spin up when I didn't expect it. So I moved all .jpg and .tbn files to the accelerator drive. I also move TV show seasons to the accelerator drive for binge watching.
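Here is a dry-run sketch of the artwork move described above. It only lists source/destination pairs for .jpg and .tbn files, keeping each file's share-relative path on the accelerator disk. That way the file still appears in the same place in the user share. Disk paths and the function name are hypothetical, and the actual move would still be done with a tool such as diskmv or rsync.

```python
import os

ARTWORK_SUFFIXES = {".jpg", ".tbn"}  # thumbnail and fanart files Kodi reads

def artwork_moves(disk_root, accel_root, share):
    """Pair each artwork file of `share` on one disk with the same path on the accelerator disk."""
    moves = []
    for dirpath, _dirnames, filenames in os.walk(os.path.join(disk_root, share)):
        for name in filenames:
            if os.path.splitext(name)[1].lower() in ARTWORK_SUFFIXES:
                src = os.path.join(dirpath, name)
                # Keep the share-relative path so the user share view is unchanged.
                dst = os.path.join(accel_root, os.path.relpath(src, disk_root))
                moves.append((src, dst))
    return sorted(moves)
```

For example, `artwork_moves("/mnt/disk3", "/mnt/disk9", "TV")` would plan moves from disk3 to a hypothetical accelerator disk9.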

-

> You might be onto something... According to this doc: https://docs.docker.com/articles/networking/ Docker already does exactly what you describe, although it looks to me it's mounted rw. Can you post output of: docker exec <container> mounts of one of your containers?
>
> ps. I'd also be grateful if you changed your sig

There is some information in the doc limetech linked that could explain the Docker DNS issue: if a container starts before dhcpd has updated the host resolv.conf, then the container will have a stale version of resolv.conf. A container stop and start will then pick up the changes. I'm guessing sparklyballs has a bunch of sparkly new containers created after Docker 1.5.0. I haven't seen any problems, but my containers are 3 months old, which is before unRAID was released with Docker 1.5.0 (I think it first became public in unRAID 6.0beta15, Apr 17).
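To check for the stale resolv.conf case described above, one approach is to compare the nameserver entries in the host's /etc/resolv.conf with the container's copy, for example the output of `docker exec <container> cat /etc/resolv.conf`. This is a minimal sketch working on file contents as strings. Both function names are hypothetical.

```python
def nameservers(resolv_conf_text):
    """Extract nameserver addresses from resolv.conf contents, in order."""
    servers = []
    for line in resolv_conf_text.splitlines():
        fields = line.split()
        # Comment lines start with '#' or ';', so they never match "nameserver".
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers

def is_stale(host_text, container_text):
    """True if the container's nameservers differ from the host's."""
    return nameservers(host_text) != nameservers(container_text)
```

If `is_stale` returns True, a container stop and start should pick up the host's current settings.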