-

Posts

423 -

Posts posted by rix

-

On 2/24/2019 at 1:30 AM, saarg said:

It will be available when it's available.

Asking when it's released won't make it appear any faster. We have a life also and it's not all of us that does the compiling.

As much as I support this notion, having little spare time myself: it's been a week.

Can we help you guys build and troubleshoot an RC5 build with the latest drivers?

I would gladly spin up an unraid VM and try this out if you gave me directions on the steps required.

-

Could you perchance help me set this up with the Handbrake container? I cannot find any nvenc option there...

-

<?xml version='1.0' encoding='UTF-8'?>
<domain type='kvm' id='7' xmlns:qemu='http://libvirt.org/schemas/domain/qemu/1.0'>
  <name>Windows</name>
  <uuid>HIDDEN</uuid>
  <description></description>
  <metadata>
    <vmtemplate xmlns="unraid" name="Windows 10" icon="Nvidia.png" os="windows10"/>
  </metadata>
  <memory unit='KiB'>16777216</memory>
  <currentMemory unit='KiB'>16777216</currentMemory>
  <memoryBacking>
    <nosharepages/>
  </memoryBacking>
  <vcpu placement='static'>10</vcpu>
  <cputune>
    <vcpupin vcpu='0' cpuset='6'/>
    <vcpupin vcpu='1' cpuset='7'/>
    <vcpupin vcpu='2' cpuset='8'/>
    <vcpupin vcpu='3' cpuset='9'/>
    <vcpupin vcpu='4' cpuset='10'/>
    <vcpupin vcpu='5' cpuset='11'/>
    <vcpupin vcpu='6' cpuset='12'/>
    <vcpupin vcpu='7' cpuset='13'/>
    <vcpupin vcpu='8' cpuset='14'/>
    <vcpupin vcpu='9' cpuset='15'/>
  </cputune>
  <resource>
    <partition>/machine</partition>
  </resource>
  <os>
    <type arch='x86_64' machine='pc-i440fx-3.1'>hvm</type>
    <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
    <nvram>/etc/libvirt/qemu/nvram/HIDDEN_VARS-pure-efi.fd</nvram>
  </os>
  <features>
    <acpi/>
    <apic/>
    <hyperv>
      <relaxed state='on'/>
      <vapic state='on'/>
      <spinlocks state='on' retries='8191'/>
      <vendor_id state='on' value='none'/>
    </hyperv>
  </features>
  <cpu mode='host-passthrough' check='none'>
    <topology sockets='1' cores='10' threads='1'/>
  </cpu>
  <clock offset='localtime'>
    <timer name='hypervclock' present='yes'/>
    <timer name='hpet' present='no'/>
  </clock>
  <on_poweroff>destroy</on_poweroff>
  <on_reboot>restart</on_reboot>
  <on_crash>restart</on_crash>
  <devices>
    <emulator>/usr/local/sbin/qemu</emulator>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source dev='/dev/disk/by-id/ata-SanDisk_SD8SN8U1T001122_161446440614'/>
      <backingStore/>
      <target dev='hdc' bus='sata'/>
      <boot order='1'/>
      <alias name='sata0-0-2'/>
      <address type='drive' controller='0' bus='0' target='0' unit='2'/>
    </disk>
    <disk type='block' device='disk'>
      <driver name='qemu' type='raw' cache='writeback'/>
      <source dev='/dev/disk/by-id/ata-ST8000DM0004-1ZC11G_ZKG00C3A'/>
      <backingStore/>
      <target dev='hdd' bus='sata'/>
      <alias name='sata0-0-3'/>
      <address type='drive' controller='0' bus='0' target='0' unit='3'/>
    </disk>
    <controller type='pci' index='0' model='pci-root'>
      <alias name='pci.0'/>
    </controller>
    <controller type='sata' index='0'>
      <alias name='sata0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
    </controller>
    <controller type='virtio-serial' index='0'>
      <alias name='virtio-serial0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
    </controller>
    <controller type='usb' index='0' model='ich9-ehci1'>
      <alias name='usb'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci1'>
      <alias name='usb'/>
      <master startport='0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci2'>
      <alias name='usb'/>
      <master startport='2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
    </controller>
    <controller type='usb' index='0' model='ich9-uhci3'>
      <alias name='usb'/>
      <master startport='4'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
    </controller>
    <interface type='bridge'>
      <mac address='RANDOM-MAC-ADR'/>
      <source bridge='br0'/>
      <target dev='vnet0'/>
      <model type='virtio'/>
      <alias name='net0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
    </interface>
    <serial type='pty'>
      <source path='/dev/pts/0'/>
      <target type='isa-serial' port='0'>
        <model name='isa-serial'/>
      </target>
      <alias name='serial0'/>
    </serial>
    <console type='pty' tty='/dev/pts/0'>
      <source path='/dev/pts/0'/>
      <target type='serial' port='0'/>
      <alias name='serial0'/>
    </console>
    <channel type='unix'>
      <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-7-Windows/org.qemu.guest_agent.0'/>
      <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/>
      <alias name='channel0'/>
      <address type='virtio-serial' controller='0' bus='0' port='1'/>
    </channel>
    <input type='tablet' bus='usb'>
      <alias name='input0'/>
      <address type='usb' bus='0' port='1'/>
    </input>
    <input type='mouse' bus='ps2'>
      <alias name='input1'/>
    </input>
    <input type='keyboard' bus='ps2'>
      <alias name='input2'/>
    </input>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x2f' slot='0x00' function='0x0'/>
      </source>
      <alias name='hostdev0'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x2f' slot='0x00' function='0x1'/>
      </source>
      <alias name='hostdev1'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x30' slot='0x00' function='0x3'/>
      </source>
      <alias name='hostdev2'/>
      <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
    </hostdev>
    <hostdev mode='subsystem' type='usb' managed='no'>
      <source>
        <vendor id='0x1b1c'/>
        <product id='0x0a14'/>
        <address bus='5' device='3'/>
      </source>
      <alias name='hostdev3'/>
      <address type='usb' bus='0' port='2'/>
    </hostdev>
    <hostdev mode='subsystem' type='usb' managed='no'>
      <source>
        <vendor id='0x28de'/>
        <product id='0x1142'/>
        <address bus='5' device='2'/>
      </source>
      <alias name='hostdev4'/>
      <address type='usb' bus='0' port='3'/>
    </hostdev>
    <memballoon model='none'/>
  </devices>
  <seclabel type='dynamic' model='dac' relabel='yes'>
    <label>+0:+100</label>
    <imagelabel>+0:+100</imagelabel>
  </seclabel>
  <qemu:commandline>
    <qemu:arg value='-global'/>
    <qemu:arg value='pcie-root-port.speed=8'/>
    <qemu:arg value='-global'/>
    <qemu:arg value='pcie-root-port.width=16'/>
  </qemu:commandline>
</domain>

Am I doing something wrong?

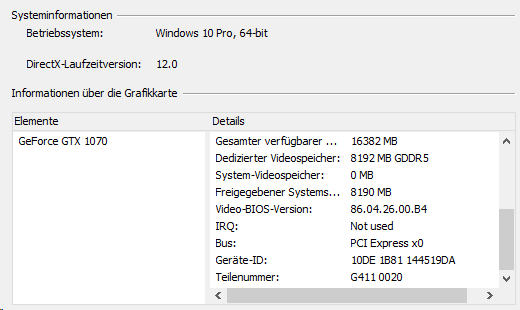

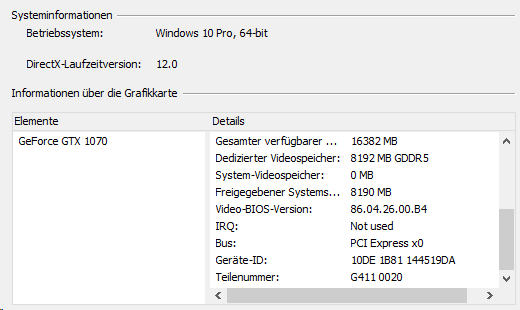

Still being reported as PCIE0 - I have however never experienced a significant performance drop going virtual in gaming.

-

10 hours ago, CHBMB said:

5. To be 100% safe, we recommend a dedicated GPU for transcoding that is not being used for any virtual machines, if you decide to ignore this, then you're on your own, we are not responsible for any problems that ensue.

If you don't stub the 1070 and don't pass it through to a container there shouldn't be any issues, BUT, we haven't tested this on Unraid GUI. I know it will work fine on the non-GUI boot.

Ok thanks for the clarification.

-

This is awesome and basically works fine.

Most importantly the unraid GUI boot now goes 1440p!

I cannot use this without stubbing my second GPU, though.

I have assigned the 1050Ti to my Plex container and the 1070 to my Windows VM. Starting up Windows breaks my system: a hard reset is required because VMs and Docker are no longer available in the web interface.

I would love to not stub the 1070, because with the driver it will finally stop its fans at idle, which it doesn't when stubbed.

Am I wrong in assuming this would work if I were not passing through the unstubbed 1070 to anything but the windows vm?

-

Thanks for the reply, I will rather go with an AIO that can be entirely controlled by my mainboard's headers.

-

I am looking into buying an x62 cooler. Is the RGB lighting working for you in general?

Have you been able to set up lighting / fan speed through USB passthrough (that should not be harmful at all)?

-

On 2/11/2019 at 4:02 PM, extremeaudio said:

Now makemkv seems to support UHD disc ripping. Anyone tried this with ripper? Does it work? Any first hand info would have been great before I take the plunge and buy a couple drives that won't work.

Let me know if you find out.

-

no, sorry. it sounds likely your cpu simply does not support virtualization.

-

Qemu 3.1 in the newest 6.7 RC is much faster for me!

-

7 minutes ago, realies said:

@rix, what does it take?

probably not much - they have added a few sections and sorted information in tiles. your plugin still works - but is misplaced at the top of the screen.

I'd rather have it beneath the "server hardware info" tile next to the ups stats.

an easier solution would be just copying the styling of one of these tiles and moving the psu stats to the bottom.

-

Any chance you could make this v.6.7.x ready?

-

2 minutes ago, emiel1900 said:

Okay I fixed it. The solution is to put the VM to emulated instead of host passthrough.

This has serious performance implications, though.

-

I still see this in 6.6.6. Assigning 16-20 GB slows my VM boot down to as much as 3 minutes... the first boot is always quick.

-

On 5/3/2018 at 3:46 PM, bigjme said:

echo 0 > /sys/class/vtconsole/vtcon0/bind

echo 0 > /sys/class/vtconsole/vtcon1/bind

echo efi-framebuffer.0 > /sys/bus/platform/drivers/efi-framebuffer/unbind

This has helped me with my High Sierra VM. I was so close to giving up. Thanks a lot!

-

On 1/16/2019 at 2:06 AM, HotelErotica said:

Whats the best nginx config to use for SyncLounge? I'm using LetsEncrypt docker and i can't seem to get the proxy pass to work for some reason, I've used a few different configs and was curious if you had a working example for this environment. Thanks abunch still new to all this.

EDIT: I also should add, the /ptserver proxypass works fine just for some reason the /ptweb proxy pass just goes back to the server root.

I have just double checked. The config from the github readme is working fine for me.

Make sure that the environment variables are set correctly to

DOMAIN=example.com

autoJoin=true

webroot=slweb

serverroot=slserver

autoJoinServer=https://example.com/slserver

so basically your nginx config will make sure that a local synclounge instance is served at https://example.com/slserver

not http://example.com/slserver, not https://www.example.com/slserver

double check DOMAIN and autoJoinServer

if you set this up according to the readme, this is the most likely mistake.
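a quick way to rule this mistake out is to compare the two values programmatically. this is only a minimal sketch in Python, assuming the variable names listed above; the function name `check_synclounge_env` is made up for illustration and is not part of SyncLounge or the container.

```python
from urllib.parse import urlsplit


def check_synclounge_env(env):
    """Return a list of mismatches between DOMAIN, serverroot and autoJoinServer.

    `env` is a plain dict of the container's environment variables.
    This helper is hypothetical and only illustrates the consistency check.
    """
    problems = []
    domain = env.get("DOMAIN", "")
    serverroot = env.get("serverroot", "")
    url = urlsplit(env.get("autoJoinServer", ""))

    # The server must be reachable via https, not http.
    if url.scheme != "https":
        problems.append("autoJoinServer must start with https://")
    # The host must match DOMAIN exactly (no extra "www." prefix).
    if url.netloc != domain:
        problems.append(f"host {url.netloc!r} does not match DOMAIN {domain!r}")
    # The path must point at the configured serverroot.
    if url.path.strip("/") != serverroot.strip("/"):
        problems.append(f"path {url.path!r} does not match serverroot {serverroot!r}")
    return problems
```

an empty list means the three values agree; anything else names the variable to look at first.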

-

Just restart the container afterwards. The interface will show again.

-

12 minutes ago, extremeaudio said:

Hey this is awesome. Just came across this one.

Where can the settings be edited? Can it rip direct to flac instead of wav first and then convert to flac and mp3 (if my understanding of the process is correct)

How does makemkv get updated? Is it automatic? How to edit makemkv options?

i have to update the docker image manually so it gets rebuilt, upon a new makemkv release.

for editing the ripper script, see above.

-

13 hours ago, Semajm85 said:

gosh, I wouldn't even know where to start......

haven't heard of aiff before your request, so me neither.

best I can do is lead you towards your goal.

ripper will place a script (ripper.sh) at the /config volume

this script is responsible for the automated ripping process.

you can find the command responsible for creating mp3/flac files at https://github.com/rix1337/docker-ripper/blob/eb5b89bebd46306521701cd8129ab4df4156ec75/root/ripper/ripper.sh#L100

all you need is to modify that line on your local machine.

how, I cannot tell you

-

Yes, just edit the script yourself to include an appropriate tool.

Ripper contains merely an example of what to do.

-

Thanks for the advice. I have now managed to set this up. I had simply failed to understand the options. 😅

-

lemme try to clear up the confusion.

how would i set this up:

-mover running daily to move all and any files to the array (regardless of space available on the cache)

-mover running hourly to only move all and any files to the array if cache is > 90 % full

as i understand the options, setting the 90% limit will also affect the regular daily mover process.

-

Hi Squid,

up until now I have used a custom script for the following:

- Run mover at 3.30 am to move all movable files

- Run mover script hourly to check if cache is at > 90% used

I want to prevent my cache completely filling up.
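The hourly check boils down to something like the following. This is only a rough sketch in Python, not my actual script and not anything the addon does: the cache mount point `/mnt/cache`, the mover path `/usr/local/sbin/mover` and the helper names are assumptions for illustration.

```python
import shutil
import subprocess

CACHE_PATH = "/mnt/cache"               # assumed cache mount point
MOVER_CMD = ["/usr/local/sbin/mover"]   # assumed location of the mover script
THRESHOLD_PERCENT = 90                  # only move when the cache is fuller than this


def usage_percent(total, used):
    """Return used space as a percentage of total (0.0 for an empty/unknown disk)."""
    return 100.0 * used / total if total else 0.0


def should_run_mover(total, used, threshold=THRESHOLD_PERCENT):
    """True when the cache is strictly more than `threshold` percent full."""
    return usage_percent(total, used) > threshold


def main():
    """Hourly entry point: start the mover only if the cache is above the threshold."""
    usage = shutil.disk_usage(CACHE_PATH)
    if should_run_mover(usage.total, usage.used):
        subprocess.run(MOVER_CMD, check=True)
```

An hourly cron entry would call `main()`, while the 3.30 am run stays a plain, unconditional mover call.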

I would like to stop using a script for that, but as of now, this is unsupported by the addon, correct?

Would be happy if you considered adding this.

Kind regards

-

Yes, this container has nothing to do with the software itself. It's just the shell that runs JDownloader. Any software-specific issues have to be dealt with by the JDownloader devs.

[Plugin] Linuxserver.io - Unraid Nvidia

in Plugin Support

Posted

I 100% support that. It's literally the same with my open source projects - that's why I offered time and resources to help.