
Posts posted by ksignorini


-

3 minutes ago, Hoopster said:

The personal parts are anonymized. Even full user share names are not shown in diagnostics.

One more thing... I did look through the files a bit. The drive that's failed is not showing a file in the zip at all (DISK 2) but I do see the other disks there, including DISK 3. At least in the SMART folder.

-

1 minute ago, Hoopster said:

The personal parts are anonymized. Even full user share names are not shown in diagnostics.

That's great!

-

Here it is. Just wasn't sure if there was anything personal in the file.

-

Which diags? The whole zip file or just a few parts?

-

I had a crapped-out drive being emulated. I got a replacement, but a second drive failed before I was able to replace the first one. I have one operating parity disk.

The first drive that died, DISK 3, is unimportant to me.

The second drive that died, DISK 2, is more important.

I've installed the replacement drive into the machine.

Is there any way I can forget about DISK 3 and just replace/rebuild DISK 2?

When I look at the drives on the Main tab, I can assign the new drive to DISK 2, but it shows "Wrong" underneath it. I've also set DISK 3 to "no device", but it still won't let me proceed. I need to do something to get the rest of the array back up and, hopefully, at least replace DISK 2.

-

Could you please add "restic"?

Thanks!

-

It's actually all been working great up until now, with 3 instances running.

Today I updated the container on one of the 3 instances and now I can't get it to restart. I don't know what things might have changed such that it won't start anymore. The other 2 (older) versions are still running fine.

There is nothing in the logs.

Where should I start looking for what broke?

-

5 hours ago, DZMM said:

It's been a while and I don't use pfsense anymore, but I think I created an alias that included her static IP address e.g. HIGH_PRIORITY_DEVICES, and then created floating rules (UDP and TCP) for the alias to assign traffic to the high priority queue

Thanks!

-

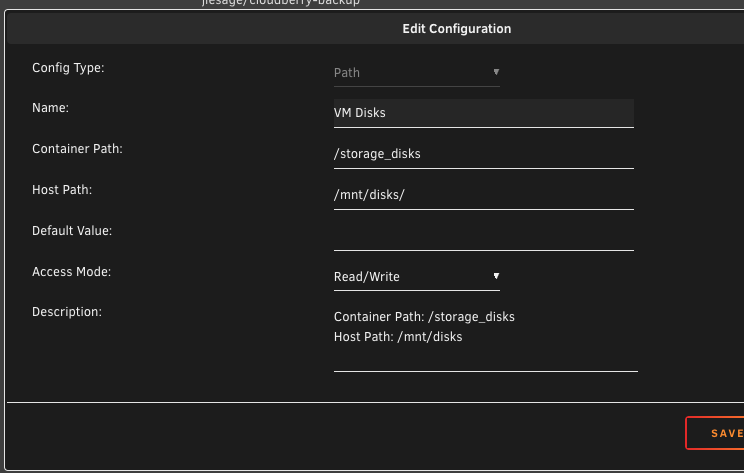

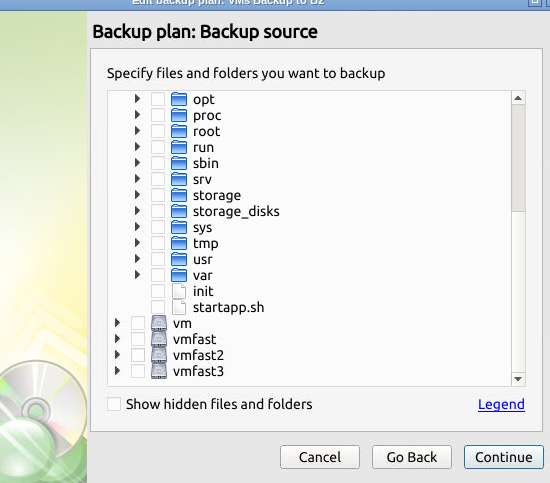

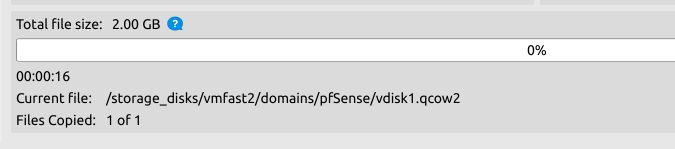

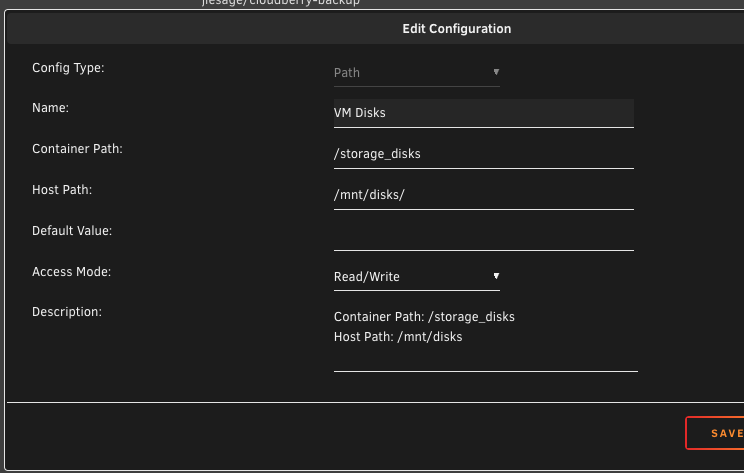

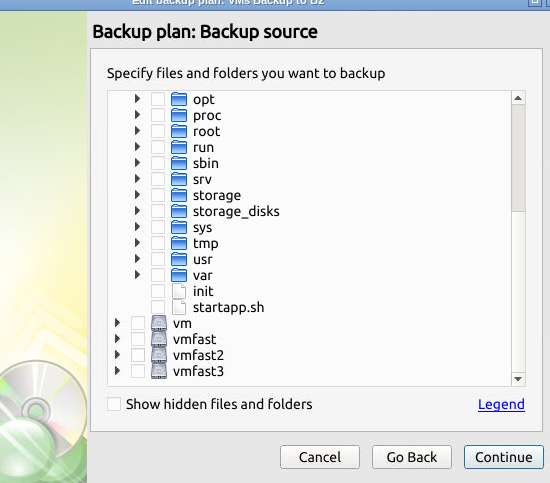

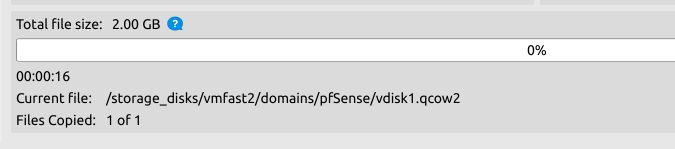

I also have another weird issue: when I map another path from the host into the container, rather than showing up where it's supposed to, it shows up separated in the list (its subdirectories are listed individually rather than under the mapped location in the container). However, when doing backups from these paths, they show the right path... very weird.

(In this case, I'm backing up some disk images, so I've mapped in my /mnt/disks/ path to pick some things from there. These are non-array disks.)

They should be mapped into /storage_disks but end up listed separately (vm, vmfast, vmfast2, vmfast3).

But when backing up, they show correctly:

-

On 4/8/2017 at 9:45 AM, DZMM said:

- I've created a rule that makes sure any traffic from my wife's smartphone or laptop goes into the high priority queue so I don't get any 'why is the internet so slow?' complaints

Old thread, but if you're still using pfSense or have the info available, how did you do this rule for your wife's computer/phone?

Thanks.

-

I've had some conversations with 360MSP regarding weird behaviour and they've instructed me to update to the latest version of their software.

Are there any plans to update the Docker image to use 3.2.3.148?

Am I able to update the software in the Docker myself somehow?

Thanks.

-

Is there any way to change the VRAM assigned to VNC for the VM? Believe it or not, Photos.app has reduced functionality unless there is more than 7 MB of VRAM.
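Unraid VMs are defined in libvirt domain XML (editable through the VM's XML view), where video memory is the `vram` attribute of the `<model>` element inside `<video>`, expressed in KiB. A minimal sketch, assuming your macOS VM has such a `<model>` element; whether the guest actually uses a larger value depends on the model type and is something to verify, not a guaranteed fix:

```python
import xml.etree.ElementTree as ET


def set_vram(domain_xml, vram_kib):
    """Return domain_xml with every <devices><video><model> vram attribute set (in KiB)."""
    root = ET.fromstring(domain_xml)
    models = root.findall("./devices/video/model")
    if not models:
        raise ValueError("no <video><model> element found in the domain XML")
    for model in models:
        model.set("vram", str(vram_kib))  # libvirt interprets vram in KiB
    return ET.tostring(root, encoding="unicode")
```

For example, 64 MB is 64 × 1024 = 65536 KiB, so `set_vram(xml_text, 65536)` would request 64 MB; paste the result back into the XML view and restart the VM.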

-

Say I created a macOS VM (call it macOS VM #1) and a 4th qcow2 disk image to store "stuff" on, formatted HFS+ Journaled/GUID. Then, I blew away this macOS VM (but not that data disk image) and then tried to map that qcow2 data disk image to a new macOS VM (call it macOS VM #2)...

That should work right?

So why would macOS VM #2 want to reinitialize the qcow2 data disk again?

Shouldn't it have kept its format from macOS VM #1 and be perfectly usable in macOS VM #2?
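One possible cause, offered as an assumption and not confirmed in this thread: if VM #2 defines the disk with type `raw` while the file is actually qcow2, the guest sees the qcow2 container bytes instead of the HFS+ volume and offers to initialize it. This sketch checks whether a file really starts with a qcow2 header (magic bytes `QFI\xfb`, big-endian version at offset 4, virtual size at offset 24) so you can compare that against the disk type set for VM #2:

```python
import struct

QCOW2_MAGIC = b"QFI\xfb"  # first four bytes of every qcow2 image


def qcow2_info(path):
    """Return (version, virtual_size_bytes) if `path` is a qcow2 image, else None."""
    with open(path, "rb") as f:
        header = f.read(32)  # magic, version, backing-file fields, cluster_bits, size
    if len(header) < 32 or header[:4] != QCOW2_MAGIC:
        return None  # not qcow2: a raw image or something else
    version = struct.unpack(">I", header[4:8])[0]
    virtual_size = struct.unpack(">Q", header[24:32])[0]
    return version, virtual_size
```

If `qcow2_info()` on the data disk (path of your choosing) returns a tuple, the disk entry in VM #2 should be qcow2, not raw.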

-

1 hour ago, ksignorini said:

I hit Provision and got a good message back, but I still see this. What do I need to do to fix this? (Why is it "Disabled until you Provision an unraid.net SSL Cert and set SSL-TLS to Auto" when both have been done?)

... and never mind. When I correctly forwarded the port on my router, it went away.

-


On 1/19/2019 at 9:16 AM, 1812 said:

technically, maybe very slightly faster, but you won't notice a difference. There are some (myself included) who feel that this is somehow less secure than doing it via software to access the network. It's an odd feeling for some that your server is just a software bridge away from exposing itself to the internet. Others point out that it's essentially the same thing, since the software just outputs via a hardware port to a switch with no filtering back to the server. There are discussions about it in several places on the internet. I'm not sure my belief is grounded in reality, but mine still accesses the pfSense VM via a switch.

How about just patching the onboard (Unraid) NIC to the 4-port pfSense NIC? Why go to the switch first?

-

7 minutes ago, docgyver said:

tl;dr;

Things that might help. Please read below for a better understanding of how each thing might help.

- Check sshd_config for AllowUser, DenyUser, AllowGroup, DenyGroup config entries and adjust as necessary
- Reset the ssh plugin:
  - back up /boot/config/plugins/ssh/<user folders>
  - go to the Plugins tab and remove the ssh plugin
  - add the plugin back
  - restore the user folders

I'll take a shot at the first question but need to know more about your setup.

First, as a complete guess it sounds like some 6.9.x behavior might have kicked in for you. Starting from 6.9.0 (from the release notes) "only root user is permitted to login via ssh". I'm not sure how that is being done but guess it is either with AllowUser, AllowGroup, DenyUser, and/or DenyGroup configuration items in sshd_config.

I don't have any of those but my config would not have been changed by the upgrade. Only new systems would have it blocked.

If one of my plugins is causing the problem, then is it "ssh.plg" or "denyhosts.plg"?

Deny hosts is more apt to block connections entirely (dropped before password prompt). For this reason I doubt it is Denyhosts.

Problems with the ssh plugin tend to be either breaking the ability to login as root (not what you are seeing) or user keys not working. Your experiment with a correct vs. incorrect password implies you aren't using keys either.

As to the second question, deny hosts uses /boot/config/plugins/denyhosts/denyhosts.cfg for its basic config. The default "working" directory defined in that config file is /usr/local/denyhosts. That folder is in volatile memory and will go away on its own. If you changed your working directory to a persistent store (e.g. /mnt/cache/apps), then you will need to clean that out manually.

Similarly, ssh.plg uses /boot/config/plugins/ssh/ssh.cfg for its basic config. User keys are stored in /boot/config/plugins/ssh/<user folder>.

If you use the "remove" option for either plugin then you start completely fresh. The ssh remove will remove /boot/config/plugins/ssh which includes the user key folders. Back it up if you don't have those public keys somewhere else.

Though I say "completely fresh" there is one thing which will persist even after a remove. The ssh config plugin persists changes to sshd_config to the copy managed by unraid itself. If you made a change, say to the port, then that change will remain even after removing the plugin.

That is especially important if PERMIT ROOT is set to NO. Removing the plugin would then require you to edit the sshd_config file manually. Hint: you might need to enable telnet to login if /boot is not exported.
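To follow the first suggestion quickly, here is a minimal sketch that lists the access-control directives in an sshd_config. Note that OpenSSH spells them `AllowUsers`, `DenyUsers`, `AllowGroups` and `DenyGroups` (plural); `PermitRootLogin` is included because root login comes up above. Assumption: the live config is at the standard `/etc/ssh/sshd_config`.

```python
import re

# Keywords that decide who may log in over SSH (sshd_config keywords are case-insensitive).
ACCESS_DIRECTIVES = {"allowusers", "denyusers", "allowgroups", "denygroups", "permitrootlogin"}


def access_rules(text):
    """Return (line_number, keyword, value) for every access-control directive in `text`.

    Match blocks are not interpreted: directives inside them are reported like any other.
    """
    found = []
    for lineno, raw in enumerate(text.splitlines(), start=1):
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank line or comment
        # A directive is "Keyword value" or "Keyword=value".
        parts = re.split(r"[\s=]+", line, maxsplit=1)
        if parts[0].lower() in ACCESS_DIRECTIVES:
            found.append((lineno, parts[0], parts[1] if len(parts) > 1 else ""))
    return found
```

Calling `access_rules(open("/etc/ssh/sshd_config").read())` on the server returns an empty list if none of these restrictions are set.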

I did many of these steps yesterday and it appears to be working again. I had to completely remove the SSH plugin, rename/remove unRAID's SSH config folder, and reboot. Then I reinstalled SSH plugin and I can now give users permission to SSH or not. That works.

(I'm going to double-check everything against your steps now.)

However, and this was not the case before, when I log in with a user account I get:

mylogin@Mylogins-MacBook-Pro-16 ~ % ssh [email protected]
[email protected]'s password:
Linux 5.10.28-Unraid.
-bash: cd: /root: Permission denied
-bash: /root/.bash_profile: Permission denied
mylogin@unRAIDBox:/home/mylogin$

(Names have been changed to protect the innocent.)

What script is causing it to try to log into root's folder when a user logs in? It's dumping the user into their /home/mylogin folder correctly at the end, but it appears to try to dump them into root's first.

Thanks.
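A bash login shell reads /etc/profile and then the first readable one of ~/.bash_profile, ~/.bash_login and ~/.profile, expanding `~` from `$HOME`. The two errors therefore suggest that HOME is /root by the time bash looks for the user's profile, so something in the system-wide login scripts may be setting HOME or changing into /root. As a diagnostic sketch (the file list and patterns are assumptions, not a known Unraid culprit), this scans those scripts for such lines:

```python
import glob
import re

# Lines that assign HOME, or cd into /root or $HOME (a bare "cd" also goes to $HOME).
SUSPECT = re.compile(r"\bHOME=|\bcd\b.*(/root|\$HOME|\$\{HOME\})|^\s*cd\s*$")


def suspicious_lines(paths):
    """Yield (path, line_number, line) for each line in `paths` that matches SUSPECT."""
    for path in paths:
        try:
            with open(path, errors="replace") as f:
                for lineno, line in enumerate(f, start=1):
                    if SUSPECT.search(line):
                        yield path, lineno, line.rstrip("\n")
        except OSError:
            continue  # missing or unreadable file: skip it


if __name__ == "__main__":
    # Assumption: these are the system-wide login scripts worth checking first.
    candidates = ["/etc/profile", *sorted(glob.glob("/etc/profile.d/*.sh"))]
    for path, lineno, line in suspicious_lines(candidates):
        print(f"{path}:{lineno}: {line}")
```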

-

The plugin was working for me, but for some reason it stopped allowing me to log in from user accounts. It's started, but when I ssh over, it accepts my user password (I know because I've tried incorrect passwords to check) and then I immediately get "Connection to 10.0.0.10 closed."

What would this be caused by?

Can I totally reset things so I'm back to square one and start over, perhaps? (I don't know where the configuration is saved or whether uninstalling/reinstalling gives a completely fresh start.)

Thanks.

(Note: root@ works just fine.)

-

20 hours ago, JonathanM said:

The terms to google are

nat reflection

hairpinning

nat loopback

along with your specific router.

It turns out my ISP's modem/router (T3200M) has a built-in bridged port (port 1) that doesn't even need to be configured as bridged, and by moving my inside router to port 1, NAT reflection started working. As a result, I've gone back to setting up Nextcloud and SWAG as intended, and all is well.

-

9 hours ago, JonathanM said:

The terms to google are

nat reflection

hairpinning

nat loopback

along with your specific router.

Couldn't find much. I actually have 2 routers (one is an ISP modem/router that I use for nothing but the modem; the other is my main router that sits in the ISP router's DMZ).

However, when configuring Nextcloud, if I change these lines:

'overwrite.cli.url' => 'https://10.0.0.10:444', <----- the way it was originally
'overwritehost' => 'nextcloud.mydomain.net', <----- remove this line entirely

and add to the trusted_domains array:

1 => 'nextloud.mydomain.net',

Then it works both from inside the network (using 10.0.0.10:444) and from outside it (using subdomain.domain.net, as far as I can tell by VPNing outside, anyway). What am I breaking by not having the URL and host overwritten with the external subdomain.domain name?

-

24 minutes ago, JonathanM said:

Why not? With the proper settings in your router it should work just fine.

Hm. What settings could cause this not to work? I'm forwarding all traffic on ports 80 and 443 to my unRAID box.

nextcloud.mydomain.net works great if I'm on a VPN from outside my local network or physically outside my local network, as do 4 other subdomains being used to access containers via SWAG. All of them hang when trying to access the outside subdomain from inside the network.

As well, why would 10.0.0.10:4434 be resolving to the outside subdomain?

Also of note: webDAV access to 10.0.0.10 works great using a webDAV client while inside the network.

-

I've installed Nextcloud and have SWAG correctly redirecting my external nextcloud.mydomain.net to Nextcloud when accessed from outside my network. When accessing from inside my network using 10.0.0.10:4434 (the port I chose for Nextcloud in the config) I can't access the webui.

I think it's trying to convert it to nextcloud.mydomain.net which of course can't be accessed from inside.

Ideas?
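One way to test the theory that Nextcloud is redirecting internal requests to the external name is to request the page without following redirects and look at the `Location` header. A minimal standard-library sketch; the address and port come from the post, and certificate checks are disabled on the assumption that the container serves a self-signed certificate on the LAN (diagnostics only):

```python
import http.client
import ssl
from urllib.parse import urlsplit


def redirect_target(host, port, path="/"):
    """Send one HTTPS GET without following redirects; return (status, Location or None)."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # a LAN IP won't match the certificate's name
    ctx.verify_mode = ssl.CERT_NONE  # assumption: self-signed certificate
    conn = http.client.HTTPSConnection(host, port, context=ctx, timeout=10)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        return resp.status, resp.getheader("Location")
    finally:
        conn.close()


def location_host(location):
    """Return the host name a Location header points at (None for relative redirects)."""
    return urlsplit(location).hostname if location else None
```

If `location_host(redirect_target("10.0.0.10", 4434)[1])` returns `nextcloud.mydomain.net`, the server itself is sending LAN clients to the external name.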

-

32 minutes ago, ksignorini said:

I can't seem to get this to run on any port other than 30000. I'm using "host" network and tried to remove and re-add the port as host port 30001/default 30001. (suggested above)

(Incidentally, I don't see "container port" even showing up in the port config.)

Is there a config file I can edit by hand? Anything else I can do?

I worked it out. Had to manually change the settings file for Foundry to target 30001. That's in the "config" folder: options.json. I suppose you could connect at 30000 first, change it in Foundry's settings screen, then adjust the port on the Docker and restart, too.
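The manual edit described above can be scripted. A minimal sketch, assuming options.json is a JSON object whose listening port lives under a top-level `"port"` key (check your own file) and that the container is stopped while you edit it:

```python
import json


def set_foundry_port(options_path, port):
    """Rewrite the top-level "port" value in Foundry's options.json; return the old value."""
    with open(options_path, encoding="utf-8") as f:
        options = json.load(f)
    old = options.get("port")
    options["port"] = port  # assumption: Foundry reads its listen port from this key
    with open(options_path, "w", encoding="utf-8") as f:
        json.dump(options, f, indent=2)
    return old
```

For example, `set_foundry_port("/path/to/config/options.json", 30001)` (hypothetical path), then set the container's host port to 30001 and start it.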

-

I can't seem to get this to run on any port other than 30000. I'm using "host" network and tried to remove and re-add the port as host port 30001/default 30001. (suggested above)

(Incidentally, I don't see "container port" even showing up in the port config.)

Is there a config file I can edit by hand? Anything else I can do?

-

Can't stop local IP from resolving to unraid.net

in General Support

I had connected My Servers to my Unraid box and it was resolving with somebigbunchofnumbers.unraid.net which used to work fine.

I now only want to access it with a local IP, but when I do, it still resolves to the unraid.net address. How can I fix this?

Thanks.