Posts posted by frodr

-

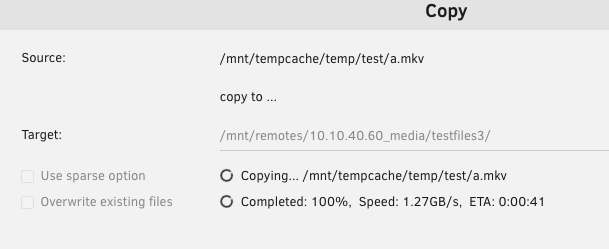

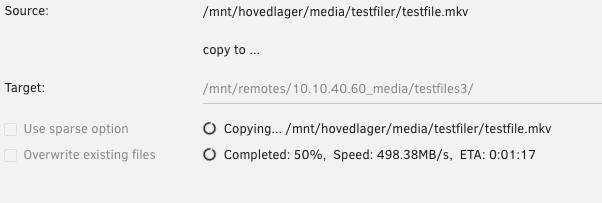

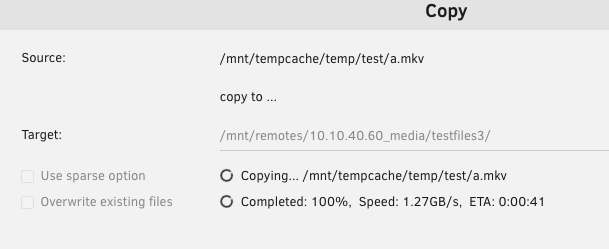

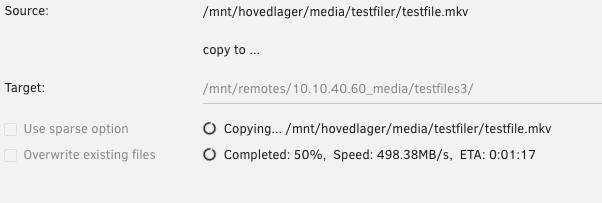

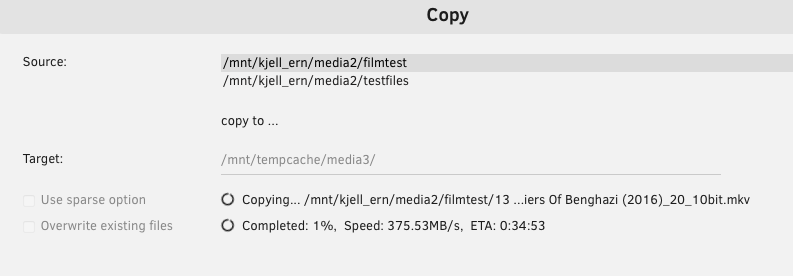

Some runs:

Testing receiving server1 main storage (10x raidz2) write performance: about 1.3 GB/s

Testing sending server2 (6x raidz2) read performance: about 500 MB/s

Server2 (6x hdd raidz2) write speed was about 700 MB/s.

This is quite surprising to me. I thought the write speed on the receiving server1 (10x raidz2) would be the limiting factor. But it's the read speed of the 6x hdd raidz2 on server2 that's the most limiting factor.
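To make the bottleneck reasoning concrete, here is a minimal sketch that picks the slowest stage from the two measured figures above and estimates how long a sync would take at that rate. The 10 TB data size and the function names are assumptions for illustration only, not figures from the tests.

```python
def bottleneck(stages_mb_s):
    """Return (name, rate) of the slowest stage in a copy pipeline."""
    name = min(stages_mb_s, key=stages_mb_s.get)
    return name, stages_mb_s[name]


def transfer_hours(size_tb, rate_mb_s):
    """Hours needed to move size_tb terabytes (decimal) at rate_mb_s MB/s."""
    return size_tb * 1_000_000 / rate_mb_s / 3600


# Measured values from the runs above, in MB/s.
stages = {"server2 read": 500, "server1 write": 1300}

name, rate = bottleneck(stages)
# 10 TB is a hypothetical library size, used only to show the scale.
print(f"Limiting stage: {name} at {rate} MB/s")
print(f"10 TB at that rate: {transfer_hours(10, rate):.1f} h")
```

A sequential copy can never run faster than its slowest stage, so speeding up the server1 pool alone would not change the result while server2 reads at about 500 MB/s.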

-

I'm running two servers with the same user data on both. If one dies, I have the other server on hand. The first server is online 24/7, except when I mess around. The second server also serves as a game VM.

Every now and then (every 2-3 months) I rebuild the main storage on Server1 to expand the pool. Write speed is a priority.

My goal is to saturate the 2 x 10Gb ports in Server1. As of today:

Server1: 10 x sata 8 TB ssd raidz2 pool.

Server2: 6 x sata 18 TB hdd raidz2 pool.

What would be the best pool setup on server1 for max write speed over 2 x 10 Gb NICs with FreeFileSync (or similar)? Server2 has a 25Gb NIC.
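For reference, a small sketch of what "saturation" means in MB/s. It converts the raw line rates to bytes per second and ignores protocol overhead. It also assumes both 10Gb ports can be used in parallel (for example via SMB multichannel or several streams), which is not confirmed here.

```python
def line_rate_mb_s(gbit_per_s, ports=1):
    """Raw line rate in MB/s (decimal units), before any protocol overhead."""
    return gbit_per_s * ports * 1000 / 8


server1_mb_s = line_rate_mb_s(10, ports=2)  # 2 x 10Gb on Server1
server2_mb_s = line_rate_mb_s(25)           # 1 x 25Gb on Server2

# The slower end of the link sets the network ceiling.
network_cap_mb_s = min(server1_mb_s, server2_mb_s)
print(f"Server1: {server1_mb_s:.0f} MB/s, Server2: {server2_mb_s:.0f} MB/s")
print(f"Network ceiling: {network_cap_mb_s:.0f} MB/s")
```

So the pool on server1 would need to sustain roughly 2.5 GB/s of writes, and server2 the same in reads, before the network becomes the limit.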

Current speed:

Happy for any feedback. //F

-

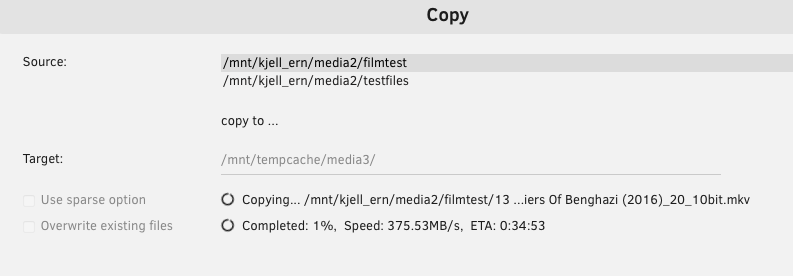

I removed the HBA card and mounted the NVMe drives in the three M.2 slots on the mobo to get a baseline.

cache: 2 x mirror ZFS nvme ssd

tempdrive: 1 x btrfs nvme ssd

kjell_ern: 10 x sata ssd in raidz2

The NVMe drives seem to be OK.

The reason for introducing the HBA in the first place was to rebuild the storage pool "kjell_ern" (where the media library lives) to include NVMe SSDs as meta cache to improve write speeds. Unless Supermicro Support comes up with a fix, I'll abandon the idea of an HBA, and save 30 W on top.

We can kinda call this case closed. Thanks for holding my hand.

-

Ok, I know a little bit more.

The HBA only works when there is a PCIe card in the slot that is connected to the x16 slot. The x16 slot then only runs at x8:

LnkSta: Speed 16GT/s, Width x8 (downgraded)

When I remove the PCIe card from the switched slot, the mobo hangs at code 94 before the BIOS. I have talked to Supermicro Support, which by the way responds very quickly these days: HighPoint products are not validated, but they are consulting a BIOS engineer.

This means that the HBA is running 6 x M.2s at x8. And there is some switching between the slots as well. The copy test was done between 4 of the M.2s. The last 2 are only sitting as unassigned devices.

-

16 hours ago, shpitz461 said:

Is this your Rocket 1508? It shows full Gen4 16x connection:

From what I understand, since PEX is used (bifurcation controller), all the drives share 16x, so in theory 4 drives will operate in full x4 speed, but more drives operating simultaneously will share 16x bandwidth and will slow down, since you can't fit 32x bandwidth in a 16x-wide bus.

Did you try to boot your machine with a live ubuntu or debian and see if you get the same performance?

Did you try removing all other pcie cards and test with the rocket solo?

How many drives have you populated in the controller? If not 8 drives, did you try to move the drives around to see if you get any better performance? i.e. slot 1 and 2, 2 and 3, etc...

Yes, Rocket 1508. Great that it's running x16. I thought that mobo slot7 only runs at x8 when slot4 is populated.

I will try Ubuntu or Debian and move the M.2s around when a ZFS scrub test is done, tomorrow it seems.

I have 6 x M.2 in the HBA, same as when tested.

Slot7 is the only slot available for x16. (Dear Intel, why can't we have a proper HEDT motherboard with iGPU CPUs?)
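The bandwidth-sharing argument in the quote can be put into numbers. This is a rough sketch, assuming PCIe Gen4 at 16 GT/s per lane with 128b/130b encoding, and a switch that splits the uplink evenly between active drives. Real throughput will be lower, and the function names are made up for illustration.

```python
GEN4_GT_PER_S = 16.0   # PCIe Gen4 raw rate per lane
ENCODING = 128 / 130   # 128b/130b line encoding


def lane_gb_per_s(gt_per_s=GEN4_GT_PER_S):
    """Usable bandwidth of one lane in GB/s, ignoring packet overhead."""
    return gt_per_s * ENCODING / 8


def per_drive_gb_per_s(active_drives, uplink_lanes=16, drive_lanes=4):
    """Best-case share per drive when all active drives transfer at once."""
    lane = lane_gb_per_s()
    return min(drive_lanes * lane, uplink_lanes * lane / active_drives)


for uplink in (16, 8):
    for drives in (4, 6, 8):
        share = per_drive_gb_per_s(drives, uplink_lanes=uplink)
        print(f"x{uplink} uplink, {drives} drives active: {share:.2f} GB/s each")
```

Even with 6 drives behind an x8 uplink, each drive's share stays above 2 GB/s on paper, which suggests the link width alone does not explain copy speeds below 500 MB/s.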

-

34 minutes ago, JorgeB said:

Either way it's not possible to have x32 lanes, still the performance should be much better if the x8 link was the only issue, but it could still be HBA related, it would be good to test with a couple of devices using the m.2 slots.

I will test moving the M.2s around. Strange thing: the mobo hangs on code 94 if the HBA is in slot7 and nothing is in the connected slot4. When adding a NIC in slot4, the mobo does not hang. Tried resetting CMOS, no change. Also tried a few BIOS settings without luck. I will have to contact Supermicro Support.

-

1 hour ago, JorgeB said:

LnkSta: Speed 16GT/s, Width x4

Link for all NVMe devices is not reporting as downgraded, but the HBA will have to share the bandwidth of a x16 slot, since 8 NVMe devices at x4 would require 32 lanes, I suggest installing a couple of devices on the board m.2 slots and retest, in case the HBA is not working correctly.

The HBA should run at x8 (slot7), not x16, as I have a PCIe card in the connecting PCIe slot4. With the PCIe card removed from the connecting slot (slot4), the HBA in slot7 should run at x16. But then the mobo startup stops before the BIOS settings with code 94. I will try to solve this issue.

-

7 hours ago, frodr said:

I might have an idea. Intel Core/W680 supports 20 PCIe lanes, right? The server has an HBA (x16), a NIC (x4) and a SATA card (x4). I guess the HBA might effectively drop down to x8.
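The lane arithmetic behind that guess, as a tiny sketch. It takes the 20-lane figure from the post at face value and does not model which slots hang off the chipset rather than the CPU, so treat it as a sanity check only.

```python
def lane_budget(available, cards):
    """Return (lanes requested, shortfall) for a set of PCIe cards."""
    requested = sum(cards.values())
    return requested, max(0, requested - available)


# Link widths as listed in the post.
cards = {"hba": 16, "nic": 4, "sata": 4}

requested, shortfall = lane_budget(20, cards)
print(f"Requested {requested} lanes, short by {shortfall}")
```

A shortfall like this is why a board would drop the x16 slot to x8 when the paired slot is populated.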

-

3 hours ago, JorgeB said:

That's much lower than I would expect, possibly something else going on.

Type:

lspci -vvv > /boot/lspci.txt

Then attach that file here
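Once the file exists, the interesting lines are the `LnkSta` entries. Below is a minimal sketch for pulling them out and flagging downgraded links. The sample text is hypothetical (only the two `LnkSta` lines mirror values quoted in this thread), and the regex assumes the usual `lspci -vvv` layout, which can differ between pciutils versions.

```python
import re

# Matches e.g. "LnkSta: Speed 16GT/s, Width x8 (downgraded)";
# newer lspci versions may also print a flag such as "(ok)" after the speed.
LNKSTA = re.compile(
    r"LnkSta:\s+Speed ([\d.]+GT/s)(?: \((\w+)\))?, Width (x\d+)(?: \((\w+)\))?"
)


def link_status(lspci_text):
    """Return (device, speed, width, downgraded) for each LnkSta line."""
    results = []
    device = None
    for line in lspci_text.splitlines():
        if line and not line[0].isspace():
            device = line.split(" ", 1)[0]  # device header starts at column 0
        match = LNKSTA.search(line)
        if match and device:
            speed, speed_flag, width, width_flag = match.groups()
            downgraded = "downgraded" in (speed_flag, width_flag)
            results.append((device, speed, width, downgraded))
    return results


# Hypothetical excerpt; real output has many more fields per device.
SAMPLE = """\
01:00.0 PCI bridge: example switch (hypothetical)
\tLnkSta:\tSpeed 16GT/s, Width x8 (downgraded)
04:00.0 Non-Volatile memory controller: example NVMe (hypothetical)
\tLnkSta:\tSpeed 16GT/s, Width x4
"""

for entry in link_status(SAMPLE):
    print(entry)
```

To run it against the real file, read `/boot/lspci.txt` and pass its contents to `link_status`.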

-

3 hours ago, JorgeB said:

It is, I would expect 2GB/s+ without any device bottlenecks.

Note that in my experience btrfs raid0 does not scale very well past a certain number of devices, I would try copying from one NVMe device to another, assuming they are good fast devices, and use pv instead, rsync is not built for speed:

pv /path/to/large/file > /path/to/destination

Ok, the test shows 420 - 480 MB/s from 2 x Kingston KC3000 M.2 2280 NVMe SSD 2TB in a ZFS mirror to 2 x WD Black SN850P NVMe 1TB in btrfs raid0. Tested in both directions.

What I forgot to tell you, and to remember myself, is that the NVMe drives sit on an HBA, a HighPoint Rocket 1508. It is a PCIe 4.0 HBA with 8 M.2 ports. Well, I now know the penalty for being able to populate 8 NVMe drives with good cooling on a W680 chipset mobo.

Thanks for following along. My use case isn't that dependent on max NVMe speed. (But I would quickly change the mobo if Intel included an iGPU in higher-I/O CPUs such as the Xeon 2400/3400.)

-

1 hour ago, JorgeB said:

Not clear if you are talking locally or over SMB? This looks local but the title mentions SMB.

That's never a straight calculation like that, it can vary with a lot of things, including devices used, what you are using to make the copy, and the CPU can have a large impact, single copy operations are single threaded, with a recent fast CPU you should see between 1 and 2 GB/s.

Sorry, it's local speed, not over SMB.

The single-threaded performance of the i7-13700 is quite good. Can you see/suggest any reason this performance is well below 1 - 2 GB/s?

-

Can anybody shed some light on this topic, please? If the speed above is what's to be expected, well, then I know that, and there is no need to chase improvements.

Cheers,

-

The copy speed on my servers is generally quite poor.

For Server1 in the signature: copying a single file from 10 x sata ssd raidz2 to 2 x NVMe ssd in btrfs raid0 should be significantly faster than the sub-400 MB/s I get. That's the speed of one single sata ssd. To my understanding the speed should be: 10 x 550 MB/s x 50% = 2750 MB/s. Right/wrong?
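The estimate above can be written out next to a second common rule of thumb, where only the data disks of a raidz2 (disks minus two parity) count towards streaming throughput. Both are paper ceilings, not predictions. The function names and the 400 MB/s "observed" value (taken from "below 400MB/s") are assumptions for illustration.

```python
def poster_estimate(disks, per_disk_mb_s, efficiency):
    """The estimate from the post: disks x per-disk speed x efficiency."""
    return disks * per_disk_mb_s * efficiency


def raidz_streaming_ceiling(disks, parity, per_disk_mb_s):
    """Rule-of-thumb ceiling: only the data disks contribute bandwidth."""
    return (disks - parity) * per_disk_mb_s


estimate = poster_estimate(10, 550, 0.5)          # 10 x 550 MB/s x 50%
ceiling = raidz_streaming_ceiling(10, 2, 550)     # 8 data disks in raidz2
observed = 400                                    # upper end of what was seen

print(f"Post estimate: {estimate:.0f} MB/s")
print(f"Data-disk ceiling: {ceiling} MB/s")
print(f"Observed is {observed / estimate:.0%} of the estimate")
```

Neither figure accounts for the copy tool, the CPU, or the destination pool, so a single copy operation can land well below both.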

Very nice to get views on this topic. //

-

32 minutes ago, ich777 said:

Can you be a bit more precise what "these" files are? Are these image files of some kind?

Since you've blurred the picture I can't see what file type these files are.

Usually qcow or image files in general are synced with their real maximum size which was specified when creating the image files.

The files are all .mkv. The progress bar also stops moving.

-

Syncing with DirSyncPro suddenly "stops", then some files are marked yellow with a blown-up size.

Happy for any ideas of what's wrong.

-

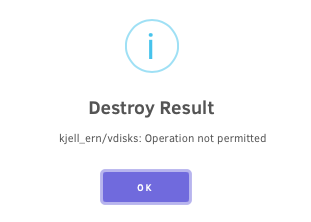

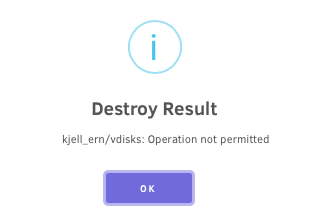

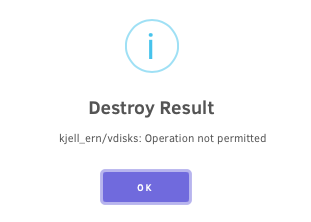

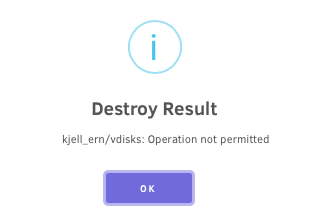

I can't destroy a dataset. Tried several datasets right after a restart. I'm not aware of any services or containers using them.

How do I go about destroying datasets? And how do I find out if they are in use? Happy for any help.

-

16 minutes ago, JorgeB said:

That suggests the issue is caused by a plugin, you can try removing/disabling them and re-test.

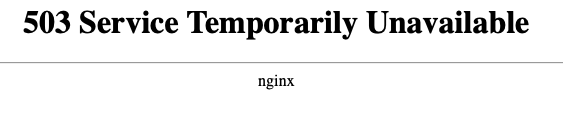

OK. I will test this during the coming days. It seems to me that nginx is restarting. If I wait 20-40 seconds, I can usually log in.

-

21 hours ago, JorgeB said:

Do you see the same after booting in safe mode?

It did not happen when booting into safe mode, tested 2 times.

-

4 hours ago, JorgeB said:

Do you see the same after booting in safe mode?

I will check tomorrow. A bit busy on the server now.

-

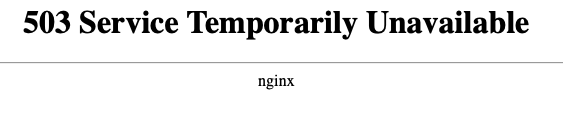

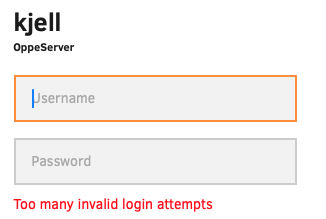

Most of the time I login I get this one:

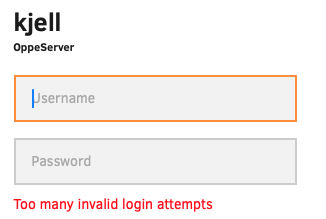

After several refreshes, and plugging user/pass, I get inn. This time I had this:

This came up because I plugged the other server password.

I can't add any diagnostics as of now. But how to fix the first issue? The second as well as guess.

The 503 issue as also on my other server.

Cheers,

Frode

-

How can I destroy a dataset? Thru ZFS Master I get this:

ZFS Master settings in destructive mode.

-

8 hours ago, frodr said:

I can't edit scripts. When hovering the pointer over the cog, "edit script" is there. But nothing happens when pushing the button.

It was just Safari having a hiccup. Strange, because it worked on the other server.

The Unraid Story: Lime Tech Co-CEO's Discuss the Past and Future of Unraid OS

in Unraid Blog and Uncast Show Discussion

Posted

Very nice and informative session. I kind of feel at home with Unraid. Imagine that we can get 2-3 Pro licences for the price of a hard drive.

Really looking forward to version 7.0