Riotz

Posts: 31


Posts posted by Riotz

-

-

Yes please. I am getting tired of the constant reminders to upgrade to RC7. I can't, because my Plex server would lose HW transcoding.

-

Where it at yo? 😈

-

Anyone else seeing this issue on their server?

When I apply the fix it doesn't work. Not sure if it is because there is a space in the URL:

https://raw.githubusercontent.com/ninthwalker/docker-templates/master/Ninthwalker/NowShowing v2.xml

It seems to be working otherwise, but I really don't like warnings hanging around and I also don't want to hit ignore.

Thanks!

-

4 hours ago, sauso said:

Stupid question but did your external IP change? I get cloudflare message only if my Internet is down or my IP has changed.

It turns out the configuration on my UniFi controller needed to be reloaded. Traffic was not passing through port 443. Now I have a new problem with the container...

Is there a way to fix this?

-

On 8/26/2019 at 5:59 PM, aptalca said:

Turn off cloudflare proxy (orange cloud)?

That's what we recommend anyway. If you want to proxy through cloudflare, we don't officially support that (ie. you're on your own).

I did this and I can connect to it internally but not from any outside network. It was working perfectly while proxied (orange cloud) through Cloudflare, and I don't get why it broke all of a sudden. I guess I will look elsewhere for an explanation.

-

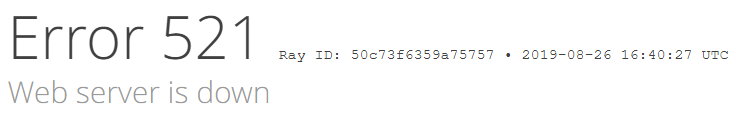

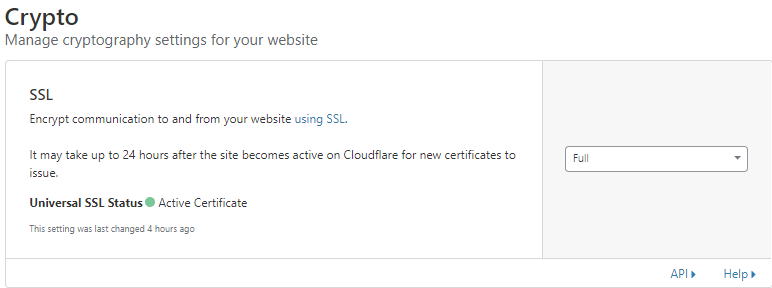

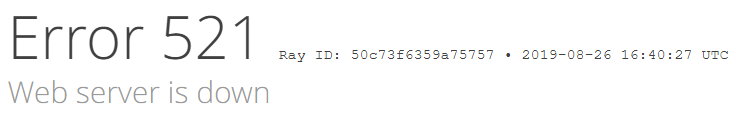

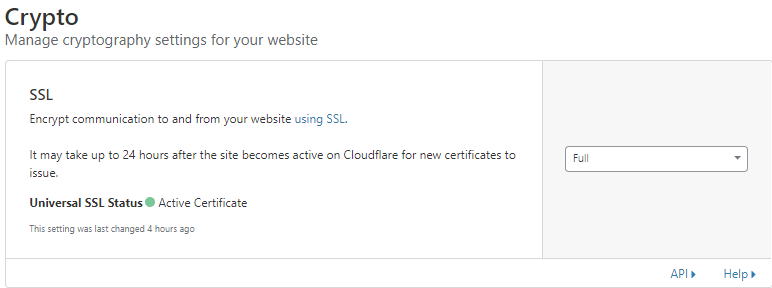

Hello, my server recently rebooted ungracefully due to a power outage, and now CloudFlare is reporting that the server is down for my domains. I have Crypto set to Full, as Flexible does not work for me, if that matters.

I tracked the error down to mean that WordPress is blocking the IPs from CloudFlare, so I tried to add the CloudFlare IPs into NGINX, but I can't get it to work. Here is how I did that:

Created the file cloudflare-allow.conf with the whitelisted CloudFlare IPs (contents below) and put it in the same location as ssl.conf and nginx.conf:

    # https://www.cloudflare.com/ips
    # IPv4
    allow 173.245.48.0/20;
    allow 103.21.244.0/22;
    allow 103.22.200.0/22;
    allow 103.31.4.0/22;
    allow 141.101.64.0/18;
    allow 108.162.192.0/18;
    allow 190.93.240.0/20;
    allow 188.114.96.0/20;
    allow 197.234.240.0/22;
    allow 198.41.128.0/17;
    allow 162.158.0.0/15;
    allow 104.16.0.0/12;
    allow 172.64.0.0/13;
    allow 131.0.72.0/22;
    # IPv6
    allow 2400:cb00::/32;
    allow 2606:4700::/32;
    allow 2803:f800::/32;
    allow 2405:b500::/32;
    allow 2405:8100::/32;
    allow 2a06:98c0::/29;
    allow 2c0f:f248::/32;

Edited the site-conf default file for my main site to add the lines:

    include /config/nginx/cloudflare-allow.conf;
    deny all;

Restarted the LetsEncrypt container.

This did not work so I am not sure I am doing this correctly. Can anyone lend a hand to advise the proper way to do this or if I am even barking up the right tree?

Thanks,
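For anyone following the same steps, here is a minimal sketch of where those two lines would sit inside a server block. The `server_name` value and the empty `location` are placeholders (assumptions), not taken from the actual site config. nginx checks `allow` and `deny` rules in sequence and stops at the first match, comparing them against the address it sees for the connecting client, so the include has to come before `deny all;`.

```nginx
# Sketch only: placement of the Cloudflare allow list in a site config.
# The server_name below is a placeholder (assumption).
server {
    listen 443 ssl;
    listen [::]:443 ssl;

    server_name example.mydomain.com;

    include /config/nginx/ssl.conf;

    # Rules are evaluated top to bottom and the first match wins,
    # so the allow list is included before the catch-all deny.
    include /config/nginx/cloudflare-allow.conf;
    deny all;

    location / {
        # site-specific directives go here
    }
}
```

With this ordering, a request from any address outside the listed ranges is rejected with a 403 before it reaches the site.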

-

51 minutes ago, aptalca said:

GitHub readme and the docker hub pages both have changelogs

Thanks!

-

On 8/2/2019 at 2:57 PM, aptalca said:

We can add that, too if it doesn't add too much bloat

Hello, there have been quite a few updates to the container since this post. Can you please tell me if this was enabled? If so, how would one activate it?

Also, is there somewhere that I can check for release notes on updates to the container?

-

4 hours ago, aptalca said:

Having additional modules doesn't really hurt anything. We're going for simplicity. Variable for modules would increase complexity

Exactly. Also we’re asking for them to be included, not enabled. Having the option to use it or not is all we want.

-

22 minutes ago, aptalca said:

We can add that, too if it doesn't add too much bloat

Awesome! Thanks so much. Having that integrated will make this WordPress project seamless, and allow me to use that user credential database for other services.

-

19 hours ago, aptalca said:

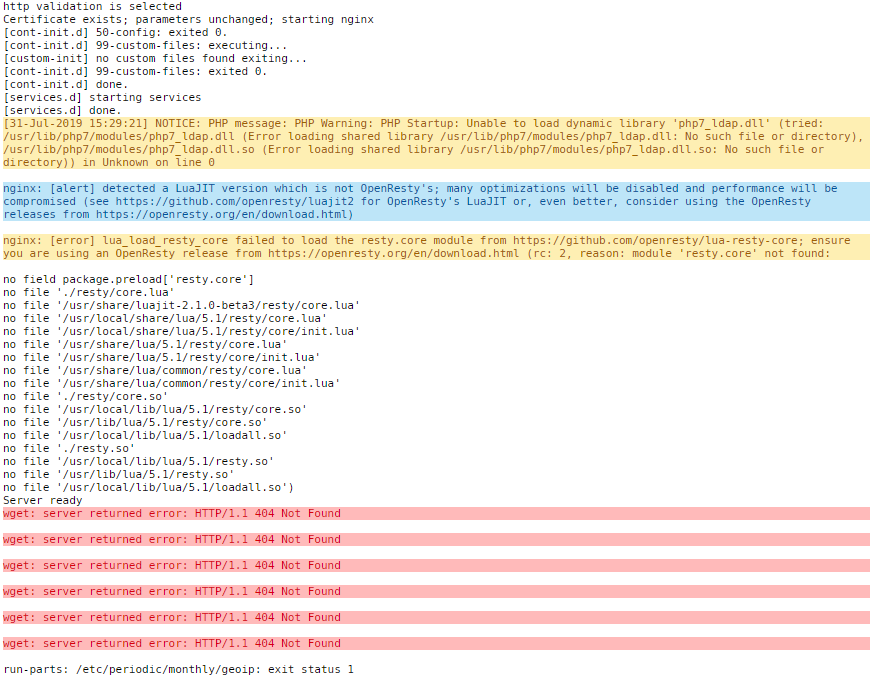

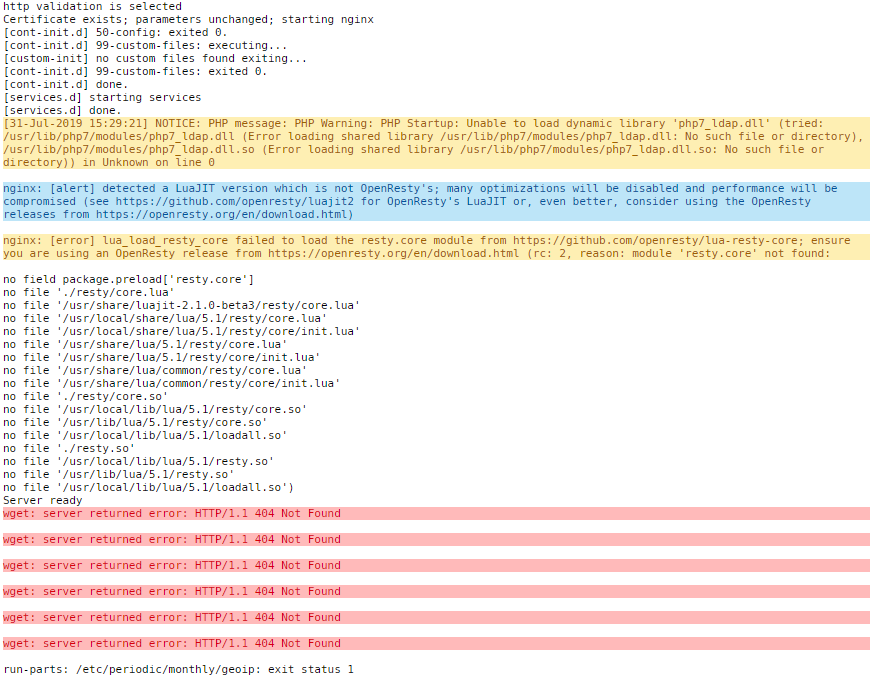

Looks like attempts to update the geoip db are failing. Harmless but we'll look into it

Thanks so much. What about php7_ldap integration into the container? I would really love to use the LDAP for Plex container with my WordPress sites.

Thanks again,

-

Can anyone please tell me why I am seeing these wget errors in my log? Also, any chance of getting php7_ldap added to the container?

Thanks,

-

-

Hey guys, is there any way we can get php7-ldap integration? Or if it's already possible to use it, can someone explain how to turn it on? Here is my use case:

I have a WordPress site where I am trying to use LDAPforPlex (that was just added to CA) to allow my Plex users to log in using their Plex accounts. Unfortunately, the WordPress plugin I am trying to use is telling me that the LDAP extension is not loaded and that without it I would not be able to query an LDAP server.

Any help is greatly appreciated as always.

-

On 7/2/2019 at 6:24 PM, aptalca said:

Check line 4 of the default site config

Thanks I got it working

-

5 hours ago, blaine07 said:

That's funny because a bit back my certs all expired. Had to add a fictitious site to Letsencrypt, start service and let it error, delete fictitious and restart again to get all the certs to renew.

Good to know! But I thought LetsEncrypt kept the certs updated automagically.

-

Is there a way to configure the conf files to redirect http requests to https? For example, when I go to http://subdomain.domain.com I want it to automatically forward to https://subdomain.domain.com

Thanks,
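One common way to do this in nginx is a separate server block that listens on port 80 and answers every request with a permanent redirect. A minimal sketch, assuming the container receives plain HTTP on port 80 and using the hostname from the question as the `server_name`:

```nginx
# Redirect plain-HTTP requests to HTTPS (sketch; adjust names to your setup).
server {
    listen 80;
    listen [::]:80;

    # Hostname taken from the example in the question.
    server_name subdomain.domain.com;

    # Keep the requested host and path, switch the scheme to https.
    return 301 https://$host$request_uri;
}
```

With this in place, a request for http://subdomain.domain.com is answered with a 301 pointing at https://subdomain.domain.com.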

-

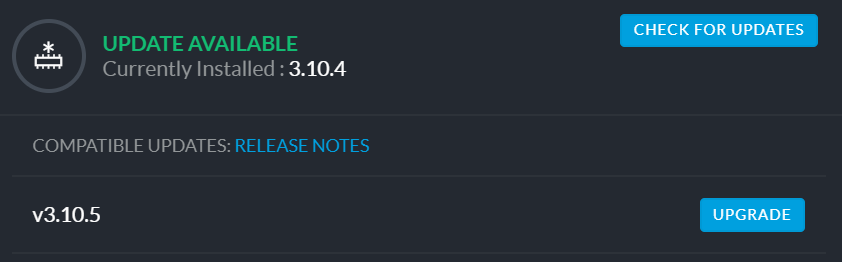

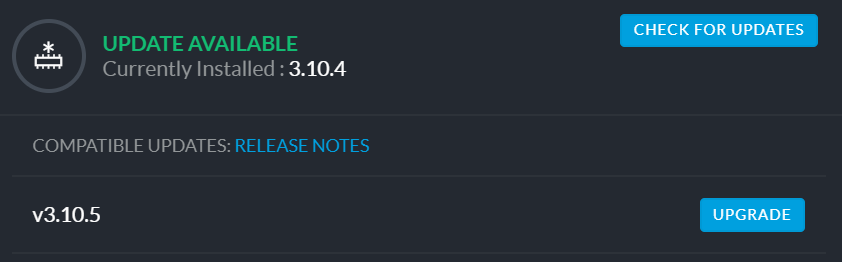

Looks like there's a new update. Can we get the docker updated, please?

-

On 7/4/2017 at 2:36 PM, scottc said:

You don't need to run 2 instances, just map your movies folder and kids_movies

so do something like this in your docker setup (depending on how your shares are set up)

/movies -> /mnt/user/movies

/kids_movies -> /mnt/user/kids_movies

Then in Radarr add a movie, and in the path dropdown choose kids_movies when you're adding kids movies and movies for other movies

If you do not see movies or kids_movies in the dropdown, then choose the select different path option and click the folder button to search for the path and find the movies or kids_movies path

So I have been reading through to find a solution to my problem with Radarr with Ombi integration and came upon this post. I am not sure if this is still relevant, so I am asking for some assistance. Here is my situation:

I have a Plex server that has multiple libraries broken up like this:

4K Movies

HD Movies

Kids Movies

SD Movies

Marvel Movies

Marvel Animated

DC Movies

DC Animated

What I would like is that when someone requests a movie in Ombi and the request reaches Radarr, it downloads the movie and moves it to the appropriate library. Is this even possible?

Thanks

-

12 hours ago, aptalca said:

In your sonarr container settings, open advanced and into extra arguments enter --network-alias=sonarr

Thank you! This worked perfectly! Now I just need to figure out how to make Proxy-Confs for the apps that don't have templates. Gonna try to figure this out today.

-

I FINALLY FIGURED IT OUT!!! It was my UniFi USG that was the problem. It needed to be rebooted to pass the ports properly. Once it rebooted I was able to access Sonarr immediately using my subdomain. The only problem I have now is a cosmetic one, but it's going to irritate my OCD: it only works if the Docker container name is lowercase sonarr. I like to have my dockers properly labeled, so I would like it to be Sonarr. I went into the Proxy-Conf folder and changed set $upstream_sonarr sonarr; to set $upstream_sonarr Sonarr; in both locations, then restarted LetsEncrypt after changing the container name back to Sonarr, but I get a 502 Bad Gateway when I do. Is there something I can do to fix this naming dilemma?

Also, does anyone have a proxy-conf file for NowShowingv2?

Thanks,
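For apps without a template, a proxy-conf can usually be modeled on the Sonarr one. The following is only a sketch under stated assumptions: the file name, the `$upstream_nowshowing` variable, the container name `nowshowing`, and the port `8080` are all hypothetical placeholders, not values confirmed for NowShowing v2. The name after `set` has to be one that Docker's embedded DNS (the `resolver 127.0.0.11` line) can resolve, which in practice means the container name or a network alias.

```nginx
# Hypothetical nowshowing.subdomain.conf, modeled on the Sonarr template.
# Container name and port are placeholders (assumptions); replace them
# with the values your container actually uses.
server {
    listen 443 ssl;
    listen [::]:443 ssl;

    server_name nowshowing.*;

    include /config/nginx/ssl.conf;

    client_max_body_size 0;

    location / {
        include /config/nginx/proxy.conf;
        # Docker's embedded DNS resolves container names and aliases.
        resolver 127.0.0.11 valid=30s;
        set $upstream_nowshowing nowshowing;
        proxy_pass http://$upstream_nowshowing:8080;
    }
}
```

As with the Sonarr file, this assumes a CNAME exists for the nowshowing subdomain and that the subdomain is included in the certificate.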

-


20 hours ago, aptalca said:

If you need help with troubleshooting, start with posting your docker log

Also, it's not a good idea to test a reverse proxy right off the bat.

Set up the container first, check the logs to make sure the certs are retrieved correctly.

Then test to make sure you can get to the placeholder homepage.

Only then you should test the reverse proxy.

Step by step.

And here's a detailed guide that covers many scenarios: https://blog.linuxserver.io/2019/04/25/letsencrypt-nginx-starter-guide/

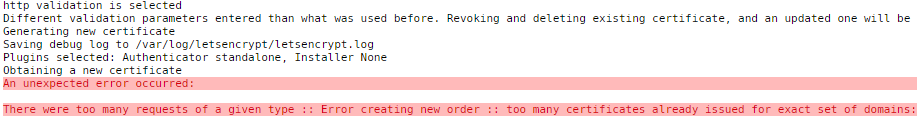

Weird, I wrote a reply but it didn't get posted. Here I go again then. So I am adding my docker log here for reference. I am able to see Server Ready each time the docker is started.

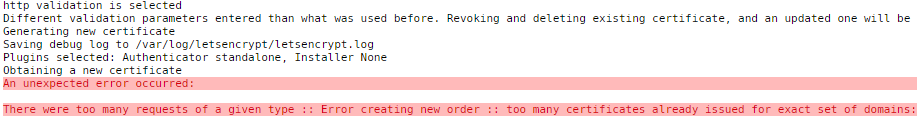

    -------------------------------------
              _         ()
             | |  ___   _    __
             | | / __| | |  /  \
             | | \__ \ | | | () |
             |_| |___/ |_|  \__/
    Brought to you by linuxserver.io
    We gratefully accept donations at:
    https://www.linuxserver.io/donate/
    -------------------------------------
    GID/UID
    -------------------------------------
    User uid:    99
    User gid:    100
    -------------------------------------
    [cont-init.d] 10-adduser: exited 0.
    [cont-init.d] 20-config: executing...
    [cont-init.d] 20-config: exited 0.
    [cont-init.d] 30-keygen: executing...
    using keys found in /config/keys
    [cont-init.d] 30-keygen: exited 0.
    [cont-init.d] 50-config: executing...
    Variables set:
    PUID=99
    PGID=100
    TZ=America/New_York
    URL=mydomain.com
    SUBDOMAINS=tautulli,sonarr,nowshowing
    EXTRA_DOMAINS=
    ONLY_SUBDOMAINS=true
    DHLEVEL=2048
    VALIDATION=http
    DNSPLUGIN=
    [email protected]
    STAGING=
    2048 bit DH parameters present
    SUBDOMAINS entered, processing
    SUBDOMAINS entered, processing
    Only subdomains, no URL in cert
    Sub-domains processed are: -d tautulli.mydomain.com -d sonarr.mydomain.com -d nowshowing.mydomain.com
    E-mail address entered: [email protected]
    http validation is selected
    Different validation parameters entered than what was used before. Revoking and deleting existing certificate, and an updated one will be created
    Saving debug log to /var/log/letsencrypt/letsencrypt.log
    No match found for cert-path /config/etc/letsencrypt/live/tautulli.mydomain.com/fullchain.pem!
    Generating new certificate
    Saving debug log to /var/log/letsencrypt/letsencrypt.log
    Plugins selected: Authenticator standalone, Installer None
    Obtaining a new certificate
    Performing the following challenges:
    http-01 challenge for nowshowing.mydomain.com
    http-01 challenge for sonarr.mydomain.com
    http-01 challenge for tautulli.mydomain.com
    Waiting for verification...
    Waiting for verification...
    Cleaning up challenges
    IMPORTANT NOTES:
     - Congratulations! Your certificate and chain have been saved at:
       /etc/letsencrypt/live/tautulli.mydomain.com/fullchain.pem
       Your key file has been saved at:
       /etc/letsencrypt/live/tautulli.mydomain.com/privkey.pem
       Your cert will expire on 2019-09-17. To obtain a new or tweaked
       version of this certificate in the future, simply run certbot
       again. To non-interactively renew *all* of your certificates, run
       "certbot renew"
     - Your account credentials have been saved in your Certbot
       configuration directory at /etc/letsencrypt. You should make a
       secure backup of this folder now. This configuration directory will
       also contain certificates and private keys obtained by Certbot so
       making regular backups of this folder is ideal.
     - If you like Certbot, please consider supporting our work by:
       Donating to ISRG / Let's Encrypt: https://letsencrypt.org/donate
       Donating to EFF: https://eff.org/donate-le
    New certificate generated; starting nginx
    [cont-init.d] 50-config: exited 0.
    [cont-init.d] 99-custom-files: executing...
    [custom-init] no custom files found exiting...
    [cont-init.d] 99-custom-files: exited 0.
    [cont-init.d] done.
    [services.d] starting services
    [services.d] done.
    nginx: [alert] detected a LuaJIT version which is not OpenResty's; many optimizations will be disabled and performance will be compromised (see https://github.com/openresty/luajit2 for OpenResty's LuaJIT or, even better, consider using the OpenResty releases from https://openresty.org/en/download.html)
    nginx: [error] lua_load_resty_core failed to load the resty.core module from https://github.com/openresty/lua-resty-core; ensure you are using an OpenResty release from https://openresty.org/en/download.html (rc: 2, reason: module 'resty.core' not found:
    no field package.preload['resty.core']
    no file './resty/core.lua'
    no file '/usr/share/luajit-2.1.0-beta3/resty/core.lua'
    no file '/usr/local/share/lua/5.1/resty/core.lua'
    no file '/usr/local/share/lua/5.1/resty/core/init.lua'
    no file '/usr/share/lua/5.1/resty/core.lua'
    no file '/usr/share/lua/5.1/resty/core/init.lua'
    no file '/usr/share/lua/common/resty/core.lua'
    no file '/usr/share/lua/common/resty/core/init.lua'
    no file './resty/core.so'
    no file '/usr/local/lib/lua/5.1/resty/core.so'
    no file '/usr/lib/lua/5.1/resty/core.so'
    no file '/usr/local/lib/lua/5.1/loadall.so'
    no file './resty.so'
    no file '/usr/local/lib/lua/5.1/resty.so'
    no file '/usr/lib/lua/5.1/resty.so'
    no file '/usr/local/lib/lua/5.1/loadall.so')
    Server ready

Hopefully, this helps to expose what I am doing incorrectly. I am going through the guide in the link posted above to see if I can glean anything that could help fix this.

-

7 hours ago, Mindsgoneawol said:

I am extremely confused. I have been reading through this trying to find the solution to my issue. Forgive me if I missed it, but the more I read the more confused I get. I am trying to set the reverse proxy up but run into A) port 80 is blocked B) DNS doesn't want to work either. I followed spaceinvaderone's video on DNS and it didn't work. Cloudflare just won't work for some reason. (just finished trying to delete my account there).

I have No-Ip as my domain host. (I do have my own domain with the CNAMEs for servers I am trying to get to work with letsencrypt) I made a support ticket and the tech said:

"Historically, customers who use LetsEncrypt will ask us to add a DNS Record to the domain for the LetsEncrypt to work. You may need to see if they require this for your scenario as well. Take a look and let us know if we can answer any questions."

I am confused as to what I need. Would someone help point me in the right direction please?

Thank you.

I am having a similar issue. I don't know where my disconnect is, but I think it's with the ports not being passed to the docker. I also followed SpaceInvader One's tutorial. I have a domain hosted with GoDaddy and I have set up CNAMEs for Sonarr and Tautulli to test with. They are configured as subdomains that point to my duckdns.org address. I have the DuckDNS docker installed on my unRAID server and it is properly updating the IP. When I check my subdomains at https://www.whatsmydns.net/ I can see that the subdomain URLs are correctly pointing to my duckdns.org address. I have configured my UniFi USG to forward port 80 to 180 and port 443 to 1443 on the IP of the unRAID server. When I check ports 80 and 443 at https://www.yougetsignal.com/tools/open-ports/ I see port 80 open but 443 is not. Not sure why... I've edited the .conf files to reflect the names of the docker containers.

At this point, I am just confused as to why this is not working. Here are some screenshots of my configurations:

    # make sure that your dns has a cname set for sonarr and that your sonarr container is not using a base url
    server {
        listen 443 ssl;
        listen [::]:443 ssl;
        server_name sonarr.*;
        include /config/nginx/ssl.conf;
        client_max_body_size 0;
        # enable for ldap auth, fill in ldap details in ldap.conf
        #include /config/nginx/ldap.conf;
        location / {
            # enable the next two lines for http auth
            #auth_basic "Restricted";
            #auth_basic_user_file /config/nginx/.htpasswd;
            # enable the next two lines for ldap auth
            #auth_request /auth;
            #error_page 401 =200 /login;
            include /config/nginx/proxy.conf;
            resolver 127.0.0.11 valid=30s;
            set $upstream_sonarr sonarr;
            proxy_pass http://$upstream_sonarr:8989;
        }
        location ~ (/sonarr)?/api {
            include /config/nginx/proxy.conf;
            resolver 127.0.0.11 valid=30s;
            set $upstream_sonarr Sonarr;
            proxy_pass http://$upstream_sonarr:8989;
        }
    }

    # make sure that your dns has a cname set for tautulli and that your tautulli container is not using a base url
    server {
        listen 443 ssl;
        listen [::]:443 ssl;
        server_name Tautulli.*;
        include /config/nginx/ssl.conf;
        client_max_body_size 0;
        # enable for ldap auth, fill in ldap details in ldap.conf
        #include /config/nginx/ldap.conf;
        location / {
            # enable the next two lines for http auth
            #auth_basic "Restricted";
            #auth_basic_user_file /config/nginx/.htpasswd;
            # enable the next two lines for ldap auth
            #auth_request /auth;
            #error_page 401 =200 /login;
            include /config/nginx/proxy.conf;
            resolver 127.0.0.11 valid=30s;
            set $upstream_tautulli Tautulli;
            proxy_pass http://$upstream_tautulli:8181;
        }
        location ~ (/mnt/disks/PlexMediaServer/tautulli)?/api {
            include /config/nginx/proxy.conf;
            resolver 127.0.0.11 valid=30s;
            set $upstream_tautulli Tautulli;
            proxy_pass http://$upstream_tautulli:8181;
        }
    }

Any assistance or guidance would be greatly appreciated!

-

1 hour ago, aptalca said:

Download a new client config. Your current config was generated before you fixed the hostname on your server.

Yup that did it! Thank you so much! Hope this helps someone else as well!

[Support] Linuxserver.io - MariaDB

in Docker Containers

Posted

Hello, I woke up today to see all my websites were offline. I found out it was a database issue: the MariaDB docker image was orphaned. A quick Google search said to reinstall the docker to fix the issue; however, when I did that it installed a fresh copy of the docker. Did I lose all my websites' data? Can this be reversed or fixed?

Thanks