Posts: 63


Posts posted by Januszmirek


-

On 11/26/2021 at 4:31 AM, biggiesize said:

I'm glad that everything went smoothly for you so far. Personally, for torrenting, I use qbittorrent from the LinuxServer guys with the VueTorrent WebUI but I have also used Deluge from Binhex. Either are excellent choices. It really depends on your personal preference.

If you don't mind sharing, how did you manage to get VueTorrent to work with the LinuxServer qBittorrent? I got to the point where I get a white screen and the VT icon in the browser tab, but the page is blank;( I don't use the standard port 8080 for the WebUI, maybe that's why I'm stuck.

-

11 minutes ago, Squid said:

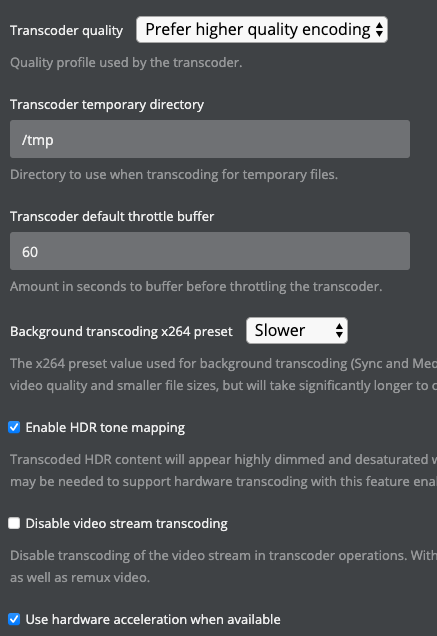

If there's no path mapping for /tmp in the Plex template, then it's transcoding directly to RAM within the container, which has no upper limit on its size.

If you've got it mapped to say /tmp/plex on the host then it has a 50% upper limit of memory. Either way it would appear that Plex isn't clearing out the files and you should probably post in the applicable Plex thread to get more suggestions from other users who are heavy Plex users.

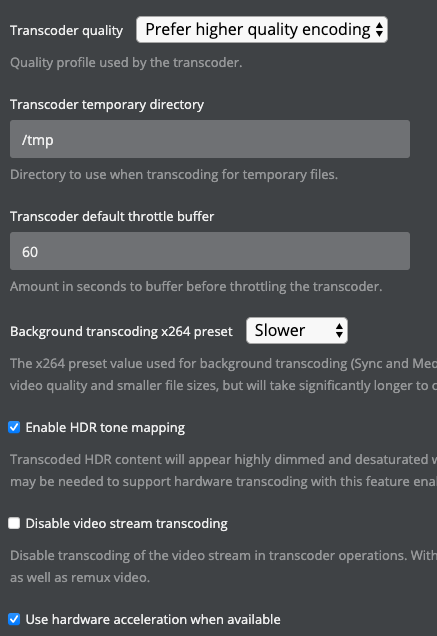

Got it, thank you for pointing me in the right direction. My Plex mapping for /tmp is pasted below:

I followed this post when I set up the transcoding location, so maybe I've messed something up;)
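To check whether Plex is actually leaving files behind, the minimal sketch below adds up the apparent size of everything under the host-side transcode folder. The default path `/tmp/plex` is only an assumption based on the mapping discussed above, so pass whatever host path your container's transcode directory maps to. The sketch also relies on GNU `find -printf` being available on the host, which is assumed here.

```shell
#!/usr/bin/env bash
# Sketch: report how much data sits in a transcode directory, in whole MiB.
# /tmp/plex is an assumed default; pass your own host-side path as $1.
transcode_dir_mib() {
  local dir=${1:-/tmp/plex}
  # Sum the apparent size of every regular file (GNU find: %s = size in bytes).
  find "$dir" -type f -printf '%s\n' 2>/dev/null \
    | awk '{ total += $1 } END { printf "%d\n", total / 1048576 }'
}

# Sketch: print a WARN line when the directory exceeds a limit given in MiB.
transcode_check() {
  local dir=$1 limit_mib=$2 used
  used=$(transcode_dir_mib "$dir")
  if [ "$used" -gt "$limit_mib" ]; then
    echo "WARN: $dir holds ${used} MiB (limit ${limit_mib} MiB)"
  else
    echo "OK: $dir holds ${used} MiB"
  fi
}
```

You could run something like `transcode_check /tmp/plex 4096` every few minutes during and after a few streams. If the number never drops back once streams stop, Plex is not cleaning up. Separately, Docker can give a container a size-capped RAM folder with `--mount type=tmpfs,destination=/transcode,tmpfs-size=<bytes>`. The `/transcode` destination is hypothetical and would have to match the transcoder directory set in Plex.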

-

3 minutes ago, Squid said:

The CPU isn't running anywhere near what the dashboard says. Rather, it appears that you are continually having processes killed off due to out-of-memory issues, and the dashboard can't make heads or tails of the current situation.

I don't understand what to make of it or where to start.
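Squid's point is that the kernel is killing processes when memory runs out. A quick way to see what gets killed is to pull the kernel's OOM messages out of the system log. The sketch below makes two assumptions. First, that the log is at `/var/log/syslog` (check yours). Second, that the kernel prints lines like `Out of memory: Killed process <pid> (<name>)`. Older kernels say `Kill process` instead, so both forms are matched.

```shell
#!/usr/bin/env bash
# Sketch: count which processes the kernel OOM killer has terminated.
# Assumes kernel lines like "Out of memory: Killed process 1234 (Plex Transcoder) ...".
oom_victims() {
  local log=${1:-/var/log/syslog}   # assumed log location; pass another file as $1
  sed -nE 's/.*Out of memory: Kill(ed)? process [0-9]+ \(([^)]*)\).*/\2/p' "$log" \
    | sort | uniq -c | sort -rn \
    | sed -E 's/^ *//'              # "      2 name" -> "2 name"
}
```

If `Plex Transcoder` (or anything else) dominates the output, that is the process to investigate first.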

3 minutes ago, Squid said:

Looks like you've allocated ~3GB to the Windows VM, but try and look at all of the docker apps. Are they storing stuff in memory that they shouldn't be? If you stop Plex, does the memory issue immediately disappear?

Forget about the VM for a minute. I barely use VMs. The issue described in my original post happens regardless of VM use.

Yes, if I stop Plex, no memory issues occur.
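Following Squid's suggestion to look at all the Docker apps, one way to see which container holds the memory is a one-off `docker stats` snapshot sorted by memory share. The minimal sketch below uses the `--no-stream` and `--format` options of `docker stats` with its `{{.Name}}` and `{{.MemPerc}}` placeholders. The sorting lives in its own function so it can be tried without Docker.

```shell
#!/usr/bin/env bash
# Sort "name<TAB>mem%" lines from stdin, highest memory share first.
# LC_ALL=C keeps "." as the decimal separator for sort -n.
rank_by_mem() {
  LC_ALL=C sort -t "$(printf '\t')" -k2,2 -rn
}

# Take one snapshot of all running containers and rank them by memory use.
mem_snapshot() {
  docker stats --no-stream --format '{{.Name}}\t{{.MemPerc}}' | rank_by_mem
}
```

Run `mem_snapshot` once right after starting the array and again a few days later. That should show whether a single container accounts for the growth.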

-

Diagnostics attached.

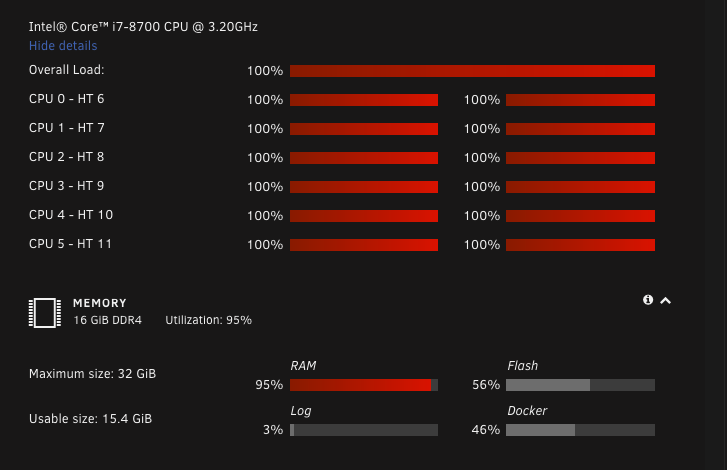

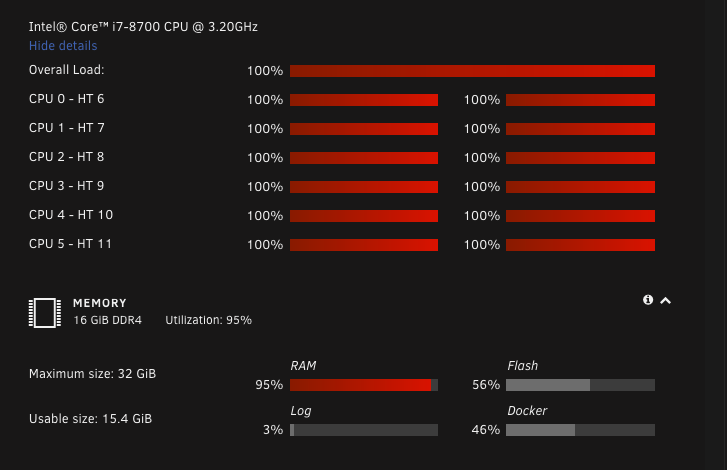

27 minutes ago, Squid said:

Based on the screenshot sans any further information, it would appear that Plex is transcoding via the CPU, and maxing out the cores is what you should expect to see.

You should post the diagnostics when it's in this situation.

It is hardware transcoding through the internal iGPU. It never maxes out the CPU without maxing out RAM first, though, so I thought it was a RAM issue, not a CPU issue.

-

Not sure I picked the right section of the forum for this; if not, please move it to the correct one.

I run a fairly standard set of Docker containers and 2 VMs that I very rarely turn on.

The issue is that even with 16GB of RAM, which I thought would be plenty for my use, I experience very high memory and CPU core usage, after which Unraid and all containers stop responding. This usually happens when Plex is playing multiple streams (3-4) with transcoding.

I do realize that Linux uses all the available RAM and that this is fine; I don't need to see free RAM;) The issue is that with all these containers running and Plex serving 3-4 streams, my RAM usage reaches 70-80%. If I turn on any VM, the system practically freezes, with RAM utilization around 100% and the same for all CPU cores.

Now, I don't have any problem with upgrading from 16GB to 32GB. But I wonder whether it would actually make any difference, since Linux would use all available RAM anyway, and even after the upgrade I could end up back at square one;(

As for the Plex transcoding setup, I guess it's also pretty standard: transcode to RAM with a 60-second buffer. I would like to keep transcoding to RAM to save on disk wear.

Turning off transcoding is not an option, as many clients do not support all formats natively and hence need transcoding.

I observed that when I stop the array and start it again, the 'idle' RAM usage drops to 30%. It stays around 50% for a couple of days, then gradually builds up to around 70-80% after a week or so, which is when I start to experience the issues described above. I also don't get why filling up RAM immediately drives CPU utilization to the maximum, which in turn practically freezes the whole Unraid server;( Well, not freezes, but makes it barely responsive.

Ultimately my questions are:

1. Would an upgrade from 16GB to 32GB make a meaningful difference in my case, or is it a waste of money because this is a configuration issue rather than a hardware one?

2. If not, what easy solutions can I use to manage RAM more efficiently? Maybe a cron job to release RAM every now and then? Or maybe there's a plugin that can handle this job?

Thanks for the help.
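Before scheduling anything to "release" RAM, it may help to log how much memory is really in use over the week. Linux counts reclaimable page cache as available, so `MemAvailable` in `/proc/meminfo` is a more useful signal than a raw "used" figure that may include cache. The minimal sketch below turns it into a percentage and builds a log line that could be appended from cron. The schedule and log location are up to you and are not part of the original post.

```shell
#!/usr/bin/env bash
# Percentage of RAM in use, counting reclaimable cache as free.
# Reads /proc/meminfo by default; pass another file as $1 (e.g. for testing).
mem_used_pct() {
  awk '/^MemTotal:/ { total = $2 }
       /^MemAvailable:/ { avail = $2 }
       END { if (total > 0) printf "%d\n", (total - avail) * 100 / total }' \
    "${1:-/proc/meminfo}"
}

# One log line: timestamp plus percentage, suitable for appending from cron.
mem_log_line() {
  printf '%s %s%%\n' "$(date '+%Y-%m-%d %H:%M')" "$(mem_used_pct "$1")"
}
```

If this number stays well below the dashboard figure, most of the "used" RAM is cache. If it really climbs day by day, something is holding memory, and more RAM may only delay the problem.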

-

Thanks! All updated now and works as it should;)

-


27 minutes ago, ich777 said:

Oh, I think you got a pretty old template from Krusader...

You can also pull a new copy of the template from the CA App.

Wow;) that did the trick with the permissions

Thanks!


I don't get what you mean by an old template, though. I use the ich777 repository, and it is on its latest update. What else can I update to a new 'template'?

-


OK, so a bit more context: I believe this all comes down to permissions.

Folders (with their subfolders and files) that I create in Krusader can be removed easily.

The folders I have issues with are the ones created by other Docker containers (for example, the CA Backup plugin) or created on a Windows VM and copied across a network share.

For some folders, the permissions tab is greyed out, as in the attached screenshot. Other folders show User: krusader but still cannot be removed with the Delete button.

I think a setting that gives Krusader root access might be the solution, but I don't know how to set it up.
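Since this looks like an ownership problem, a first step is to list what inside a stuck folder is owned by a user other than the one Krusader writes as. The minimal sketch below uses GNU `find`. The default UID 99 is an assumption (on Unraid, `nobody` is commonly UID 99), so pass the UID your Krusader container actually runs as.

```shell
#!/usr/bin/env bash
# Sketch: list files/folders under $1 whose owner UID differs from $2.
# Output format: "user:group octal-mode path" (GNU find -printf).
list_foreign_owned() {
  local dir=$1 uid=${2:-99}   # 99 = assumed 'nobody' UID on Unraid
  find "$dir" ! -uid "$uid" -printf '%u:%g %m %p\n'
}
```

Anything it prints, such as `root`-owned entries that came from another container or from the VM share, is a candidate for a `chown` back to the expected owner. Run it as root from the Unraid terminal, e.g. `chown -R nobody:users "<folder>"`. The target owner here is an assumption about your setup.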

-

Potentially a noob query about Krusader, so bear with me;)

When I try to delete some folders (with files and subfolders inside), they do not respond to the Delete/F8 command. Literally nothing happens when I press the Delete button.

However, if I open a terminal window in Krusader and run rm -r on the same folder, it is deleted immediately, every single time.

I remember the Delete button used to work with no problems. I did get a warning that the folder contains files/subfolders, but confirming it deleted the folders.

Does anyone know which settings should be changed to restore the Delete button's functionality? Thanks.

-

On 8/15/2020 at 2:36 PM, BeardedNoir said:

Hello all,

I use the appdata backup plugin for all my apps, however I want to make double sure that I have no issues retrieving my passwords for Bitwarden in the event of a system failure.

Good to hear that the backup process works for you. Just out of curiosity, did you actually try to restore that backup into a fresh Bitwarden install, to make sure it can be restored including all user accounts and their data?

-

Hello, I am interested in Bitwarden backup as well. Does this script actually back up everything, including attachments? Did you try to restore your backup? Did it work?
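Whether a backup contains everything can be partly checked without a full restore, just by listing the archive. The minimal sketch below assumes a gzip'd tar of a bitwarden_rs/Vaultwarden data folder. It looks for the SQLite database `db.sqlite3` and the `attachments/` folder, which is where that server keeps attachments by default (an assumption; adjust the names if your setup differs). It does not replace an actual restore into a fresh install.

```shell
#!/usr/bin/env bash
# Sketch: check that a .tar.gz backup of a Bitwarden data folder contains
# the database and the attachments folder (names assumed from the
# bitwarden_rs/Vaultwarden default layout).
check_bw_backup() {
  local listing missing=""
  listing=$(tar -tzf "$1") || return 2          # unreadable archive
  printf '%s\n' "$listing" | grep -qE '(^|/)db\.sqlite3$' || missing="$missing db.sqlite3"
  printf '%s\n' "$listing" | grep -qE '(^|/)attachments/' || missing="$missing attachments"
  if [ -z "$missing" ]; then
    echo "ok"
  else
    echo "missing:$missing"
    return 1
  fi
}
```

If nobody has uploaded an attachment yet, the folder may not exist, so a "missing: attachments" result is not necessarily a problem on its own.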

-

23 hours ago, skois said:

Stupid macOS, that worked like a charm! Thanks a bunch;)

-


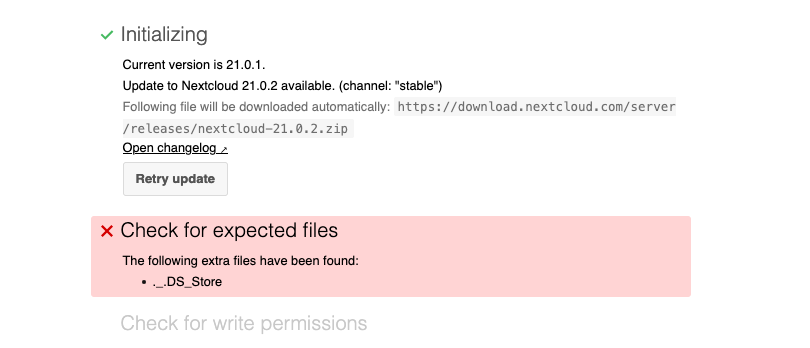

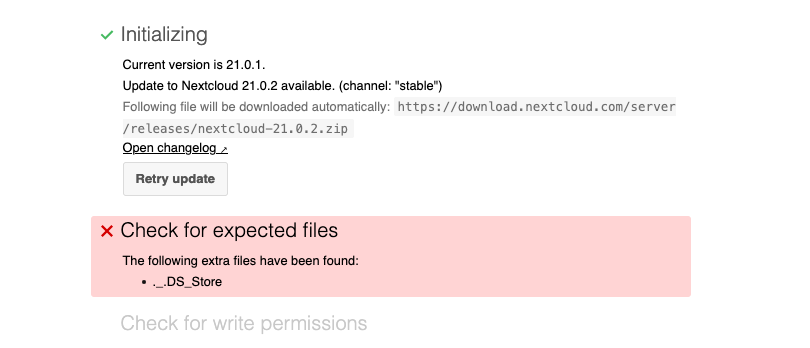

I tried to update to the latest version and got what is shown in the screenshots. Can anyone help me fix this? Thanks.

-

On 5/28/2017 at 1:08 PM, CHBMB said:

Got to the bottom of this one:

Edit /config/letsencrypt/nginx/site-confs/nextcloud

    location / {
        proxy_pass https://192.168.0.1:444/;
        proxy_max_temp_file_size 2048m;
        include /config/nginx/proxy.conf;
    }

Change 2048m to a size that works for you.

From here.

If your site is behind a nginx frontend (for example a loadbalancer):

By default, downloads will be limited to 1GB due to `proxy_buffering` and `proxy_max_temp_file_size` on the frontend.

- If you can access the frontend's configuration, disable `proxy_buffering` or increase `proxy_max_temp_file_size` from the default 1GB.
- If you do not have access to the frontend, set the `X-Accel-Buffering` header with `add_header X-Accel-Buffering no;` on your backend server.

I think I have exactly the same issue with the combination of Nextcloud v21 and Nginx Proxy Manager. Everything works like a breeze, except that every file larger than 1GB downloaded remotely stops at 1GB. Can someone please explain to me, like I'm a 4-year-old, which files in Nextcloud and/or NPM I should edit to get rid of this limitation once and for all? Many thanks in advance.

EDIT: so basically I found the solution myself. No need to edit any files in nextcloud.

To get rid of the 1GB download limitation:

1. Go to the NPM Proxy Hosts tab.

2. Click the '3 dots' menu and click 'Edit' on your Nextcloud proxy host.

3. Go to the Advanced tab and enter the following: `proxy_request_buffering off; proxy_buffering off;`

4. Click Save

That's it. Enjoy no more stupid limitations on download size. I tested a 13GB file from a remote location and it worked like a charm. Hopefully someone finds this useful for their setup.
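To confirm the fix, or to spot the 1GB cut-off, you can compare the bytes actually received through the proxy with the file's real size. The minimal sketch below uses curl's `--write-out` variable `%{size_download}`. You supply the URL (for example, a share link that goes through NPM) and the expected size, and nothing in it is NPM-specific.

```shell
#!/usr/bin/env bash
# Sketch: download a URL to nowhere and report whether all bytes arrived.
# $1 = URL (e.g. a share link through the proxy), $2 = expected size in bytes.
check_full_download() {
  local url=$1 expected=$2 got
  # Keep curl's byte count even if the transfer is cut off mid-way.
  got=$(curl -s -o /dev/null -w '%{size_download}' "$url" || true)
  got=${got%%.*}                   # some curl versions print "123.000"
  got=${got:-0}
  if [ "$got" -eq "$expected" ]; then
    echo "complete"
  else
    echo "truncated at $got of $expected bytes"
    return 1
  fi
}
```

A result stuck at roughly 1GB before the change, and "complete" after it, would confirm that proxy buffering was the cause.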

-


I had exactly the same issue as described above, and in my case none of the solutions mentioned here helped. It was the jDownloader2 container. Once I removed it, everything went back to normal.

My tip is to fire up htop, stop one container after another, and observe CPU usage in real time. This helped in my case. I reinstalled jDownloader and all is good now.
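When htop shows a process pegging the CPU, you can often tell which container it belongs to without stopping containers one by one. A containerised process's `/proc/<pid>/cgroup` usually contains the 64-character Docker container ID. The minimal sketch below assumes Docker's usual cgroup naming, which puts that ID in the path, so verify it on your system.

```shell
#!/usr/bin/env bash
# Sketch: print the Docker container ID that a PID belongs to (empty if none).
# $1 = PID from htop; $2 optionally overrides the cgroup file (for testing).
container_of_pid() {
  local cg=${2:-/proc/$1/cgroup}
  # Docker container IDs are 64 lowercase hex characters in the cgroup path.
  grep -oE '[0-9a-f]{64}' "$cg" 2>/dev/null | head -n1
}
```

`docker inspect --format '{{.Name}}' "$(container_of_pid 12345)"` would then turn the ID into the container name. The PID 12345 is just a placeholder.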

-


Hello, is it possible to downgrade Deluge 2.x.x to 1.3.x and keep the current state of my torrents? I tried before using only tags: the downgrade succeeded, but the torrents disappeared from the client.

If you could tell me how to transfer the current state of the torrents to the downgraded version, it would be appreciated, thanks;)

-

My issue is not the typo. The issue is that when I enter the above command into the Unraid terminal or the Netdata console, I always get the "-bash: -v: command not found" error;(

-

Newbie query here: where do I put these commands?

Quote: `-v '/mnt/cache/appdata/netdata/netadata.conf':'/etc/netdata/netadata.conf':'rw'`

and

Quote: `-v "/var/run/docker.sock":"/var/run/docker.sock":rw`

When I put the above into the CLI, I get:

    root@Tower:~# -v "/var/run/docker.sock":"/var/run/docker.sock":rw
    -bash: -v: command not found
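The error happens because `-v` is not a command on its own. It is an option of `docker run` that bind-mounts a host path into the container, so it only means something as part of that command or, on Unraid, as a Path entry (or Extra Parameters) in the container template. The minimal sketch below only prints the full command instead of running it. The `netdata/netdata` image and the `--name` are assumptions, and the config path uses `netdata.conf` rather than the misspelled `netadata.conf`.

```shell
#!/usr/bin/env bash
# Sketch: the two -v mappings from the post, placed in the docker run command
# they belong to. Printed, not executed (dry run).
netdata_args=(
  run -d --name netdata
  -v /mnt/cache/appdata/netdata/netdata.conf:/etc/netdata/netdata.conf:rw
  -v /var/run/docker.sock:/var/run/docker.sock:rw
  netdata/netdata
)

netdata_cmd() {
  echo docker "${netdata_args[@]}"
}
```

Running `docker "${netdata_args[@]}"` would actually start the container. On Unraid, adding the same mappings through the template is usually preferable, because they then survive container updates.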


-

Hello, I am using NPM with linuxio/nextcloud. Everything works perfectly except for one issue: when someone tries to download a file larger than 1GB, it either stops downloading or breaks the download entirely. In other topics I found different solutions to this issue, but all of them point to the letsencrypt config. Can anyone point me to a solution for NPM that enables downloads of files >1GB? I'd much appreciate your input. Thanks.

Below are the suggested solutions I have found so far, but I had no luck finding the files they mention:

Quote: I think I found a solution

Inside of the let's encrypt conf file for nextcloud I found

    location / {
        include /config/nginx/proxy.conf;
        resolver 127.0.0.11 valid=30s;
        set $upstream_nextcloud nextcloud;
        proxy_max_temp_file_size 2048m;
        proxy_pass https://$upstream_nextcloud:443;
    }

Quote: Edit /config/letsencrypt/nginx/site-confs/nextcloud

    location / {
        proxy_pass https://192.168.0.1:444/;
        proxy_max_temp_file_size 2048m;
        include /config/nginx/proxy.conf;
    }

Change 2048m to a size that works for you.


-

1 minute ago, saarg said:

This has nothing to do with the container, so please open a thread in the correct place.

When there is an error when trying to create your container the previous container has already been removed and therefore you don't see it in the list as it's not there. You can probably re-install it from previous apps in CA.

Perhaps I did not word it correctly. I don't know the reason for this issue, and I have not reinstalled it yet. What I am concerned about is whether reinstalling it would mean losing all the configuration. Would I need to start the config from scratch? I spent a lot of time configuring it and wouldn't want to lose it. Can you confirm I can reinstall it with no risk of losing the configuration? Thanks
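Removing or recreating a container does not delete host folders mapped into it, and the "Previous Apps" reinstall saarg mentions works from a saved user template. Before reinstalling, you could still confirm the template exists and take a copy of the appdata folder. In the minimal sketch below, the template folder `/boot/config/plugins/dockerMan/templates-user` and the `my-<name>.xml` naming are assumptions about where Unraid keeps templates.

```shell
#!/usr/bin/env bash
# Sketch: before reinstalling a container, check for its saved user template
# and archive its appdata folder.
# Template location/naming (my-<name>.xml) is an assumption about Unraid.
saved_template() {
  local name=$1 dir=${2:-/boot/config/plugins/dockerMan/templates-user}
  local f="$dir/my-$name.xml"
  [ -f "$f" ] && printf '%s\n' "$f"
}

# Archive a folder into DEST as <folder>-<timestamp>.tar.gz; prints the archive path.
backup_appdata() {
  local src=${1%/} dest=$2 out
  out="$dest/$(basename "$src")-$(date +%Y%m%d-%H%M%S).tar.gz"
  tar -czf "$out" -C "$(dirname "$src")" "$(basename "$src")" && printf '%s\n' "$out"
}
```

Usage would be something like `backup_appdata /mnt/user/appdata/nextcloud /mnt/user/backups`, where both paths are hypothetical. If Nextcloud uses a separate database container, its appdata deserves the same treatment.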

-

Something weird happened with Nextcloud today. I was trying to add another external mount, running Nextcloud 19.0.4 on Unraid 6.8.3. After filling in all the details under Add new Path in the Nextcloud container, I hit Apply and was greeted with an error. I thought it was a standard mistake with paths, etc., so I wanted to try again. To my surprise, the Nextcloud container was gone. It doesn't show up among the other containers, and the service is not running: I cannot connect to it either locally or externally. I don't even know where to start or what info I can provide to help with this issue.

There is still a nextcloud folder in appdata. Please help;)

-

On 10/2/2018 at 10:35 PM, FlorinB said:

EdgeRouter X GUI working behind an NGINX reverse proxy.

In case anyone else is interested in this, I have found the solution.

Here is my config:

    #Ubiquiti EdgeRouter-X Reverse Proxy
    #Source: https://community.ubnt.com/t5/EdgeRouter/Access-Edgemax-gui-via-nginx-reverse-proxy-websocket-problem/td-p/1544354
    #Adapted by Florin Butoi for docker linuxserver/letsencrypt on 02 Oct 2018
    server {
        listen 80;
        server_name edgex.*;
        return 301 https://$host$request_uri;
    }

    upstream erl {
        server 192.168.22.11:443;
        keepalive 32;
    }

    server {
        listen 443 ssl http2;
        server_name edgex.*;
        include /config/nginx/filterhosts.conf;
        include /config/nginx/ssl.conf;
        client_max_body_size 512m;

        location / {
            proxy_pass https://erl;
            proxy_http_version 1.1;
            proxy_buffering off;
            proxy_set_header Upgrade $http_upgrade;
            proxy_set_header Connection "Upgrade";
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
        }
    }

Contents of /config/nginx/filterhosts.conf:

    #allow from this ip
    allow 212.122.123.124;
    #temporary internet ip on my router
    allow 178.112.221.111;
    #deny all others
    deny all;

Great work, I'd like to replicate the same for my EdgeRouter 4.

@FlorinB If you still follow this thread, please help me with the below queries.

1. Where exactly on Unraid did you save the main configuration file, and under what name?

2. The following section makes me think ports 80 and 443 need to be forwarded for this to work. Is this correct?

I can't use these, as I have already forwarded them for Nextcloud. Is there any way to configure other ports for EdgeMAX? If so, what modifications do I need to make, and where?

    server {
        listen 80;
        server_name edgex.*;
        return 301 https://$host$request_uri;
    }
    upstream erl {
        server 192.168.22.11:443;
        keepalive 32;
    }
    server {
        listen 443 ssl http2;
        server_name edgex.*;
        include /config/nginx/filterhosts.conf;
        include /config/nginx/ssl.conf;
        client_max_body_size 512m;

3. Is the part below actually needed? I want to be able to access the EdgeMAX GUI from anywhere, not limited to a certain IP range.

I don't have a filterhosts.conf file in /config/nginx. Do I need to create one?

    include /config/nginx/filterhosts.conf;

    #allow from this ip
    allow 212.122.123.124;
    #temporary internet ip on my router
    allow 178.112.221.111;
    #deny all others
    deny all;

4. Are there any modifications needed on the EdgeMAX side?

5. Are the above modifications enough? Is there anything else you didn't mention in your original post?

Thanks for the help.
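On the missing file: nginx refuses to load a config that `include`s a non-wildcard path that does not exist. So you either create `filterhosts.conf` or drop the `include` line if you want access from anywhere. If you do want an allow-list, the minimal sketch below writes one in the same `allow`/`deny all` form as FlorinB's file. The output path is up to you (inside the letsencrypt container it is `/config/nginx/filterhosts.conf`), and the addresses in the test are documentation examples, not real ones.

```shell
#!/usr/bin/env bash
# Sketch: write an nginx allow-list in the form used by filterhosts.conf.
# $1 = output file, remaining args = IPs or CIDR ranges to allow.
write_filterhosts() {
  local out=$1 ip
  shift
  {
    for ip in "$@"; do
      printf 'allow %s;\n' "$ip"
    done
    printf 'deny all;\n'     # everything not listed above is refused
  } > "$out"
}
```

After writing the file, restarting the container makes nginx reread its configuration.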

-

On 7/20/2019 at 11:12 PM, ProphetSe7en said:

Thank you. I need to look into that and see what I can make of it.

I have made a script for testing Discord messages. It sends a message to Discord: all it does is show a bell, ping my user and type the text "this is a test". Now I need to figure out how to integrate it into the script to get the correct message.

    curl -X POST "webhookurl" \
      -H "Content-Type: application/json" \
      -d '{"username":"borg", "content":":bell: Hey <@userid> This is a test"}'

There is also a script that uses borg + rclone. At the end it sends an email if there was an error or if the backup finished without errors. It should be possible to change this to use Discord; I just don't know how yet.

https://pastebin.com/8WGmJgiQ

Hello, did you have any success with notifications for rclone? Notifications are pretty much the only feature I'm missing from rclone. I don't need anything fancy: a Discord notification or an email sent on sync errors only would be enough;)
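One way to get Discord notifications only on sync errors is to wrap the rclone call and post to the webhook when it exits non-zero, reusing the curl request from the quoted test script. In the minimal sketch below, `DISCORD_WEBHOOK_URL` is a made-up variable name. When it is unset, the JSON payload is printed instead of sent. The JSON escaping only handles backslashes and double quotes.

```shell
#!/usr/bin/env bash
# Sketch: run a command (e.g. rclone sync ...) and notify Discord only if it fails.
# DISCORD_WEBHOOK_URL (hypothetical name) holds your webhook URL; if unset,
# the payload is printed instead of sent (dry run).

# Escape backslashes and double quotes so text is safe inside a JSON string.
json_escape() {
  printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

discord_payload() {   # $1 = username, $2 = message text
  printf '{"username":"%s","content":"%s"}\n' "$(json_escape "$1")" "$(json_escape "$2")"
}

notify_on_failure() {
  "$@"
  local rc=$?
  if [ "$rc" -ne 0 ]; then
    local payload
    payload=$(discord_payload "rclone" ":x: command failed with exit code $rc: $*")
    if [ -n "${DISCORD_WEBHOOK_URL:-}" ]; then
      curl -s -X POST "$DISCORD_WEBHOOK_URL" \
        -H "Content-Type: application/json" -d "$payload"
    else
      printf '%s\n' "$payload"
    fi
  fi
  return "$rc"
}
```

Usage would look like `notify_on_failure rclone sync /mnt/user/data remote:backup`, with hypothetical paths. A successful sync stays silent, and a failed one posts a single message.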

-

[SUPPORT] DiamondPrecisionComputing - ALL IMAGES AND FILES, in Docker Containers

Do you mean environment variable like that?