theunraidhomeuser

Members

Posts: 66


Gender

Male


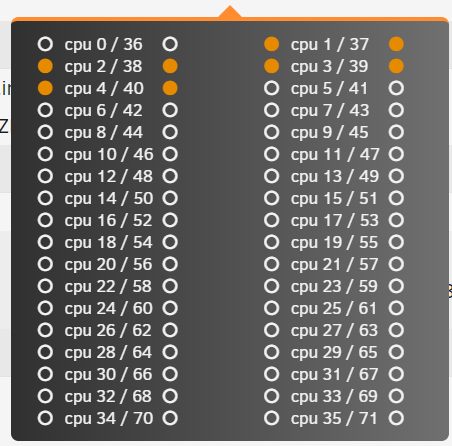

Hi there, and thanks for your help. I'll try removing the memfd and shared sections as a first step. I'm not an expert on NUMA nodes, so I'm not entirely sure what you mean by: Below are the CPU settings I have. As this is a VM, I don't know how you would restrict memory allocation to the memory allocated to the CPU; if you could let me know what I need to do, I'd happily try that. I initially had 256GB assigned, but then thought the machine might be running out of memory due to some leak; that turned out not to be the cause. Thanks! And this is the VM setup, but you will have seen that from the diags.

-

Hi everyone, I get this on my Ubuntu VM in Unraid; I'm attaching the Unraid diags. It's really hard to google this, and I couldn't find any resolution. These are the last few lines of the VM log file. The VM crashes after around 8-10 hours of being on. I have a lot of hard drives attached via 2 USB PCI-E passthrough cards, but it used to work reliably without crashing, so I'm not sure what's causing this now.

-device '{"driver":"qxl-vga","id":"video0","max_outputs":1,"ram_size":67108864,"vram_size":67108864,"vram64_size_mb":0,"vgamem_mb":16,"bus":"pcie.0","addr":"0x1"}' \
-device '{"driver":"vfio-pci","host":"0000:0e:00.0","id":"hostdev0","bus":"pci.5","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:04:00.0","id":"hostdev1","bus":"pci.6","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:05:00.0","id":"hostdev2","bus":"pci.7","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:06:00.0","id":"hostdev3","bus":"pci.8","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:07:00.0","id":"hostdev4","bus":"pci.9","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:0e:00.2","id":"hostdev5","bus":"pci.10","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:0e:00.3","id":"hostdev6","bus":"pci.11","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:10:00.0","id":"hostdev7","bus":"pci.12","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:88:00.0","id":"hostdev8","bus":"pci.13","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:89:00.0","id":"hostdev9","bus":"pci.14","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:8a:00.0","id":"hostdev10","bus":"pci.15","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:8b:00.0","id":"hostdev11","bus":"pci.16","addr":"0x0"}' \
-sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny \
-msg timestamp=on
char device redirected to /dev/pts/0 (label charserial0)
qxl_send_events: spice-server bug: guest stopped, ignoring
failed to set up stack guard page: Cannot allocate memory
2023-12-23 01:13:59.993+0000: shutting down, reason=crashed

Thanks for any suggestions! super-diagnostics-20231223-0917.zip
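
For anyone reading a log like the one in this post, it can help to list which host PCI addresses are actually being passed through. Below is a minimal Python sketch that pulls them out of the `-device` arguments. It assumes each `-device` value is a single-quoted JSON object on one line, as in the log in this post; the function name `vfio_hosts` and the shortened `sample` text are made up for illustration and are not part of QEMU or Unraid.

```python
import json
import re


def vfio_hosts(qemu_log: str) -> list[str]:
    """Return the host PCI address of every vfio-pci -device entry."""
    hosts = []
    # Assumption: each -device argument is a single-quoted, flat JSON object.
    for match in re.finditer(r"-device\s+'(\{.*?\})'", qemu_log):
        device = json.loads(match.group(1))
        if device.get("driver") == "vfio-pci":
            hosts.append(device["host"])
    return hosts


# Shortened excerpt in the same format as the VM log (illustrative only).
sample = r'''
-device '{"driver":"qxl-vga","id":"video0","max_outputs":1,"bus":"pcie.0","addr":"0x1"}' \
-device '{"driver":"vfio-pci","host":"0000:0e:00.0","id":"hostdev0","bus":"pci.5","addr":"0x0"}' \
-device '{"driver":"vfio-pci","host":"0000:04:00.0","id":"hostdev1","bus":"pci.6","addr":"0x0"}'
'''

if __name__ == "__main__":
    for host in vfio_hosts(sample):
        print(host)
```

Entries with other drivers, such as `qxl-vga`, are skipped, so only the passed-through devices remain in the result.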

-

Thanks for the suggestion, I might try that. Regarding btrfs errors: no, but I think it’s linked to the drives I’m passing through via virtiofs, as I created a pool in Unraid to pass through, and it’s formatted with btrfs. I’m thinking of undoing that and passing the 2 SATA drives through with UAD to the VM directly, and then potentially creating a RAID 0, as I want to leverage higher speed. I'll keep you posted! Thanks!

-

Oh yes, I am using it. I was using the Unraid 9p mode, but it's so damn slow for reads and writes that I switched to virtiofs, which seems a lot quicker. Do you suspect this could be a cause? I always used the 9p mode in the past but had much less need for good throughput... I might try out 9p if you think that could be a reason, but I would love to understand why that would be the case (and in that case, maybe try to address the root cause).

-

Hi, and thanks for your help. I just updated to 6.12.6 last night and changed the QEMU version to 7.2. Worth mentioning: I never had this issue before. I was running UNRAID with an Ubuntu VM for over 2 years, rock-solid. Recently, I changed the scope of the VM, adding two PCI-E USB 3.0 cards and a passthrough GPU. Since then, I feel that I'm "overwhelming" the VM somehow, causing it to freeze. The log files don't really reveal what's going on (or I can't see it). Attached are the latest diags. I'm also getting a "Machine error" in the Fix Common Problems plugin all of a sudden; I'm not sure where that is coming from and don't know where to find the mcelog output... I'd appreciate your thoughts on how to troubleshoot, I'm a bit lost TBH... super-diagnostics-20231217-1237.zip

-

Hi everyone, I'm hoping for some help, as I can't seem to figure out why my Ubuntu VM constantly crashes. I'm speculating that the NVMe drives are overheating, but I can't really track it down. I've got a VM that has 2 USB 3.0 PCIe cards and an NVIDIA RTX GPU passed through to it. All seemed to work until recently... I will try to upgrade Unraid right now and see if that helps. The hardware I'm running is an HP Z840 Workstation with 512GB RAM. Cheers! super-diagnostics-20231216-1135.zip

-

Hey, sorry, I posted an issue on your GitHub that's probably not a code issue, just one for me. Hence here again: Hi there, I'm trying to find documentation on this. I recently changed my setup, adding a lot of drives via USB (Syba 8 Bay Enclosures). These drives are not meant to be part of a pool, I just want to mount them. I believe they may previously have been part of a mergerfs filesystem, but normally that doesn't cause any issues, as mergerfs is non-destructive to the partition tables. Do you have an idea how I can mount these drives? I can't create an UNRAID pool, as there are more than 30 drives (and I didn't have them in an UNRAID pool before)... Cheers!

-

Unraid with 72 HDDs over USB for unassigned devices

theunraidhomeuser replied to theunraidhomeuser's topic in General Support

Sure thing, here it comes! All 72 drives are connected, however not all of them are showing up. I tried a mix of powered USB 3.2 hubs, unpowered USB 2.0 hubs, and daisy-chaining the hubs, as I read somewhere that could help... I'd appreciate views or feedback from anyone who has more than 30 USB hard drives attached to their NAS. super-diagnostics-20231122-1900.zip

-

Unraid with 72 HDDs over USB for unassigned devices

theunraidhomeuser replied to theunraidhomeuser's topic in General Support

Thanks for the quick reply. I concur, I had that issue with Terramaster enclosures and also with others. However, the Syba enclosures pass through each drive "as is", i.e. I can even see the serial number of every single drive in Unraid... So I don't think that's the problem...