Posts posted by eschultz

-

I reported an issue with NFS mounts in 6.2 beta 20. I am back to report it in beta 21 as well. I mount a remote SMB share (on another computer) on the local unRAID server using UD and try to share it via NFS, and I get the following errors in the log:

Apr 8 19:14:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:15:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:16:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:17:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:18:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:19:02 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:19:28 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

Apr 8 19:20:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export

It does export via NFS though.

/etc/exports file:

# See exports(5) for a description.

# This file contains a list of all directories exported to other computers.

# It is used by rpc.nfsd and rpc.mountd.

"/mnt/disks/HANDYMANSERVER_Backups" -async,no_subtree_check,fsid=200 *(sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash)

"/mnt/user/Computer Backups" -async,no_subtree_check,fsid=103 *(sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash)

"/mnt/user/Public" -async,no_subtree_check,fsid=100 *(sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash)

"/mnt/user/iTunes" -async,no_subtree_check,fsid=101 *(sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash)

The line I add to the /etc/exports file is:

"/mnt/disks/HANDYMANSERVER_Backups" -async,no_subtree_check,fsid=200 *(sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash)

My code reads the /etc/exports file into an array, I add my line to the array, and then write the array back to the /etc/exports file. It should show up at the end of the file, not in the middle. It appears that something is altering the /etc/exports file in the background causing me to get parts of the file at times.

Please note that the mount point is /mnt/disks/, not /mnt/user/.

When I mount an iso file with UD and share it via NFS, I do not see the errors in the log.

This did not show up in the early 6.2 beta because NFS was not working, but has shown up in all subsequent beta versions.

Diagnostics attached.

I've done some more experimenting with this problem. If I export an NFS share with exportfs instead of editing the /etc/exports file, using:

/usr/sbin/exportfs -io async,sec=sys,rw,insecure,anongid=100,anonuid=99,all_squash :/mnt/disks/mountpoint

I see the directory exported using 'exportfs' to display the NFS exports, but a short while later the NFS export for the /mnt/disks/mountpoint is missing. It has been removed and is no longer exported.

I'd rather use the exportfs method of managing the UD NFS exports instead of changing the /etc/exports file, but it doesn't look like it is currently working like I expect.

I have done a little research and found that the log message 'Apr 8 19:14:01 Tower root: exportfs: /mnt/disks/HANDYMANSERVER_Backups does not support NFS export' can show up with an encrypted file system and it can also occur with a FUSE file system.

LT: There appears to be a background task that is periodically updating the NFS exports from the /etc/exports file that is overwriting my entry and that is why I lose my entries using exportfs. That would also explain why I get the log message constantly.

emhttp manages '/etc/exports' and rewrites it every time you access the webgui or a background task (e.g. SMART monitoring) runs, which is why UD's changes are overwritten. I believe you might be able to write your changes to '/etc/exports-', which emhttp uses as a seed file: it appends its own NFS entries and saves the result out to '/etc/exports'.
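As a rough illustration of the seed-file idea, here is a minimal sketch of a helper that appends an export line to a file only when the identical line is not already there, so it can be called repeatedly without piling up duplicates. The function name `add_export_line` is made up for this example, and whether emhttp really picks up '/etc/exports-' this way is the untested assumption from the post above.

```shell
#!/bin/sh
# add_export_line FILE LINE
# Hypothetical helper: append LINE to FILE unless an identical line exists.
add_export_line() {
    file=$1
    line=$2
    # Make sure the file exists so grep does not fail on a missing path.
    touch "$file"
    # -q: quiet, -x: match the whole line, -F: treat LINE as a fixed string.
    if ! grep -qxF -- "$line" "$file"; then
        printf '%s\n' "$line" >> "$file"
    fi
}
```

A plugin could then call something like `add_export_line /etc/exports- "$my_export_line"` each time a UD share is mounted, instead of rewriting '/etc/exports' itself.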

-

Can this be turned on for testing? How?

SMB3 Multi-Channel
------------------

Samba 4.4.0 adds *experimental* support for SMB3 Multi-Channel. Multi-Channel is an SMB3 protocol feature that allows the client to bind multiple transport connections into one authenticated SMB session. This allows for increased fault tolerance and throughput. The client chooses transport connections as reported by the server and also chooses over which of the bound transport connections to send traffic. I/O operations for a given file handle can span multiple network connections this way.

An SMB multi-channel session will be valid as long as at least one of its channels are up.

In Samba, multi-channel can be enabled by setting the new smb.conf option "server multi channel support" to "yes". It is disabled by default.

Samba has to report interface speeds and some capabilities to the client. On Linux, Samba can auto-detect the speed of an interface. But to support other platforms, and in order to be able to manually override the detected values, the "interfaces" smb.conf option has been given an extended syntax, by which an interface specification can additionally carry speed and capability information. The extended syntax looks like this for setting the speed to 1 gigabit per second:

interfaces = 192.168.1.42;speed=1000000000

This extension should be used with care and are mainly intended for testing. See the smb.conf manual page for details.

CAVEAT: While this should be working without problems mostly, there are still corner cases in the treatment of channel failures that may result in DATA CORRUPTION when these race conditions hit. It is hence

NOT RECOMMENDED TO USE MULTI-CHANNEL IN PRODUCTION

at this stage. This situation can be expected to improve during the life-time of the 4.4 release. Feed-back from test-setups is highly welcome.

On the Settings --> SMB --> SMB Extras page you can add in:

server multi channel support = yes

Hit 'Apply'. You may also then need to stop and start the array for that setting to take effect.

Note: We haven't even experimented with multi channel support yet, and the Samba release notes warn of data corruption in certain rare race conditions.

-

Thanks

Does the Samba 4.4.0 release fix the error with Windows 10 not mounting iso files, so that we no longer need to add max protocol = SMB2_02?

Yes, mounting iso files in Windows 10 should work now without overriding the max protocol value.

-

FYI - Beta 21 has been released.

Here is the announcement and release thread: http://lime-technology.com/forum/index.php?topic=48193.0

-

As a work around, you can stick this in your /boot/config/go file

sed -i -e '/^SRCEXPR=/s/http/https/' /usr/sbin/update-smart-drivedb

The only problem with your sed replacement command is if, in a future release of unRAID, we fix the url in update-smart-drivedb then running it will actually break the url. Same thing would happen if you ran your sed command twice or more right now, it'll generate an additional 's' each time after 'http'. A safe and future proof replacement (aka idempotent) would be to add two separate colons:

sed -i -e '/^SRCEXPR=/s/http:/https:/' /usr/sbin/update-smart-drivedb
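To see the difference between the two substitutions without touching the real script, here is a small sketch that runs each one twice over a sample line. The `SRCEXPR` value and the `example.invalid` URL are placeholders invented for the demonstration, not the actual contents of update-smart-drivedb.

```shell
#!/bin/sh
# The two substitutions from the posts above, applied to stdin instead of a file.
naive() { sed -e '/^SRCEXPR=/s/http/https/'; }
safe()  { sed -e '/^SRCEXPR=/s/http:/https:/'; }

# Placeholder line; the real SRCEXPR value is not shown in this thread.
line='SRCEXPR="http://example.invalid/drivedb.h"'

# Apply each substitution twice, as would happen if the go file ran it
# against a script that had already been patched.
naive_twice=$(printf '%s\n' "$line" | naive | naive)
safe_twice=$(printf '%s\n' "$line" | safe | safe)

printf 'naive twice: %s\n' "$naive_twice"
printf 'safe twice:  %s\n' "$safe_twice"
```

The naive version ends up with `httpss://` after the second pass, while the version that includes the colon leaves an already patched `https:` URL alone, because `http:` no longer matches once the `s` sits between `http` and the colon.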

-

But on an incomplete xml with a required field missing (due to a port number being defined), dockerMan shouldn't allow you to install.

If the port protocol field in the xml is blank, dockerMan leaves a trailing slash after the port mapping and no protocol defined in the docker run command, so the container install fails.

In the previous stable versions it uses the default tcp as the protocol

You know it's a defective xml, don't you? It's not difficult to patch, but the protocol should always be declared.

I agree and I'll patch it, but it won't make beta19.

Well, even if I fixed all the xmls (which I just did), it would continue being an issue for folks who already have the container installed from before because their xmls would not be updated with the new changes unless they remove and reinstall.

Overall, my bad, though. I remember copying an xml from someone else's repo and I used that as a base for all my xmls. That original one must have been missing it and since it never became an issue I kept using it that way.

Thanks

Thanks for reporting, it's fixed in 6.2-Beta20 and the port protocol will now default to tcp when a port protocol isn't specified in the xml.
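The defaulting behaviour can be pictured with a short sketch. This is not dockerMan's actual code (which is not shown in this thread); `port_mapping` is a hypothetical helper that builds the `host:container/protocol` value passed to `docker run -p` and falls back to tcp when the protocol is empty.

```shell
#!/bin/sh
# port_mapping HOST_PORT CONTAINER_PORT [PROTOCOL]
# Hypothetical helper: prints a docker -p style mapping, defaulting to tcp.
port_mapping() {
    host_port=$1
    container_port=$2
    # ${3:-tcp} substitutes "tcp" when the third argument is unset or empty,
    # which avoids emitting a trailing slash with no protocol after it.
    proto=${3:-tcp}
    printf '%s:%s/%s\n' "$host_port" "$container_port" "$proto"
}
```

With a blank protocol field, `port_mapping 8085 8080 ''` yields `8085:8080/tcp` rather than the broken `8085:8080/`.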

-

Thanks for reporting this issue. It was solved in 6.2-Beta19.

-

Both will be patched in the next Beta.

Sorry, I lied. :'( Beta20 only contains the fix for the "cAdvisor template never providing a WebUI link after editing the container details" type issues.

I know what is causing the other, still open, issue regarding the "context menus using old cached values after editing the webUI link" but I need a little bit more time to implement a proper fix for it.

-

Also, my CPU cores are in separate fields now. Before and after pics attached. Is that new?

Yes, it's now shown as "thread pairs" instead of sequentially listing each core, two per row. If you don't have hyperthreading (or have disabled it), you'll see one core per row; otherwise you'll see, on each row, the two threads that physically make up a CPU core.

-

Shouldn't matter if it's a v1 or v2 template, dockerMan won't overwrite /config host path as long as 'xmlTemplate=default' isn't in the browser url.

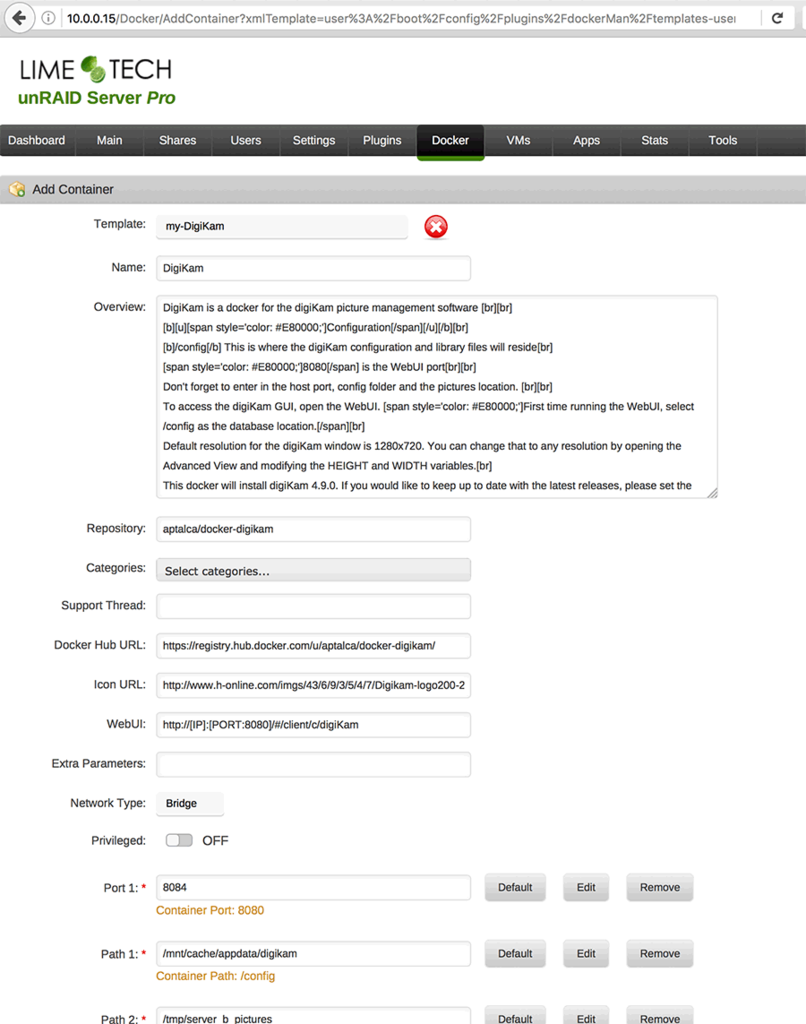

As a test, I took your digikam.xml (v1 user template) and saved it to my server as /boot/config/plugins/dockerMan/templates-user/my-DigiKam.xml

Next, Docker -> Add Container -> choose 'my-DigiKam' from Template dropdown. Turn on Advanced View and /config mapping untouched:

Are you adding user templates a different way? Does your browser URL differ after the host name?

-

Thanks for the defect report Squid!

The only time dockerMan will overwrite /config host mapping automatically is when 'default' is prefixed for the xmlTemplate url argument: (e.g. xmlTemplate=default:/path/to/xml_template)

If you delete a container (that had a custom /config host path) and install that user-template again dockerMan will not modify your original custom /config host path. This is because 'user' is prefixed for the xmlTemplate url argument: (e.g. xmlTemplate=user:/path/to/user_template)

Now I haven't gone full gangbusters and deleted my docker.img yet but I've confirmed the logic and cases above to work as intended which was integrated at least since Beta18.

-

While your description may be correct for some of the users due to that misunderstanding, it is not mine. I've always understood that the port listed in the WebUI entry refers to the container port. What I'm referring to is an actual change to the entry

I think I can easily fix this for the next beta.

It seems like the expectation here is [iP]:[PORT:1234] will take you to http://ip_address:1234 but that's not how the PORT:<port> variable works. For example:

binhex-sabnzbd Docker defaults:

WebUI: http://[iP]:[PORT:8080]/

Container Port 8080 <----> Host port 8080

Now if you want to change the host port to 8085:

Container Port 8080 <----> Host port 8085

At this point the WebUI entry won't need to change, and the WebUI link on the context menu will take you to 8085. That's because the WebUI's [PORT:8080] looks up container port 8080 and then uses the host port mapped to it (8085) for the final link. Essentially "[PORT:8080]" is replaced with "8085".

As a workaround today, you can click 'Check for Updates' to make those WebUI links refresh.

After I fix this bug you may never need to modify the default WebUI url again.
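The substitution described above can be sketched in a few lines of shell. This only illustrates the lookup, it is not the web gui's implementation; `resolve_webui` and the space-separated "container:host" mapping format are assumptions made for the example.

```shell
#!/bin/sh
# resolve_webui TEMPLATE IP MAPPINGS
# Hypothetical helper. MAPPINGS is a space-separated list of
# "container_port:host_port" pairs (a format assumed for this sketch).
resolve_webui() {
    url=$1
    ip=$2
    # Replace the IP placeholder (written [iP] in the posts above).
    url=$(printf '%s' "$url" | sed "s/\[[iI]P]/$ip/")
    # For each mapping, replace [PORT:<container port>] with the host port.
    for pair in $3; do
        cport=${pair%%:*}
        hport=${pair##*:}
        url=$(printf '%s' "$url" | sed "s/\[PORT:$cport]/$hport/")
    done
    printf '%s\n' "$url"
}

# Container port 8080 mapped to host port 8085, as in the example above.
resolve_webui 'http://[iP]:[PORT:8080]/' 192.168.1.10 '8080:8085'
```

Because the placeholder names the container port, changing only the host side of the mapping changes the resulting link without any edit to the WebUI entry.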

Take for instance, cAdvisor (smdion's repository). cAdvisor DOES have a webUI, but the template does not have that element filled out.

So, install cAdvisor with ALL of the defaults. Don't change a thing (except its host port if you have to)

Now go back and edit the container and ADD the appropriate WebUI entry in there ie: http://[iP]:[PORT:8080]/

No amount of checking for updates, etc. will get dockerMan to show the WebUI in the drop down, even though if you re-edit the container, it shows that it's there.

A somewhat related issue is where dockerMan on checking for updates (at least on 6.0/6.1 - haven't checked on 6.2) doesn't bring down any changed environment variables.

The problem here is that cAdvisor's *default* settings are to have no webUI, and that is the setting that dockerMan seems to be always picking up.

Sorry for being long winded and terse, but this issue and variations of it have been kicking around since 6.0 in various threads and this is the first response by LT or gfjardim on it.

(And if you follow the link earlier for another example http://lime-technology.com/forum/index.php?topic=40262.msg380740#msg380740), you'll see that a virgin install of a container worked properly, but aptalca could not get a changed value to work on his own container, without jumping through some hoops)

EDIT: I just toasted my docker.img file, rebooted, and installed cAdvisor and before clicking create added the WebUI entry. No WebUI appeared on the dropdown.

Squid, thanks for the details. Confirmed the issue with the cAdvisor template never providing a WebUI link after editing the container details. I fixed the underlying issue in the web gui code.

The cAdvisor template never providing a WebUI link after editing and, more generally, the context menu keeping the old link value after you edit the WebUI entry and save are actually two separate issues. They're surprisingly similar but live in totally unrelated sections of code. Both will be patched in the next Beta.

-

Hi

After logging in over FTP I'm always getting

[ 369.831674] vsftpd[18787]: segfault at 0 ip 00002aeeb9980e2a sp 00007ffd221ff098 error 4 in libc-2.23.so[2aeeb98e2000+1c0000]

[ 374.350005] vsftpd[18833]: segfault at 0 ip 00002ad7ea42ee2a sp 00007ffeafd81418 error 4 in libc-2.23.so[2ad7ea390000+1c0000]

[ 380.910259] vsftpd[18904]: segfault at 0 ip 00002b7b6d8eee2a sp 00007ffd4bdaaa98 error 4 in libc-2.23.so[2b7b6d850000+1c0000]

[ 396.746897] vsftpd[19151]: segfault at 0 ip 00002b7e89b2ee2a sp 00007fffb6490ca8 error 4 in libc-2.23.so[2b7e89a90000+1c0000]

[ 399.897735] vsftpd[19181]: segfault at 0 ip 00002b58c40e4e2a sp 00007ffe83003b78 error 4 in libc-2.23.so[2b58c4046000+1c0000]

The same happened in b18. @limetech @jnop, please try to connect yourself and download/upload a bigger file. (thx)

I haven't been able to get it to crash yet. Transferred a 3GB file in both directions to the cache folder. And then again to user0, both directions. No errors or segfaults.

All I can think of is some installed plugin might have downgraded a package (e.g. openssl, libcap) that vsftpd was dependent on? Please submit diagnostics here (or via the webgui's feedback system).

-

sata works

Perfect! The real bug you helped find (Thanks!) was that the OS install bus type is supposed to be 'SATA' when the machine type is Q35; otherwise it should be 'IDE' by default.

-

is this telling me that ipv6 is enabled ?

Mar 12 22:32:01 Unraid-Nas dnsmasq[7520]: compile time options: IPv6 GNU-getopt no-DBus i18n IDN DHCP DHCPv6 no-Lua TFTP no-conntrack ipset auth no-DNSSEC loop-detect inotify

No IPv6 in unRAID, yet

... that stock dnsmasq package we use was compiled by a slackware developer who had IPv6 support on their build machine.

-

Spinning up an openSUSE VM from the template, I had to change

OS Install CDRom Bus:

to virtio from ide or it errored out about an unsupported controller.

Can you try 'SATA' for the OS Install CDRom Bus for an openSUSE VM? Also, which version of openSUSE are you using?

-

Before I attempt to convert my two unassigned SSD/zfs pool drives to a cache pool - are we allowed to set the raid mode and have it stick? I want to combine the two 240G drives into a single unprotected 480G pool.

Thanks

Myk

Yes, you can post-configure your cache pool as raid0.

After assigning both SSDs to your cache pool and starting the array, you can click on the first Cache disk and Balance with the following options for raid0:

-dconvert=raid0 -mconvert=raid0

-

root@localhost:# /usr/local/emhttp/plugins/dynamix.docker.manager/scripts/docker run -d --name="CrashPlan" --net="host" --privileged="true" -e TZ="Europe/Berlin" -e HOST_OS="unRAID" -v "/boot/custom/crashplan_notify.sh":"/etc/service/notify/run":rw -v "/mnt/cache/.config/crashplan":"/config":rw -v "/mnt/user/":"/data":rw --cpuset=3 gfjardim/crashplan

flag provided but not defined: --cpuset

See '/usr/bin/docker run --help'.

The command failed.

I removed the --cpuset command and am trying again.. I missed that it saved that in the advanced view..

Its back now...

I was attempting to try and give the crashplan docker more, or more dedicated resources, I'll hold off on that till the beta has quieted down. Thanks for the quick help !

Instead of --cpuset it is now --cpuset-cpus
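For anyone with the old flag saved in a container's extra parameters, a minimal sketch of a rewrite filter follows. The name `migrate_cpuset` is made up for this example, and it only handles the `--cpuset=<value>` form shown in the command above.

```shell
#!/bin/sh
# migrate_cpuset: read docker arguments on stdin and rename the old flag.
# Running it again is harmless, because "--cpuset-cpus=" does not contain
# the string "--cpuset=".
migrate_cpuset() { sed -e 's/--cpuset=/--cpuset-cpus=/g'; }

printf '%s\n' '--privileged="true" --cpuset=3' | migrate_cpuset
```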

-

What about the idea to have a setting to select either single or dual parity operation?

This would also suppress a non-present second parity disk when not used.

Attached some examples

I was actually going to automatically hide Parity2 (and Parity) if they are unassigned instead of looking like they're invalid. It's something on the todo list that didn't make it in to this beta.

-

I'm going to remove the LT plugin repository from CA altogether.

While CA doesn't have an issue with blacklisting the LT version and preventing it from displaying if it's not already installed, CA is having trouble distinguishing between the two versions under the Previously Installed and Installed sections (and that will become more important during the next update of CA).

Should LT create another plugin in the future, then the plugin for the nonGUI version will either have to be renamed to something else (and blacklisted again), or deleted altogether from the repository.

Thanks.

That'll work, I appreciate your dedication in maintaining CA and the repos/index involved.

Technically my Nerd Pack wasn't under Lime Tech but rather just a handful of tools I and others felt were useful to have available. Some of those packages I put together myself because there weren't versions for Slackware.

Do you have a test system ready for an upcoming unRAID 6.2 beta? [emoji3]

-

On second thought, with the GUI present, would it be more prudent for me to remove the LT plugin from CA once dmacias accepts the PR's I've got on his template?

Fantastic! I wanted to build something like that eventually since our list of packages keeps growing. Nice work!

Are you open to collaboration and merging your work in to the existing Nerdpack repo/plugin?

I thought about this more, reviewed dmacias' awesome GUI and code in detail and decided to pop the question. Will you merge me? He said yes!

So, Nerd Pack + Nerd Pack GUI will become just one plugin and all will move over to dmacias' repo.

Squid, please remove my Nerd Pack plugin from CA when you get a chance. I'll make one more update to my plg, updating the repo URL, so future updates will come from dmacias' repo instead of mine.

I will continue to update code and add new/updated packages to Nerd Pack for dmacias' repo going forward.

-

First post is not current, doesn't mention addition of perl.

First post updated, thanks!

-

On second thought, with the GUI present, would it be more prudent for me to remove the LT plugin from CA once dmacias accepts the PR's I've got on his template?

Fantastic! I wanted to build something like that eventually since our list of packages keeps growing. Nice work!

Are you open to collaboration and merging your work in to the existing Nerdpack repo/plugin?

Maybe... It'd be nice to just have one plugin for this (I don't mind where the plg lives - either my repo or dmacias') but I'm trying to figure out the best way to upgrade everyone that currently has Nerdpack installed. I figured a majority of folks installed my plugin outside of community apps (since you just added plugin support).

unRAID Server Release 6.2.0-beta21 Available

in Announcements

Posted

Not sure what time your backup starts, but I noticed in your logs that either the network was unplugged or the switch/router that's connected to your unRAID box was reset: