segator

Members-

Posts

175 -

Joined

-

Last visited

Converted

-

Gender

Undisclosed


segator's Achievements

Apprentice (3/14)

7

Reputation

-

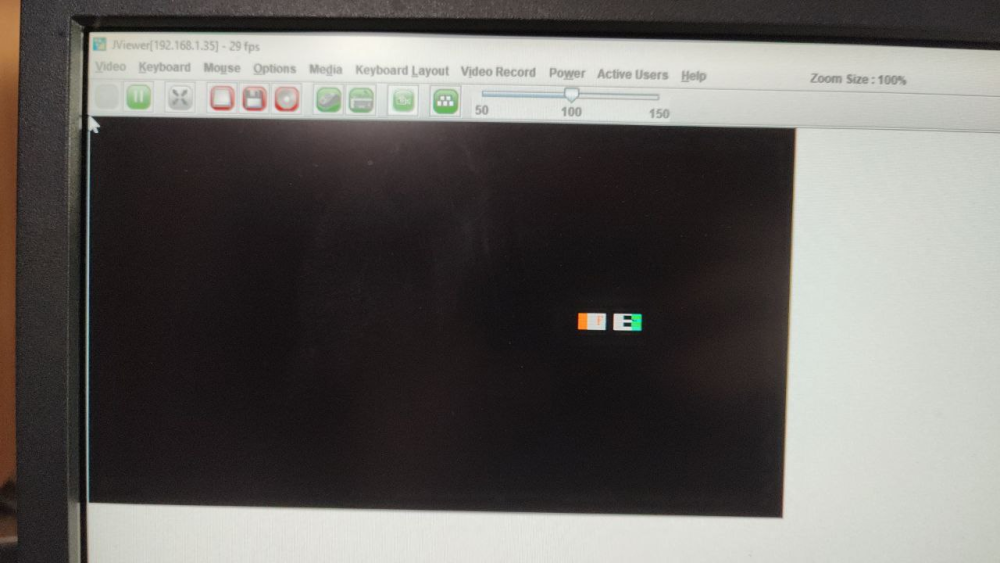

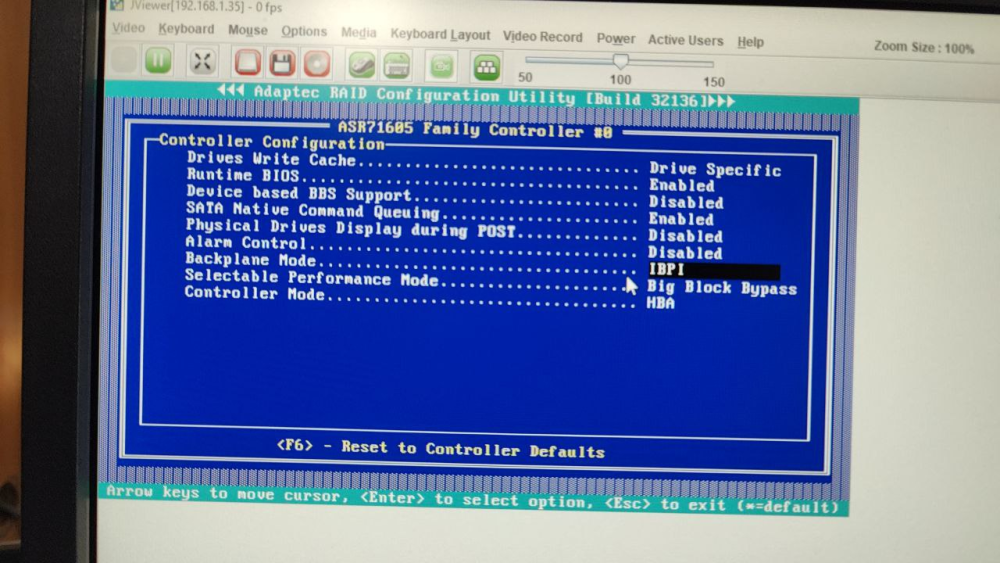

I connected the new card to the motherboard and I can see the drives listed during POST. I can even get into the Adaptec BIOS and change the configuration, but as soon as I try to continue past POST, the system gets stuck on this screen. It stays there forever and does not respond to the keyboard. Any ideas?

I have another 2x LSI HBAs connected to the motherboard, both connected to 2 backplanes, each with 3 miniSAS connections, for a total of 6 miniSAS connections. Is the card broken, or do I just not know how to configure or connect it properly?

The card got extremely hot, by the way, so I am planning to add a 40mm fan to the heatsink. I have ruled heat out as the cause, because I tried booting the server cold several times and always got the same weird-symbol screen.

-

It's just that I don't have another 20TB disk available to migrate the data, and I didn't expect to have to buy another one; HDDs are expensive in Europe. I think a better solution would be to stop using Unraid. I can get an "Unraid-like" setup using SnapRAID + mergerfs, and that solution has no "Unraid requirements" for the partition layout, so it will just work. Anyway, thanks for trying to help!

-

Is there really nothing we can do? If I need to format them, I would have to restore 40TB from backup, which I am not happy about. Is there any technical reason why Unraid is so picky about the partition layout? The disk works and I can mount the partition by hand with mount -t xfs ...

-

What I don't understand: I shucked a USB drive that was part of the Unraid array, and now I get this partition layout issue. What changed? Does the USB controller do something to the hard drive? I checked, and the partition starts at sector 64.

-

Could we find out what the "Unraid requirements" are? I have the same problem, and mounting by hand with the mount command works. Maybe we can fix the partition layout so it matches Unraid's requirements, but the logs say very little.

-

I shucked two 20TB USB hard disks that were previously connected to the array and working, and they are now connected via SATA like the other disks. When starting Unraid I get the mount error: Unsupported partition layout.

I noticed that if I mount by hand in the shell with mount -t xfs /dev/sdt I can access the file system. I also tried using the Unraid device, mount -t xfs /dev/md18 /dev/sdt, and that works as well.

sgdisk shows:

    sgdisk -v /dev/sdt
    Caution: Partition 1 doesn't end on a 64-sector boundary. This may result in problems with some disk encryption tools.
    No problems found. 65566 free sectors (32.0 MiB) available in 2 segments, the largest of which is 65536 (32.0 MiB) in size.

I'm not sure what else I can check. I could restore from backup, but 40TB is going to take a good while, so I'd rather avoid it if I can. This seems like a bug in Unraid, since I can mount the disk by hand and it works.
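To make the sgdisk caution above concrete, here is a minimal Python sketch of the boundary arithmetic. It assumes the end check is applied to the sector following the partition's last sector, and the function name and sample sector numbers are hypothetical. It only illustrates the 64-sector alignment idea; it does not reproduce whatever checks Unraid itself performs.

```python
from typing import Dict, Union


def partition_report(first_sector: int, last_sector: int,
                     boundary: int = 64) -> Dict[str, Union[bool, int]]:
    """Report whether a partition starts and ends on a sector boundary.

    Assumption: the end is considered aligned when the sector right
    after the partition (last_sector + 1) is a multiple of `boundary`.
    """
    if last_sector < first_sector:
        raise ValueError("last_sector must not be smaller than first_sector")
    end_exclusive = last_sector + 1
    return {
        "start_aligned": first_sector % boundary == 0,
        "end_aligned": end_exclusive % boundary == 0,
        "length_sectors": end_exclusive - first_sector,
    }
```

The real first and last sector of a partition can be read with sgdisk -p /dev/sdX and passed to this function; a start of 64 with an end that is not on the boundary would match the situation described in the post.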

-

How can I automatically unlock encrypted datasets? If I unlock them manually after everything has started, Docker containers that use the encrypted volume won't see the unlocked volume. Before 6.12 I used the ZFS properties keylocation and keyformat to point to a file containing the encryption passphrase, but that no longer seems to work since 6.12.
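For reference, the keylocation approach described above boils down to three zfs commands per dataset. The sketch below only builds those command lines so they can be reviewed or run from a startup script; the dataset name and key file path in the usage note are hypothetical, and whether running them early enough solves the 6.12 behaviour is an assumption, not something confirmed here.

```python
from pathlib import PurePosixPath
from typing import List


def unlock_commands(dataset: str, key_file: str) -> List[List[str]]:
    """Build the zfs commands to point a dataset at a key file and unlock it.

    The commands are returned, not executed, so they can be inspected first.
    """
    key_path = PurePosixPath(key_file)
    if not key_path.is_absolute():
        # keylocation=file:// needs an absolute path
        raise ValueError("key_file must be an absolute path")
    return [
        # Tell ZFS where the passphrase/key lives
        ["zfs", "set", f"keylocation=file://{key_path}", dataset],
        # Load the key from keylocation
        ["zfs", "load-key", dataset],
        # Mount the now-unlocked dataset
        ["zfs", "mount", dataset],
    ]
```

For example, unlock_commands("pool/secure", "/root/keyfile") yields a list that could be passed one by one to subprocess.run(cmd, check=True). The commands would need to run before the Docker service starts for containers to see the mounted dataset.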

-

Hey, I was able to pass through a single iGPU to an Ubuntu 22 VM in such a way that I can use it to transcode with Plex or ffmpeg, and I can see GPU stats with radeontop as well. But as soon as the VM starts up I get a black screen, so I cannot use it as a desktop; I cannot even see the TianoCore BIOS boot screen. Also, if I force a shutdown of the VM, the host becomes unstable and I need to fully reboot the host to make it work again; a soft reboot is no problem.

I hope you can help me. I have tried almost everything I found on the internet.

Host BIOS:

    CSM: Enabled
    IOMMU: Enabled
    SR-IOV: tried Enabled/Disabled
    4G Decoder: Enabled
    Dynamic BAR: tried Enabled/Disabled
    iGPU VRAM: tried static 1G, 4G or auto

Unraid boot:

    append initrd=/bzroot rcu_nocbs=0-15 initcall_blacklist=sysfb_init video=efifb:off,vesafb:off nofb vfio-pci.ids=1002:1637,1002:1638,1022:15df mitigations=off

IOMMU groups:

    IOMMU group 0: [1022:1632] 00:01.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Renoir PCIe Dummy Host Bridge
    IOMMU group 1: [1022:1634] 00:01.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge
    IOMMU group 2: [1022:1634] 00:01.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge
    IOMMU group 3: [1022:1632] 00:02.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Renoir PCIe Dummy Host Bridge
    IOMMU group 4: [1022:1634] 00:02.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge
    IOMMU group 5: [1022:1634] 00:02.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge
    IOMMU group 6: [1022:1634] 00:02.3 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne PCIe GPP Bridge
    IOMMU group 7: [1022:1632] 00:08.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Renoir PCIe Dummy Host Bridge
    IOMMU group 8: [1022:1635] 00:08.1 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir Internal PCIe GPP Bridge to Bus
    IOMMU group 9: [1022:1635] 00:08.2 PCI bridge: Advanced Micro Devices, Inc. [AMD] Renoir Internal PCIe GPP Bridge to Bus
    IOMMU group 10: [1022:790b] 00:14.0 SMBus: Advanced Micro Devices, Inc. [AMD] FCH SMBus Controller (rev 51)
        [1022:790e] 00:14.3 ISA bridge: Advanced Micro Devices, Inc. [AMD] FCH LPC Bridge (rev 51)
    IOMMU group 11: [1022:166a] 00:18.0 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 0
        [1022:166b] 00:18.1 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 1
        [1022:166c] 00:18.2 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 2
        [1022:166d] 00:18.3 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 3
        [1022:166e] 00:18.4 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 4
        [1022:166f] 00:18.5 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 5
        [1022:1670] 00:18.6 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 6
        [1022:1671] 00:18.7 Host bridge: Advanced Micro Devices, Inc. [AMD] Cezanne Data Fabric; Function 7
    IOMMU group 12: [c0a9:540a] 01:00.0 Non-Volatile memory controller: Micron/Crucial Technology P2 NVMe PCIe SSD (rev 01)
        [N:0:1:1] disk CT1000P3SSD8__1 /dev/nvme0n1 1.00TB
    IOMMU group 13: [8086:125c] 02:00.0 Ethernet controller: Intel Corporation Ethernet Controller I226-V (rev 04)
    IOMMU group 14: [8086:125c] 03:00.0 Ethernet controller: Intel Corporation Ethernet Controller I226-V (rev 04)
    IOMMU group 15: [8086:125c] 04:00.0 Ethernet controller: Intel Corporation Ethernet Controller I226-V (rev 04)
    IOMMU group 16: [8086:125c] 05:00.0 Ethernet controller: Intel Corporation Ethernet Controller I226-V (rev 04)
    IOMMU group 17: [1002:1638] 06:00.0 VGA compatible controller: Advanced Micro Devices, Inc. [AMD/ATI] Cezanne [Radeon Vega Series / Radeon Vega Mobile Series] (rev c1)
    IOMMU group 18: [1002:1637] 06:00.1 Audio device: Advanced Micro Devices, Inc. [AMD/ATI] Renoir Radeon High Definition Audio Controller
    IOMMU group 19: [1022:15df] 06:00.2 Encryption controller: Advanced Micro Devices, Inc. [AMD] Family 17h (Models 10h-1fh) Platform Security Processor
    IOMMU group 20: [1022:1639] 06:00.3 USB controller: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne USB 3.1
        Bus 001 Device 001 Port 1-0 ID 1d6b:0002 Linux Foundation 2.0 root hub
        Bus 001 Device 002 Port 1-3 ID 0781:5567 SanDisk Corp. Cruzer Blade
        Bus 002 Device 001 Port 2-0 ID 1d6b:0003 Linux Foundation 3.0 root hub
        Bus 002 Device 002 Port 2-2 ID 13b1:0041 Linksys Gigabit Ethernet Adapter
    IOMMU group 21: [1022:1639] 06:00.4 USB controller: Advanced Micro Devices, Inc. [AMD] Renoir/Cezanne USB 3.1
        Bus 003 Device 001 Port 3-0 ID 1d6b:0002 Linux Foundation 2.0 root hub
        Bus 003 Device 002 Port 3-3 ID 0bda:0129 Realtek Semiconductor Corp. RTS5129 Card Reader Controller
        Bus 004 Device 001 Port 4-0 ID 1d6b:0003 Linux Foundation 3.0 root hub
    IOMMU group 22: [1022:15e2] 06:00.5 Multimedia controller: Advanced Micro Devices, Inc. [AMD] ACP/ACP3X/ACP6x Audio Coprocessor (rev 01)
    IOMMU group 23: [1022:15e3] 06:00.6 Audio device: Advanced Micro Devices, Inc. [AMD] Family 17h/19h HD Audio Controller
    IOMMU group 24: [1022:15e4] 06:00.7 Signal processing controller: Advanced Micro Devices, Inc. [AMD] Sensor Fusion Hub
    IOMMU group 25: [1022:7901] 07:00.0 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 81)
    IOMMU group 26: [1022:7901] 07:00.1 SATA controller: Advanced Micro Devices, Inc. [AMD] FCH SATA Controller [AHCI mode] (rev 81)
        [3:0:0:0] disk ATA Crucial_CT240M50 MU03 /dev/sdb 240GB

As you can see, the GPU video and GPU audio functions are split into different IOMMU groups, with no ACS override and no unsafe interrupts enabled.

VM virsh XML (I tried passing only the GPU, GPU + audio, and GPU + audio + PSP):

    <?xml version='1.0' encoding='UTF-8'?>
    <domain type='kvm' id='4'>
      <name>Linux3</name>
      <uuid>b03e5f72-8528-9b18-afd0-609b97495dcb</uuid>
      <metadata>
        <vmtemplate xmlns="unraid" name="Linux" icon="linux.png" os="linux"/>
      </metadata>
      <memory unit='KiB'>8388608</memory>
      <currentMemory unit='KiB'>8388608</currentMemory>
      <memoryBacking>
        <nosharepages/>
      </memoryBacking>
      <vcpu placement='static'>16</vcpu>
      <cputune>
        <vcpupin vcpu='0' cpuset='0'/>
        <vcpupin vcpu='1' cpuset='1'/>
        <vcpupin vcpu='2' cpuset='2'/>
        <vcpupin vcpu='3' cpuset='3'/>
        <vcpupin vcpu='4' cpuset='4'/>
        <vcpupin vcpu='5' cpuset='5'/>
        <vcpupin vcpu='6' cpuset='6'/>
        <vcpupin vcpu='7' cpuset='7'/>
        <vcpupin vcpu='8' cpuset='8'/>
        <vcpupin vcpu='9' cpuset='9'/>
        <vcpupin vcpu='10' cpuset='10'/>
        <vcpupin vcpu='11' cpuset='11'/>
        <vcpupin vcpu='12' cpuset='12'/>
        <vcpupin vcpu='13' cpuset='13'/>
        <vcpupin vcpu='14' cpuset='14'/>
        <vcpupin vcpu='15' cpuset='15'/>
      </cputune>
      <resource>
        <partition>/machine</partition>
      </resource>
      <os>
        <type arch='x86_64' machine='pc-q35-7.1'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
        <nvram>/etc/libvirt/qemu/nvram/b03e5f72-8528-9b18-afd0-609b97495dcb_VARS-pure-efi.fd</nvram>
      </os>
      <features>
        <acpi/>
        <apic/>
      </features>
      <cpu mode='host-passthrough' check='none' migratable='on'>
        <topology sockets='1' dies='1' cores='8' threads='2'/>
        <cache mode='passthrough'/>
        <feature policy='require' name='topoext'/>
      </cpu>
      <clock offset='utc'>
        <timer name='rtc' tickpolicy='catchup'/>
        <timer name='pit' tickpolicy='delay'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <devices>
        <emulator>/usr/local/sbin/qemu</emulator>
        <disk type='file' device='disk'>
          <driver name='qemu' type='qcow2' cache='writeback'/>
          <source file='/mnt/user/domains/Linux3/vdisk1.img' index='1'/>
          <backingStore/>
          <target dev='hdc' bus='virtio'/>
          <serial>vdisk1</serial>
          <boot order='1'/>
          <alias name='virtio-disk2'/>
          <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
        </disk>
        <controller type='usb' index='0' model='ich9-ehci1'>
          <alias name='usb'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci1'>
          <alias name='usb'/>
          <master startport='0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci2'>
          <alias name='usb'/>
          <master startport='2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci3'>
          <alias name='usb'/>
          <master startport='4'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
        </controller>
        <controller type='sata' index='0'>
          <alias name='ide'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/>
        </controller>
        <controller type='pci' index='0' model='pcie-root'>
          <alias name='pcie.0'/>
        </controller>
        <controller type='pci' index='1' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='1' port='0x8'/>
          <alias name='pci.1'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0' multifunction='on'/>
        </controller>
        <controller type='pci' index='2' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='2' port='0x9'/>
          <alias name='pci.2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
        </controller>
        <controller type='pci' index='3' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='3' port='0xa'/>
          <alias name='pci.3'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x2'/>
        </controller>
        <controller type='pci' index='4' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='4' port='0xb'/>
          <alias name='pci.4'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x3'/>
        </controller>
        <controller type='pci' index='5' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='5' port='0xc'/>
          <alias name='pci.5'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x4'/>
        </controller>
        <controller type='pci' index='6' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='6' port='0xd'/>
          <alias name='pci.6'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x5'/>
        </controller>
        <controller type='pci' index='7' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='7' port='0xe'/>
          <alias name='pci.7'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x6'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <alias name='virtio-serial0'/>
          <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
        </controller>
        <interface type='bridge'>
          <mac address='52:54:00:c2:60:a2'/>
          <source bridge='br0'/>
          <target dev='vnet3'/>
          <model type='virtio-net'/>
          <alias name='net0'/>
          <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
        </interface>
        <serial type='pty'>
          <source path='/dev/pts/1'/>
          <target type='isa-serial' port='0'>
            <model name='isa-serial'/>
          </target>
          <alias name='serial0'/>
        </serial>
        <console type='pty' tty='/dev/pts/1'>
          <source path='/dev/pts/1'/>
          <target type='serial' port='0'/>
          <alias name='serial0'/>
        </console>
        <channel type='unix'>
          <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-4-Linux3/org.qemu.guest_agent.0'/>
          <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/>
          <alias name='channel0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <input type='mouse' bus='ps2'>
          <alias name='input0'/>
        </input>
        <input type='keyboard' bus='ps2'>
          <alias name='input1'/>
        </input>
        <audio id='1' type='none'/>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x06' slot='0x00' function='0x0'/>
          </source>
          <alias name='hostdev0'/>
          <rom file='/mnt/user/isos/vbios/vbios_1002_1638_1.rom'/>
          <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x0' multifunction='on'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x06' slot='0x00' function='0x1'/>
          </source>
          <alias name='hostdev1'/>
          <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x1'/>
        </hostdev>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x06' slot='0x00' function='0x2'/>
          </source>
          <alias name='hostdev2'/>
          <address type='pci' domain='0x0000' bus='0x06' slot='0x00' function='0x2'/>
        </hostdev>
        <memballoon model='none'/>
      </devices>
      <seclabel type='dynamic' model='dac' relabel='yes'>
        <label>+0:+100</label>
        <imagelabel>+0:+100</imagelabel>
      </seclabel>
    </domain>

VM dmesg regarding amdgpu:

    [ 3.089099] amdgpu: ATOM BIOS: 113-CEZANNE-021
    [ 3.092861] [drm] VCN decode is enabled in VM mode
    [ 3.092865] [drm] VCN encode is enabled in VM mode
    [ 3.092868] [drm] JPEG decode is enabled in VM mode
    [ 3.092934] amdgpu 0000:05:00.0: vgaarb: deactivate vga console
    [ 3.092938] amdgpu 0000:05:00.0: amdgpu: Trusted Memory Zone (TMZ) feature enabled
    [ 3.092971] amdgpu 0000:05:00.0: amdgpu: PCIE atomic ops is not supported
    [ 3.092988] amdgpu 0000:05:00.0: amdgpu: MODE2 reset
    [ 3.093102] [drm] vm size is 262144 GB, 4 levels, block size is 9-bit, fragment size is 9-bit
    [ 3.093119] amdgpu 0000:05:00.0: amdgpu: VRAM: 4096M 0x000000F400000000 - 0x000000F4FFFFFFFF (4096M used)
    [ 3.093122] amdgpu 0000:05:00.0: amdgpu: GART: 1024M 0x0000000000000000 - 0x000000003FFFFFFF
    [ 3.093125] amdgpu 0000:05:00.0: amdgpu: AGP: 267419648M 0x000000F800000000 - 0x0000FFFFFFFFFFFF
    [ 3.093139] [drm] Detected VRAM RAM=4096M, BAR=256M
    [ 3.093141] [drm] RAM width 128bits DDR4
    [ 3.093219] [drm] amdgpu: 4096M of VRAM memory ready
    [ 3.093222] [drm] amdgpu: 3072M of GTT memory ready.
    [ 3.093246] [drm] GART: num cpu pages 262144, num gpu pages 262144
    [ 3.093383] [drm] PCIE GART of 1024M enabled.
    [ 3.093386] [drm] PTB located at 0x000000F400A00000
    [ 3.103198] amdgpu 0000:05:00.0: amdgpu: PSP runtime database doesn't exist
    [ 3.103202] amdgpu 0000:05:00.0: amdgpu: PSP runtime database doesn't exist
    [ 3.108457] [drm] Loading DMUB firmware via PSP: version=0x01010024
    [ 3.116187] [drm] Found VCN firmware Version ENC: 1.19 DEC: 5 VEP: 0 Revision: 0
    [ 3.116198] amdgpu 0000:05:00.0: amdgpu: Will use PSP to load VCN firmware
    [ 3.749806] [drm] reserve 0x400000 from 0xf4ff800000 for PSP TMR
    [ 3.839219] amdgpu 0000:05:00.0: amdgpu: RAS: optional ras ta ucode is not available
    [ 3.851019] amdgpu 0000:05:00.0: amdgpu: RAP: optional rap ta ucode is not available
    [ 3.851023] amdgpu 0000:05:00.0: amdgpu: SECUREDISPLAY: securedisplay ta ucode is not available
    [ 3.851418] amdgpu 0000:05:00.0: amdgpu: SMU is initialized successfully!
    [ 3.851664] [drm] Unsupported Connector type:21!
    [ 3.851666] [drm] Unsupported Connector type:21!
    [ 3.851667] [drm] Unsupported Connector type:21!
    [ 3.851668] [drm] Unsupported Connector type:21!
    [ 3.851669] [drm] Unsupported Connector type:21!

I am suspicious of this "Unsupported Connector type:21" message.

BTW, for those who cannot use the SpaceInvader script to extract the vBIOS because their GPU is integrated: I was able to extract it from a BIOS update file from the motherboard vendor using https://github.com/coderobe/VBiosFinder. As soon as I used that vBIOS I got the encoder working, but not the display yet. If you cannot help me, I hope I was at least able to help you make your iGPU usable for Plex transcoding.
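As a side note on the IOMMU listing in this post, the grouping can also be read directly from sysfs on the host. The following is a minimal Python sketch, assuming the standard Linux layout /sys/kernel/iommu_groups/<group>/devices/<pci-address>; the function names are hypothetical and it only reports grouping, it does not diagnose the black screen.

```python
from pathlib import Path
from typing import Dict, List


def iommu_groups(root: str = "/sys/kernel/iommu_groups") -> Dict[int, List[str]]:
    """Map each IOMMU group number to the PCI addresses it contains."""
    groups: Dict[int, List[str]] = {}
    base = Path(root)
    if not base.is_dir():
        # IOMMU disabled or not a Linux host: nothing to report
        return groups
    for group_dir in base.iterdir():
        if not group_dir.name.isdigit():
            continue
        devices_dir = group_dir / "devices"
        devices = (
            sorted(entry.name for entry in devices_dir.iterdir())
            if devices_dir.is_dir()
            else []
        )
        groups[int(group_dir.name)] = devices
    return groups


def shares_group(groups: Dict[int, List[str]], dev_a: str, dev_b: str) -> bool:
    """Return True if both PCI addresses sit in the same IOMMU group."""
    return any(dev_a in devs and dev_b in devs for devs in groups.values())
```

On a host like the one in this post, shares_group(iommu_groups(), "0000:06:00.0", "0000:06:00.1") would be expected to return False, since the VGA and audio functions are listed in groups 17 and 18.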

-

segator started following Running Unraid 6.11.1 as VM in Unraid 6.11.1

-

I upgraded the host Unraid to 6.11.1 and the guest VM to 6.11.1. The virtual disks are not shown in the Unraid UI, but they are present in lsblk. The virtual disks are attached with the "virtio" driver using the Unraid VM Manager. The log shows emhttpd: device /dev/vda problem getting id every second. I tried with Q35-5.2 (the one I had before the upgrade) and Q35-7.1, and the same versions for i440fx. Seems like a bug.

-

The fix is to install perl and reboot! Installing perl alone was not enough for me.

-

This does not seem to work on the latest Unraid OS, 6.10.3:

    znapzend --debug --logto=/var/log/znapzend.log --daemonize
    /usr/bin/perl: /lib64/libc.so.6: version `GLIBC_2.34' not found (required by /usr/bin/perl)
    /usr/bin/perl: /lib64/libc.so.6: version `GLIBC_2.34' not found (required by /usr/lib64/perl5/CORE/libperl.so)
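The error above means the perl binary was built against a newer glibc than the one the system provides. As a rough illustration, this Python sketch pulls the required GLIBC versions out of such error text and compares them with an installed version; the function names are hypothetical, and the installed version has to be supplied by the reader (for example from ldd --version), since it is not stated in the post.

```python
import re
from typing import List, Tuple

# Matches loader errors such as: version `GLIBC_2.34' not found
_GLIBC_RE = re.compile(r"version `GLIBC_(\d+(?:\.\d+)*)' not found")


def required_glibc_versions(error_text: str) -> List[Tuple[int, ...]]:
    """Return the distinct GLIBC versions the error text asks for, sorted."""
    found = {
        tuple(int(part) for part in match.group(1).split("."))
        for match in _GLIBC_RE.finditer(error_text)
    }
    return sorted(found)


def is_satisfied(installed: Tuple[int, ...], required: Tuple[int, ...]) -> bool:
    """True if the installed glibc version is at least the required one."""
    return installed >= required
```

Feeding the two error lines from this post into required_glibc_versions gives a single requirement, GLIBC 2.34, which can then be checked against the installed version with is_satisfied.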

-

USB Hard disk discconections and Dev names changes

segator replied to segator's topic in General Support

Got it, I just disabled spindown in the Unraid UI. I will let you know if the issue keeps happening. Anyway, I have other USB HDDs that can be spun down with no problems so far, and they are plugged into the same USB controller.

-

USB Hard disk discconections and Dev names changes

segator replied to segator's topic in General Support

OK, I will try disabling spindown. hdparm -S0 /dev/sdx will be enough, right?

-

USB Hard disk discconections and Dev names changes

segator replied to segator's topic in General Support

segator-unraid-diagnostics-20220217-2035.zip

The affected disk is disk28.