cfloener

Everything posted by cfloener

-

Best Way To Install SiliconDust HDHR DVR Software on UnRAID

cfloener replied to bungee91's topic in Plugin System

I have been using the Docker without any problems, and it has very little impact on my system. I did a spin-off of the Docker so that I can keep it up to date, though at this point the Docker is not missing anything big. If you would like to install my Docker, PM me and I will send you a link to try.

Anyone working on the Plex DVR docker yet?

-

Maybe run VMware nested as VM in Unraid vs in Windows 10? https://lime-technology.com/forum/index.php?topic=47408.msg453745#msg453745

-

I was not passing the audio through to the VM. I have added it and it seems to have resolved the problem, but I am still testing. Can you post how you are passing the ROM through?

-

OK, I ran some tests: if I remove the AMD video card from the VM, it will start without crashing the whole system. When I add the card back to the VM, it will not display on the monitor connected to this GPU until I reboot after the hard lock. Rebooting the VM does nothing (no video from the GPU and no crash), but as soon as I shut down the VM and restart it, the whole thing locks up.

-

I set up the VM without any mods to the XML file, and I have not tried pulling the GPU from the VM but will try tonight. Here is the XML from the VM:

    <domain type='kvm' id='1'>
      <name>BlurIris</name>
      <uuid>60f55ffd-3524-7c0a-6cac-020dfe7f1e6f</uuid>
      <metadata>
        <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
      </metadata>
      <memory unit='KiB'>6291456</memory>
      <currentMemory unit='KiB'>6291456</currentMemory>
      <memoryBacking>
        <nosharepages/>
        <locked/>
      </memoryBacking>
      <vcpu placement='static'>4</vcpu>
      <cputune>
        <vcpupin vcpu='0' cpuset='4'/>
        <vcpupin vcpu='1' cpuset='5'/>
        <vcpupin vcpu='2' cpuset='6'/>
        <vcpupin vcpu='3' cpuset='7'/>
      </cputune>
      <resource>
        <partition>/machine</partition>
      </resource>
      <os>
        <type arch='x86_64' machine='pc-i440fx-2.5'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
        <nvram>/etc/libvirt/qemu/nvram/60f55ffd-3524-7c0a-6cac-020dfe7f1e6f_VARS-pure-efi.fd</nvram>
      </os>
      <features>
        <acpi/>
        <apic/>
        <hyperv>
          <relaxed state='on'/>
          <vapic state='on'/>
          <spinlocks state='on' retries='8191'/>
          <vendor id='none'/>
        </hyperv>
      </features>
      <cpu mode='host-passthrough'>
        <topology sockets='1' cores='2' threads='2'/>
      </cpu>
      <clock offset='localtime'>
        <timer name='hypervclock' present='yes'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <devices>
        <emulator>/usr/local/sbin/qemu</emulator>
        <disk type='file' device='disk'>
          <driver name='qemu' type='raw' cache='writeback'/>
          <source file='/mnt/user/domains/BlurIris/vdisk1.img'/>
          <backingStore/>
          <target dev='hdc' bus='virtio'/>
          <boot order='1'/>
          <alias name='virtio-disk2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x04' function='0x0'/>
        </disk>
        <disk type='file' device='disk'>
          <driver name='qemu' type='raw' cache='writeback'/>
          <source file='/mnt/disks/WDC_WD7500AZEX_00RKKA0_WD_WMC1S4359145/BlueIris_Recordings/vdisk3.img'/>
          <backingStore/>
          <target dev='hdd' bus='virtio'/>
          <alias name='virtio-disk3'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x05' function='0x0'/>
        </disk>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <source file='/mnt/user/ISO/Windows10.iso'/>
          <backingStore/>
          <target dev='hda' bus='ide'/>
          <readonly/>
          <boot order='2'/>
          <alias name='ide0-0-0'/>
          <address type='drive' controller='0' bus='0' target='0' unit='0'/>
        </disk>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <source file='/mnt/user/ISO/virtio-win-0.1.118-2.iso'/>
          <backingStore/>
          <target dev='hdb' bus='ide'/>
          <readonly/>
          <alias name='ide0-0-1'/>
          <address type='drive' controller='0' bus='0' target='0' unit='1'/>
        </disk>
        <controller type='usb' index='0' model='ich9-ehci1'>
          <alias name='usb'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci1'>
          <alias name='usb'/>
          <master startport='0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci2'>
          <alias name='usb'/>
          <master startport='2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci3'>
          <alias name='usb'/>
          <master startport='4'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
        </controller>
        <controller type='pci' index='0' model='pci-root'>
          <alias name='pci.0'/>
        </controller>
        <controller type='ide' index='0'>
          <alias name='ide'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x1'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <alias name='virtio-serial0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x03' function='0x0'/>
        </controller>
        <interface type='bridge'>
          <mac address='52:54:00:21:1f:33'/>
          <source bridge='br0'/>
          <target dev='vnet0'/>
          <model type='virtio'/>
          <alias name='net0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0'/>
        </interface>
        <serial type='pty'>
          <source path='/dev/pts/0'/>
          <target port='0'/>
          <alias name='serial0'/>
        </serial>
        <console type='pty' tty='/dev/pts/0'>
          <source path='/dev/pts/0'/>
          <target type='serial' port='0'/>
          <alias name='serial0'/>
        </console>
        <channel type='unix'>
          <source mode='bind' path='/var/lib/libvirt/qemu/channel/target/domain-BlurIris/org.qemu.guest_agent.0'/>
          <target type='virtio' name='org.qemu.guest_agent.0' state='disconnected'/>
          <alias name='channel0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
          </source>
          <alias name='hostdev0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x06' function='0x0'/>
        </hostdev>
        <memballoon model='virtio'>
          <alias name='balloon0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x08' function='0x0'/>
        </memballoon>
      </devices>
    </domain>
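For anyone comparing their own setup against the XML above, here is a rough Python sketch that pulls the passed-through PCI devices out of a libvirt domain XML dump. The function name `passthrough_devices` and the trimmed sample string are only for illustration and are not part of Unraid or libvirt; it simply reads the `<hostdev>` entries the way they appear in the dump.

```python
import xml.etree.ElementTree as ET


def passthrough_devices(domain_xml):
    """Return host PCI addresses (dddd:bb:ss.f) of every <hostdev type='pci'>."""
    root = ET.fromstring(domain_xml)
    found = []
    for hostdev in root.findall("./devices/hostdev[@type='pci']"):
        # The <source><address> child is the address on the host,
        # not the slot the device gets inside the guest.
        addr = hostdev.find("./source/address")
        if addr is None:
            continue
        domain = int(addr.get("domain", "0x0000"), 16)
        bus = int(addr.get("bus"), 16)
        slot = int(addr.get("slot"), 16)
        function = int(addr.get("function"), 16)
        found.append(f"{domain:04x}:{bus:02x}:{slot:02x}.{function:x}")
    return found


# Trimmed-down sample using the hostdev entry from the XML in this post.
SAMPLE = """
<domain type='kvm'>
  <devices>
    <hostdev mode='subsystem' type='pci' managed='yes'>
      <driver name='vfio'/>
      <source>
        <address domain='0x0000' bus='0x08' slot='0x00' function='0x0'/>
      </source>
    </hostdev>
  </devices>
</domain>
"""

if __name__ == "__main__":
    print(passthrough_devices(SAMPLE))
```

In this dump only one device is passed through (host address 0000:08:00.0), and there is no second hostdev entry for an audio function.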

-

I am currently running 6.2 RC3 and have noticed that when I reboot my Win 10 VM, it has problems starting with my GPU in passthrough. If I shut down the VM and restart it, Unraid hard locks and the only fix is to power off/on. I didn't have this problem in 6.1.9 and am not looking to go back unless I have to. Thanks for any help. diagnostics.zip

-

Likely related to a device you're passing through to the VM, most likely a GPU. Does this happen when you remove the GPU from the XML of the VM and start/stop the VM using VNC as the display adapter? This is also not likely specific to RC3, so I'd recommend starting a thread in general support so others can help to troubleshoot the issue, and get more details as needed.

Yes, I have a GPU on passthrough, but I didn't have this problem on 6.1.9; that is why I was bringing it up here.

-

I did a fresh install of 6.2 and have noticed that if I stop and start my Windows 10 VM, my system hard locks and requires me to turn off the power to fix it. The VM is new and not a carry-over. The VM will start fine with Unraid, but again, stopping and starting it will crash my system. Also, I need to expand the size of a vdisk but don't see a way. Am I missing something? diagnostics.zip

-

Problem with VM's after Upgrading to 6.2 RC3

cfloener replied to cfloener's topic in General Support

So I noticed that if I delete the libvirt img and recreate it, the problem goes away, but I am not able to start the VM. As soon as I create a new VM, the screen stays at "creating VM", but I can see that it was created in the background and started. I get the same error as above as soon as any VM is started. Does anyone know what I can do to fix this? Also, the misalignment of the dashboard is like that in any browser I use. unraid-diagnostics-20160812-2135.zip

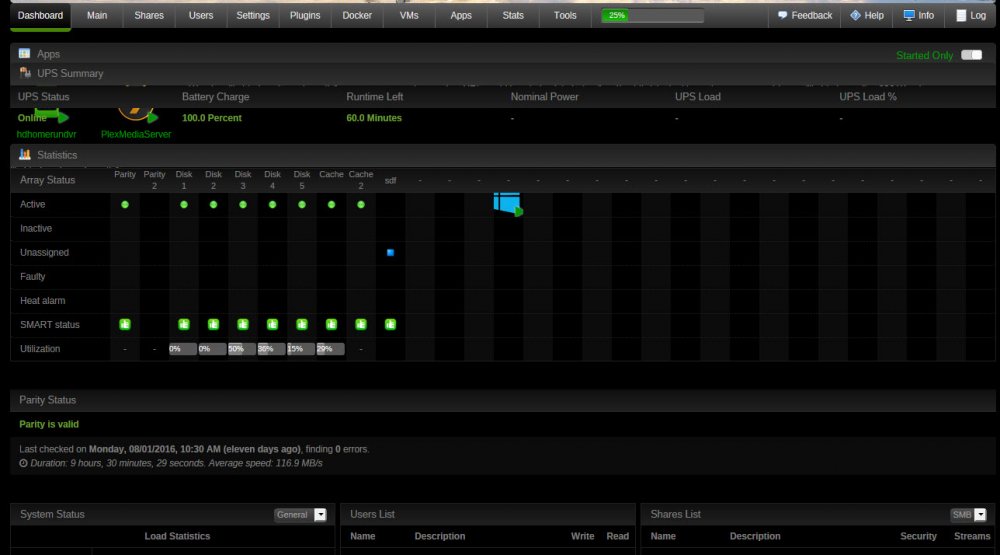

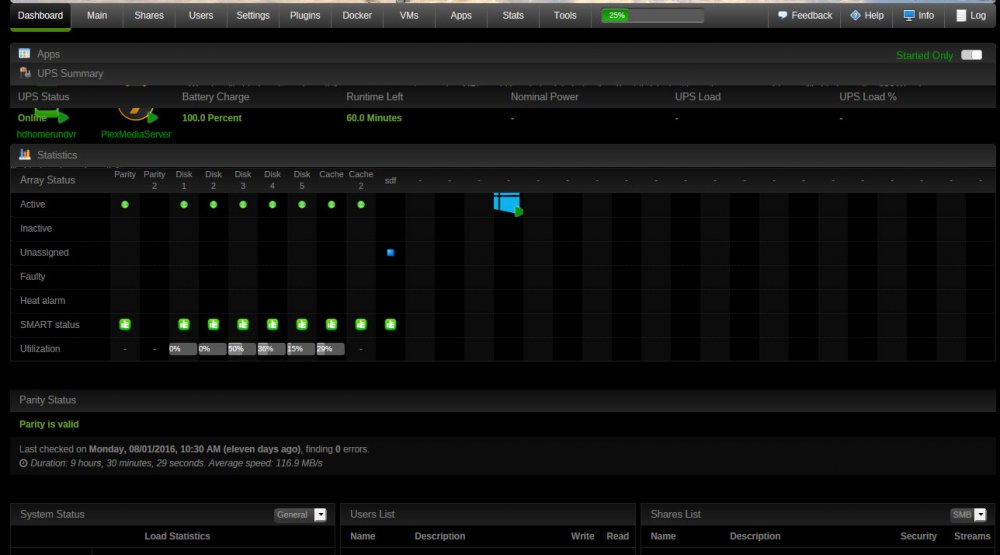

Upgraded to 6.2 RC3 and I get the following error on my VM screen. I only have one VM (Windows 10), and it starts and runs fine, but I am not able to edit or make any modifications to the VM. I can see a new path was added with the update, and the error looks to be referencing the new path, but I am not sure what I need to do to correct the problem.

Warning: libvirt_domain_xml_xpath(): namespace warning : xmlns: URI unraid is not absolute in /usr/local/emhttp/plugins/dynamix.vm.manager/classes/libvirt.php on line 936
Warning: libvirt_domain_xml_xpath():

I also noticed that everything on my dashboard is arranged crazy and I cannot click on anything. See attached photo.

-

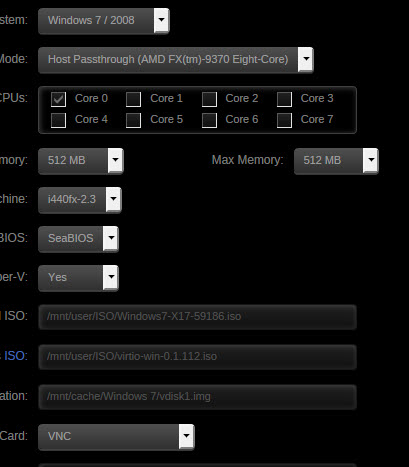

I am trying to create a new Windows 7 VM and I'm not getting past "creating VM". I can see it create the folder and build the vdisk, but it never completes the process. I currently have a Windows 10 VM up and running, but that was created when I had KVM installed (now removed). Not sure what is going on. diagnostics.zip

-

I have noticed that my AMD FX-9370 Eight-Core is not scaling down. I have tried one of the scaling drivers (I don't remember which one), but it didn't work.

-

OK I got it, thanks for the help.

-

so from within the docker /etc I should be using cp -b (file) /mnt/disk1 ?

-

I need to modify a conf file within a docker, but for the life of me I cannot open the file. I can access the docker via docker exec and I can see the conf file I need to change, but trying to run nano within the docker does not work. Any way to access the docker from within nano? Running Unraid 6.1.9. Thanks for any help.
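One common workaround when a container has no editor is to copy the file out with `docker cp`, edit it on the host, and copy it back. Below is a minimal Python sketch of that round trip. The container name `mycontainer` and both file paths are made-up placeholders, and the helper only builds the two commands so you can review them before running anything.

```python
import subprocess


def docker_cp_commands(container, path_in_container, host_copy):
    """Build the two `docker cp` commands for an edit-on-host round trip."""
    # Copy the file out of the container to the host.
    pull = ["docker", "cp", f"{container}:{path_in_container}", host_copy]
    # Copy the edited file back into the same place in the container.
    push = ["docker", "cp", host_copy, f"{container}:{path_in_container}"]
    return pull, push


def run(cmd):
    """Run one command and raise if it fails (needs Docker on the host)."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Placeholder names: substitute your own container and conf file.
    pull, push = docker_cp_commands("mycontainer", "/etc/app.conf", "/tmp/app.conf")
    print(" ".join(pull))
    print("... edit /tmp/app.conf on the host with nano ...")
    print(" ".join(push))
```

Restarting the container afterwards is usually needed for the application to pick up the change, though that depends on the application.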

-

While changing out my cache drives yesterday, I noticed after a reboot that one of my folders/shares (Downloads) was now missing. When I check the drives I cannot find the folder, but when I look at the amount of storage used, I would guess the files are somewhere. My question is: is there any way to find this folder if it's not showing on any of the drives?
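A quick way to check every mounted disk for a top-level folder is sketched below in Python. It assumes the disks are mounted directly under /mnt (for example /mnt/disk1 or /mnt/disk4) and skips /mnt/user, since that is the merged user-share view rather than a physical disk. The function name `find_share` is just for illustration.

```python
from pathlib import Path


def find_share(name, mnt_root="/mnt"):
    """List every mount under mnt_root that has a top-level folder `name`."""
    hits = []
    for mount in sorted(Path(mnt_root).glob("*")):
        # /mnt/user is the combined view of the disks, so skip it.
        if not mount.is_dir() or mount.name == "user":
            continue
        for child in mount.iterdir():
            # Compare case-insensitively so "downloads" is found as well.
            if child.is_dir() and child.name.lower() == name.lower():
                hits.append(child)
    return hits


if __name__ == "__main__":
    for path in find_share("Downloads"):
        print(path)
```

If nothing is printed, the folder does not exist at the top level of any mount, and the files may be nested under a different share.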

-

I think I resolved the problem. It seems that all I needed to do was force download all the dockers; once that completed, the error stopped.

-

I upgraded my cache drives from spinner to SSD, and now my log file is filling up with the following error and I am not sure why.

Jul 19 18:44:11 UnRaid kernel: BTRFS warning (device loop0): csum failed ino 26616 off 58843136 csum 2529491896 expected csum 2612263610

unraid-diagnostics-20160719-1852.zip
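When the log is flooded with these, it helps to see which device and inode they point at. Here is a small Python sketch that parses lines in the format shown above and counts failures per device. The regular expression is written against that one sample line only, so treat it as an assumption about the format rather than a complete parser.

```python
import re
from collections import Counter

# Pattern written against the single warning line quoted in this post.
CSUM_RE = re.compile(
    r"BTRFS warning \(device (?P<device>\S+)\): csum failed "
    r"ino (?P<ino>\d+) off (?P<off>\d+) "
    r"csum (?P<csum>\d+) expected csum (?P<expected>\d+)"
)


def parse_csum_warning(line):
    """Return the fields of one csum warning as a dict, or None if no match."""
    match = CSUM_RE.search(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Everything except the device name is numeric.
    return {k: (v if k == "device" else int(v)) for k, v in fields.items()}


def failures_per_device(lines):
    """Count csum failures for each device name found in the log lines."""
    counts = Counter()
    for line in lines:
        parsed = parse_csum_warning(line)
        if parsed is not None:
            counts[parsed["device"]] += 1
    return counts


LINE = ("Jul 19 18:44:11 UnRaid kernel: BTRFS warning (device loop0): "
        "csum failed ino 26616 off 58843136 csum 2529491896 "
        "expected csum 2612263610")

if __name__ == "__main__":
    print(parse_csum_warning(LINE))
    print(failures_per_device([LINE]))
```

Note that loop0 is a loop device, meaning the errors are inside a file-backed image rather than directly on a physical drive.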

-

User shares are perfectly fine to use if you have them enabled, but they are located at /mnt/user, not /user.

Just a typo, it should have been mnt/user

I assume you actually meant "/mnt/user" ?

Just a thought - do you also have a user share on the path. i.e. something like "/mnt/user/sharename". If not then it will still end up in RAM as it only ends up pointing to the physical disks when it matches a folder (share) name on the disks.

My normal share point for this is /mnt/user/HDHOMERUN/ and I was getting OOM errors. Yesterday I changed it to /mnt/disk4/HDHOMERUN and still got the OOM errors. I have now moved it back to /mnt/user/HDHOMERUN/. I am not 100% sure what is causing the OOM errors now.

-

Still getting the Out of memory error, also have some hardware error that i'm not sure what it is. Mar 2 02:06:23 UnRaid kernel: mce: [Hardware Error]: Machine check events logged Mar 2 02:08:16 UnRaid kernel: qemu-system-x86 invoked oom-killer: gfp_mask=0xd0, order=0, oom_score_adj=0 Mar 2 02:08:16 UnRaid kernel: qemu-system-x86 cpuset=emulator mems_allowed=0 Mar 2 02:08:16 UnRaid kernel: CPU: 2 PID: 6926 Comm: qemu-system-x86 Not tainted 4.1.17-unRAID #1 Mar 2 02:08:16 UnRaid kernel: Hardware name: To be filled by O.E.M. To be filled by O.E.M./SABERTOOTH 990FX R2.0, BIOS 2501 04/08/2014 Mar 2 02:08:16 UnRaid kernel: 0000000000000000 ffff8807fd6576f8 ffffffff815f1df0 0000000000000000 Mar 2 02:08:16 UnRaid kernel: ffff880819733aa0 ffff8807fd6577a8 ffffffff815ee36c ffff8807fd657758 Mar 2 02:08:16 UnRaid kernel: ffffffff810f6d09 ffff88010023c000 01ff8807fd657718 ffff8807fd657758 Mar 2 02:08:16 UnRaid kernel: Call Trace: Mar 2 02:08:16 UnRaid kernel: [<ffffffff815f1df0>] dump_stack+0x4c/0x6e Mar 2 02:08:16 UnRaid kernel: [<ffffffff815ee36c>] dump_header+0x7a/0x20b Mar 2 02:08:16 UnRaid kernel: [<ffffffff810f6d09>] ? mem_cgroup_iter+0x2fd/0x3fb Mar 2 02:08:16 UnRaid kernel: [<ffffffff810fac83>] ? vmpressure+0x1c/0x6f Mar 2 02:08:16 UnRaid kernel: [<ffffffff813552f3>] ? ___ratelimit+0xcf/0xe0 Mar 2 02:08:16 UnRaid kernel: [<ffffffff810b3c72>] oom_kill_process+0xb7/0x37b Mar 2 02:08:16 UnRaid kernel: [<ffffffff810b3977>] ? 
oom_badness+0xb6/0x100 Mar 2 02:08:16 UnRaid kernel: [<ffffffff810b43c7>] __out_of_memory+0x441/0x463 Mar 2 02:08:16 UnRaid kernel: [<ffffffff810b4528>] out_of_memory+0x4f/0x66 Mar 2 02:08:16 UnRaid kernel: [<ffffffff810b852c>] __alloc_pages_nodemask+0x722/0x7b6 Mar 2 02:08:16 UnRaid kernel: [<ffffffff810e4a34>] alloc_pages_current+0xb4/0xd5 Mar 2 02:08:16 UnRaid kernel: [<ffffffff810b4c4f>] __get_free_pages+0x9/0x36 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110ca78>] __pollwait+0x59/0xc8 Mar 2 02:08:16 UnRaid kernel: [<ffffffff81131828>] eventfd_poll+0x28/0x4f Mar 2 02:08:16 UnRaid kernel: [<ffffffff81131800>] ? SyS_timerfd_gettime+0x144/0x144 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110dba2>] do_sys_poll+0x228/0x479 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110ca1f>] ? poll_initwait+0x3f/0x3f Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110cc3c>] ? 
poll_select_copy_remaining+0xf4/0xf4 Mar 2 02:08:16 UnRaid kernel: [<ffffffff8110dfae>] SyS_ppoll+0xba/0x148 Mar 2 02:08:16 UnRaid kernel: [<ffffffff815f74ee>] system_call_fastpath+0x12/0x71 Mar 2 02:08:16 UnRaid kernel: Mem-Info: Mar 2 02:08:16 UnRaid kernel: active_anon:6247009 inactive_anon:18439 isolated_anon:0 Mar 2 02:08:16 UnRaid kernel: active_file:1186 inactive_file:3854 isolated_file:74 Mar 2 02:08:16 UnRaid kernel: unevictable:1685622 dirty:345 writeback:38 unstable:0 Mar 2 02:08:16 UnRaid kernel: slab_reclaimable:130645 slab_unreclaimable:21387 Mar 2 02:08:16 UnRaid kernel: mapped:14565 shmem:92219 pagetables:18873 bounce:0 Mar 2 02:08:16 UnRaid kernel: free:38739 free_pcp:638 free_cma:0 Mar 2 02:08:16 UnRaid kernel: Node 0 DMA free:15744kB min:8kB low:8kB high:12kB active_anon:112kB inactive_anon:0kB active_file:0kB inactive_file:0kB unevictable:0kB isolated(anon):0kB isolated(file):0kB present:15988kB managed:15904kB mlocked:0kB dirty:0kB writeback:0kB mapped:16kB shmem:112kB slab_reclaimable:0kB slab_unreclaimable:32kB kernel_stack:0kB pagetables:0kB unstable:0kB bounce:0kB free_pcp:0kB local_pcp:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:0 all_unreclaimable? yes Mar 2 02:08:16 UnRaid kernel: lowmem_reserve[]: 0 2896 32062 32062 Mar 2 02:08:16 UnRaid kernel: Node 0 DMA32 free:118672kB min:2060kB low:2572kB high:3088kB active_anon:1018000kB inactive_anon:6268kB active_file:424kB inactive_file:3300kB unevictable:1618596kB isolated(anon):0kB isolated(file):0kB present:3042072kB managed:2967136kB mlocked:1618596kB dirty:316kB writeback:152kB mapped:4608kB shmem:32872kB slab_reclaimable:171344kB slab_unreclaimable:8348kB kernel_stack:1408kB pagetables:5944kB unstable:0kB bounce:0kB free_pcp:1468kB local_pcp:248kB free_cma:0kB writeback_tmp:0kB pages_scanned:29668 all_unreclaimable? 
yes Mar 2 02:08:16 UnRaid kernel: lowmem_reserve[]: 0 0 29166 29166 Mar 2 02:08:16 UnRaid kernel: Node 0 Normal free:20540kB min:20776kB low:25968kB high:31164kB active_anon:23969924kB inactive_anon:67488kB active_file:4320kB inactive_file:12116kB unevictable:5123892kB isolated(anon):0kB isolated(file):296kB present:30392316kB managed:29866192kB mlocked:5123892kB dirty:1064kB writeback:0kB mapped:53636kB shmem:335892kB slab_reclaimable:351236kB slab_unreclaimable:77168kB kernel_stack:9024kB pagetables:69548kB unstable:0kB bounce:0kB free_pcp:1084kB local_pcp:0kB free_cma:0kB writeback_tmp:0kB pages_scanned:105256 all_unreclaimable? yes Mar 2 02:08:16 UnRaid kernel: lowmem_reserve[]: 0 0 0 0 Mar 2 02:08:16 UnRaid kernel: Node 0 DMA: 0*4kB 0*8kB 2*16kB (UM) 1*32kB (U) 1*64kB (U) 2*128kB (UM) 2*256kB (UM) 1*512kB (M) 2*1024kB (UM) 2*2048kB (UM) 2*4096kB (MR) = 15744kB Mar 2 02:08:16 UnRaid kernel: Node 0 DMA32: 1057*4kB (UEM) 1030*8kB (UEM) 1826*16kB (UE) 763*32kB (UEM) 261*64kB (UEM) 131*128kB (UM) 51*256kB (UM) 12*512kB (UM) 0*1024kB 0*2048kB 0*4096kB = 118772kB Mar 2 02:08:16 UnRaid kernel: Node 0 Normal: 4624*4kB (UEM) 104*8kB (UEM) 0*16kB 2*32kB (R) 0*64kB 1*128kB (R) 1*256kB (R) 1*512kB (R) 1*1024kB (R) 0*2048kB 0*4096kB = 21312kB Mar 2 02:08:16 UnRaid kernel: 97756 total pagecache pages Mar 2 02:08:16 UnRaid kernel: 0 pages in swap cache Mar 2 02:08:16 UnRaid kernel: Swap cache stats: add 0, delete 0, find 0/0 Mar 2 02:08:16 UnRaid kernel: Free swap = 0kB Mar 2 02:08:16 UnRaid kernel: Total swap = 0kB Mar 2 02:08:16 UnRaid kernel: 8362594 pages RAM Mar 2 02:08:16 UnRaid kernel: 0 pages HighMem/MovableOnly Mar 2 02:08:16 UnRaid kernel: 150286 pages reserved Mar 2 02:08:16 UnRaid kernel: [ pid ] uid tgid total_vm rss nr_ptes nr_pmds swapents oom_score_adj name Mar 2 02:08:16 UnRaid kernel: [ 1063] 0 1063 5451 663 15 3 0 -1000 udevd Mar 2 02:08:16 UnRaid kernel: [ 1296] 0 1296 58398 638 25 3 0 0 rsyslogd Mar 2 02:08:16 UnRaid kernel: [ 1448] 1 1448 1714 425 8 3 0 
0 rpc.portmap Mar 2 02:08:16 UnRaid kernel: [ 1452] 32 1452 3209 479 12 3 0 0 rpc.statd Mar 2 02:08:16 UnRaid kernel: [ 1462] 0 1462 1615 407 9 3 0 0 inetd Mar 2 02:08:16 UnRaid kernel: [ 1472] 0 1472 6016 797 16 3 0 -1000 sshd Mar 2 02:08:16 UnRaid kernel: [ 1485] 0 1485 24852 1201 21 3 0 0 ntpd Mar 2 02:08:16 UnRaid kernel: [ 1493] 0 1493 1096 406 7 3 0 0 acpid Mar 2 02:08:16 UnRaid kernel: [ 1506] 81 1506 4359 51 12 3 0 0 dbus-daemon Mar 2 02:08:16 UnRaid kernel: [ 1508] 0 1508 1617 421 8 3 0 0 crond Mar 2 02:08:16 UnRaid kernel: [ 1510] 0 1510 1615 25 8 3 0 0 atd Mar 2 02:08:16 UnRaid kernel: [ 1526] 0 1526 3339 1584 11 3 0 0 cpuload Mar 2 02:08:16 UnRaid kernel: [ 3633] 0 3633 48349 1446 94 3 0 0 nmbd Mar 2 02:08:16 UnRaid kernel: [ 3635] 0 3635 67739 3567 132 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [ 6149] 0 6149 22743 1369 19 3 0 0 emhttp Mar 2 02:08:16 UnRaid kernel: [ 6156] 0 6156 1618 450 8 3 0 0 agetty Mar 2 02:08:16 UnRaid kernel: [ 6157] 0 6157 1618 450 8 3 0 0 agetty Mar 2 02:08:16 UnRaid kernel: [ 6158] 0 6158 1618 449 8 3 0 0 agetty Mar 2 02:08:16 UnRaid kernel: [ 6159] 0 6159 1618 425 7 3 0 0 agetty Mar 2 02:08:16 UnRaid kernel: [ 6160] 0 6160 1618 449 7 3 0 0 agetty Mar 2 02:08:16 UnRaid kernel: [ 6161] 0 6161 1618 450 8 3 0 0 agetty Mar 2 02:08:16 UnRaid kernel: [ 6214] 0 6214 20727 552 15 3 0 0 apcupsd Mar 2 02:08:16 UnRaid kernel: [ 6373] 0 6373 55131 419 18 3 0 0 shfs Mar 2 02:08:16 UnRaid kernel: [ 6384] 0 6384 376783 2978 58 4 0 0 shfs Mar 2 02:08:16 UnRaid kernel: [ 6580] 0 6580 2375 1162 9 3 0 0 inotifywait Mar 2 02:08:16 UnRaid kernel: [ 6581] 0 6581 2341 554 9 3 0 0 watcher Mar 2 02:08:16 UnRaid kernel: [ 6636] 0 6636 215369 3147 81 3 0 0 libvirtd Mar 2 02:08:16 UnRaid kernel: [ 6828] 61 6828 8613 707 21 3 0 0 avahi-daemon Mar 2 02:08:16 UnRaid kernel: [ 6829] 61 6829 8547 60 21 3 0 0 avahi-daemon Mar 2 02:08:16 UnRaid kernel: [ 6837] 0 6837 3186 392 11 3 0 0 avahi-dnsconfd Mar 2 02:08:16 UnRaid kernel: [ 6909] 99 6909 3852 554 12 3 0 0 
dnsmasq Mar 2 02:08:16 UnRaid kernel: [ 6910] 0 6910 3819 44 12 3 0 0 dnsmasq Mar 2 02:08:16 UnRaid kernel: [ 6913] 0 6913 249961 6263 61 6 0 0 docker Mar 2 02:08:16 UnRaid kernel: [ 6926] 0 6926 2797645 1689128 3550 14 0 0 qemu-system-x86 Mar 2 02:08:16 UnRaid kernel: [10247] 0 10247 78421 8749 151 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [11663] 0 11663 74633 4776 144 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [23094] 0 23094 74780 5103 144 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [30694] 0 30694 74631 4446 144 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [21112] 0 21112 10959 2220 24 3 0 0 snmpd Mar 2 02:08:16 UnRaid kernel: [21175] 99 21175 70153 3785 135 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [18057] 0 18057 74654 4486 143 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [18383] 0 18383 74411 3797 142 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [18086] 0 18086 3355 1599 11 3 0 0 cache_dirs Mar 2 02:08:16 UnRaid kernel: [ 2754] 0 2754 8468 1387 20 3 0 0 my_init Mar 2 02:08:16 UnRaid kernel: [ 2791] 0 2791 49 3 3 2 0 0 runsvdir Mar 2 02:08:16 UnRaid kernel: [ 2792] 0 2792 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [ 2793] 0 2793 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [ 2794] 0 2794 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [ 2795] 0 2795 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [ 2796] 0 2796 44 4 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [ 2797] 0 2797 2293 23 7 3 0 0 tail Mar 2 02:08:16 UnRaid kernel: [ 2798] 0 2798 7111 61 17 3 0 0 cron Mar 2 02:08:16 UnRaid kernel: [ 2800] 0 2800 16437 370 36 3 0 0 syslog-ng Mar 2 02:08:16 UnRaid kernel: [ 2807] 99 2807 39861 259 80 3 0 0 hdhomerun_recor Mar 2 02:08:16 UnRaid kernel: [30722] 0 30722 7058 1201 17 3 0 0 my_init Mar 2 02:08:16 UnRaid kernel: [32104] 0 32104 49 5 3 2 0 0 runsvdir Mar 2 02:08:16 UnRaid kernel: [32105] 0 32105 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [32106] 0 32106 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [32107] 0 32107 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid 
kernel: [32108] 0 32108 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [32109] 0 32109 44 1 3 2 0 0 runsv Mar 2 02:08:16 UnRaid kernel: [32110] 0 32110 1870 19 8 3 0 0 tail Mar 2 02:08:16 UnRaid kernel: [32111] 0 32111 6688 67 18 3 0 0 cron Mar 2 02:08:16 UnRaid kernel: [32112] 0 32112 15917 380 34 3 0 0 syslog-ng Mar 2 02:08:16 UnRaid kernel: [32113] 999 32113 1112 19 6 3 0 0 start_pms Mar 2 02:08:16 UnRaid kernel: [32295] 999 32295 154268 44665 318 4 0 0 Plex Media Serv Mar 2 02:08:16 UnRaid kernel: [ 980] 999 980 461530 18725 151 5 0 0 python Mar 2 02:08:16 UnRaid kernel: [23887] 0 23887 74641 4590 143 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [23888] 0 23888 74703 4536 143 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [26949] 0 26949 49 1 3 2 0 0 s6-svscan Mar 2 02:08:16 UnRaid kernel: [26974] 0 26974 49 1 3 2 0 0 s6-supervise Mar 2 02:08:16 UnRaid kernel: [27210] 0 27210 49 1 3 2 0 0 s6-supervise Mar 2 02:08:16 UnRaid kernel: [ 7218] 0 7218 12795 87 29 3 0 0 su Mar 2 02:08:16 UnRaid kernel: [ 7241] 99 7241 1256745 140733 570 9 0 0 mono-sgen Mar 2 02:08:16 UnRaid kernel: [21100] 0 21100 74316 4571 144 3 0 0 smbd Mar 2 02:08:16 UnRaid kernel: [12148] 999 12148 6006415 5940021 11725 26 0 0 Plex Media Scan Mar 2 02:08:16 UnRaid kernel: [18179] 0 18179 2159 196 9 3 0 0 timeout Mar 2 02:08:16 UnRaid kernel: [18180] 0 18180 2982 580 11 3 0 0 find Mar 2 02:08:16 UnRaid kernel: [18312] 0 18312 331 1 5 3 0 0 run Mar 2 02:08:16 UnRaid kernel: [18330] 0 18330 1091 187 7 3 0 0 sleep Mar 2 02:08:16 UnRaid kernel: Out of memory: Kill process 12148 (Plex Media Scan) score 724 or sacrifice child Mar 2 02:08:16 UnRaid kernel: Killed process 12148 (Plex Media Scan) total-vm:24025660kB, anon-rss:23759784kB, file-rss:300kB Mar 2 02:08:53 UnRaid kernel: mce: [Hardware Error]: Machine check events logged
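To make sense of a dump like the one above, the per-process table is the useful part: the `rss` column is in pages, so multiplying by the page size gives the resident memory. Here is a rough Python sketch that ranks processes by RSS. It assumes one log entry per line and a 4 KiB page size (usual on x86_64), and the sample rows are copied from the log in this post.

```python
import re

PAGE_KIB = 4  # assumed page size in KiB (typical for x86_64)

# Columns: [pid] uid tgid total_vm rss nr_ptes nr_pmds swapents oom_score_adj name
ROW_RE = re.compile(
    r"kernel: \[\s*(?P<pid>\d+)\]\s+\d+\s+\d+\s+\d+\s+(?P<rss>\d+)"
    r"\s+\d+\s+\d+\s+\d+\s+-?\d+\s+(?P<name>.+?)\s*$"
)


def top_by_rss(lines, n=3):
    """Return the n largest processes as (name, pid, rss_kib) tuples."""
    rows = []
    for line in lines:
        match = ROW_RE.search(line)
        if match is None:
            continue  # call-trace and memory-zone lines do not match
        rows.append(
            (match["name"], int(match["pid"]), int(match["rss"]) * PAGE_KIB)
        )
    return sorted(rows, key=lambda row: row[2], reverse=True)[:n]


SAMPLE = [
    "Mar 2 02:08:16 UnRaid kernel: [<ffffffff815f1df0>] dump_stack+0x4c/0x6e",
    "Mar 2 02:08:16 UnRaid kernel: [ 6926] 0 6926 2797645 1689128 3550 14 0 0 qemu-system-x86",
    "Mar 2 02:08:16 UnRaid kernel: [32295] 999 32295 154268 44665 318 4 0 0 Plex Media Serv",
    "Mar 2 02:08:16 UnRaid kernel: [ 7241] 99 7241 1256745 140733 570 9 0 0 mono-sgen",
    "Mar 2 02:08:16 UnRaid kernel: [12148] 999 12148 6006415 5940021 11725 26 0 0 Plex Media Scan",
]

if __name__ == "__main__":
    for name, pid, rss_kib in top_by_rss(SAMPLE):
        print(f"{name:20s} pid={pid:<6d} rss={rss_kib / 1024 / 1024:.1f} GiB")
```

On these rows the largest entry is Plex Media Scan at 5940021 pages, which is 23760084 KiB and matches the anon-rss plus file-rss figures on the "Killed process 12148" line, with the qemu process a distant second.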

-

User shares are perfectly fine to use if you have them enabled, but they are located at /mnt/user, not /user. Just a typo, it should have been mnt/user

-

If that is really the path you have set that will explain your problem as /user is in RAM.

OK, I moved the path from /user to disk. I will check again tonight and see if I get the same errors.

-

I checked my VMs, and while I have a total of 4 VMs (only one running), each has about 6 GB of RAM assigned to it; not sure if that is what you're seeing. I can delete the other VMs in case the system is reserving the RAM for them. I checked the HDHomeRun and it is writing to /user/HDhomerun. I did have that folder as part of folder caching, but I have now removed it for testing. I guess I will remove some unused VMs, and with folder caching removed from the HDHomeRun folder, I will have to see what happens. Thank you for the response.