Drewster727

Everything posted by Drewster727

-

I am rolling back to 6.9.x - not seeing any traction on this issue.

-

tower-diagnostics-20220704-1022.zip

-

Can confirm this issue with 6.10 -- Also upgraded to each latest hotfix version, still having NFS issues. One of my client servers basically loses access to half the data, empty folders in lots of places.

-

@zandrsn hey dude -- I believe that's in the glances.config file that you reference.

-

File sharing service become unresponsive at end of parity check

Drewster727 replied to klamath's topic in General Support

@klamath @johnnie.black I'm also running into this issue. I've got 22 drives in my array: most are 4-6TB, one is 8TB, and there are dual 8TB drives in parity. Your last message is confusing; did you make a change that made a difference? My NFS file access is basically *useless* when running a parity check. I also seem to be capped at 75MB/s throughout the whole check, so it's slowwwww. My CPU never goes above 40% either. Also, this is on 6.4.1, so the UI is still responsive during checks; it's just NFS access that is atrocious. Thanks

-
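To put the 75MB/s cap in perspective, here is a rough back-of-the-envelope sketch. It assumes the check has to read the full 8TB parity disk end to end at a constant 75MB/s and that sizes are decimal (1 TB = 1,000,000 MB); the function name is made up for illustration, and real checks vary in speed across the disk.

```python
def parity_check_hours(parity_size_tb: float, speed_mb_s: float) -> float:
    """Estimate hours to read a parity disk end to end at a constant speed."""
    # Assumption: decimal units, as used on drive labels (1 TB = 1,000,000 MB).
    total_mb = parity_size_tb * 1_000_000
    seconds = total_mb / speed_mb_s
    return seconds / 3600


if __name__ == "__main__":
    # 8TB parity and 75MB/s are the figures from the post above.
    hours = parity_check_hours(parity_size_tb=8, speed_mb_s=75)
    print(f"Estimated parity check time: {hours:.1f} hours")
```

Under those assumptions the check takes roughly 30 hours, which is a long window for NFS access to be degraded.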

System Freezing with Out of Memory Reported

Drewster727 replied to cyberrad's topic in General Support

Try this: Slightly different issue, but could be the same solution.

-

I had a similar issue; however, I was seeing a clear out-of-memory sign in the logs. You can check out the following post, get the Tips & Tweaks plugin, and try what fixed it for me: Worth a shot! -Drew

-

Thanks guys! That's what I will do.

-

Ok, so just to clarify:

1. Turn off the array
2. Switch to maintenance mode (ensures no writes?)
3. Swap the parity disks in the GUI
4. Let it rebuild
5. Once complete, exit maintenance mode

If anything fails, pop the old 6TB parity disks back in to resolve the issues. Is this correct?

-

Ok, figured that was probably the case. I may get risky and just do a full rebuild on both of them to minimize the time I'm putting pressure on the array.

-

Well, the only reason I wasn't considering doing them one at a time is that parity checks are slow and cause performance issues with my array during the sync, which I'm trying to avoid. Question -- if I do it one at a time, is unRAID smart enough to rebuild parity from the existing parity disk, or does it still have to read from the entire array during the sync process?

-

I've currently got 2x6TB WD Red drives as my parity disks (dual parity). I recently purchased 2x8TB HGST Deskstar drives to replace them (so that I can start adding 8TB drives to my array). I've never had to rebuild dual parity before, let alone replace the disks. I assume it's exactly the same process as a single disk. In other words, my plan to upgrade them is:

1. Preclear new 8TB disks (already done)
2. Stop the array
3. Shut down the server
4. Swap the current parity disks with the new ones
5. Boot up server
6. Re-assign parity slots to the new drives
7. Turn on the array and just let it rebuild

Is this a correct procedure for dual parity rebuilds? Thanks!

-

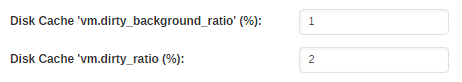

@johnnie.black hey man -- after adjusting those vm.dirty_ values down to 1 and 2, everything has been very stable. I have kicked off the mover several times the past week and have had 0 issues. Thanks again!

-

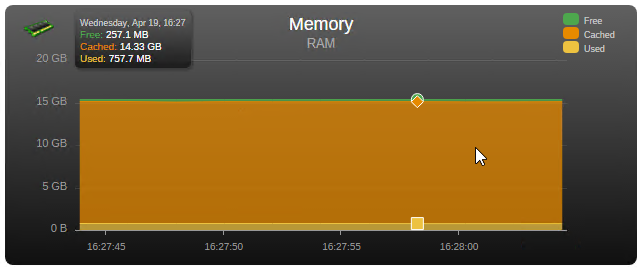

Anyone know if this is normal behavior with the cache amount? It was using this much both before and after tweaking my 'dirty' cache settings... just curious.
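For anyone wanting to check the same thing: Linux generally uses otherwise idle RAM as page cache, so a large "cached" figure is expected, and the 'dirty' settings only govern the part of it that is still waiting to be written to disk. Below is a minimal sketch that pulls the relevant fields out of /proc/meminfo; the helper name is made up for illustration, and it assumes the usual `Name:   value kB` layout of that file.

```python
import os


def read_meminfo(text: str) -> dict:
    """Parse /proc/meminfo-style text into {field name: integer value}."""
    values = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue  # not a "Name: value" line
        parts = rest.split()
        if parts and parts[0].isdigit():
            values[name.strip()] = int(parts[0])  # most fields are in kB
    return values


if __name__ == "__main__":
    path = "/proc/meminfo"  # Linux only; skipped quietly elsewhere
    if os.path.exists(path):
        with open(path) as f:
            info = read_meminfo(f.read())
        # Cached = page cache (reclaimable); Dirty = not yet written to disk.
        for field in ("MemTotal", "MemAvailable", "Cached", "Dirty", "Writeback"):
            print(f"{field:>12}: {info.get(field, 0) / 1024:.0f} MiB")
```

If `Cached` is large but `Dirty` stays small, the cache is mostly clean data that the kernel can normally drop when memory is needed.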

-

@johnnie.black well, it crashed again when the mover ran, same out of memory exception. Pushing those values down to 1 and 2.

-

I changed the following values:

- vm.dirty_background_ratio: was at 10, set it to 5
- vm.dirty_ratio: was at 20, set it to 10

Hopefully that helps... Will report back on results. Thanks again @johnnie.black

Will be waiting anxiously for the next unraid version with the newer kernel.
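As a rough illustration of what these percentages mean in practice, the sketch below converts each pair of settings mentioned in this thread into an approximate amount of unwritten ("dirty") data. It assumes all 16GB of RAM counts as dirtyable, which is an upper bound: the kernel applies the ratios to available memory (free plus reclaimable pages), not to total RAM. The function name is hypothetical.

```python
def dirty_limit_gib(dirtyable_gib: float, ratio_percent: float) -> float:
    """Approximate dirty-page threshold for a given vm.dirty_* ratio."""
    return dirtyable_gib * ratio_percent / 100


# (vm.dirty_background_ratio, vm.dirty_ratio) pairs mentioned in this thread.
SETTINGS = [(10, 20), (5, 10), (1, 2)]

if __name__ == "__main__":
    ram_gib = 16  # assumption: treat all 16GB as dirtyable (upper bound)
    for background, hard in SETTINGS:
        bg_gib = dirty_limit_gib(ram_gib, background)
        hard_gib = dirty_limit_gib(ram_gib, hard)
        print(
            f"background={background:>2}% -> flushing starts near {bg_gib:.2f} GiB, "
            f"ratio={hard:>2}% -> writers throttled near {hard_gib:.2f} GiB"
        )
```

Lower values mean background writeback starts sooner and less unwritten data can pile up in RAM before writing processes are throttled.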

-

Thanks @johnnie.black, I will give that a shot. It's strange: I have 16GB of RAM. Cache uses up most of that, but it was my understanding that if the system needed any of those resources, it could obtain them without issue.

-

When running the mover, I noticed it will sometimes (usually) freeze the whole system up: I'm unable to load the web UI and unable to SSH in. I've run into this before, and it has prevented me from running diagnostics, so I decided to run "tail -f /var/log/syslog" from a remote box on the same network and send the output to a file. Here's the tail of the log: putty.log

It starts at about 17:26:24, right after the mover finishes. cache_dirs runs and, coincidentally, that's when it all hangs.

Apr 15 17:26:24 Tower kernel: cache_dirs: page allocation stalls for 54081ms, order:0, mode:0x27080c0(GFP_KERNEL_ACCOUNT|__GFP_ZERO|__GFP_NOTRACK)

Now, it's possible the tail I was running (from another machine) wasn't able to grab the log line that shows what caused the hang, but the timing lines up closely with the cache_dirs (and related) logs. I may need to tail to a file on the unraid box itself.

Any ideas? It's super annoying to have to hard reboot my box when this happens. Even if I attach a keyboard/monitor locally after it happens, the command line is unresponsive.
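For sifting through a captured log like this, here is a minimal sketch that picks out "page allocation stalls" lines and reports which process stalled and for how long. The regular expression is written against the single line quoted above, so treat the format as an assumption; other kernel versions may word the message differently, and the class and function names are made up for illustration.

```python
import re
from typing import Iterable, Iterator, NamedTuple


class Stall(NamedTuple):
    timestamp: str
    host: str
    process: str
    stall_ms: int


# Assumed format, based on the one log line quoted in the post.
STALL_RE = re.compile(
    r"^(\w{3}\s+\d+\s+[\d:]{8})\s+(\S+)\s+kernel:\s+(\S+): "
    r"page allocation stalls for (\d+)ms"
)


def find_stalls(lines: Iterable[str]) -> Iterator[Stall]:
    """Yield one Stall per matching syslog line, skipping everything else."""
    for line in lines:
        m = STALL_RE.match(line)
        if m:
            yield Stall(m.group(1), m.group(2), m.group(3), int(m.group(4)))


if __name__ == "__main__":
    sample = [
        "Apr 15 17:26:24 Tower kernel: cache_dirs: page allocation stalls "
        "for 54081ms, order:0, "
        "mode:0x27080c0(GFP_KERNEL_ACCOUNT|__GFP_ZERO|__GFP_NOTRACK)",
    ]
    for s in find_stalls(sample):
        print(f"{s.timestamp} {s.host}: {s.process} stalled {s.stall_ms / 1000:.1f}s")
```

The same function can be pointed at a saved log by passing it an open file object, since it only needs an iterable of lines. The quoted stall of 54081ms is about 54 seconds spent waiting for a single memory allocation.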

-

Reformatted cache as xfs. I also had nic bonding enabled and working for over a year now, but I jumped into the network settings today and noticed it was complaining about eth0 not being connected. Swapped the cables for eth0 and eth1, then it said eth1 wasn't detected. soooo perhaps faulty nic. I disabled bonding and am just using the good nic. We'll see what happens now...

-

@razor I'm having the same problem with shares going missing. However, my cache drive isn't full when it happens. Did changing to XFS on the cache drive help at all?

-

FYI - I did see a very similar issue... @razor any ideas? I noticed you talking about cache drive filesystem, mine is a 500GB SSD formatted as reiserfs. Should I format as xfs?

-

@itimpi @trurl hey guys, it happened again. It has happened a couple of times today, but I was able to snag diagnostics the most recent time. See attached. The whole server went somewhat unresponsive for a while, though it did eventually come back. Shares went missing and all my servers were hanging trying to connect via NFS. I think it happened right around 22:27.

tower-diagnostics-20170408-2232.zip

-

@itimpi got it. I will provide diagnostics output next time. Unfortunately, I lost the full logs from that timeframe due to the reboot.

-

Description: Randomly, my user shares will completely disappear from unraid, leaving all of my machines (connecting via NFS) unable to access any of the data. The shares do return if I stop the array and reboot unraid.

How to reproduce: No idea how this is happening. I do have a log file attached from right when the incident occurred (unraid_log_04072017.txt). It shows some warning/nfsd error.

Expected results: Shares don't go missing...

Actual results: Shares go missing...

Other information: This issue has happened on every version of unraid 6.3.x, I believe. It sometimes takes days for it to happen.

Hardware:

- unRAID system: unRAID server Pro, version 6.3.3
- Motherboard: ASRock - FM2A88X Extreme6+
- Processor: AMD A10-7850K Radeon R7, 12 Compute Cores 4C+8G @ 3.7 GHz
- Memory: G.SKILL DDR3 8GB (2x4GB)
- 18 disks in array
- 2 parity disks (dual parity)
- No sync errors on last parity check a week ago.

unraid_log_04072017.txt

-

I ended up creating my own docker container to get things working: https://hub.docker.com/r/drewster727/hddtemp-docker/