Everything posted by ixnu

-

[SOLVED] 11th disk does not appear available in shares.

ixnu replied to ixnu's topic in General Support

thanks! -

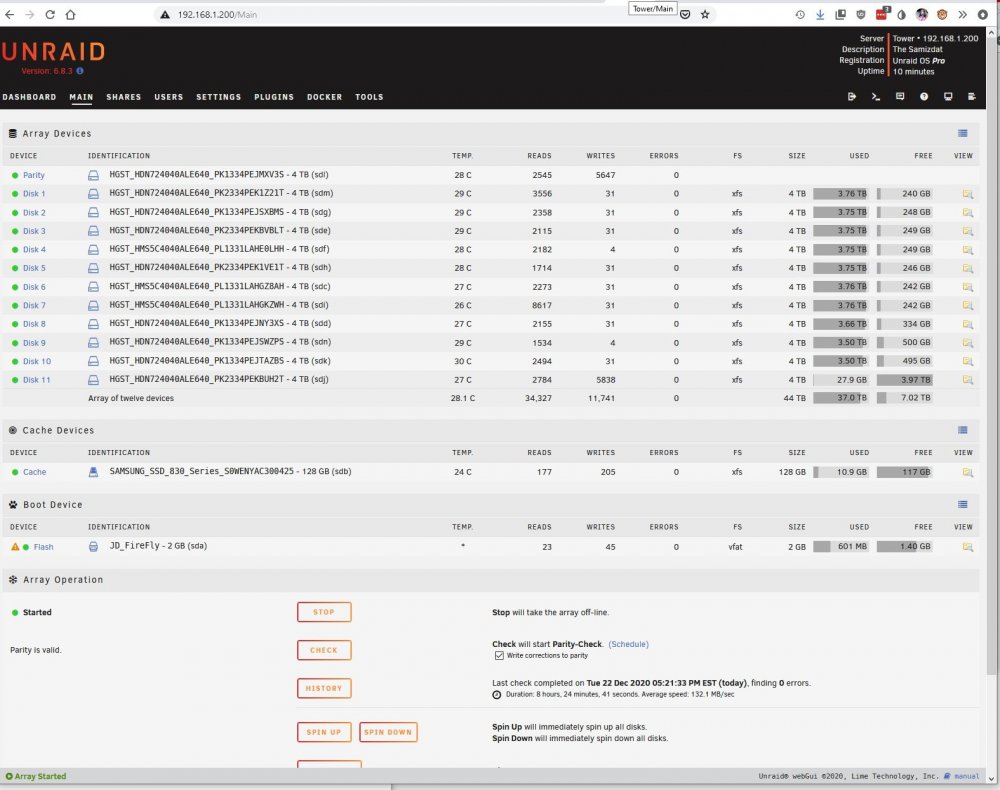

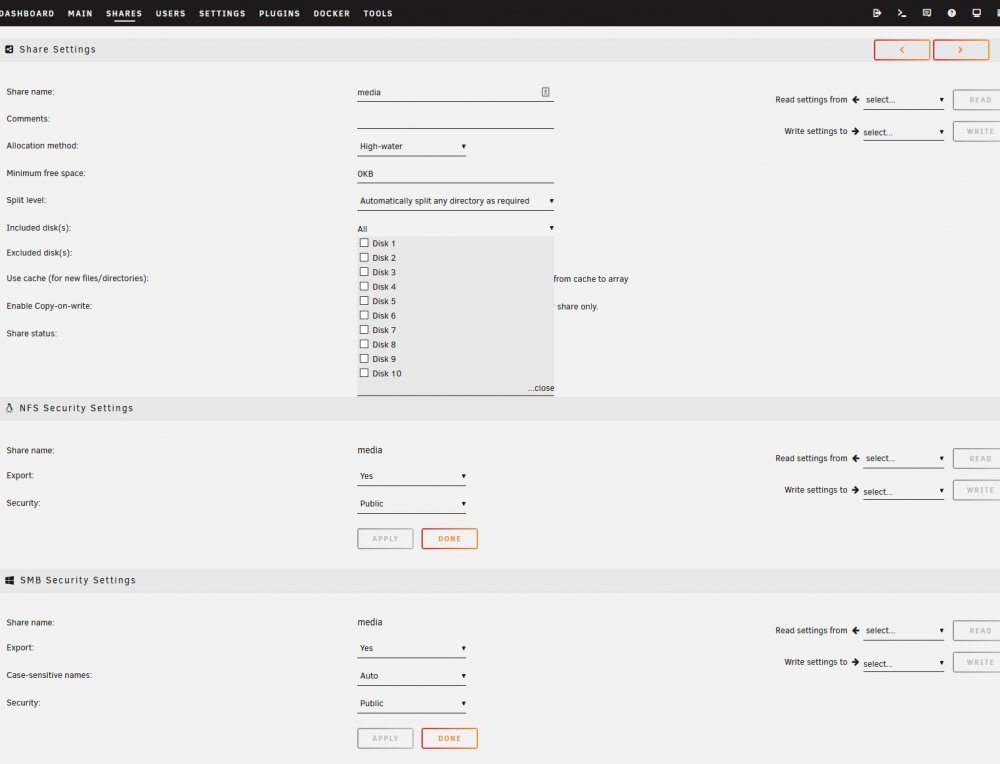

I have just added an 11th disk to my array. It cleared and formatted without issue, and I have rebooted the array three times. The disk appears in the array and the aggregate free space is correct. However, the free space on the share only reflects the original 10 disks. The share is set to "All" for disks but does not write to the new one, and the 11th disk does not show up under "included" disks. I also attempted to create a new share, but I can't add the 11th disk to it.
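One way to put numbers on the mismatch described above is to sum the free space of each array disk and compare it with what the share mount reports. The following is only a minimal sketch: it assumes the disks are mounted at `/mnt/disk1`, `/mnt/disk2`, ... and that the user share root is `/mnt/user`, and the function names are made up for illustration.

```python
import glob
import shutil


def free_bytes(path):
    """Return the free bytes on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free


def compare_free_space(disk_glob="/mnt/disk[0-9]*", share_root="/mnt/user"):
    """Sum per-disk free space and fetch the free space the share reports.

    The default paths are assumptions about the mount layout, not something
    confirmed by the post; pass your own if they differ.
    """
    disks = sorted(glob.glob(disk_glob))
    per_disk = {disk: free_bytes(disk) for disk in disks}
    total = sum(per_disk.values())
    share_free = free_bytes(share_root)
    return per_disk, total, share_free
```

Calling `compare_free_space()` on the server and printing the three results should show whether the new disk is counted in the per-disk total but missing from the share figure.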

-

I had the NFS issue too. My SMB and NFS shares disappeared after 4 days. I had friends over to watch a movie and quickly rebooted and did not save logs. Rolled back to 6.5.3

-

unRAID OS version 6.5.0 Stable Release - Update Notes

ixnu replied to ljm42's topic in Announcements

Congratulations on the outstanding release. This one might be as legendary as 4.7. -

I do not lurk much, but the first course of action always seems to be to move off RFS. It's obviously a difficult problem, but it seems to be far more common on RFS, n'est-ce pas?

-

I had this EXACT issue and failed to find the root cause after 30 hours of troubleshooting. My problem pointed to a software issue, since it happened to me on completely disparate hardware using the same disks. I built a new machine and mounted the RFS disks on snapraid for a stable mount. It wasn't a big deal since I needed to replace my ancient backup server. The problem vanished after I migrated to new disks (including cache) and formatted them as XFS. I emailed Tom with the suggestion that RFS be completely removed from support, but he is convinced that this is too extreme.

-

[Closed] My UNRAID machine is freezing after some hours

ixnu replied to tokra's topic in General Support

Yes, that is correct. -

[Closed] My UNRAID machine is freezing after some hours

ixnu replied to tokra's topic in General Support

I would reformat the cache to XFS also. Very interested in your results. -

[Closed] My UNRAID machine is freezing after some hours

ixnu replied to tokra's topic in General Support

Also notice that you have ReiserFS. I'm surprised that a member has not suggested this as the root cause. I had a 6.2.4 AMD system that would not stay stable beyond 24 hours with ReiserFS. This box had been stable for over 3 years with 5x. It would lock up SMB and would neither restart nor shut down. I eventually installed Debian on the erstwhile unstable unraid box in order to migrate data to XFS due to its mysterious instability. I emailed LimeTech for help with the problem, but never received a response: https://lime-technology.com/forum/index.php?topic=54452.msg521132 Do you have a drive to test the system without Reiser? -

[Closed] My UNRAID machine is freezing after some hours

ixnu replied to tokra's topic in General Support

Yep. https://lime-technology.com/forum/index.php?topic=54731.msg522948 Oom killer invoked - although it probably had to do with cache dirs. As a side note, it appears that your MB variant had one of the highest RMA percentages in 2014: https://www.techpowerup.com/forums/threads/2014-motherboard-rma-rate-new-update-from-hardware-fr.207128/ -

[Closed] My UNRAID machine is freezing after some hours

ixnu replied to tokra's topic in General Support

I concur. I have an FM2+ A88X ASRock board (A88M-G/3.1) with an A8-7600 that has been stable since upgrading to 8GB of RAM. This MB looks almost exactly like yours. I only run one SATA port (cache drive) on the MB though. -

[SOLVED] oom-killer invoked- Kill process or sacrifice child

ixnu replied to ixnu's topic in General Support

Moving to 8GB seems to have solved it. Thanks! -

[SOLVED] oom-killer invoked- Kill process or sacrifice child

ixnu replied to ixnu's topic in General Support

Found some slower DIMMs to get to 8GB. Will see how that does. If ~4GB is not enough for my setup of 10 disks with no dockers, I would suggest that the wiki be updated, n'est-ce pas? https://lime-technology.com/wiki/index.php/Designing_an_unRAID_server -

[SOLVED] oom-killer invoked- Kill process or sacrifice child

ixnu replied to ixnu's topic in General Support

Have not tried safe mode. I have turned off all plugins except Dynamix webGui. More memory really means at least 16GB (why go with 8GB if ~4GB is an issue on the absolutely barest system). Also, I would want to run memtest for at least 24 hours on it. It's not the costs at all, but I'm about 60 hours into troubleshooting these two issues and I just want to get a stable system. Again, thanks for you guys taking a look at this! -

[SOLVED] oom-killer invoked- Kill process or sacrifice child

ixnu replied to ixnu's topic in General Support

Thanks John! Did not think about that. However, should just plain unraid with no dockers and just minimal plugins require > 3GB memory? I got away with 2 GB on my old system with more disks... -

And the winner for the most cold-blooded syslog message is...

Out of memory: Kill process 8492 (monitor) score 5 or sacrifice child

Dec 14 11:05:09 Tower kernel: Killed process 8509 (sh) total-vm:9496kB, anon-rss:208kB, file-rss:4kB, shmem-rss:2404kB

Dec 14 14:34:25 Tower kernel: emhttp invoked oom-killer: gfp_mask=0x26040c0(GFP_KERNEL|__GFP_COMP|__GFP_NOTRACK), order=0, oom_score_adj=0

Dec 14 14:34:25 Tower kernel: emhttp cpuset=/ mems_allowed=0

Dec 14 14:34:25 Tower kernel: CPU: 3 PID: 3511 Comm: emhttp Not tainted 4.8.12-unRAID #1

Any ideas? This is a brand new system with new disks and 4GB of RAM, and it is not running any dockers. This box is currently being restored from backup because my other UnRaid box became unstable after 24 hours because Reiser.

6.3rc6

A8-7600

ASRock Motherboard Micro ATX DDR3 2400 NA A88M-G/3.1

2x Dell Perc 310's flashed to IT

Not too happy with Unraid right now.

tower-diagnostics-20161215-1530.zip
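For anyone digging through a long syslog for the same symptom, a rough sketch like the one below pulls out which process triggered the OOM killer and which processes were killed. The regular expressions are written only against the line shapes quoted in the post above; other kernel versions may format these messages differently, and the function name is invented for this example.

```python
import re

# Patterns derived from the syslog lines quoted in the post; they are an
# assumption about the format, not a general-purpose kernel log parser.
OOM_INVOKED = re.compile(r"kernel: (?P<proc>\S+) invoked oom-killer")
KILLED = re.compile(r"kernel: Killed process (?P<pid>\d+) \((?P<name>[^)]+)\)")

SAMPLE = [
    "Dec 14 11:05:09 Tower kernel: Killed process 8509 (sh) total-vm:9496kB, "
    "anon-rss:208kB, file-rss:4kB, shmem-rss:2404kB",
    "Dec 14 14:34:25 Tower kernel: emhttp invoked oom-killer: "
    "gfp_mask=0x26040c0(GFP_KERNEL|__GFP_COMP|__GFP_NOTRACK), order=0, oom_score_adj=0",
]


def summarize_oom(lines):
    """Return (processes that invoked the OOM killer, [(pid, name)] killed)."""
    invoked, killed = [], []
    for line in lines:
        match = OOM_INVOKED.search(line)
        if match:
            invoked.append(match.group("proc"))
        match = KILLED.search(line)
        if match:
            killed.append((int(match.group("pid")), match.group("name")))
    return invoked, killed
```

Running `summarize_oom(SAMPLE)` on the two sample lines reports `emhttp` as the invoker and process 8509 (`sh`) as the one killed; feeding it the lines of a full syslog gives a quick timeline of OOM events.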

-

-

Thanks Squid! Has a root cause been identified or just cargo cult troubleshooting?

-

Still at a loss on how to troubleshoot. Would greatly appreciate any UnraidTM Inc. input. I have nothing to go on, but it seems to happen at idle. I have created a simple cron job to write a file to the array every 15 mins and set the mover to run every hour. I'm hopeful this will give me something.
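The periodic write mentioned above could look something like this. It is a sketch only: the target directory, the file name, and the script location in the cron comment are hypothetical, and the original cron job was not posted.

```python
import os
import time

# Hypothetical crontab entry to run this every 15 minutes (the script path
# is an assumption, not taken from the post):
#   */15 * * * * python3 /boot/heartbeat.py


def write_heartbeat(directory, prefix="heartbeat"):
    """Append a timestamp to a small file, fsync it, and time the write.

    Returns (path, seconds_taken). A write that suddenly takes far longer
    than usual, or never returns, narrows down when the array stopped
    responding.
    """
    start = time.monotonic()
    path = os.path.join(directory, f"{prefix}.txt")
    with open(path, "a") as handle:
        handle.write(time.strftime("%Y-%m-%d %H:%M:%S") + "\n")
        handle.flush()
        os.fsync(handle.fileno())  # force the data to the disk, not just the page cache
    return path, time.monotonic() - start
```

Pointing `write_heartbeat` at a directory on a user share leaves a trail of timestamps, so the last line in the file shows roughly when writes stopped succeeding.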

-

Still no joy. Web and SMB are not responsive. The CPU is pegged by shfs (htop screenshot included). A simple "ls" on /mnt/user0 or /mnt/user will not complete. Neither "shutdown -r now" nor "powerdown -r now" will complete.
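Since a plain "ls" on the share hangs the terminal, it can help to probe the mount from a child process with a timeout so that the shell stays usable. This is a minimal sketch under the assumption that `ls` is a fair stand-in for "is the mount responding"; the function name, the status strings, and the `command` parameter are illustrative choices.

```python
import subprocess


def probe(path, timeout=10.0, command=("ls",)):
    """Run `command path` in a child process and classify the outcome.

    Returns "ok", "error", or "timeout". The child is not waited on after a
    timeout, because a process stuck in uninterruptible I/O may ignore the
    kill signal and waiting would hang this script too.
    """
    proc = subprocess.Popen(
        list(command) + [path],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    try:
        proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        proc.kill()  # best effort; may have no effect on a stuck process
        return "timeout"
    return "ok" if proc.returncode == 0 else "error"
```

Calling `probe("/mnt/user")` and `probe("/mnt/user0")` from a loop or from cron would record when the share stops answering without tying up the session that is being used for troubleshooting.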

-

And we are back to shfs at 100% CPU. However, this time it's not a zombie. There are also no smbd processes. Can I try something during this state to help troubleshooting? Syslog is clean.

-

My apologies. Thought diag might be enough. Again, thanks for taking a look! If this is not enough info, just let me know what you need.

This box is about 5 years old and everything was bought new. I have had 2 red balls with Seagate drives that were rebuilt properly. All of the controller slots are occupied and one on the MB is empty. I shrunk the array so that I could use this slot to move everything to XFS.

MB: AsRock 880GM-LE - AMD SB710

CPU: AMD Sempron 140

RAM: Gskill 2 x 4GB DDR3/1066

Controller: Supermicro AOC-SASLP-MV8

PSU: CORSAIR CX series CX750 750W

UPS: CyberPower CP1350AVRLCD (1350 VA)

The below sit in 3 x Supermicro 5-in-3 drive containers.

HDDs (most are ~80% full and the highest is 88% full):

| Slot | Device | Filesystem | Size |
| --- | --- | --- | --- |
| Parity | HGST_HMS5C4040ALE640_PL2331LAHE3V0J (sdc) | - | 4 TB |
| Disk 1 | Hitachi_HDS5C3020ALA632_ML0221F30420HD | reiserfs | 2 TB |
| Disk 2 | SAMSUNG_HD204UI_S2H7J1SZ911253 | reiserfs | 2 TB |
| Disk 3 | SAMSUNG_HD204UI_S2H7J1CZ918725 | reiserfs | 2 TB |
| Disk 4 | WDC_WD40EZRZ-00WN9B0_WD-WCC4E0ST3K77 | reiserfs | 4 TB |
| Empty slot | - | - | - |
| Disk 6 | WDC_WD20EZRX-00DC0B0_WD-WCC300793356 | reiserfs | 2 TB |
| Disk 7 | WDC_WD20EZRX-22D8PB0_WD-WCC4M5ET1KUD | xfs | 2 TB |
| Disk 8 | WDC_WD20EVDS-63T3B0_WD-WCAVY5276605 | reiserfs | 2 TB |
| Disk 9 | WDC_WD20EVDS-63T3B0_WD-WCAVY5328007 | reiserfs | 2 TB |
| Disk 10 | ST2000DM001-1CH164_Z2F0TGTB | reiserfs | 2 TB |
| Disk 11 | WDC_WD20EARX-008FB0_WD-WCAZAD975980 | reiserfs | 2 TB |
| Disk 12 | WDC_WD2002FAEX-007BA0_WD-WMAY05158670 | reiserfs | 2 TB |
| Cache | SAMSUNG_SSD_830_Series_S0WENYAC301281 | xfs | 128 GB |
| Boot Flash | JD_FireFly (sda) | - | 2 GB |

-

Memtest 4 passes and no errors. Updated to rc6. No errors on: Memtest; file systems; and SMART. Nothing in the syslog. What next? Willing to try anything. Voodoo dolls? Blood sacrifice?

-

I have removed the cache drive to test this speed theory. My cache is an SSD. I should also mention that fuser -mv /dev/md* would not complete when I was having issues.

-

Ha! That's how nasty rumors get started. Any theories on how Reiser causes this (zombie shfs and runaway smbd processes)?