
Posts posted by lrx345

-

8 hours ago, binhex said:

This sounds to me like it may be the libtorrentv2 issue. Try appending a tag name of 'libtorrentv1' to your repository name; if you don't know how to do this, see Q5: https://github.com/binhex/documentation/blob/master/docker/faq/unraid.md

Thanks, going to give this a shot.

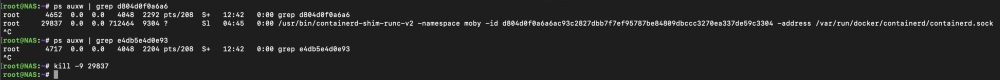

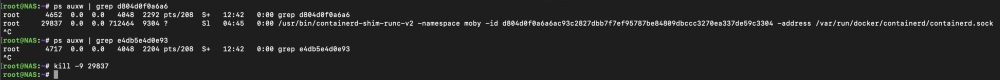

Doing some more troubleshooting: I installed your qBittorrentVPN container to compare how it behaves against this deluge container. Running `ps auxw | grep [containerID]` for both, after the deluge container had failed again, gave an interesting comparison.

As you can see, the deluge docker (top one) has an extra process:

root 29837 0.0 0.0 712464 9304 ? Sl 04:45 0:00 /usr/bin/containerd-shim-runc-v2 -namespace moby -id d804d0f0a6a6ac93c2827dbb7f7ef95787be84809dbccc3270ea337de59c3304 -address /var/run/docker/containerd/containerd.sock

Killing this process fixed a lockup of the Unraid WebUI and finally shut down the deluge container.

The interesting part is that the call traces in the Unraid syslog appear to line up in time with the Docker logs complaining about this locked-up process. I'm way out of my depth here, though.

Either way, I will downgrade to libtorrentv1 and see if that starts solving the issue.
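For anyone following along, pinning the tag amounts to changing the image reference the container is created from. Below is a minimal shell sketch. It assumes the repository is `binhex/arch-delugevpn` (an assumption here, so check the Repository field of your own container template). The helper `with_tag` is hypothetical and not part of Docker or Unraid.

```shell
#!/bin/sh
# Hypothetical helper: build "repository:tag", replacing any tag that is
# already present on the last path component of the repository name.
with_tag() {
    repo=$1
    tag=$2
    # Only the part after the last "/" can carry a tag; a colon earlier in
    # the name would be a registry port (e.g. host:5000/repo).
    case "${repo##*/}" in
        *:*) repo=${repo%:*} ;;
    esac
    printf '%s:%s\n' "$repo" "$tag"
}

# Example (repository name is an assumption, tag name is from the quote above):
#   with_tag binhex/arch-delugevpn libtorrentv1
#   prints: binhex/arch-delugevpn:libtorrentv1
```

On Unraid the resulting string would go into the Repository field of the container template, as described in the FAQ entry linked above.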

-

On 6/10/2023 at 2:47 AM, lrx345 said:

Hi @binhex,

I believe I've broken something with my instance, and it is making the Unraid web UI unstable. After a short time of running, the deluge webui no longer responds, and the container fails to exit even with a `docker kill` command.

I recently changed my downloads folder from a 1 TB HDD to an 8 TB HDD, and I ended up using the same disk share name as the previous drive. That's the only change I've made recently. All my files loaded up fine and started seeding, so I don't think that is it?

Anyways, here are some logs from my system and the container itself.

System Log

Docker Log

Delugevpn Log

Unfortunately, I'm still struggling with this.

I've tried:

- Changing the mount point for the new HDD, just in case

- Deleting the delugevpn image and re-adding it

- Clearing app data and starting fresh

Unfortunately, in all cases the delugevpn webui stops responding after a few hours and the container crashes. To shut down the container I have to run

ps auxw | grep [containerID]

followed by kill -9 [processID]

Any help is greatly appreciated.
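The manual lookup above can be narrowed to just the shim process for one container. This is only a sketch: `shim_pids` is a hypothetical helper, and it assumes the `ps auxw` column layout shown earlier in this thread (PID in the second column, container ID after `-id` on the `containerd-shim` command line). It prints PIDs and does not kill anything.

```shell
#!/bin/sh
# Hypothetical helper: read `ps auxw`-style output on stdin and print the
# PID (column 2) of every containerd shim whose command line contains
# "-id <container ID>". A leading prefix of the full ID also matches.
shim_pids() {
    awk -v id="$1" '/containerd-shim/ && index($0, "-id " id) { print $2 }'
}

# Possible usage on the host (left commented out on purpose):
#   ps auxw | shim_pids d804d0f0a6a6
#   kill -9 <PID printed above>    # last resort, as described in the post
```

Forcibly killing the shim is a workaround rather than a fix, so it is worth checking the printed PID against the full `ps` line before acting on it.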

-----

Edit:

I've found more logs that are related to deluge and are referencing call traces:

Jun 13 03:24:04 NAS kernel: Call Trace:

Jun 13 03:24:04 NAS kernel: <TASK>

Jun 13 03:24:04 NAS kernel: __schedule+0x596/0x5f6

Jun 13 03:24:04 NAS kernel: ? get_futex_key+0x281/0x2ad

Jun 13 03:24:04 NAS kernel: schedule+0x8e/0xc3

Jun 13 03:24:04 NAS kernel: __down_read_common+0x241/0x295

Jun 13 03:24:04 NAS kernel: do_exit+0x279/0x8e5

Jun 13 03:24:04 NAS kernel: make_task_dead+0xba/0xba

Jun 13 03:24:04 NAS kernel: rewind_stack_and_make_dead+0x17/0x17

Jun 13 03:24:04 NAS kernel: RIP: 0033:0x154c05f6c60d

Jun 13 03:24:04 NAS kernel: RSP: 002b:0000154c01be6888 EFLAGS: 00010202

Jun 13 03:24:04 NAS kernel: RAX: 0000154be001ee90 RBX: 0000154be0000dd8 RCX: 0000154c01be6ac0

Jun 13 03:24:04 NAS kernel: RDX: 0000000000004000 RSI: 000015355e67fceb RDI: 0000154be001ee90

Jun 13 03:24:04 NAS kernel: RBP: 0000000000000000 R08: 0000000000000002 R09: 0000000000000000

Jun 13 03:24:04 NAS kernel: R10: 0000000000000008 R11: 0000000000000246 R12: 0000000000000000

Jun 13 03:24:04 NAS kernel: R13: 0000154be00272f0 R14: 0000000000000002 R15: 0000154bfc428540

Jun 13 03:24:04 NAS kernel: </TASK>

Jun 13 03:24:32 NAS kernel: rcu: INFO: rcu_preempt detected expedited stalls on CPUs/tasks: { P22284 } 2052231 jiffies s: 47953 root: 0x0/T

Jun 13 03:24:32 NAS kernel: rcu: blocking rcu_node structures (internal RCU debug):

Jun 13 03:25:38 NAS kernel: rcu: INFO: rcu_preempt detected expedited stalls on CPUs/tasks: { P22284 } 2117767 jiffies s: 47953 root: 0x0/T

Jun 13 03:25:38 NAS kernel: rcu: blocking rcu_node structures (internal RCU debug):

Jun 13 03:26:43 NAS kernel: rcu: INFO: rcu_preempt detected expedited stalls on CPUs/tasks: { P22284 } 2183303 jiffies s: 47953 root: 0x0/T

Jun 13 03:26:43 NAS kernel: rcu: blocking rcu_node structures (internal RCU debug):

Jun 13 03:27:04 NAS kernel: rcu: INFO: rcu_preempt detected stalls on CPUs/tasks:

Jun 13 03:27:04 NAS kernel: rcu: Tasks blocked on level-0 rcu_node (CPUs 0-3): P22284/1:b..l

Jun 13 03:27:04 NAS kernel: (detected by 0, t=2220062 jiffies, g=86521621, q=1614141 ncpus=4)

Jun 13 03:27:04 NAS kernel: task:deluged state:D stack: 0 pid:22284 ppid: 21270 flags:0x00004002

Jun 13 03:27:04 NAS kernel: Call Trace:

Jun 13 03:27:04 NAS kernel: <TASK>

Jun 13 03:27:04 NAS kernel: __schedule+0x596/0x5f6

Jun 13 03:27:04 NAS kernel: ? get_futex_key+0x281/0x2ad

Jun 13 03:27:04 NAS kernel: schedule+0x8e/0xc3

Jun 13 03:27:04 NAS kernel: __down_read_common+0x241/0x295

Jun 13 03:27:04 NAS kernel: do_exit+0x279/0x8e5

Jun 13 03:27:04 NAS kernel: make_task_dead+0xba/0xba

Jun 13 03:27:04 NAS kernel: rewind_stack_and_make_dead+0x17/0x17

Jun 13 03:27:04 NAS kernel: RIP: 0033:0x154c05f6c60d

Jun 13 03:27:04 NAS kernel: RSP: 002b:0000154c01be6888 EFLAGS: 00010202

Jun 13 03:27:04 NAS kernel: RAX: 0000154be001ee90 RBX: 0000154be0000dd8 RCX: 0000154c01be6ac0

Jun 13 03:27:04 NAS kernel: RDX: 0000000000004000 RSI: 000015355e67fceb RDI: 0000154be001ee90

Jun 13 03:27:04 NAS kernel: RBP: 0000000000000000 R08: 0000000000000002 R09: 0000000000000000

Jun 13 03:27:04 NAS kernel: R10: 0000000000000008 R11: 0000000000000246 R12: 0000000000000000

Jun 13 03:27:04 NAS kernel: R13: 0000154be00272f0 R14: 0000000000000002 R15: 0000154bfc428540

Jun 13 03:27:04 NAS kernel: </TASK>

Any thoughts?
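To pick the blocked task out of a long syslog, the `task:… state:D … pid:…` lines can be filtered on their own. This is a rough sketch. It assumes the field layout seen in the excerpt above (`task:NAME`, `state:D`, `pid:NNN` with no space after `pid:`), and `blocked_tasks` is a hypothetical helper name, not an existing tool.

```shell
#!/bin/sh
# Hypothetical helper: read kernel log lines on stdin and print
# "<task name> <pid>" once for every task reported in state D
# (uninterruptible sleep).
blocked_tasks() {
    awk '{
        name = ""; pid = ""; dstate = 0
        for (i = 1; i <= NF; i++) {
            if ($i ~ /^task:/)       name = substr($i, 6)   # text after "task:"
            else if ($i == "state:D") dstate = 1
            else if ($i ~ /^pid:/)   pid = substr($i, 5)    # text after "pid:"
        }
        if (dstate && name != "") print name, pid
    }' | sort -u
}

# Possible usage (the log path is an assumption about the host):
#   blocked_tasks < /var/log/syslog
```

A task stuck in state D generally cannot act on signals until the kernel call it is waiting in returns, which would be consistent with `docker kill` having no effect on the container here.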

-

20 minutes ago, itimpi said:

You might find this section of the online documentation to be of help.

Thank you. I somewhat accidentally discovered that one of my docker containers is hanging, and the hangs seem to correlate with the WebUI freezes. I'll explore that route first and see if it reveals anything. The logs show some sign of this, with binhex-delugevpn hanging.

-

Hi @binhex,

I believe I've broken something with my instance, and it is making the Unraid web UI unstable. After a short time of running, the deluge webui no longer responds, and the container fails to exit even with a `docker kill` command.

I recently changed my downloads folder from a 1 TB HDD to an 8 TB HDD, and I ended up using the same disk share name as the previous drive. That's the only change I've made recently. All my files loaded up fine and started seeding, so I don't think that is it?

Anyways, here are some logs from my system and the container itself.

System Log

Jun 9 10:30:50 NAS kernel: microcode: microcode updated early to revision 0xf0, date = 2021-11-12

Jun 9 10:30:50 NAS kernel: Linux version 5.19.17-Unraid (root@Develop) (gcc (GCC) 12.2.0, GNU ld version 2.39-slack151) #2 SMP PREEMPT_DYNAMIC Wed Nov 2 11:54:15 PDT 2022

Jun 9 10:30:50 NAS kernel: Command line: BOOT_IMAGE=/bzimage initrd=/bzroot

Jun 9 10:30:50 NAS kernel: x86/fpu: Supporting XSAVE feature 0x001: 'x87 floating point registers'

Jun 9 10:30:50 NAS kernel: x86/fpu: Supporting XSAVE feature 0x002: 'SSE registers'

Jun 9 10:30:50 NAS kernel: x86/fpu: Supporting XSAVE feature 0x004: 'AVX registers'

Jun 9 10:30:50 NAS kernel: x86/fpu: Supporting XSAVE feature 0x008: 'MPX bounds registers'

Jun 9 10:30:50 NAS kernel: x86/fpu: Supporting XSAVE feature 0x010: 'MPX CSR'

Jun 9 10:30:50 NAS kernel: x86/fpu: xstate_offset[2]: 576, xstate_sizes[2]: 256

Jun 9 10:30:50 NAS kernel: x86/fpu: xstate_offset[3]: 832, xstate_sizes[3]: 64

Jun 9 10:30:50 NAS kernel: x86/fpu: xstate_offset[4]: 896, xstate_sizes[4]: 64

Jun 9 10:30:50 NAS kernel: x86/fpu: Enabled xstate features 0x1f, context size is 960 bytes, using 'compacted' format.

Jun 9 10:30:50 NAS kernel: signal: max sigframe size: 2032

Jun 9 10:30:50 NAS kernel: BIOS-provided physical RAM map:

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x0000000000000000-0x0000000000057fff] usable

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x0000000000058000-0x0000000000058fff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x0000000000059000-0x000000000009efff] usable

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x000000000009f000-0x00000000000fffff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x0000000000100000-0x00000000b2f78fff] usable

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000b2f79000-0x00000000b2f79fff] ACPI NVS

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000b2f7a000-0x00000000b2f7afff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000b2f7b000-0x00000000b9ae0fff] usable

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000b9ae1000-0x00000000b9e28fff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000b9e29000-0x00000000b9f60fff] usable

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000b9f61000-0x00000000ba646fff] ACPI NVS

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000ba647000-0x00000000baefefff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000baeff000-0x00000000baefffff] usable

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000baf00000-0x00000000bfffffff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000f0000000-0x00000000f7ffffff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000fe000000-0x00000000fe010fff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000fec00000-0x00000000fec00fff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000fee00000-0x00000000fee00fff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x00000000ff000000-0x00000000ffffffff] reserved

Jun 9 10:30:50 NAS kernel: BIOS-e820: [mem 0x0000000100000000-0x000000063effffff] usable

Jun 9 10:30:50 NAS kernel: NX (Execute Disable) protection: active

Jun 9 10:30:50 NAS kernel: e820: update [mem 0xa6801018-0xa680d857] usable ==> usable

### [PREVIOUS LINE REPEATED 1 TIMES] ###

Jun 9 10:30:50 NAS kernel: e820: update [mem 0xa67f0018-0xa6800057] usable ==> usable

### [PREVIOUS LINE REPEATED 1 TIMES] ###

Jun 9 10:30:50 NAS kernel: e820: update [mem 0xa67df018-0xa67efe57] usable ==> usable

### [PREVIOUS LINE REPEATED 1 TIMES] ###

Jun 9 10:30:50 NAS kernel: extended physical RAM map:

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x0000000000000000-0x0000000000057fff] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x0000000000058000-0x0000000000058fff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x0000000000059000-0x000000000009efff] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x000000000009f000-0x00000000000fffff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x0000000000100000-0x00000000a67df017] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000a67df018-0x00000000a67efe57] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000a67efe58-0x00000000a67f0017] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000a67f0018-0x00000000a6800057] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000a6800058-0x00000000a6801017] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000a6801018-0x00000000a680d857] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000a680d858-0x00000000b2f78fff] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000b2f79000-0x00000000b2f79fff] ACPI NVS

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000b2f7a000-0x00000000b2f7afff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000b2f7b000-0x00000000b9ae0fff] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000b9ae1000-0x00000000b9e28fff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000b9e29000-0x00000000b9f60fff] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000b9f61000-0x00000000ba646fff] ACPI NVS

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000ba647000-0x00000000baefefff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000baeff000-0x00000000baefffff] usable

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000baf00000-0x00000000bfffffff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000f0000000-0x00000000f7ffffff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000fe000000-0x00000000fe010fff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000fec00000-0x00000000fec00fff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000fee00000-0x00000000fee00fff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x00000000ff000000-0x00000000ffffffff] reserved

Jun 9 10:30:50 NAS kernel: reserve setup_data: [mem 0x0000000100000000-0x000000063effffff] usable

Jun 9 10:30:50 NAS kernel: efi: EFI v2.50 by American Megatrends

Jun 9 10:30:50 NAS kernel: efi: ACPI 2.0=0xb9f61000 ACPI=0xb9f61000 SMBIOS=0xbadce000 SMBIOS 3.0=0xbadcd000 ESRT=0xb87c29d8

Jun 9 10:30:50 NAS kernel: SMBIOS 3.0.0 present.

Jun 9 10:30:50 NAS kernel: DMI: Gigabyte Technology Co., Ltd. Z170XP-SLI/Z170XP-SLI-CF, BIOS F22c 12/01/2017

Jun 9 10:30:50 NAS kernel: tsc: Detected 3500.000 MHz processor

Jun 9 10:30:50 NAS kernel: tsc: Detected 3499.912 MHz TSC

Jun 9 10:30:50 NAS kernel: e820: update [mem 0x00000000-0x00000fff] usable ==> reserved

Jun 9 10:30:50 NAS kernel: e820: remove [mem 0x000a0000-0x000fffff] usable

Jun 9 10:30:50 NAS kernel: last_pfn = 0x63f000 max_arch_pfn = 0x400000000

Jun 9 10:30:50 NAS kernel: x86/PAT: Configuration [0-7]: WB WC UC- UC WB WP UC- WT

Jun 9 10:30:50 NAS kernel: last_pfn = 0xbaf00 max_arch_pfn = 0x400000000

Jun 9 10:30:50 NAS kernel: found SMP MP-table at [mem 0x000fccf0-0x000fccff]

Jun 9 10:30:50 NAS kernel: esrt: Reserving ESRT space from 0x00000000b87c29d8 to 0x00000000b87c2a10.

Jun 9 10:30:50 NAS kernel: e820: update [mem 0xb87c2000-0xb87c2fff] usable ==> reserved

Jun 9 10:30:50 NAS kernel: Using GB pages for direct mapping

Jun 9 10:30:50 NAS kernel: Secure boot disabled

Jun 9 10:30:50 NAS kernel: RAMDISK: [mem 0x76242000-0x7fffffff]

Jun 9 10:30:50 NAS kernel: ACPI: Early table checksum verification disabled

Jun 9 10:30:50 NAS kernel: ACPI: RSDP 0x00000000B9F61000 000024 (v02 ALASKA)

Jun 9 10:30:50 NAS kernel: ACPI: XSDT 0x00000000B9F610A8 0000CC (v01 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: FACP 0x00000000B9F896F8 000114 (v06 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: DSDT 0x00000000B9F61208 0284EA (v02 ALASKA A M I 01072009 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: FACS 0x00000000BA646C40 000040

Jun 9 10:30:50 NAS kernel: ACPI: APIC 0x00000000B9F89810 000084 (v03 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: FPDT 0x00000000B9F89898 000044 (v01 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: MCFG 0x00000000B9F898E0 00003C (v01 ALASKA A M I 01072009 MSFT 00000097)

Jun 9 10:30:50 NAS kernel: ACPI: FIDT 0x00000000B9F89920 00009C (v01 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F899C0 003154 (v02 SaSsdt SaSsdt 00003000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F8CB18 002544 (v02 PegSsd PegSsdt 00001000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: HPET 0x00000000B9F8F060 000038 (v01 INTEL SKL 00000001 MSFT 0000005F)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F8F098 000E3B (v02 INTEL Ther_Rvp 00001000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F8FED8 002AD7 (v02 INTEL xh_rvp10 00000000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: UEFI 0x00000000B9F929B0 000042 (v01 INTEL EDK2 00000002 01000013)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F929F8 000EDE (v02 CpuRef CpuSsdt 00003000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: LPIT 0x00000000B9F938D8 000094 (v01 INTEL SKL 00000000 MSFT 0000005F)

Jun 9 10:30:50 NAS kernel: ACPI: WSMT 0x00000000B9F93970 000028 (v01 INTEL SKL 00000000 MSFT 0000005F)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F93998 00029F (v02 INTEL sensrhub 00000000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0x00000000B9F93C38 003002 (v02 INTEL PtidDevc 00001000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: DBGP 0x00000000B9F96C40 000034 (v01 INTEL 00000002 MSFT 0000005F)

Jun 9 10:30:50 NAS kernel: ACPI: DBG2 0x00000000B9F96C78 000054 (v00 INTEL 00000002 MSFT 0000005F)

Jun 9 10:30:50 NAS kernel: ACPI: BGRT 0x00000000B9F96CD0 000038 (v01 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: DMAR 0x00000000B9F96D08 0000A8 (v01 INTEL SKL 00000001 INTL 00000001)

Jun 9 10:30:50 NAS kernel: ACPI: BGRT 0x00000000B9F96DB0 000038 (v01 ALASKA A M I 01072009 AMI 00010013)

Jun 9 10:30:50 NAS kernel: ACPI: Reserving FACP table memory at [mem 0xb9f896f8-0xb9f8980b]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving DSDT table memory at [mem 0xb9f61208-0xb9f896f1]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving FACS table memory at [mem 0xba646c40-0xba646c7f]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving APIC table memory at [mem 0xb9f89810-0xb9f89893]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving FPDT table memory at [mem 0xb9f89898-0xb9f898db]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving MCFG table memory at [mem 0xb9f898e0-0xb9f8991b]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving FIDT table memory at [mem 0xb9f89920-0xb9f899bb]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f899c0-0xb9f8cb13]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f8cb18-0xb9f8f05b]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving HPET table memory at [mem 0xb9f8f060-0xb9f8f097]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f8f098-0xb9f8fed2]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f8fed8-0xb9f929ae]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving UEFI table memory at [mem 0xb9f929b0-0xb9f929f1]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f929f8-0xb9f938d5]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving LPIT table memory at [mem 0xb9f938d8-0xb9f9396b]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving WSMT table memory at [mem 0xb9f93970-0xb9f93997]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f93998-0xb9f93c36]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving SSDT table memory at [mem 0xb9f93c38-0xb9f96c39]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving DBGP table memory at [mem 0xb9f96c40-0xb9f96c73]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving DBG2 table memory at [mem 0xb9f96c78-0xb9f96ccb]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving BGRT table memory at [mem 0xb9f96cd0-0xb9f96d07]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving DMAR table memory at [mem 0xb9f96d08-0xb9f96daf]

Jun 9 10:30:50 NAS kernel: ACPI: Reserving BGRT table memory at [mem 0xb9f96db0-0xb9f96de7]

Jun 9 10:30:50 NAS kernel: No NUMA configuration found

Jun 9 10:30:50 NAS kernel: Faking a node at [mem 0x0000000000000000-0x000000063effffff]

Jun 9 10:30:50 NAS kernel: NODE_DATA(0) allocated [mem 0x63eff9000-0x63effcfff]

Jun 9 10:30:50 NAS kernel: Zone ranges:

Jun 9 10:30:50 NAS kernel: DMA [mem 0x0000000000001000-0x0000000000ffffff]

Jun 9 10:30:50 NAS kernel: DMA32 [mem 0x0000000001000000-0x00000000ffffffff]

Jun 9 10:30:50 NAS kernel: Normal [mem 0x0000000100000000-0x000000063effffff]

Jun 9 10:30:50 NAS kernel: Movable zone start for each node

Jun 9 10:30:50 NAS kernel: Early memory node ranges

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x0000000000001000-0x0000000000057fff]

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x0000000000059000-0x000000000009efff]

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x0000000000100000-0x00000000b2f78fff]

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x00000000b2f7b000-0x00000000b9ae0fff]

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x00000000b9e29000-0x00000000b9f60fff]

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x00000000baeff000-0x00000000baefffff]

Jun 9 10:30:50 NAS kernel: node 0: [mem 0x0000000100000000-0x000000063effffff]

Jun 9 10:30:50 NAS kernel: Initmem setup node 0 [mem 0x0000000000001000-0x000000063effffff]

Jun 9 10:30:50 NAS kernel: On node 0, zone DMA: 1 pages in unavailable ranges

### [PREVIOUS LINE REPEATED 1 TIMES] ###

Jun 9 10:30:50 NAS kernel: On node 0, zone DMA: 97 pages in unavailable ranges

Jun 9 10:30:50 NAS kernel: On node 0, zone DMA32: 2 pages in unavailable ranges

Jun 9 10:30:50 NAS kernel: On node 0, zone DMA32: 840 pages in unavailable ranges

Jun 9 10:30:50 NAS kernel: On node 0, zone DMA32: 3998 pages in unavailable ranges

Jun 9 10:30:50 NAS kernel: On node 0, zone Normal: 20736 pages in unavailable ranges

Jun 9 10:30:50 NAS kernel: On node 0, zone Normal: 4096 pages in unavailable ranges

Jun 9 10:30:50 NAS kernel: Reserving Intel graphics memory at [mem 0xbc000000-0xbfffffff]

Jun 9 10:30:50 NAS kernel: ACPI: PM-Timer IO Port: 0x1808

Jun 9 10:30:50 NAS kernel: ACPI: LAPIC_NMI (acpi_id[0x01] high edge lint[0x1])

Jun 9 10:30:50 NAS kernel: ACPI: LAPIC_NMI (acpi_id[0x02] high edge lint[0x1])

Jun 9 10:30:50 NAS kernel: ACPI: LAPIC_NMI (acpi_id[0x03] high edge lint[0x1])

Jun 9 10:30:50 NAS kernel: ACPI: LAPIC_NMI (acpi_id[0x04] high edge lint[0x1])

Jun 9 10:30:50 NAS kernel: IOAPIC[0]: apic_id 2, version 32, address 0xfec00000, GSI 0-119

Jun 9 10:30:50 NAS kernel: ACPI: INT_SRC_OVR (bus 0 bus_irq 0 global_irq 2 dfl dfl)

Jun 9 10:30:50 NAS kernel: ACPI: INT_SRC_OVR (bus 0 bus_irq 9 global_irq 9 high level)

Jun 9 10:30:50 NAS kernel: ACPI: Using ACPI (MADT) for SMP configuration information

Jun 9 10:30:50 NAS kernel: ACPI: HPET id: 0x8086a201 base: 0xfed00000

Jun 9 10:30:50 NAS kernel: e820: update [mem 0xb69f0000-0xb6a33fff] usable ==> reserved

Jun 9 10:30:50 NAS kernel: TSC deadline timer available

Jun 9 10:30:50 NAS kernel: smpboot: Allowing 4 CPUs, 0 hotplug CPUs

Jun 9 10:30:50 NAS kernel: [mem 0xc0000000-0xefffffff] available for PCI devices

Jun 9 10:30:50 NAS kernel: Booting paravirtualized kernel on bare hardware

Jun 9 10:30:50 NAS kernel: clocksource: refined-jiffies: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 1910969940391419 ns

Jun 9 10:30:50 NAS kernel: setup_percpu: NR_CPUS:256 nr_cpumask_bits:256 nr_cpu_ids:4 nr_node_ids:1

Jun 9 10:30:50 NAS kernel: percpu: Embedded 55 pages/cpu s185960 r8192 d31128 u524288

Jun 9 10:30:50 NAS kernel: pcpu-alloc: s185960 r8192 d31128 u524288 alloc=1*2097152

Jun 9 10:30:50 NAS kernel: pcpu-alloc: [0] 0 1 2 3

Jun 9 10:30:50 NAS kernel: Fallback order for Node 0: 0

Jun 9 10:30:50 NAS kernel: Built 1 zonelists, mobility grouping on. Total pages: 6163687

Jun 9 10:30:50 NAS kernel: Policy zone: Normal

Jun 9 10:30:50 NAS kernel: Kernel command line: BOOT_IMAGE=/bzimage initrd=/bzroot

Jun 9 10:30:50 NAS kernel: Unknown kernel command line parameters "BOOT_IMAGE=/bzimage", will be passed to user space.

Jun 9 10:30:50 NAS kernel: Dentry cache hash table entries: 4194304 (order: 13, 33554432 bytes, linear)

Jun 9 10:30:50 NAS kernel: Inode-cache hash table entries: 2097152 (order: 12, 16777216 bytes, linear)

Jun 9 10:30:50 NAS kernel: mem auto-init: stack:off, heap alloc:off, heap free:off

Jun 9 10:30:50 NAS kernel: Memory: 24217408K/25046740K available (12295K kernel code, 1681K rwdata, 3916K rodata, 1836K init, 1820K bss, 829076K reserved, 0K cma-reserved)

Jun 9 10:30:50 NAS kernel: SLUB: HWalign=64, Order=0-3, MinObjects=0, CPUs=4, Nodes=1

Jun 9 10:30:50 NAS kernel: Kernel/User page tables isolation: enabled

Jun 9 10:30:50 NAS kernel: ftrace: allocating 41888 entries in 164 pages

Jun 9 10:30:50 NAS kernel: ftrace: allocated 164 pages with 3 groups

Jun 9 10:30:50 NAS kernel: Dynamic Preempt: voluntary

Jun 9 10:30:50 NAS kernel: rcu: Preemptible hierarchical RCU implementation.

Jun 9 10:30:50 NAS kernel: rcu: RCU event tracing is enabled.

Jun 9 10:30:50 NAS kernel: rcu: RCU restricting CPUs from NR_CPUS=256 to nr_cpu_ids=4.

Jun 9 10:30:50 NAS kernel: Trampoline variant of Tasks RCU enabled.

Jun 9 10:30:50 NAS kernel: Rude variant of Tasks RCU enabled.

Jun 9 10:30:50 NAS kernel: Tracing variant of Tasks RCU enabled.

Jun 9 10:30:50 NAS kernel: rcu: RCU calculated value of scheduler-enlistment delay is 100 jiffies.

Jun 9 10:30:50 NAS kernel: rcu: Adjusting geometry for rcu_fanout_leaf=16, nr_cpu_ids=4

Jun 9 10:30:50 NAS kernel: NR_IRQS: 16640, nr_irqs: 1024, preallocated irqs: 16

Jun 9 10:30:50 NAS kernel: rcu: srcu_init: Setting srcu_struct sizes based on contention.

Jun 9 10:30:50 NAS kernel: Console: colour dummy device 80x25

Jun 9 10:30:50 NAS kernel: printk: console [tty0] enabled

Jun 9 10:30:50 NAS kernel: ACPI: Core revision 20220331

Jun 9 10:30:50 NAS kernel: clocksource: hpet: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 79635855245 ns

Jun 9 10:30:50 NAS kernel: APIC: Switch to symmetric I/O mode setup

Jun 9 10:30:50 NAS kernel: DMAR: Host address width 39

Jun 9 10:30:50 NAS kernel: DMAR: DRHD base: 0x000000fed90000 flags: 0x0

Jun 9 10:30:50 NAS kernel: DMAR: dmar0: reg_base_addr fed90000 ver 1:0 cap 1c0000c40660462 ecap 7e3ff0505e

Jun 9 10:30:50 NAS kernel: DMAR: DRHD base: 0x000000fed91000 flags: 0x1

Jun 9 10:30:50 NAS kernel: DMAR: dmar1: reg_base_addr fed91000 ver 1:0 cap d2008c40660462 ecap f050da

Jun 9 10:30:50 NAS kernel: DMAR: RMRR base: 0x000000b9da3000 end: 0x000000b9dc2fff

Jun 9 10:30:50 NAS kernel: DMAR: RMRR base: 0x000000bb800000 end: 0x000000bfffffff

Jun 9 10:30:50 NAS kernel: DMAR-IR: IOAPIC id 2 under DRHD base 0xfed91000 IOMMU 1

Jun 9 10:30:50 NAS kernel: DMAR-IR: HPET id 0 under DRHD base 0xfed91000

Jun 9 10:30:50 NAS kernel: DMAR-IR: Queued invalidation will be enabled to support x2apic and Intr-remapping.

Jun 9 10:30:50 NAS kernel: DMAR-IR: Enabled IRQ remapping in x2apic mode

Jun 9 10:30:50 NAS kernel: x2apic enabled

Jun 9 10:30:50 NAS kernel: Switched APIC routing to cluster x2apic.

Jun 9 10:30:50 NAS kernel: ..TIMER: vector=0x30 apic1=0 pin1=2 apic2=-1 pin2=-1

Jun 9 10:30:50 NAS kernel: clocksource: tsc-early: mask: 0xffffffffffffffff max_cycles: 0x3272fd97217, max_idle_ns: 440795241220 ns

Jun 9 10:30:50 NAS kernel: Calibrating delay loop (skipped), value calculated using timer frequency.. 6999.82 BogoMIPS (lpj=3499912)

Jun 9 10:30:50 NAS kernel: pid_max: default: 32768 minimum: 301

Jun 9 10:30:50 NAS kernel: Mount-cache hash table entries: 65536 (order: 7, 524288 bytes, linear)

Jun 9 10:30:50 NAS kernel: Mountpoint-cache hash table entries: 65536 (order: 7, 524288 bytes, linear)

Jun 9 10:30:50 NAS kernel: x86/cpu: SGX disabled by BIOS.

Jun 9 10:30:50 NAS kernel: CPU0: Thermal monitoring enabled (TM1)

Jun 9 10:30:50 NAS kernel: process: using mwait in idle threads

Jun 9 10:30:50 NAS kernel: Last level iTLB entries: 4KB 128, 2MB 8, 4MB 8

Jun 9 10:30:50 NAS kernel: Last level dTLB entries: 4KB 64, 2MB 0, 4MB 0, 1GB 4

Jun 9 10:30:50 NAS kernel: Spectre V1 : Mitigation: usercopy/swapgs barriers and __user pointer sanitization

Jun 9 10:30:50 NAS kernel: Spectre V2 : Mitigation: IBRS

Jun 9 10:30:50 NAS kernel: Spectre V2 : Spectre v2 / SpectreRSB mitigation: Filling RSB on context switch

Jun 9 10:30:50 NAS kernel: Spectre V2 : Spectre v2 / SpectreRSB : Filling RSB on VMEXIT

Jun 9 10:30:50 NAS kernel: RETBleed: Mitigation: IBRS

Jun 9 10:30:50 NAS kernel: Spectre V2 : mitigation: Enabling conditional Indirect Branch Prediction Barrier

Jun 9 10:30:50 NAS kernel: Speculative Store Bypass: Mitigation: Speculative Store Bypass disabled via prctl

Jun 9 10:30:50 NAS kernel: MDS: Mitigation: Clear CPU buffers

Jun 9 10:30:50 NAS kernel: TAA: Mitigation: TSX disabled

Jun 9 10:30:50 NAS kernel: MMIO Stale Data: Mitigation: Clear CPU buffers

Jun 9 10:30:50 NAS kernel: SRBDS: Mitigation: Microcode

Jun 9 10:30:50 NAS kernel: Freeing SMP alternatives memory: 24K

Jun 9 10:30:50 NAS kernel: smpboot: CPU0: Intel(R) Core(TM) i5-6600K CPU @ 3.50GHz (family: 0x6, model: 0x5e, stepping: 0x3)

Jun 9 10:30:50 NAS kernel: cblist_init_generic: Setting adjustable number of callback queues.

Jun 9 10:30:50 NAS kernel: cblist_init_generic: Setting shift to 2 and lim to 1.

### [PREVIOUS LINE REPEATED 2 TIMES] ###

Jun 9 10:30:50 NAS kernel: Performance Events: PEBS fmt3+, Skylake events, 32-deep LBR, full-width counters, Intel PMU driver.

Jun 9 10:30:50 NAS kernel: ... version: 4

Jun 9 10:30:50 NAS kernel: ... bit width: 48

Jun 9 10:30:50 NAS kernel: ... generic registers: 8

Jun 9 10:30:50 NAS kernel: ... value mask: 0000ffffffffffff

Jun 9 10:30:50 NAS kernel: ... max period: 00007fffffffffff

Jun 9 10:30:50 NAS kernel: ... fixed-purpose events: 3

Jun 9 10:30:50 NAS kernel: ... event mask: 00000007000000ff

Jun 9 10:30:50 NAS kernel: Estimated ratio of average max frequency by base frequency (times 1024): 1053

Jun 9 10:30:50 NAS kernel: rcu: Hierarchical SRCU implementation.

Jun 9 10:30:50 NAS kernel: rcu: Max phase no-delay instances is 400.

Jun 9 10:30:50 NAS kernel: smp: Bringing up secondary CPUs ...

Jun 9 10:30:50 NAS kernel: x86: Booting SMP configuration:

Jun 9 10:30:50 NAS kernel: .... node #0, CPUs: #1 #2 #3

Jun 9 10:30:50 NAS kernel: smp: Brought up 1 node, 4 CPUs

Jun 9 10:30:50 NAS kernel: smpboot: Max logical packages: 1

Jun 9 10:30:50 NAS kernel: smpboot: Total of 4 processors activated (27999.29 BogoMIPS)

Jun 9 10:30:50 NAS kernel: devtmpfs: initialized

Jun 9 10:30:50 NAS kernel: x86/mm: Memory block size: 128MB

Jun 9 10:30:50 NAS kernel: ACPI: PM: Registering ACPI NVS region [mem 0xb2f79000-0xb2f79fff] (4096 bytes)

Jun 9 10:30:50 NAS kernel: ACPI: PM: Registering ACPI NVS region [mem 0xb9f61000-0xba646fff] (7233536 bytes)

Jun 9 10:30:50 NAS kernel: clocksource: jiffies: mask: 0xffffffff max_cycles: 0xffffffff, max_idle_ns: 1911260446275000 ns

Jun 9 10:30:50 NAS kernel: futex hash table entries: 1024 (order: 4, 65536 bytes, linear)

Jun 9 10:30:50 NAS kernel: pinctrl core: initialized pinctrl subsystem

Jun 9 10:30:50 NAS kernel: NET: Registered PF_NETLINK/PF_ROUTE protocol family

Jun 9 10:30:50 NAS kernel: thermal_sys: Registered thermal governor 'fair_share'

Jun 9 10:30:50 NAS kernel: thermal_sys: Registered thermal governor 'bang_bang'

Jun 9 10:30:50 NAS kernel: thermal_sys: Registered thermal governor 'step_wise'

Jun 9 10:30:50 NAS kernel: thermal_sys: Registered thermal governor 'user_space'

Jun 9 10:30:50 NAS kernel: cpuidle: using governor ladder

Jun 9 10:30:50 NAS kernel: cpuidle: using governor menu

Jun 9 10:30:50 NAS kernel: HugeTLB: can optimize 4095 vmemmap pages for hugepages-1048576kB

Jun 9 10:30:50 NAS kernel: ACPI FADT declares the system doesn't support PCIe ASPM, so disable it

Jun 9 10:30:50 NAS kernel: PCI: MMCONFIG for domain 0000 [bus 00-7f] at [mem 0xf0000000-0xf7ffffff] (base 0xf0000000)

Jun 9 10:30:50 NAS kernel: PCI: MMCONFIG at [mem 0xf0000000-0xf7ffffff] reserved in E820

Jun 9 10:30:50 NAS kernel: PCI: Using configuration type 1 for base access

Jun 9 10:30:50 NAS kernel: kprobes: kprobe jump-optimization is enabled. All kprobes are optimized if possible.

Jun 9 10:30:50 NAS kernel: HugeTLB: can optimize 7 vmemmap pages for hugepages-2048kB

Jun 9 10:30:50 NAS kernel: HugeTLB registered 1.00 GiB page size, pre-allocated 0 pages

Jun 9 10:30:50 NAS kernel: HugeTLB registered 2.00 MiB page size, pre-allocated 0 pages

Jun 9 10:30:50 NAS kernel: raid6: avx2x4 gen() 37432 MB/s

Jun 9 10:30:50 NAS kernel: raid6: avx2x2 gen() 31926 MB/s

Jun 9 10:30:50 NAS kernel: raid6: avx2x1 gen() 29627 MB/s

Jun 9 10:30:50 NAS kernel: raid6: using algorithm avx2x4 gen() 37432 MB/s

Jun 9 10:30:50 NAS kernel: raid6: .... xor() 18700 MB/s, rmw enabled

Jun 9 10:30:50 NAS kernel: raid6: using avx2x2 recovery algorithm

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(Module Device)

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(Processor Device)

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(3.0 _SCP Extensions)

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(Processor Aggregator Device)

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(Linux-Dell-Video)

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(Linux-Lenovo-NV-HDMI-Audio)

Jun 9 10:30:50 NAS kernel: ACPI: Added _OSI(Linux-HPI-Hybrid-Graphics)

Jun 9 10:30:50 NAS kernel: ACPI: 8 ACPI AML tables successfully acquired and loaded

Jun 9 10:30:50 NAS kernel: ACPI: [Firmware Bug]: BIOS _OSI(Linux) query ignored

Jun 9 10:30:50 NAS kernel: ACPI: Dynamic OEM Table Load:

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0xFFFF888100F42000 000738 (v02 PmRef Cpu0Ist 00003000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: \_PR_.CPU0: _OSC native thermal LVT Acked

Jun 9 10:30:50 NAS kernel: ACPI: Dynamic OEM Table Load:

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0xFFFF888100F3D000 0003FF (v02 PmRef Cpu0Cst 00003001 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: Dynamic OEM Table Load:

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0xFFFF888100F43000 00065C (v02 PmRef ApIst 00003000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: Dynamic OEM Table Load:

Jun 9 10:30:50 NAS kernel: ACPI: SSDT 0xFFFF888101071800 00018A (v02 PmRef ApCst 00003000 INTL 20160422)

Jun 9 10:30:50 NAS kernel: ACPI: Interpreter enabled

Jun 9 10:30:50 NAS kernel: ACPI: PM: (supports S0 S3 S5)

Jun 9 10:30:50 NAS kernel: ACPI: Using IOAPIC for interrupt routing

Jun 9 10:30:50 NAS kernel: PCI: Using host bridge windows from ACPI; if necessary, use "pci=nocrs" and report a bug

Jun 9 10:30:50 NAS kernel: PCI: Using E820 reservations for host bridge windows

Jun 9 10:30:50 NAS kernel: ACPI: Enabled 6 GPEs in block 00 to 7F

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [PG00]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [PG01]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [PG02]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [WRST]

### [PREVIOUS LINE REPEATED 19 TIMES] ###

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [FN00]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [FN01]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [FN02]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [FN03]

Jun 9 10:30:50 NAS kernel: ACPI: PM: Power Resource [FN04]

Jun 9 10:30:50 NAS kernel: ACPI: PCI Root Bridge [PCI0] (domain 0000 [bus 00-7e])

Jun 9 10:30:50 NAS kernel: acpi PNP0A08:00: _OSC: OS supports [ExtendedConfig ASPM ClockPM Segments MSI HPX-Type3]

Jun 9 10:30:50 NAS kernel: acpi PNP0A08:00: _OSC: OS requested [PME AER PCIeCapability LTR]

Jun 9 10:30:50 NAS kernel: acpi PNP0A08:00: _OSC: platform willing to grant [PME AER PCIeCapability LTR]

Jun 9 10:30:50 NAS kernel: acpi PNP0A08:00: _OSC: platform retains control of PCIe features (AE_ERROR)

Jun 9 10:30:50 NAS kernel: PCI host bridge to bus 0000:00

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: root bus resource [io 0x0000-0x0cf7 window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: root bus resource [io 0x0d00-0xffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: root bus resource [mem 0x000a0000-0x000bffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: root bus resource [mem 0xc0000000-0xefffffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: root bus resource [mem 0xfd000000-0xfe7fffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: root bus resource [bus 00-7e]

Jun 9 10:30:50 NAS kernel: pci 0000:00:00.0: [8086:191f] type 00 class 0x060000

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.0: [8086:1901] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.0: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: [8086:1905] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: [8086:1912] type 00 class 0x030000

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: reg 0x10: [mem 0xee000000-0xeeffffff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: reg 0x18: [mem 0xd0000000-0xdfffffff 64bit pref]

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: reg 0x20: [io 0xf000-0xf03f]

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: BAR 2: assigned to efifb

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: Video device with shadowed ROM at [mem 0x000c0000-0x000dffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:14.0: [8086:a12f] type 00 class 0x0c0330

Jun 9 10:30:50 NAS kernel: pci 0000:00:14.0: reg 0x10: [mem 0xef330000-0xef33ffff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:00:14.0: PME# supported from D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:16.0: [8086:a13a] type 00 class 0x078000

Jun 9 10:30:50 NAS kernel: pci 0000:00:16.0: reg 0x10: [mem 0xef34d000-0xef34dfff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:00:16.0: PME# supported from D3hot

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: [8086:a102] type 00 class 0x010601

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: reg 0x10: [mem 0xef348000-0xef349fff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: reg 0x14: [mem 0xef34c000-0xef34c0ff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: reg 0x18: [io 0xf090-0xf097]

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: reg 0x1c: [io 0xf080-0xf083]

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: reg 0x20: [io 0xf060-0xf07f]

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: reg 0x24: [mem 0xef34b000-0xef34b7ff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: PME# supported from D3hot

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.0: [8086:a167] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.0: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.2: [8086:a169] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.2: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.2: Intel SPT PCH root port ACS workaround enabled

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.3: [8086:a16a] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.3: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.3: Intel SPT PCH root port ACS workaround enabled

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: [8086:a110] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: Intel SPT PCH root port ACS workaround enabled

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.4: [8086:a114] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.4: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.4: Intel SPT PCH root port ACS workaround enabled

Jun 9 10:30:50 NAS kernel: pci 0000:00:1d.0: [8086:a118] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:00:1d.0: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1d.0: Intel SPT PCH root port ACS workaround enabled

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.0: [8086:a145] type 00 class 0x060100

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.2: [8086:a121] type 00 class 0x058000

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.2: reg 0x10: [mem 0xef344000-0xef347fff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.3: [8086:a170] type 00 class 0x040300

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.3: reg 0x10: [mem 0xef340000-0xef343fff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.3: reg 0x20: [mem 0xef320000-0xef32ffff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.3: PME# supported from D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.4: [8086:a123] type 00 class 0x0c0500

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.4: reg 0x10: [mem 0xef34a000-0xef34a0ff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.4: reg 0x20: [io 0xf040-0xf05f]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.6: [8086:15b8] type 00 class 0x020000

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.6: reg 0x10: [mem 0xef300000-0xef31ffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.6: PME# supported from D0 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.0: PCI bridge to [bus 01]

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: [1000:0087] type 00 class 0x010700

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: reg 0x10: [io 0xe000-0xe0ff]

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: reg 0x14: [mem 0xef140000-0xef14ffff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: reg 0x1c: [mem 0xef100000-0xef13ffff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: reg 0x30: [mem 0xef000000-0xef0fffff pref]

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: supports D1 D2

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: PCI bridge to [bus 02]

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: bridge window [io 0xe000-0xefff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: bridge window [mem 0xef000000-0xef1fffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.0: PCI bridge to [bus 03]

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: [1b21:1080] type 01 class 0x060400

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: supports D1 D2

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: PME# supported from D0 D1 D2 D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.2: PCI bridge to [bus 04-05]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:05: extended config space not accessible

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: PCI bridge to [bus 05]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.3: PCI bridge to [bus 06]

Jun 9 10:30:50 NAS kernel: pci 0000:07:00.0: [1b21:1242] type 00 class 0x0c0330

Jun 9 10:30:50 NAS kernel: pci 0000:07:00.0: reg 0x10: [mem 0xef200000-0xef207fff 64bit]

Jun 9 10:30:50 NAS kernel: pci 0000:07:00.0: enabling Extended Tags

Jun 9 10:30:50 NAS kernel: pci 0000:07:00.0: PME# supported from D3hot D3cold

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: PCI bridge to [bus 07]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: bridge window [mem 0xef200000-0xef2fffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.4: PCI bridge to [bus 08]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1d.0: PCI bridge to [bus 09]

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKA configured for IRQ 11

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKB configured for IRQ 10

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKC configured for IRQ 11

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKD configured for IRQ 11

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKE configured for IRQ 11

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKF configured for IRQ 11

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKG configured for IRQ 11

Jun 9 10:30:50 NAS kernel: ACPI: PCI: Interrupt link LNKH configured for IRQ 11

Jun 9 10:30:50 NAS kernel: iommu: Default domain type: Passthrough

Jun 9 10:30:50 NAS kernel: SCSI subsystem initialized

Jun 9 10:30:50 NAS kernel: libata version 3.00 loaded.

Jun 9 10:30:50 NAS kernel: ACPI: bus type USB registered

Jun 9 10:30:50 NAS kernel: usbcore: registered new interface driver usbfs

Jun 9 10:30:50 NAS kernel: usbcore: registered new interface driver hub

Jun 9 10:30:50 NAS kernel: usbcore: registered new device driver usb

Jun 9 10:30:50 NAS kernel: pps_core: LinuxPPS API ver. 1 registered

Jun 9 10:30:50 NAS kernel: pps_core: Software ver. 5.3.6 - Copyright 2005-2007 Rodolfo Giometti <[email protected]>

Jun 9 10:30:50 NAS kernel: PTP clock support registered

Jun 9 10:30:50 NAS kernel: Registered efivars operations

Jun 9 10:30:50 NAS kernel: PCI: Using ACPI for IRQ routing

Jun 9 10:30:50 NAS kernel: PCI: pci_cache_line_size set to 64 bytes

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0x00058000-0x0005ffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0x0009f000-0x0009ffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xa67df018-0xa7ffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xa67f0018-0xa7ffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xa6801018-0xa7ffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xb2f79000-0xb3ffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xb69f0000-0xb7ffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xb87c2000-0xbbffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xb9ae1000-0xbbffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xb9f61000-0xbbffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0xbaf00000-0xbbffffff]

Jun 9 10:30:50 NAS kernel: e820: reserve RAM buffer [mem 0x63f000000-0x63fffffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: vgaarb: setting as boot VGA device

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: vgaarb: bridge control possible

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: vgaarb: VGA device added: decodes=io+mem,owns=io+mem,locks=none

Jun 9 10:30:50 NAS kernel: vgaarb: loaded

Jun 9 10:30:50 NAS kernel: hpet0: at MMIO 0xfed00000, IRQs 2, 8, 0, 0, 0, 0, 0, 0

Jun 9 10:30:50 NAS kernel: hpet0: 8 comparators, 64-bit 24.000000 MHz counter

Jun 9 10:30:50 NAS kernel: clocksource: Switched to clocksource tsc-early

Jun 9 10:30:50 NAS kernel: FS-Cache: Loaded

Jun 9 10:30:50 NAS kernel: pnp: PnP ACPI init

Jun 9 10:30:50 NAS kernel: system 00:00: [io 0x0a00-0x0a2f] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:00: [io 0x0a30-0x0a3f] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:00: [io 0x0a40-0x0a4f] has been reserved

Jun 9 10:30:50 NAS kernel: pnp 00:01: [dma 0 disabled]

Jun 9 10:30:50 NAS kernel: pnp 00:02: [dma 0 disabled]

Jun 9 10:30:50 NAS kernel: system 00:03: [io 0x0680-0x069f] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:03: [io 0xffff] has been reserved

### [PREVIOUS LINE REPEATED 2 TIMES] ###

Jun 9 10:30:50 NAS kernel: system 00:03: [io 0x1800-0x18fe] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:03: [io 0x164e-0x164f] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:04: [io 0x0800-0x087f] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:06: [io 0x1854-0x1857] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfed10000-0xfed17fff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfed18000-0xfed18fff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfed19000-0xfed19fff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xf0000000-0xf7ffffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfed20000-0xfed3ffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfed90000-0xfed93fff] could not be reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfed45000-0xfed8ffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xff000000-0xffffffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xfee00000-0xfeefffff] could not be reserved

Jun 9 10:30:50 NAS kernel: system 00:07: [mem 0xeffe0000-0xefffffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfd000000-0xfdabffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfdad0000-0xfdadffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfdb00000-0xfdffffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfe000000-0xfe01ffff] could not be reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfe036000-0xfe03bfff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfe03d000-0xfe3fffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:08: [mem 0xfe410000-0xfe7fffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:09: [io 0xff00-0xfffe] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:0a: [mem 0xfdaf0000-0xfdafffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:0a: [mem 0xfdae0000-0xfdaeffff] has been reserved

Jun 9 10:30:50 NAS kernel: system 00:0a: [mem 0xfdac0000-0xfdacffff] has been reserved

Jun 9 10:30:50 NAS kernel: pnp: PnP ACPI: found 11 devices

Jun 9 10:30:50 NAS kernel: clocksource: acpi_pm: mask: 0xffffff max_cycles: 0xffffff, max_idle_ns: 2085701024 ns

Jun 9 10:30:50 NAS kernel: NET: Registered PF_INET protocol family

Jun 9 10:30:50 NAS kernel: IP idents hash table entries: 262144 (order: 9, 2097152 bytes, linear)

Jun 9 10:30:50 NAS kernel: tcp_listen_portaddr_hash hash table entries: 16384 (order: 6, 262144 bytes, linear)

Jun 9 10:30:50 NAS kernel: Table-perturb hash table entries: 65536 (order: 6, 262144 bytes, linear)

Jun 9 10:30:50 NAS kernel: TCP established hash table entries: 262144 (order: 9, 2097152 bytes, linear)

Jun 9 10:30:50 NAS kernel: TCP bind hash table entries: 65536 (order: 8, 1048576 bytes, linear)

Jun 9 10:30:50 NAS kernel: TCP: Hash tables configured (established 262144 bind 65536)

Jun 9 10:30:50 NAS kernel: UDP hash table entries: 16384 (order: 7, 524288 bytes, linear)

Jun 9 10:30:50 NAS kernel: UDP-Lite hash table entries: 16384 (order: 7, 524288 bytes, linear)

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.0: PCI bridge to [bus 01]

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: PCI bridge to [bus 02]

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: bridge window [io 0xe000-0xefff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: bridge window [mem 0xef000000-0xef1fffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.0: PCI bridge to [bus 03]

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: PCI bridge to [bus 05]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.2: PCI bridge to [bus 04-05]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.3: PCI bridge to [bus 06]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: PCI bridge to [bus 07]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: bridge window [mem 0xef200000-0xef2fffff]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.4: PCI bridge to [bus 08]

Jun 9 10:30:50 NAS kernel: pci 0000:00:1d.0: PCI bridge to [bus 09]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: resource 4 [io 0x0000-0x0cf7 window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: resource 5 [io 0x0d00-0xffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: resource 6 [mem 0x000a0000-0x000bffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: resource 7 [mem 0xc0000000-0xefffffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:00: resource 8 [mem 0xfd000000-0xfe7fffff window]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:02: resource 0 [io 0xe000-0xefff]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:02: resource 1 [mem 0xef000000-0xef1fffff]

Jun 9 10:30:50 NAS kernel: pci_bus 0000:07: resource 1 [mem 0xef200000-0xef2fffff]

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: Disabling ASPM L0s/L1

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: can't disable ASPM; OS doesn't have ASPM control

Jun 9 10:30:50 NAS kernel: PCI: CLS 64 bytes, default 64

Jun 9 10:30:50 NAS kernel: DMAR: No ATSR found

Jun 9 10:30:50 NAS kernel: DMAR: No SATC found

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature fl1gp_support inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature pgsel_inv inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature nwfs inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature eafs inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature prs inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature nest inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature mts inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature sc_support inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: IOMMU feature dev_iotlb_support inconsistent

Jun 9 10:30:50 NAS kernel: DMAR: dmar0: Using Queued invalidation

Jun 9 10:30:50 NAS kernel: DMAR: dmar1: Using Queued invalidation

Jun 9 10:30:50 NAS kernel: Unpacking initramfs...

Jun 9 10:30:50 NAS kernel: pci 0000:00:00.0: Adding to iommu group 0

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.0: Adding to iommu group 1

Jun 9 10:30:50 NAS kernel: pci 0000:00:01.1: Adding to iommu group 1

Jun 9 10:30:50 NAS kernel: pci 0000:00:02.0: Adding to iommu group 2

Jun 9 10:30:50 NAS kernel: pci 0000:00:14.0: Adding to iommu group 3

Jun 9 10:30:50 NAS kernel: pci 0000:00:16.0: Adding to iommu group 4

Jun 9 10:30:50 NAS kernel: pci 0000:00:17.0: Adding to iommu group 5

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.0: Adding to iommu group 6

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.2: Adding to iommu group 7

Jun 9 10:30:50 NAS kernel: pci 0000:00:1b.3: Adding to iommu group 8

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.0: Adding to iommu group 9

Jun 9 10:30:50 NAS kernel: pci 0000:00:1c.4: Adding to iommu group 10

Jun 9 10:30:50 NAS kernel: pci 0000:00:1d.0: Adding to iommu group 11

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.0: Adding to iommu group 12

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.2: Adding to iommu group 12

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.3: Adding to iommu group 12

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.4: Adding to iommu group 12

Jun 9 10:30:50 NAS kernel: pci 0000:00:1f.6: Adding to iommu group 13

Jun 9 10:30:50 NAS kernel: pci 0000:02:00.0: Adding to iommu group 1

Jun 9 10:30:50 NAS kernel: pci 0000:04:00.0: Adding to iommu group 14

Jun 9 10:30:50 NAS kernel: pci 0000:07:00.0: Adding to iommu group 15

Jun 9 10:30:50 NAS kernel: DMAR: Intel(R) Virtualization Technology for Directed I/O

Jun 9 10:30:50 NAS kernel: PCI-DMA: Using software bounce buffering for IO (SWIOTLB)

Jun 9 10:30:50 NAS kernel: software IO TLB: mapped [mem 0x00000000ada51000-0x00000000b1a51000] (64MB)

Jun 9 10:30:50 NAS kernel: workingset: timestamp_bits=40 max_order=23 bucket_order=0

Jun 9 10:30:50 NAS kernel: squashfs: version 4.0 (2009/01/31) Phillip Lougher

Jun 9 10:30:50 NAS kernel: fuse: init (API version 7.36)

Jun 9 10:30:50 NAS kernel: xor: automatically using best checksumming function avx

Jun 9 10:30:50 NAS kernel: Key type asymmetric registered

Jun 9 10:30:50 NAS kernel: Block layer SCSI generic (bsg) driver version 0.4 loaded (major 250)

Jun 9 10:30:50 NAS kernel: io scheduler mq-deadline registered

Jun 9 10:30:50 NAS kernel: io scheduler kyber registered

Jun 9 10:30:50 NAS kernel: io scheduler bfq registered

Jun 9 10:30:50 NAS kernel: IPMI message handler: version 39.2

Jun 9 10:30:50 NAS kernel: Serial: 8250/16550 driver, 1 ports, IRQ sharing disabled

Jun 9 10:30:50 NAS kernel: 00:02: ttyS0 at I/O 0x3f8 (irq = 4, base_baud = 115200) is a 16550A

Jun 9 10:30:50 NAS kernel: tsc: Refined TSC clocksource calibration: 3503.999 MHz

Jun 9 10:30:50 NAS kernel: clocksource: tsc: mask: 0xffffffffffffffff max_cycles: 0x3282124c47b, max_idle_ns: 440795239402 ns

Jun 9 10:30:50 NAS kernel: clocksource: Switched to clocksource tsc

Jun 9 10:30:50 NAS kernel: Freeing initrd memory: 161528K

Jun 9 10:30:50 NAS kernel: lp: driver loaded but no devices found

Jun 9 10:30:50 NAS kernel: Hangcheck: starting hangcheck timer 0.9.1 (tick is 180 seconds, margin is 60 seconds).

Jun 9 10:30:50 NAS kernel: AMD-Vi: AMD IOMMUv2 functionality not available on this system - This is not a bug.

Jun 9 10:30:50 NAS kernel: parport_pc 00:01: reported by Plug and Play ACPI

Jun 9 10:30:50 NAS kernel: parport0: PC-style at 0x378, irq 5 [PCSPP(,...)]

Jun 9 10:30:50 NAS kernel: lp0: using parport0 (interrupt-driven).

Jun 9 10:30:50 NAS kernel: loop: module loaded

Jun 9 10:30:50 NAS kernel: Rounding down aligned max_sectors from 4294967295 to 4294967288

Jun 9 10:30:50 NAS kernel: db_root: cannot open: /etc/target

Jun 9 10:30:50 NAS kernel: VFIO - User Level meta-driver version: 0.3

Jun 9 10:30:50 NAS kernel: ehci_hcd: USB 2.0 'Enhanced' Host Controller (EHCI) Driver

Jun 9 10:30:50 NAS kernel: ehci-pci: EHCI PCI platform driver

Jun 9 10:30:50 NAS kernel: ohci_hcd: USB 1.1 'Open' Host Controller (OHCI) Driver

Jun 9 10:30:50 NAS kernel: ohci-pci: OHCI PCI platform driver

Jun 9 10:30:50 NAS kernel: uhci_hcd: USB Universal Host Controller Interface driver

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:00:14.0: xHCI Host Controller

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:00:14.0: new USB bus registered, assigned bus number 1

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:00:14.0: hcc params 0x200077c1 hci version 0x100 quirks 0x0000000001109810

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:00:14.0: xHCI Host Controller

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:00:14.0: new USB bus registered, assigned bus number 2

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:00:14.0: Host supports USB 3.0 SuperSpeed

Jun 9 10:30:50 NAS kernel: hub 1-0:1.0: USB hub found

Jun 9 10:30:50 NAS kernel: hub 1-0:1.0: 16 ports detected

Jun 9 10:30:50 NAS kernel: hub 2-0:1.0: USB hub found

Jun 9 10:30:50 NAS kernel: hub 2-0:1.0: 10 ports detected

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:07:00.0: xHCI Host Controller

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:07:00.0: new USB bus registered, assigned bus number 3

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:07:00.0: hcc params 0x0200eec0 hci version 0x110 quirks 0x0000000000800010

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:07:00.0: xHCI Host Controller

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:07:00.0: new USB bus registered, assigned bus number 4

Jun 9 10:30:50 NAS kernel: xhci_hcd 0000:07:00.0: Host supports USB 3.1 Enhanced SuperSpeed

Jun 9 10:30:50 NAS kernel: hub 3-0:1.0: USB hub found

Jun 9 10:30:50 NAS kernel: hub 3-0:1.0: 2 ports detected

Jun 9 10:30:50 NAS kernel: usb usb4: We don't know the algorithms for LPM for this host, disabling LPM.

Jun 9 10:30:50 NAS kernel: hub 4-0:1.0: USB hub found

Jun 9 10:30:50 NAS kernel: hub 4-0:1.0: 2 ports detected

Jun 9 10:30:50 NAS kernel: usbcore: registered new interface driver usb-storage

Jun 9 10:30:50 NAS kernel: i8042: PNP: No PS/2 controller found.

Jun 9 10:30:50 NAS kernel: mousedev: PS/2 mouse device common for all mice

Jun 9 10:30:50 NAS kernel: usbcore: registered new interface driver synaptics_usb

Jun 9 10:30:50 NAS kernel: input: PC Speaker as /devices/platform/pcspkr/input/input0

Jun 9 10:30:50 NAS kernel: rtc_cmos 00:05: RTC can wake from S4

Jun 9 10:30:50 NAS kernel: rtc_cmos 00:05: registered as rtc0

Jun 9 10:30:50 NAS kernel: rtc_cmos 00:05: setting system clock to 2023-06-09T16:30:41 UTC (1686328241)

Jun 9 10:30:50 NAS kernel: rtc_cmos 00:05: alarms up to one month, y3k, 242 bytes nvram, hpet irqs

Jun 9 10:30:50 NAS kernel: intel_pstate: Intel P-state driver initializing

Jun 9 10:30:50 NAS kernel: intel_pstate: HWP enabled

Jun 9 10:30:50 NAS kernel: efifb: probing for efifb

Jun 9 10:30:50 NAS kernel: efifb: framebuffer at 0xd0000000, using 1876k, total 1875k

Jun 9 10:30:50 NAS kernel: efifb: mode is 800x600x32, linelength=3200, pages=1

Jun 9 10:30:50 NAS kernel: efifb: scrolling: redraw

Jun 9 10:30:50 NAS kernel: efifb: Truecolor: size=8:8:8:8, shift=24:16:8:0

Jun 9 10:30:50 NAS kernel: Console: switching to colour frame buffer device 100x37

Jun 9 10:30:50 NAS kernel: fb0: EFI VGA frame buffer device

Jun 9 10:30:50 NAS kernel: pstore: Registered efi as persistent store backend

Jun 9 10:30:50 NAS kernel: hid: raw HID events driver (C) Jiri Kosina

Jun 9 10:30:50 NAS kernel: usbcore: registered new interface driver usbhid

Jun 9 10:30:50 NAS kernel: usbhid: USB HID core driver

Jun 9 10:30:50 NAS kernel: ipip: IPv4 and MPLS over IPv4 tunneling driver

Jun 9 10:30:50 NAS kernel: NET: Registered PF_INET6 protocol family

Jun 9 10:30:50 NAS kernel: Segment Routing with IPv6

Jun 9 10:30:50 NAS kernel: RPL Segment Routing with IPv6

Jun 9 10:30:50 NAS kernel: In-situ OAM (IOAM) with IPv6

Jun 9 10:30:50 NAS kernel: 9pnet: Installing 9P2000 support

Jun 9 10:30:50 NAS kernel: microcode: sig=0x506e3, pf=0x2, revision=0xf0

Jun 9 10:30:50 NAS kernel: microcode: Microcode Update Driver: v2.2.

Jun 9 10:30:50 NAS kernel: IPI shorthand broadcast: enabled

Jun 9 10:30:50 NAS kernel: sched_clock: Marking stable (12425950203, 3528501)->(12431259347, -1780643)

Jun 9 10:30:50 NAS kernel: registered taskstats version 1

Jun 9 10:30:50 NAS kernel: Btrfs loaded, crc32c=crc32c-generic, zoned=no, fsverity=no

Jun 9 10:30:50 NAS kernel: pstore: Using crash dump compression: deflate

Jun 9 10:30:50 NAS kernel: usb 1-6: new full-speed USB device number 2 using xhci_hcd

Jun 9 10:30:50 NAS kernel: hid-generic 0003:0764:0501.0001: hiddev96,hidraw0: USB HID v1.10 Device [CPS CP1500PFCLCD] on usb-0000:00:14.0-6/input0

Jun 9 10:30:50 NAS kernel: usb 4-1: new SuperSpeed USB device number 2 using xhci_hcd

Jun 9 10:30:50 NAS kernel: usb-storage 4-1:1.0: USB Mass Storage device detected

Jun 9 10:30:50 NAS kernel: scsi host0: usb-storage 4-1:1.0

Jun 9 10:30:50 NAS kernel: scsi 0:0:0:0: Direct-Access Samsung Flash Drive 1100 PQ: 0 ANSI: 6

Jun 9 10:30:50 NAS kernel: sd 0:0:0:0: Attached scsi generic sg0 type 0

Jun 9 10:30:50 NAS kernel: sd 0:0:0:0: [sda] 125313283 512-byte logical blocks: (64.2 GB/59.8 GiB)

Jun 9 10:30:50 NAS kernel: sd 0:0:0:0: [sda] Write Protect is off

Jun 9 10:30:50 NAS kernel: sd 0:0:0:0: [sda] Mode Sense: 43 00 00 00

Jun 9 10:30:50 NAS kernel: sd 0:0:0:0: [sda] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA

Jun 9 10:30:50 NAS kernel: sda: sda1

Jun 9 10:30:50 NAS kernel: sd 0:0:0:0: [sda] Attached SCSI removable disk

Jun 9 10:30:50 NAS kernel: floppy0: no floppy controllers found

Jun 9 10:30:50 NAS kernel: Freeing unused kernel image (initmem) memory: 1836K

Jun 9 10:30:50 NAS kernel: Write protecting the kernel read-only data: 18432k

Jun 9 10:30:50 NAS kernel: Freeing unused kernel image (text/rodata gap) memory: 2040K

Jun 9 10:30:50 NAS kernel: Freeing unused kernel image (rodata/data gap) memory: 180K

Jun 9 10:30:50 NAS kernel: rodata_test: all tests were successful

Jun 9 10:30:50 NAS kernel: Run /init as init process

Jun 9 10:30:50 NAS kernel: with arguments:

Jun 9 10:30:50 NAS kernel: /init

Jun 9 10:30:50 NAS kernel: with environment:

Jun 9 10:30:50 NAS kernel: HOME=/

Jun 9 10:30:50 NAS kernel: TERM=linux

Jun 9 10:30:50 NAS kernel: BOOT_IMAGE=/bzimage

Jun 9 10:30:50 NAS kernel: random: crng init done

Jun 9 10:30:50 NAS kernel: loop0: detected capacity change from 0 to 241472

Jun 9 10:30:50 NAS kernel: loop1: detected capacity change from 0 to 39792

Jun 9 10:30:50 NAS kernel: NET: Registered PF_UNIX/PF_LOCAL protocol family

Jun 9 10:30:50 NAS kernel: input: Sleep Button as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0C0E:00/input/input1

Jun 9 10:30:50 NAS kernel: ACPI: button: Sleep Button [SLPB]

Jun 9 10:30:50 NAS kernel: input: Power Button as /devices/LNXSYSTM:00/LNXSYBUS:00/PNP0C0C:00/input/input2

Jun 9 10:30:50 NAS kernel: ACPI: button: Power Button [PWRB]

Jun 9 10:30:50 NAS kernel: input: Power Button as /devices/LNXSYSTM:00/LNXPWRBN:00/input/input3

Jun 9 10:30:50 NAS kernel: ACPI: button: Power Button [PWRF]

Jun 9 10:30:50 NAS kernel: thermal LNXTHERM:00: registered as thermal_zone0

Jun 9 10:30:50 NAS kernel: ACPI: thermal: Thermal Zone [TZ00] (28 C)

Jun 9 10:30:50 NAS kernel: thermal LNXTHERM:01: registered as thermal_zone1

Jun 9 10:30:50 NAS kernel: ACPI: thermal: Thermal Zone [TZ01] (30 C)

Jun 9 10:30:50 NAS kernel: ahci 0000:00:17.0: version 3.0

Jun 9 10:30:50 NAS kernel: Linux agpgart interface v0.103

Jun 9 10:30:50 NAS kernel: ahci 0000:00:17.0: AHCI 0001.0301 32 slots 6 ports 6 Gbps 0x3f impl SATA mode

Jun 9 10:30:50 NAS kernel: ahci 0000:00:17.0: flags: 64bit ncq sntf pm led clo only pio slum part ems deso sadm sds apst

Jun 9 10:30:50 NAS kernel: scsi host1: ahci

Jun 9 10:30:50 NAS kernel: scsi host2: ahci

Jun 9 10:30:50 NAS kernel: scsi host3: ahci

Jun 9 10:30:50 NAS kernel: scsi host4: ahci

Jun 9 10:30:50 NAS kernel: scsi host5: ahci

Jun 9 10:30:50 NAS kernel: scsi host6: ahci

Jun 9 10:30:50 NAS kernel: ata1: SATA max UDMA/133 abar m2048@0xef34b000 port 0xef34b100 irq 136

Jun 9 10:30:50 NAS kernel: ata2: SATA max UDMA/133 abar m2048@0xef34b000 port 0xef34b180 irq 136

Jun 9 10:30:50 NAS kernel: ata3: SATA max UDMA/133 abar m2048@0xef34b000 port 0xef34b200 irq 136

Jun 9 10:30:50 NAS kernel: ata4: SATA max UDMA/133 abar m2048@0xef34b000 port 0xef34b280 irq 136

Jun 9 10:30:50 NAS kernel: ata5: SATA max UDMA/133 abar m2048@0xef34b000 port 0xef34b300 irq 136

Jun 9 10:30:50 NAS kernel: ata6: SATA max UDMA/133 abar m2048@0xef34b000 port 0xef34b380 irq 136

Jun 9 10:30:50 NAS kernel: i801_smbus 0000:00:1f.4: enabling device (0001 -> 0003)

Jun 9 10:30:50 NAS kernel: i801_smbus 0000:00:1f.4: SPD Write Disable is set

Jun 9 10:30:50 NAS kernel: i801_smbus 0000:00:1f.4: SMBus using PCI interrupt

Jun 9 10:30:50 NAS kernel: mpt3sas version 42.100.00.00 loaded

Jun 9 10:30:50 NAS kernel: mpt3sas 0000:02:00.0: can't disable ASPM; OS doesn't have ASPM control

Jun 9 10:30:50 NAS kernel: i2c i2c-0: 4/4 memory slots populated (from DMI)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: 64 BIT PCI BUS DMA ADDRESSING SUPPORTED, total mem (24513432 kB)

Jun 9 10:30:50 NAS kernel: e1000e: Intel(R) PRO/1000 Network Driver

Jun 9 10:30:50 NAS kernel: i2c i2c-0: Successfully instantiated SPD at 0x50

Jun 9 10:30:50 NAS kernel: e1000e: Copyright(c) 1999 - 2015 Intel Corporation.

Jun 9 10:30:50 NAS kernel: i2c i2c-0: Successfully instantiated SPD at 0x51

Jun 9 10:30:50 NAS kernel: e1000e 0000:00:1f.6: Interrupt Throttling Rate (ints/sec) set to dynamic conservative mode

Jun 9 10:30:50 NAS kernel: i2c i2c-0: Successfully instantiated SPD at 0x52

Jun 9 10:30:50 NAS kernel: i2c i2c-0: Successfully instantiated SPD at 0x53

Jun 9 10:30:50 NAS kernel: RAPL PMU: API unit is 2^-32 Joules, 4 fixed counters, 655360 ms ovfl timer

Jun 9 10:30:50 NAS kernel: RAPL PMU: hw unit of domain pp0-core 2^-14 Joules

Jun 9 10:30:50 NAS kernel: RAPL PMU: hw unit of domain package 2^-14 Joules

Jun 9 10:30:50 NAS kernel: RAPL PMU: hw unit of domain dram 2^-14 Joules

Jun 9 10:30:50 NAS kernel: RAPL PMU: hw unit of domain pp1-gpu 2^-14 Joules

Jun 9 10:30:50 NAS kernel: cryptd: max_cpu_qlen set to 1000

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: CurrentHostPageSize is 0: Setting default host page size to 4k

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: MSI-X vectors supported: 16

Jun 9 10:30:50 NAS kernel: no of cores: 4, max_msix_vectors: -1

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: 0 4 4

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: High IOPs queues : disabled

Jun 9 10:30:50 NAS kernel: mpt2sas0-msix0: PCI-MSI-X enabled: IRQ 138

Jun 9 10:30:50 NAS kernel: mpt2sas0-msix1: PCI-MSI-X enabled: IRQ 139

Jun 9 10:30:50 NAS kernel: mpt2sas0-msix2: PCI-MSI-X enabled: IRQ 140

Jun 9 10:30:50 NAS kernel: mpt2sas0-msix3: PCI-MSI-X enabled: IRQ 141

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: iomem(0x00000000ef140000), mapped(0x00000000e618d7ed), size(65536)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: ioport(0x000000000000e000), size(256)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: CurrentHostPageSize is 0: Setting default host page size to 4k

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: sending message unit reset !!

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: message unit reset: SUCCESS

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: scatter gather: sge_in_main_msg(1), sge_per_chain(9), sge_per_io(128), chains_per_io(15)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: request pool(0x000000003d11bf0a) - dma(0x103e00000): depth(10261), frame_size(128), pool_size(1282 kB)

Jun 9 10:30:50 NAS kernel: e1000e 0000:00:1f.6 0000:00:1f.6 (uninitialized): registered PHC clock

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: sense pool(0x0000000095682a3a) - dma(0x105500000): depth(10000), element_size(96), pool_size (937 kB)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: sense pool(0x0000000095682a3a)- dma(0x105500000): depth(10000),element_size(96), pool_size(0 kB)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: reply pool(0x00000000e309a68e) - dma(0x104000000): depth(10325), frame_size(128), pool_size(1290 kB)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: config page(0x0000000075673224) - dma(0x1054ef000): size(512)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: Allocated physical memory: size(22945 kB)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: Current Controller Queue Depth(9997),Max Controller Queue Depth(10240)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: Scatter Gather Elements per IO(128)

Jun 9 10:30:50 NAS kernel: e1000e 0000:00:1f.6 eth0: (PCI Express:2.5GT/s:Width x1) 40:8d:5c:1c:6f:0d

Jun 9 10:30:50 NAS kernel: e1000e 0000:00:1f.6 eth0: Intel(R) PRO/1000 Network Connection

Jun 9 10:30:50 NAS kernel: e1000e 0000:00:1f.6 eth0: MAC: 12, PHY: 12, PBA No: FFFFFF-0FF

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: LSISAS2308: FWVersion(15.00.00.00), ChipRevision(0x05), BiosVersion(07.29.00.00)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: Protocol=(Initiator,Target), Capabilities=(TLR,EEDP,Snapshot Buffer,Diag Trace Buffer,Task Set Full,NCQ)

Jun 9 10:30:50 NAS kernel: scsi host7: Fusion MPT SAS Host

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: sending port enable !!

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: hba_port entry: 00000000903bed4b, port: 255 is added to hba_port list

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: host_add: handle(0x0001), sas_addr(0x500605b008c0c300), phys(8)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0x9) sas_address(0x4433221100000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0xa) sas_address(0x4433221101000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0xd) sas_address(0x4433221102000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0xb) sas_address(0x4433221103000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0xc) sas_address(0x4433221104000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0xe) sas_address(0x4433221105000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0x10) sas_address(0x4433221106000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: handle(0xf) sas_address(0x4433221107000000) port_type(0x1)

Jun 9 10:30:50 NAS kernel: ata2: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

Jun 9 10:30:50 NAS kernel: ata3: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

Jun 9 10:30:50 NAS kernel: ata5: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

Jun 9 10:30:50 NAS kernel: ata4: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

Jun 9 10:30:50 NAS kernel: ata1: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

Jun 9 10:30:50 NAS kernel: ata6: SATA link up 6.0 Gbps (SStatus 133 SControl 300)

Jun 9 10:30:50 NAS kernel: ata1.00: ATA-11: SATA SSD, SBFM11.2, max UDMA/133

Jun 9 10:30:50 NAS kernel: mpt2sas_cm0: port enable: SUCCESS

Jun 9 10:30:50 NAS kernel: ata3.00: ATA-9: WDC WD80EMAZ-00WJTA0, 83.H0A83, max UDMA/133

Jun 9 10:30:50 NAS kernel: ata4.00: ATA-9: WDC WD80EMAZ-00WJTA0, 83.H0A83, max UDMA/133

Jun 9 10:30:50 NAS kernel: ata1.00: 468862128 sectors, multi 16: LBA48 NCQ (depth 32), AA

Jun 9 10:30:50 NAS kernel: ata6.00: ATA-9: WDC WD80EMAZ-00WJTA0, 83.H0A83, max UDMA/133

Jun 9 10:30:50 NAS kernel: scsi 7:0:0:0: Direct-Access ATA WDC WD80EDAZ-11T 0A81 PQ: 0 ANSI: 6

Jun 9 10:30:50 NAS kernel: ata1.00: configured for UDMA/133

Jun 9 10:30:50 NAS kernel: scsi 7:0:0:0: SATA: handle(0x000b), sas_addr(0x4433221103000000), phy(3), device_name(0x5000cca0bed25ea1)

Jun 9 10:30:50 NAS kernel: scsi 1:0:0:0: Direct-Access ATA SATA SSD 11.2 PQ: 0 ANSI: 5

Jun 9 10:30:50 NAS kernel: scsi 7:0:0:0: enclosure logical id (0x500605b008c0c300), slot(0)

Jun 9 10:30:50 NAS kernel: scsi 7:0:0:0: atapi(n), ncq(y), asyn_notify(n), smart(y), fua(y), sw_preserve(y)

Jun 9 10:30:50 NAS kernel: scsi 7:0:0:0: qdepth(32), tagged(1), scsi_level(7), cmd_que(1)

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: Attached scsi generic sg1 type 0

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: [sdb] 468862128 512-byte logical blocks: (240 GB/224 GiB)

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: [sdb] Write Protect is off

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: [sdb] Mode Sense: 00 3a 00 00

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: [sdb] Write cache: enabled, read cache: enabled, doesn't support DPO or FUA

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: [sdb] Preferred minimum I/O size 512 bytes

Jun 9 10:30:50 NAS kernel: sdb: sdb1

Jun 9 10:30:50 NAS kernel: sd 1:0:0:0: [sdb] Attached SCSI disk

Jun 9 10:30:50 NAS kernel: sd 7:0:0:0: Attached scsi generic sg2 type 0

Jun 9 10:30:50 NAS kernel: end_device-7:0: add: handle(0x000b), sas_addr(0x4433221103000000)

Jun 9 10:30:50 NAS kernel: sd 7:0:0:0: [sdc] 15628053168 512-byte logical blocks: (8.00 TB/7.28 TiB)

Jun 9 10:30:50 NAS kernel: sd 7:0:0:0: [sdc] 4096-byte physical blocks

Jun 9 10:30:50 NAS kernel: scsi 7:0:1:0: Direct-Access ATA WDC WD80EMAZ-00W 0A83 PQ: 0 ANSI: 6

Jun 9 10:30:50 NAS kernel: scsi 7:0:1:0: SATA: handle(0x0009), sas_addr(0x4433221100000000), phy(0), device_name(0x5000cca266ec2525)

Jun 9 10:30:50 NAS kernel: scsi 7:0:1:0: enclosure logical id (0x500605b008c0c300), slot(3)

Jun 9 10:30:50 NAS kernel: scsi 7:0:1:0: atapi(n), ncq(y), asyn_notify(n), smart(y), fua(y), sw_preserve(y)

Jun 9 10:30:50 NAS kernel: scsi 7:0:1:0: qdepth(32), tagged(1), scsi_level(7), cmd_que(1)

Jun 9 10:30:50 NAS kernel: ata3.00: 15628053168 sectors, multi 16: LBA48 NCQ (depth 32), AA

Jun 9 10:30:50 NAS kernel: ata3.00: Features: NCQ-sndrcv NCQ-prio

Jun 9 10:30:50 NAS kernel: ata4.00: 15628053168 sectors, multi 16: LBA48 NCQ (depth 32), AA

Jun 9 10:30:50 NAS kernel: ata4.00: Features: NCQ-sndrcv NCQ-prio

Jun 9 10:30:50 NAS kernel: ata6.00: 15628053168 sectors, multi 16: LBA48 NCQ (depth 32), AA

Jun 9 10:30:50 NAS kernel: ata6.00: Features: NCQ-sndrcv NCQ-prio

Jun 9 10:30:50 NAS kernel: sd 7:0:1:0: Attached scsi generic sg3 type 0

Jun 9 10:30:50 NAS kernel: sd 7:0:0:0: [sdc] Write Protect is off

Jun 9 10:30:50 NAS kernel: end_device-7:1: add: handle(0x0009), sas_addr(0x4433221100000000)

Jun 9 10:30:50 NAS kernel: sd 7:0:1:0: [sdd] 15628053168 512-byte logical blocks: (8.00 TB/7.28 TiB)

Jun 9 10:30:50 NAS kernel: sd 7:0:1:0: [sdd] 4096-byte physical blocks

Jun 9 10:30:50 NAS kernel: sd 7:0:0:0: [sdc] Mode Sense: 7f 00 10 08

Jun 9 10:30:50 NAS kernel: scsi 7:0:2:0: Direct-Access ATA WDC WD80EFBX-68A 0A85 PQ: 0 ANSI: 6

Jun 9 10:30:50 NAS kernel: sd 7:0:0:0: [sdc] Write cache: enabled, read cache: enabled, supports DPO and FUA

Jun 9 10:30:50 NAS kernel: scsi 7:0:2:0: SATA: handle(0x000a), sas_addr(0x4433221101000000), phy(1), device_name(0x5000cca0c3c48d88)

Jun 9 10:30:50 NAS kernel: scsi 7:0:2:0: enclosure logical id (0x500605b008c0c300), slot(2)

Jun 9 10:30:50 NAS kernel: scsi 7:0:2:0: atapi(n), ncq(y), asyn_notify(n), smart(y), fua(y), sw_preserve(y)

Jun 9 10:30:50 NAS kernel: scsi 7:0:2:0: qdepth(32), tagged(1), scsi_level(7), cmd_que(1)

Jun 9 10:30:50 NAS kernel: sd 7:0:1:0: [sdd] Write Protect is off

Jun 9 10:30:50 NAS kernel: sd 7:0:1:0: [sdd] Mode Sense: 7f 00 10 08

Jun 9 10:30:50 NAS kernel: sd 7:0:1:0: [sdd] Write cache: enabled, read cache: enabled, supports DPO and FUA

Jun 9 10:30:50 NAS kernel: sd 7:0:2:0: Attached scsi generic sg4 type 0

Jun 9 10:30:50 NAS kernel: end_device-7:2: add: handle(0x000a), sas_addr(0x4433221101000000)

Jun 9 10:30:50 NAS kernel: sd 7:0:2:0: [sde] 15628053168 512-byte logical blocks: (8.00 TB/7.28 TiB)

Jun 9 10:30:50 NAS kernel: sd 7:0:2:0: [sde] 4096-byte physical blocks

Jun 9 10:30:50 NAS kernel: scsi 7:0:3:0: Direct-Access ATA WDC WD80EFBX-68A 0A85 PQ: 0 ANSI: 6

Jun 9 10:30:50 NAS kernel: scsi 7:0:3:0: SATA: handle(0x000d), sas_addr(0x4433221102000000), phy(2), device_name(0x5000cca0c3c4ab5e)

Jun 9 10:30:50 NAS kernel: scsi 7:0:3:0: enclosure logical id (0x500605b008c0c300), slot(1)

Jun 9 10:30:50 NAS kernel: scsi 7:0:3:0: atapi(n), ncq(y), asyn_notify(n), smart(y), fua(y), sw_preserve(y)

Jun 9 10:30:50 NAS kernel: scsi 7:0:3:0: qdepth(32), tagged(1), scsi_level(7), cmd_que(1)

Jun 9 10:30:50 NAS kernel: ata3.00: configured for UDMA/133

Jun 9 10:30:50 NAS kernel: sd 7:0:2:0: [sde] Write Protect is off