-

Posts

78 -

Joined

-

Last visited

Content Type

Profiles

Forums

Downloads

Store

Gallery

Bug Reports

Documentation

Landing

Posts posted by timekiller

-

-

That worked, thank you!

-

Bump for visibility

-

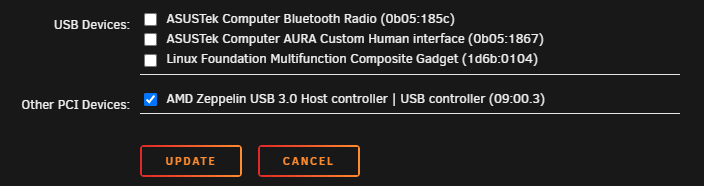

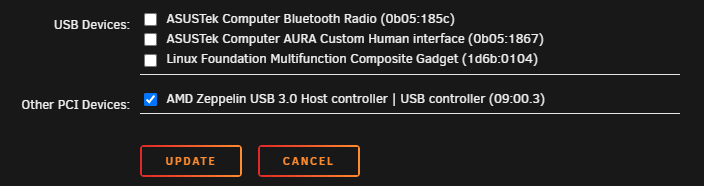

I have a Home Assistant VM that I am trying to pass a USB controller to. I have to pass the whole controller because I have a Coral AI accelerator which changes device ids when it's initiated, so I can't pass just the device.

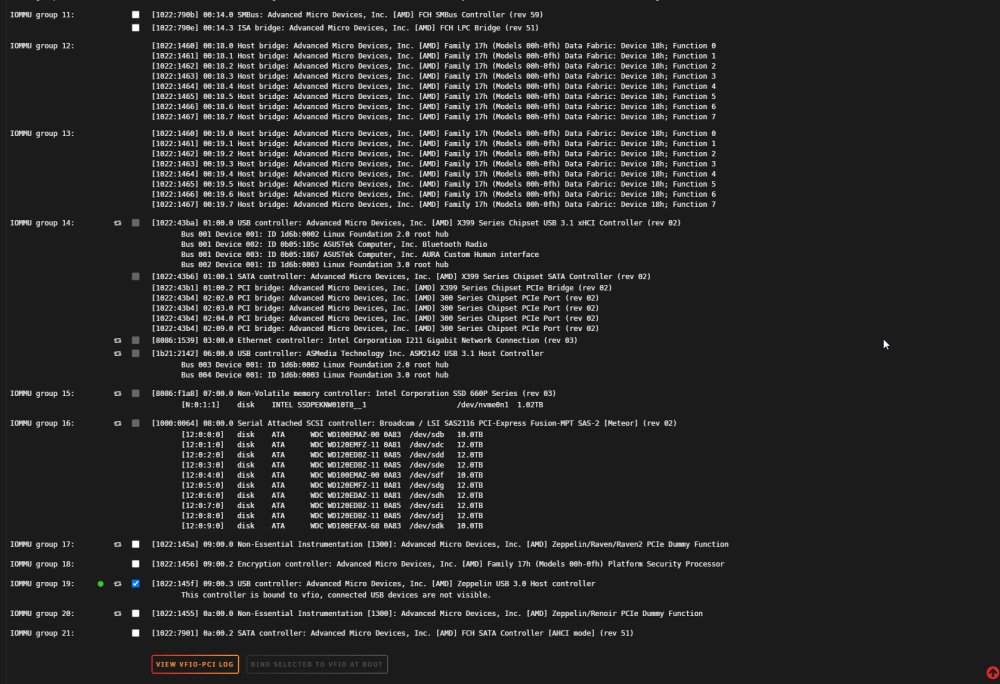

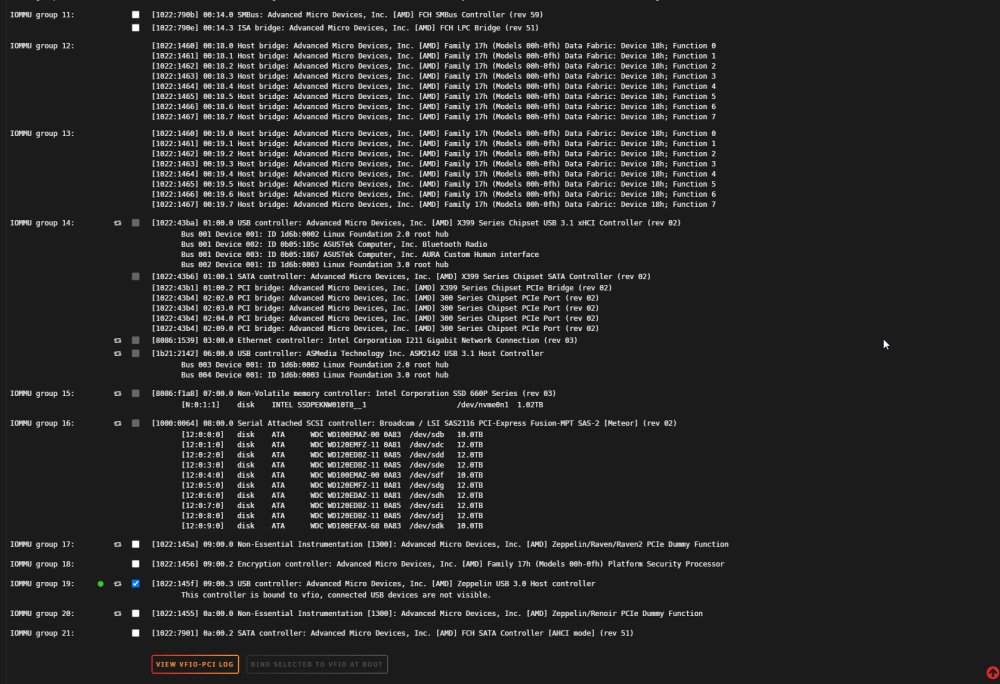

I have the controller bound in System Tools:

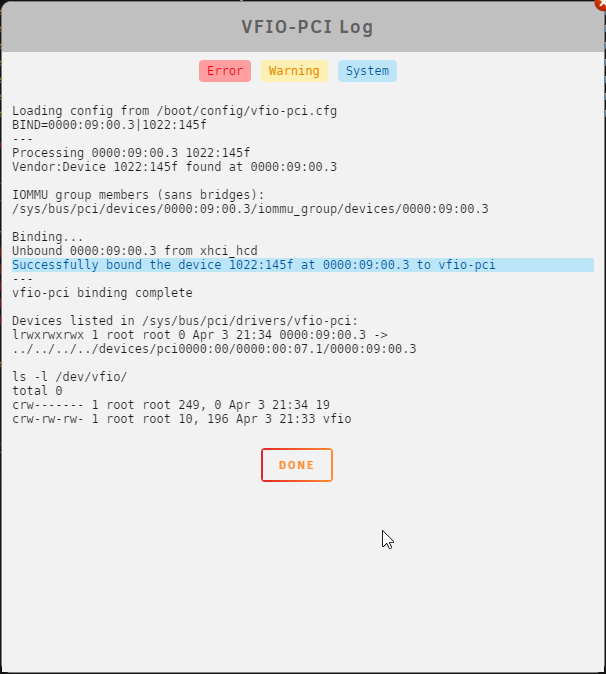

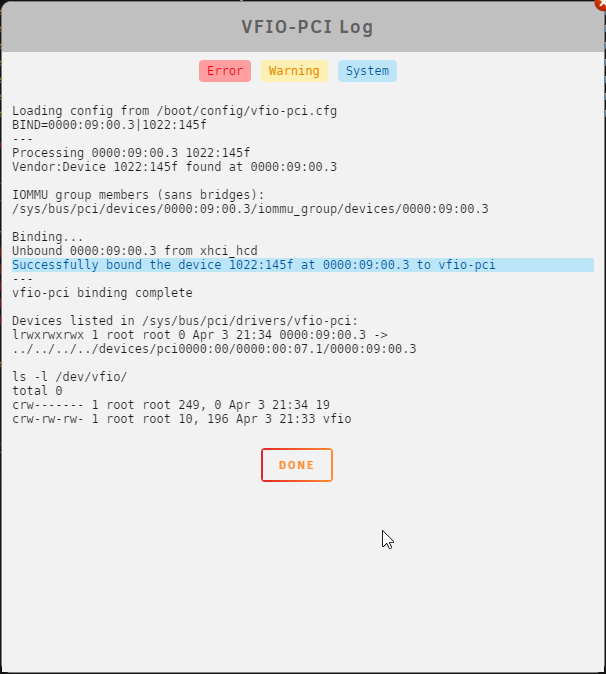

When I view the VFIO-PCI Log it says it was successfully bound:

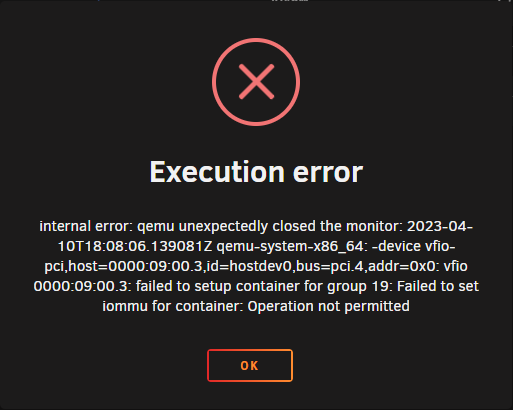

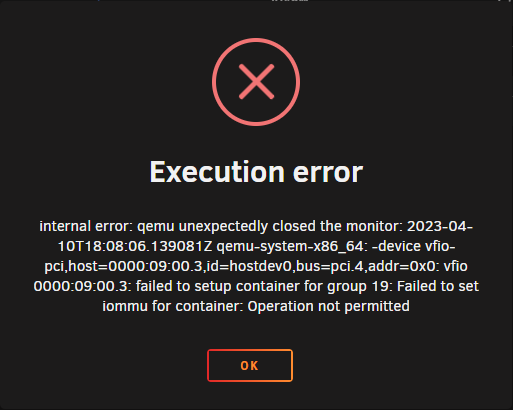

When I try to attach it to the VM and start I get the error

Execution error

internal error: qemu unexpectedly closed the monitor: 2023-04-10T18:08:06.139081Z qemu-system-x86_64: -device vfio-pci,host=0000:09:00.3,id=hostdev0,bus=pci.4,addr=0x0: vfio 0000:09:00.3: failed to setup container for group 19: Failed to set iommu for container: Operation not permitted

Diagnostics file attached. Thanks in advance.

-

Posting an update in case it's helpful to others. I believe I have this solved. I realized that the drive issues I was having were all on drives in this drive cage I bought to try and squeeze an extra drive into the server. I removed the cage, rearranging drives to get everything mounted securely and started yet another parity rebuild. This one took about 37 hours and completed successfully last night. The array has been online and stable since then. Hopefully That was the source of all my issues and I can move on.

Thank you everyone who gave advice, even if I didn't necessarily take all of it.

-

1

1

-

-

3 hours ago, Michael_P said:

Splitting power to too many drives can cause power to sag, and cause the issues you describe when all drives are under load. The wattage of the PSU is plenty sufficient, but power handing per connector may not be (4 drives per connector is pushing it, for example). Eliminate the splitters and see if it solves your problem.

of the 24 drives, there is a grand total of 2 splitters (not daisy chained, and not on the same line). Again, I don't believe this is causing the issue.

-

2 hours ago, Michael_P said:

Any splitters?

2 hours ago, Michael_P said:Any splitters?

a couple, just due to the runs I have. Not as many as you might be thinking. It's a 1,000 watt PSU so there are plenty of ports for peripherals. I really on't think the PSU or the way I have the power wired is the issue.

-

2 hours ago, trurl said:

This thread where the emulated drive was unmountable?

Yup. My mistake. The data drive was marked unmountable, not disabled. The 2 parity drives were still online and the data on the unmountable drive was missing fromt he array. That was 3 weeks ago and I've been dealing with so many issues since then I forgot the specific error for the drive. This doesn't answer my current problem though. New drives, new cables, new controller cards, and I still can't get a successful parity rebuild.

-

18 minutes ago, Michael_P said:

Yes 😄 but are you using splitters, how many drives are connected per run back to the PS, etc

Evenly split. PSU is more than capable of handling the load.

-

5 minutes ago, trurl said:

If you have parity, then a disabled disk is emulated from the parity calculation be reading parity plus all other disk. If the emulated disk was unmountable then its contents wouldn't be available but filesystem repair probably would have made it mountable again.

I understand how it's supposed to work. Both parity drives were online. The disabled drive was no emulated. Don't know what else to tell you on that. Doesn't really matter since this was like 3 weeks ago and not relevant to my current issues.

-

3 hours ago, Michael_P said:

How are you powering the drives?

...with the power supply?

-

2 hours ago, trurl said:

Unless the drive had an unmountable filesystem, its data is still online due to parity emulation.

No. The drive was "disabled" and all of it's contents were removed from the array. Not emulated.

-

New. EVGA SuperNOVA 1000 G+, 80 Plus Gold. Bought it in May

-

I have a very large array - 24 drives, all 10,12, or 14 TB. A couple of weeks again I started having issues with the parity drives getting disabled. I was told to ditch my Marvel based controllers, which I did. I bought two LSI 9201-16i cards and installed them. I still had issues. I was told my firmware is very old, so I updated to the latest. I thought maybe the drives were faulty, so I bought new 14TB drives for parity. I've swapped my cables I don't even know how many times at this point. I moved the server out of my network closet to a cooler room.

Every time I made a change I had to start the parity build over. With this many drives it takes several days to complete. Most of the time, it doesn't complete. It did complete once, and a few hours after completion a data drive was disabled, taking all it's data offline.

Yesterday I moved the server and updated the controller firmware. Now I have read errors on 3 drives and unraid has disabled one of the parity drives.

I'm pulling my hair out with frustration. I've swapped out all the hardware short of building a new server from scratch. I need to nail down the actual issue and get my server back.

Please help!

diag attached

-

Firmware is updated, I also moved the server to another room because it was getting pretty hot where it was. Parity is rebuilding AGAIN 🤞

-

Pulling my hair out with this server for weeks now. Was having a ton of issues that seemed to be caused by my Marvel based sata controllers. I took this community's advice and replaced both 16 port cards with LSI 9201-16i cards. I wound up having to rebuild parity, which finally finished yesterday with no errors and I thought I was in the clear.

This morning I woke up to errors on both parity drives and both parity drives disabled. Now I assume I need to do a new config to trigger a parity rebuild AGAIN. But I need to fix the underlying problem. I have swapped sata cables, and both of these parity drives are brand new because I was having the same issue with another set of parity drives, so I pulled them and both 2 new 14TB drives. I have also tried a number of sata cables.

I'm extremely frustrated at this point and just want my data reliably protected. At this point I have swapped out the controllers, the sata cables, AND the parity drives. What is going on here?

diag attached.

-

9 minutes ago, JorgeB said:

Nov 19 09:28:27 Storage kernel: Call Trace: Nov 19 09:28:27 Storage kernel: fh_getattr+0x45/0x5f [nfsd] Nov 19 09:28:27 Storage kernel: fill_post_wcc+0x2c/0x94 [nfsd] Nov 19 09:28:27 Storage kernel: fh_unlock+0x12/0x33 [nfsd] Nov 19 09:28:27 Storage kernel: nfsd3_proc_rmdir+0x4a/0x4f [nfsd] Nov 19 09:28:27 Storage kernel: nfsd_dispatch+0xb0/0x11e [nfsd] Nov 19 09:28:27 Storage kernel: svc_process+0x3dd/0x546 [sunrpc] Nov 19 09:28:27 Storage kernel: ? nfsd_svc+0x27e/0x27e [nfsd] Nov 19 09:28:27 Storage kernel: nfsd+0xef/0x146 [nfsd] Nov 19 09:28:27 Storage kernel: ? nfsd_destroy+0x57/0x57 [nfsd] Nov 19 09:28:27 Storage kernel: kthread+0xe5/0xea Nov 19 09:28:27 Storage kernel: ? __kthread_bind_mask+0x57/0x57 Nov 19 09:28:27 Storage kernel: ret_from_fork+0x22/0x30 Nov 19 09:28:27 Storage kernel: ---[ end trace 83e36f2bb8ca0fa2 ]---nfsd is the NFS daemon.

yup, saw the call trace and my eyes skimmed right past the nfsd stuff - thanks!

-

Just now, JorgeB said:

Not really, just that NFS crashed bringing down the user shares.

Thanks, at least it's not hardware related this time! So I can better diagnose in the future, where did you find this? I'm looking through the diagnostics file and don't see it.

-

2 minutes ago, JorgeB said:

AFAIK only a reboot will fix it, also it was caused by NFS, so disable if you don't need it or can change everything to SMB.

My desktop is Linux, so definitely need NFS. Never seen NFS cause the array to go offline before, any idea how this happened?

-

So I finally took everyone here's advice and replaced my Marvell based controller cards (IO Crest 16 Port) with 2 LSI 9201-16i cards. In addition I needed to shuffle some disks around, so when I installed the new cards I also wound having to do a new config and start a parity rebuild. It's been running for about 33 hours and everything was going great until about 30 minutes ago when I got an error deleting a file. Investigation shows that I lost /mnt/user - "Transport endpoint is not connected".

Interestingly, /mnt/user0 is still connected and the array is accessible from there. Of course all of my docker container and shares use /mnt/user, so now the entire server is effectively offline. I stopped all my docker containers to hopefully avoid further issues there.

I assume a reboot will fix this, but 1) I'd like to know what happened here, and 2) I don't want to have to restart the parity rebuild. There is currently an estimated 9 hours left and it appears to be running fine.

Do I have any options beyond reboot and start over, or go 9 hours or more without my server?

Diagnostics attached

-

59 minutes ago, trurl said:

Thank you

-

48 minutes ago, trurl said:

Probably these are the root cause of all your trouble, including why the emulated drive repair didn't go well:

04:00.0 SATA controller [0106]: Marvell Technology Group Ltd. 88SE9215 PCIe 2.0 x1 4-port SATA 6 Gb/s Controller [1b4b:9215] 05:00.0 SATA controller [0106]: Marvell Technology Group Ltd. 88SE9215 PCIe 2.0 x1 4-port SATA 6 Gb/s Controller [1b4b:9215] 06:00.0 SATA controller [0106]: Marvell Technology Group Ltd. 88SE9215 PCIe 2.0 x1 4-port SATA 6 Gb/s Controller [1b4b:9215] 07:00.0 SATA controller [0106]: Marvell Technology Group Ltd. 88SE9215 PCIe 2.0 x1 4-port SATA 6 Gb/s Controller [1b4b:9215]And likely to give problems in the future.

I'm open to recommendations for a replacement. I've asked for suggestions more than once, but haven't received a straight answer. I have 21 drives, so need 16 port cards.

-

Another update:

I restarted the array in maintenance mode so I could repair the now missing, emulated drive. I ran xfs_repair on /dev/mapper/md1 and it did it's thing. What I did not expect is that it xfs_repair moved every single file/directory on the drive to lost+found. This is especially confusing because running the same command on the real drive did not do this.

Since the original drive is fine, I'm just going to do a new config and let parity get rebuilt.

I realize I did not handle this the "right" way from the beginning, but I can't help but wonder if I had run the repair against the real drive in the first place if I would now be forced to manually go through 10TB worth of lost+found files and manually move/rename them.

-

Update:

I powered down the server and removed the unmountable drive. After rebooting, unraid didn't start the array and I had to tell it to with a missing drive, as expected. However, the missing data is still missing. I pulled the unmountable drive and attached it to my desktop (Linux). I opened the drive with cryptsetup and had to run xfs_repair befor eI could mount it, but the missing data is still there, so that's good. But now unraid is in a state where it knows a drive is missing, but unraid is not emulating the missing data. I can rsync the entire drive back, but that will take a long while. I don't think there is any way around this though since unraid believes the missing drive was just waiting to be formatted.

Unraid shows the missing drive, but is still labelling it as "Unmountable" and there is no directory for it under /mnt/.

I feel like the only option now is to reinstall the drive use the Tools->New Config option to construct a new array with all the drives in place.

Anyone see another option here?

-

Hello, last night I went to watch a movie on my server and plex was showing I had 6 movies (I have have way more than six). After looking into it I noticed the entire array was offline. I rebooted the server (Yes I should have gotten a diagnostics snapshot first, but I didn't). When the server came up, the array was back online, and a plex rescan repopulated all my movies.

This morning I woke up to many missing files and the unraid UI showing a drive as "Unmountable: not mounted", and Unraid wants me to format the disk. I logged into the server and dmesg shows a bunch of

[54431.657222] ata5: SATA link up 1.5 Gbps (SStatus 113 SControl 310) [54433.274993] ata5.00: configured for UDMA/33 [54433.275011] ata5: EH complete [54433.465251] ata5.00: exception Emask 0x10 SAct 0x1fc00 SErr 0x190002 action 0xe frozen [54433.465253] ata5.00: irq_stat 0x80400000, PHY RDY changed [54433.465254] ata5: SError: { RecovComm PHYRdyChg 10B8B Dispar } [54433.465256] ata5.00: failed command: READ FPDMA QUEUED [54433.465258] ata5.00: cmd 60/40:50:90:2b:d9/05:00:09:00:00/40 tag 10 ncq dma 688128 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465258] ata5.00: status: { DRDY } [54433.465259] ata5.00: failed command: READ FPDMA QUEUED [54433.465261] ata5.00: cmd 60/10:58:d0:30:d9/02:00:09:00:00/40 tag 11 ncq dma 270336 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465262] ata5.00: status: { DRDY } [54433.465262] ata5.00: failed command: READ FPDMA QUEUED [54433.465264] ata5.00: cmd 60/40:60:e0:32:d9/05:00:09:00:00/40 tag 12 ncq dma 688128 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465264] ata5.00: status: { DRDY } [54433.465265] ata5.00: failed command: READ FPDMA QUEUED [54433.465267] ata5.00: cmd 60/b0:68:20:38:d9/03:00:09:00:00/40 tag 13 ncq dma 483328 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465267] ata5.00: status: { DRDY } [54433.465268] ata5.00: failed command: READ FPDMA QUEUED [54433.465270] ata5.00: cmd 60/40:70:d0:3b:d9/05:00:09:00:00/40 tag 14 ncq dma 688128 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465270] ata5.00: status: { DRDY } [54433.465271] ata5.00: failed command: READ FPDMA QUEUED [54433.465273] ata5.00: cmd 60/40:78:10:41:d9/05:00:09:00:00/40 tag 15 ncq dma 688128 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465273] ata5.00: status: { DRDY } [54433.465274] ata5.00: failed command: READ FPDMA QUEUED [54433.465275] ata5.00: cmd 60/40:80:50:46:d9/05:00:09:00:00/40 tag 16 ncq dma 688128 in res 40/00:00:d0:30:d9/00:00:09:00:00/40 Emask 0x10 (ATA bus error) [54433.465276] ata5.00: status: { DRDY } [54433.465278] ata5: hard resetting linkThis time I did take a diagnostic snapshot (attached). I rebooted the server and it came up in the same state - 1 drive is "unmountable" and the data on it is missing. Furthermore, Unraid is running a parity check (which I cancelled).

What I can't figure out is:

1) Why isn't unraid emulating the missing drive?

2) Why did unraid restart the array if a drive is missing?

3) Is the data on the missing "unmountable" drive gone if the parity check started? My fear is that it started rewriting parity to align with the missing drive.

how screwed am I?

Want to reuse a license I bought, but not sure how

in Pre-Sales Support

Posted

I previously bought a license, but stopped using it for a while. Now I have built a new server but don't have the usb drive I used, and can't find a way to re-use my license. Can someone help, or will I be forced to buy a new license?