stridemat

-

Posts

71 -

Posts posted by stridemat

-

-

3 hours ago, Swarles said:

Config>Special>ignore_unrar_dates

Thanks. I found it earlier and on a test all seems to be working.

-

9 hours ago, Swarles said:

With those settings applied to the "media" share, no files in the media share will be moved until the time since they were last modified (mtime) exceeds 7 days. If there are other files in the media share you want to move sooner than 7 days, they won't move either, and there's no way to do this. Those settings will only apply to the "media" share and no other share. Note, if you're using usenet you might need to disable a setting that preserves the original timestamps of a .rar file. Otherwise the 7 days will be unreliable, because extracted files keep the archive's original timestamps rather than the time they were downloaded.

If you store metadata or cover art etc with your media, I'd recommend using the skip file types setting to keep those on the cache for whenever they are loaded, this will stop the disks spinning up when someone is browsing.

Thanks. I am using usenet so will have to see if I can find this setting in SAB.
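For anyone wanting to see which files the 7-day rule would currently hold on the cache, here is a minimal sketch using plain `find`. It is not what the mover itself runs; the function name `older_than_days` and the example path `/mnt/cache/media` are assumptions for illustration.

```shell
#!/bin/bash
# Hypothetical helper: list files whose mtime is more than N whole days old.
# find's "-mtime +N" ignores fractional days, so +7 means at least 8 full days.
older_than_days() {
    local dir="$1" days="$2"
    find "$dir" -type f -mtime "+${days}" -print
}

# Example (path is an assumption): older_than_days /mnt/cache/media 7
```

Files extracted with their original archive timestamps would show up in this list straight away, which is the usenet caveat mentioned above.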

-

Hi, so I’m trying to get it so new media stays on the cache drive (media share) for a period of time, say 7 days in this example, but all other data in other shares moves from cache to array once a night - normal mover behaviour. I’m trying to avoid spinning up disks for recently added media that gets watched a few times.

I have used the following settings. Will this work as desired?

-

3 hours ago, sdub said:

OK, @Greygoose and @stridemat, I updated the CA template to point to a static location for the borgmatic icon. Future pulls should get this, but I'm not sure if it will automatically update for you.

To manually fix, go to advanced view in the Borgmatic docker container config. Change "Icon URL" to

https://raw.githubusercontent.com/Sdub76/unraid_docker_templates/main/images/borgmatic.png

This is a static copy that I have in the CA repo, so it shouldn't change unless GitHub changes its static URLs.

That worked. Thank you.

-

-

-

10 minutes ago, sdub said:

Sorry I missed this issue. I’ll take a look and get back to you… for most issues it’s more effective to ask in the Borgmatic GitHub support page.

Last year they moved the Borgmatic repo from b3vis’s repo to the main “Borgmatic-collective” repo. I updated the template but maybe something else changed.

That specific error at first glance looks like the link to the icon just changed. Is it causing Borgmatic to not update/work for you?

Container is still working great (apart from the icon), but I was hoping it wasn’t the first sign of the container no longer being supported moving forward.

Thanks for your work.

-

Is this container still being supported?

-

On 6/6/2023 at 10:44 AM, bonienl said:

HOW TO SETUP TAILSCALE OR ZEROTIER COMMUNICATION

- Install the Tailscale or Zerotier docker container as usual and start the container

- It is recommended to have this container autostart as the first container in the list

- Go to Settings -> Network Settings -> Interface Extra

- This is a new section which allows the user to define which interfaces are used by the Unraid services. By default all regular interfaces with an IP address are included in the list of listening interfaces

- The tunnels of the built-in WireGuard function of Unraid are automatically added or removed from the list when the Wireguard tunnels are activated or deactivated. The user may exclude these tunnels from the list of listening interfaces

- To use the Tailscale or Zerotier interface, it is required to add the interface name or IP address of the communication to the list of included listening interfaces. It is imperative that the Tailscale or Zerotier container is running before the interface is added to the list.

- A check is done if a valid name or IP address is entered and the new entry is added to the list of current listening interfaces. At this point, services are restarted to make them listen to the new interface as well

- When the new listening interface is active, it is possible to use it. For example it allows Tailscale to enter the GUI on its designated IP address

Included and Excluded listening interfaces need to be reactivated each time the server reboots or the array is restarted.

To automate this process, you can add the following code in the "go" file (place it before starting the emhttpd daemon)

    # reload services after starting docker with 20 seconds grace period to allow starting up containers
    event=/usr/local/emhttp/webGui/event/docker_started
    mkdir -p $event
    cat <<- 'EOF' >$event/reload_services
    #!/bin/bash
    echo '/usr/local/emhttp/webGui/scripts/reload_services' | at -M -t $(date +%Y%m%d%H%M.%S -d '+20 sec') 2>/dev/null
    EOF
    chmod +x $event/reload_services

With this code in place and autostart of containers enabled, it will ensure the listening interfaces are automatically updated after a system reboot or array restart.

Looks like the fix will be in the final build (it seems to be in rc7).

Seems like a support headache though?

-

Looks like I'm waiting until a .1 or .2 release then

-

-

1 hour ago, EDACerton said:

I’m waiting to see what feedback I can get from LimeTech. I figured out a way to make it work, but it’s not particularly pretty and so I’d rather see if I can get a fix from them before I roll out the workaround.

Agreed. Much better than running in docker from a resilience point of view.

-

-

-

On 5/20/2023 at 4:03 PM, EDACerton said:

Implementing functional changes to the platform with stable imminent... great

Is there likely to be a fix in the short term with this or is it a more fundamental change?

-

On 11/18/2022 at 11:56 PM, u.stu said:

Unraid is slackware, so let's use a slackware package.

    # Download libffi from slackware.uk to /boot/extra:
    wget -P /boot/extra/ https://slackware.uk/slackware/slackware64-15.0/slackware64/l/libffi-3.3-x86_64-3.txz
    # Install the package:
    installpkg /boot/extra/libffi-3.3-x86_64-3.txz

Once you have the package placed into /boot/extra it will be re-installed as needed each time you boot.

I don't know if Nerd Tools will remove/replace outdated packages that have been manually downloaded, so leave yourself a reminder to check on this after libffi is added to nerd tools to make sure you're not hanging on to an outdated version.

This fixed it for me!
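A quick way to confirm the library is visible to the dynamic linker afterwards is to search the `ldconfig -p` listing. A small sketch only; the helper name `has_lib` is made up here, and it simply looks for the library name in whatever listing it is given on stdin.

```shell
#!/bin/bash
# Hypothetical helper: succeed if the named library appears in an
# "ldconfig -p" style listing read from stdin.
has_lib() {
    grep -qF -- "$1"
}

# Example: ldconfig -p | has_lib libffi.so.7 && echo "libffi.so.7 found"
```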

-

Anyone know why when trying to use Borg installed via the plugin (along with other dependencies) I get the following error:

    root@Tower:~# borg
    Traceback (most recent call last):
      File "/usr/lib64/python3.9/site-packages/borg/archiver.py", line 41, in <module>
        from .archive import Archive, ArchiveChecker, ArchiveRecreater, Statistics, is_special
      File "/usr/lib64/python3.9/site-packages/borg/archive.py", line 20, in <module>
        from . import xattr
      File "/usr/lib64/python3.9/site-packages/borg/xattr.py", line 9, in <module>
        from ctypes import CDLL, create_string_buffer, c_ssize_t, c_size_t, c_char_p, c_int, c_uint32, get_errno
      File "/usr/lib64/python3.9/ctypes/__init__.py", line 8, in <module>
        from _ctypes import Union, Structure, Array
    ImportError: libffi.so.7: cannot open shared object file: No such file or directory

-

On 3/23/2022 at 2:29 PM, sdub said:

The package maintainer declined to add ssh-server to the borgmatic docker container... see the discussion in the link. You'll either need to install this simple borgserver docker or you'll need the borg binary installed on the machine you're trying to remotely backup to. If the target is also running Unraid this is easy enough with nerdpack.

On 10/19/2022 at 11:35 AM, Roi said:

Ah wow thank you. That did not come to my attention, yet. Great!

So I can use borgbackup in the meantime like before.

As described it would be better to have borgbackup inside a Docker container for security reasons, so I would like to stick to my suggestion adding an SSH daemon and borgbackup to this container.

Is the above post from sdub the accepted way of making Unraid the target for backups without altering the base Unraid install?

-

On 10/25/2022 at 4:35 AM, stridemat said:

Hi all,

So I have managed to get a two config set up going how I want manually (yay) but cannot work out how to auto start each one on a different schedule using cron.

Ideally they would run on different schedules due to the nature of what each one is backing up and how often it changes.

Please can someone point me in the right direction?

So I have resolved this. Posting here for others in the future. Crontab text as follows to call specific config files. Obviously change the paths / file names as required:

    0 2 * * 1 borgmatic -c /etc/borgmatic.d/UnraidBackup.yaml prune create -v 1 --stats 2>&1
    0 4 * * * borgmatic -c /etc/borgmatic.d/DocsBackup.yaml prune create -v 1 --stats 2>&1
    0 6 15 * * borgmatic check -v 1 2>&1

-

Hi all,

So I have managed to get a two config set up going how I want manually (yay) but cannot work out how to auto start each one on a different schedule using cron.

Ideally they would run on different schedules due to the nature of what each one is backing up and how often it changes.

Please can someone point me in the right direction?
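For reference, a standard crontab line starts with five schedule fields (minute, hour, day of month, month, day of week) followed by the command, so each config can get its own line and its own schedule. A minimal sketch that builds such lines; the helper name `cron_line` is made up, and the config paths and borgmatic arguments are simply the ones from the crontab in the follow-up post.

```shell
#!/bin/bash
# Hypothetical helper: print one crontab line that runs borgmatic
# against a single config file on the given schedule.
cron_line() {
    local schedule="$1" config="$2"
    printf '%s borgmatic -c %s prune create -v 1 --stats 2>&1\n' "$schedule" "$config"
}

# 02:00 every Monday for one config, 04:00 every day for the other.
cron_line '0 2 * * 1' /etc/borgmatic.d/UnraidBackup.yaml
cron_line '0 4 * * *' /etc/borgmatic.d/DocsBackup.yaml
```

By the same reading, `0 6 15 * *` means 06:00 on the 15th of each month.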

-

With the recent demise of Nerdpack I am looking to use this container for my Borg usage, though I am having trouble configuring it.

A few questions which I hope someone could answer:

- Is it possible to 'reuse' existing repositories from Borg installed via Nerdpack? Or am I better just starting again?

-

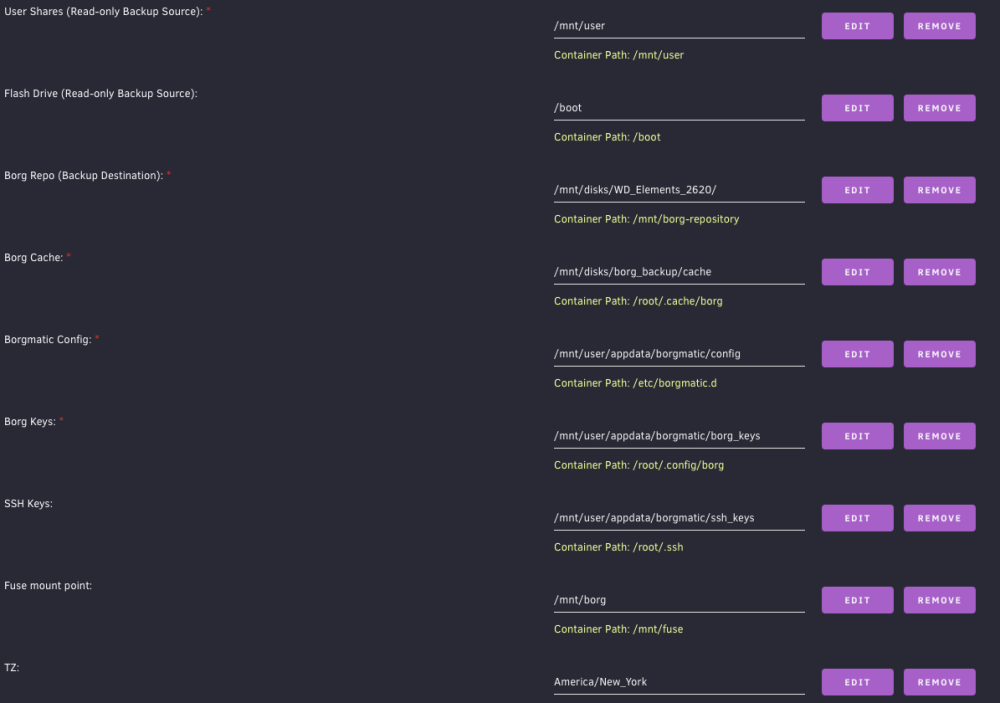

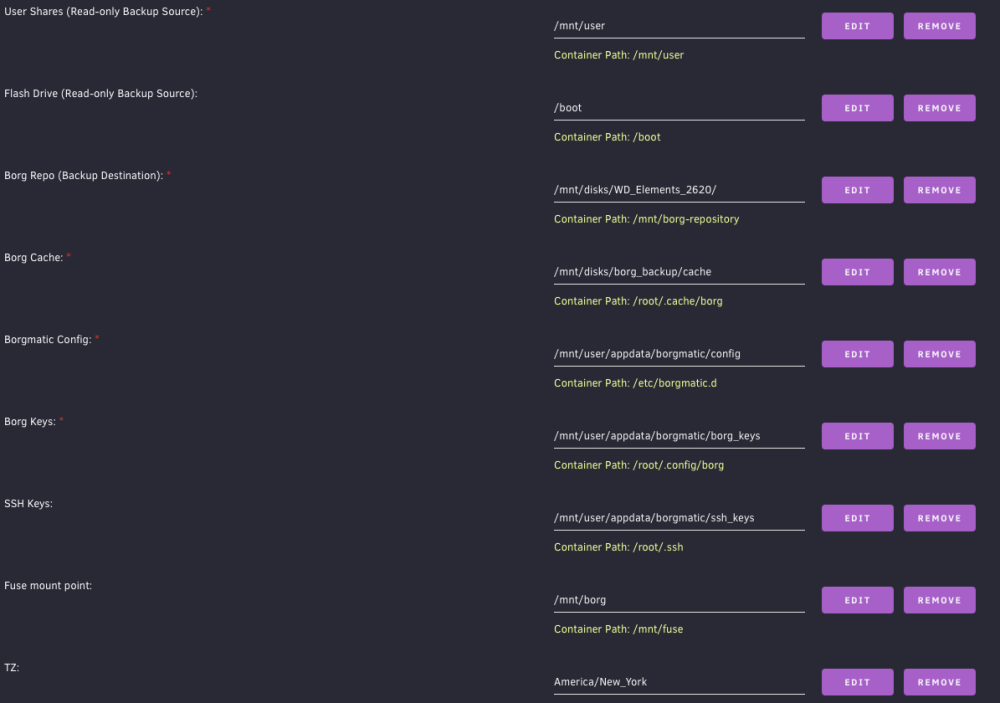

My existing repo is located at /mnt/disks/WD_Elements_2620/UnraidBackup (I also have another repo at the following location: /mnt/disks/WD_Elements_2620/DocsBackup. I have read this thread and can see the links as to how to have multiple repos etc.) Config page from the Docker tab below. The Borg Repo path takes you to the location of both repos, meaning that I need to specify the specific repo each time.

-

Config .yaml file text. This looks ok to me, albeit appreciating that I am backing up my CA Backup folder. Once I get this working I plan to use Borg for everything rather than CA Backup:

    location:
        - /mnt/user/backups
        repositories:
            - /mnt/borg-repository/UnraidBackup
            - ssh://[email protected]/mnt/mydisk/UnraidBackup
        one_file_system: true
        files_cache: mtime,size
        patterns:
            - '- [Tt]rash'
            - '- [Cc]ache'
        exclude_if_present:
            - .nobackup
            - .NOBACKUP
    storage:
        encryption_passphrase: "NOTMYPASSWORD"
        compression: lz4
        ssh_command: ssh -i /root/.ssh/id_rsa
        archive_name_format: 'backup-{now}'
    retention:
        keep_weekly: 4
    consistency:
        checks:
            - repository
            - archives
        prefix: 'backup-'
    hooks:
        before_backup:
            - echo "Starting Unraid backup."
        after_backup:
            - echo "Finished Unraid backup."
        on_error:
            - echo "Error during Unraid backup."

- Assuming I can use existing repos, how could I do a dry run? This seems to kick out an error however I try it:

    borg create --list --dry-run /mnt/borg-repository/UnraidBackup ~/UnraidBackup

- Anything else that I could be doing?
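On the dry-run question: as far as I understand borg 1.x, `borg create` takes the archive as `REPO::ARCHIVE` followed by the paths to back up, so passing only the repository path may be what triggers the error. A minimal sketch that prints a command in that form; the helper name `borg_dry_run_cmd` and the archive name `dryrun-test` are made up, and the source path is an assumption taken from the config above.

```shell
#!/bin/bash
# Hypothetical helper: print a borg dry-run command in REPO::ARCHIVE form.
borg_dry_run_cmd() {
    local repo="$1" archive="$2" src="$3"
    printf 'borg create --list --dry-run %s::%s %s\n' "$repo" "$archive" "$src"
}

# Repository path is from the post; "dryrun-test" and the source path are placeholders.
borg_dry_run_cmd /mnt/borg-repository/UnraidBackup dryrun-test /mnt/user/backups
```

The printed line can be copied into the container's console and adjusted before running it.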

-

-

46 minutes ago, norp90 said:

As of last night, on 6.10.3, fix common problems is alerting me that:

The plugin ca.backup2.plg is not known to Community Applications and is possibly incompatible with your server.

What's changed and what now?

Me too.

-

Yeah I’m having the same issues as set out above.

Rolling back fixed the error and also the proxy connections started working again.

-

7 hours ago, binhex said:

Have you upgraded to Unraid 6.10.0 by any chance? I see people on Reddit complaining about random permission changes to appdata, so it may be related to that.

Yes it seems to be an issue arising in 6.10.0. Knew I should have held on.

Updating permissions seemed to work.

-

On 5/4/2022 at 7:59 PM, bumpyclock said:

I was able to fix this; somehow the ownership of /data/complete had changed to root. Running chown -R nobody:users /data/complete seems to have fixed it for me.

Any more thoughts on what may have caused this? I’m having the same issue. What’s to stop it happening again?

Looking at my system it chewed through 50GB of bandwidth over the last few hours trying to download and redownload files that were no longer importing.
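One way to catch this earlier is to periodically list anything under the download folder that is no longer owned by the expected user. A rough sketch, assuming the `nobody` owner and the /data/complete path from the quoted fix; the helper name `not_owned_by` is made up, and this only detects the problem rather than explaining its cause.

```shell
#!/bin/bash
# Hypothetical helper: list everything under a directory that is NOT
# owned by the given user (a user name or a numeric ID both work with find).
not_owned_by() {
    local dir="$1" user="$2"
    find "$dir" ! -user "$user" -print
}

# Example (run where /data/complete exists): not_owned_by /data/complete nobody
```

An empty result means ownership is as expected; any output lists the paths that would need the chown from the quoted post.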

-

Pastebin links if easier:

- Libvirt log: https://pastebin.com/q5brknKN

- Syslog: https://pastebin.com/qCzZHEq6

- VM XML: https://pastebin.com/eHDK5Nd5

-

You could always try something like https://parsec.app.

Have a gaming VM hosted on Unraid and then a lightweight client elsewhere in the house on the network.

[Script] binhex - no_ransom.sh

in User Customizations

Posted

I still use it and like the added peace of mind with only a little inconvenience.