
Posts posted by gilladur


On 1/31/2024 at 9:54 AM, motophil said:

So here it is mentioned that the squeezebox server should be run with the --nomysqueezebox command line option. How can I do this with the plugin, or is it already done?

As far as I understand, all you need to do is turn off the "mysqueezebox.com-Service" plugin, and that's it.

I've also installed the replacement Tidal plugin by "philippe_44, Michael Herger", which has worked perfectly so far, and I removed the old Logitech-hosted plugins (Tidal, Deezer, etc.).

-

I've actually done it already and the warning is gone 🙂 but I agree the documentation is quite hard to get an overview of.

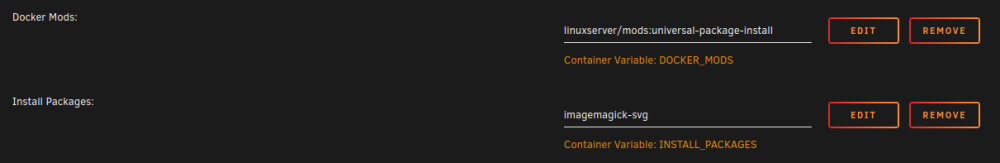

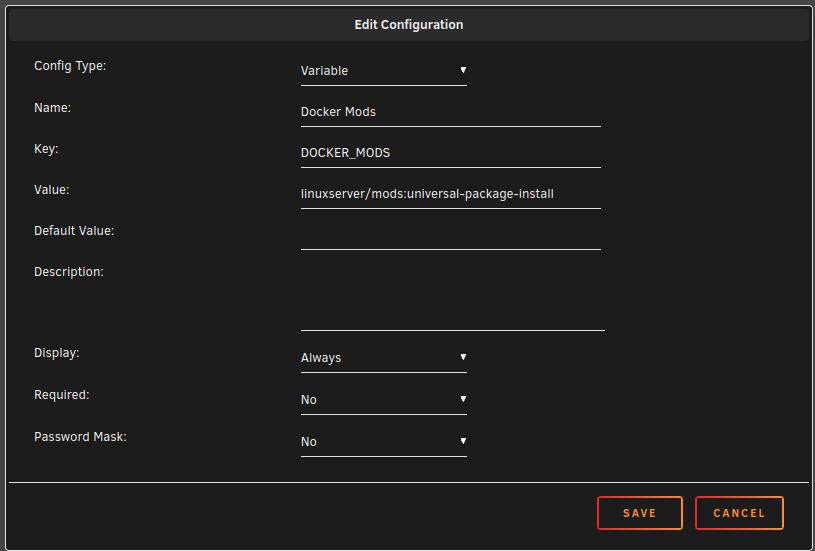

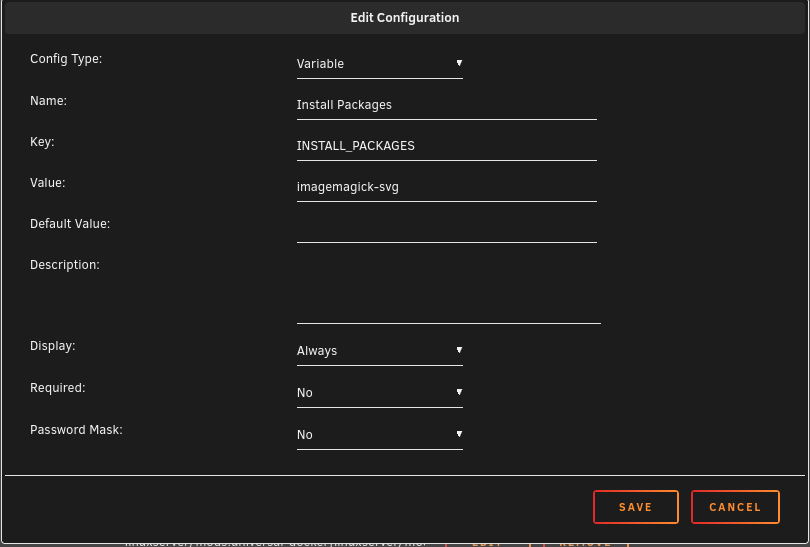

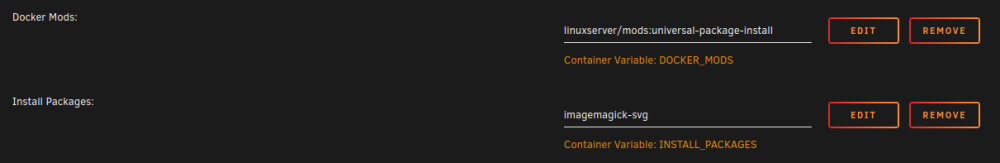

Basically, you just need to add these two variables to the container, in two steps:

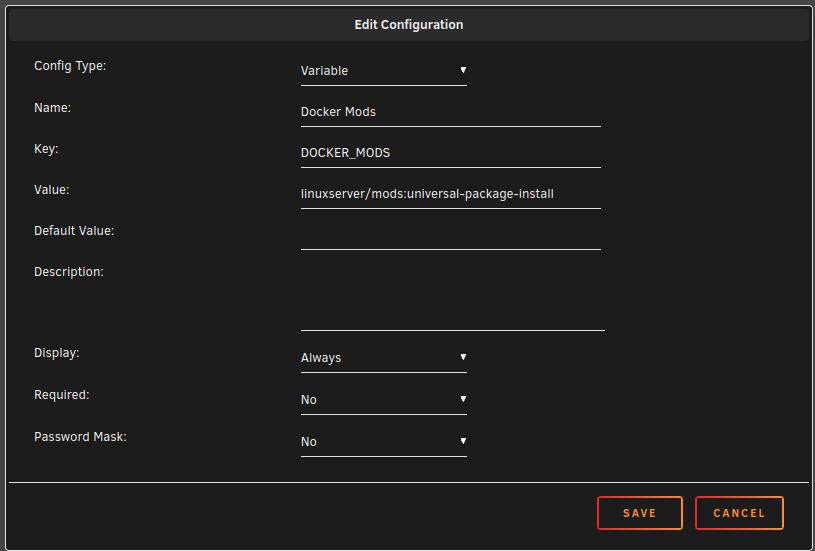

1. Add the universal-package-install mod by adding a new variable to the container (see the second screenshot): `linuxserver/mods:universal-package-install`

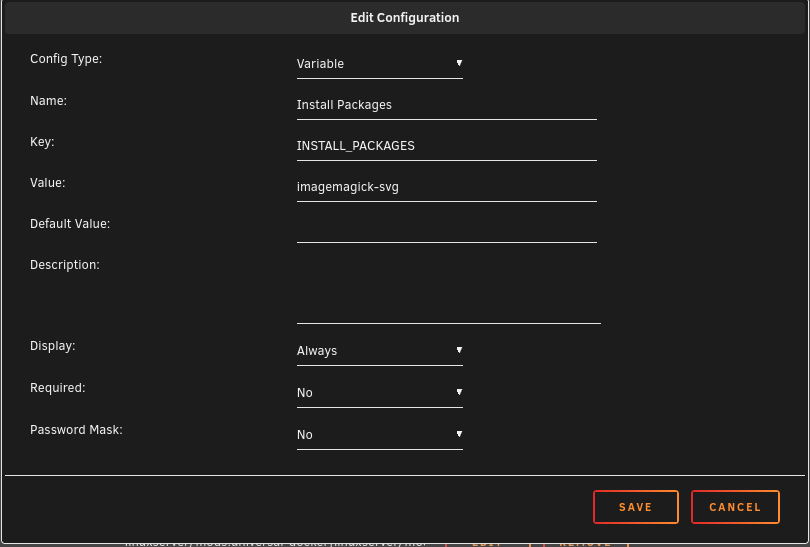

2. Save -> Apply, which restarts the container.
3. Add a new variable "Install Packages" that lists the packages the mod should install on the next restart, in our case: `imagemagick-svg`

After Save and Apply, the package is installed while the container restarts, and the warning should be gone.
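If you start the container from the command line instead of the Unraid template, the same two settings can go into an env file. This is a minimal sketch that assumes the environment variable names `DOCKER_MODS` and `INSTALL_PACKAGES` from the linuxserver.io docker-mods documentation; the Unraid variables in the screenshots should map to these. The helper name `mod_env` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper (assumption: linuxserver.io mod variable names).
# Prints the environment variables the universal-package-install mod reads.
# Several packages are joined with '|', the separator the mods use.
mod_env() {
  local IFS='|'
  # Line 1: the mod to load when the container starts
  printf 'DOCKER_MODS=%s\n' 'linuxserver/mods:universal-package-install'
  # Line 2: each argument is one package; "$*" joins them with '|'
  printf 'INSTALL_PACKAGES=%s\n' "$*"
}
```

Usage, untested against a live container: run `mod_env imagemagick-svg > nextcloud.env`. Then pass `--env-file nextcloud.env` to `docker run` together with your usual options for the image (e.g. `lscr.io/linuxserver/nextcloud`).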

The installation should also show up in the container's logs.

There is a good video on how to use these mods. It's aimed at the SWAG container, but the approach is the same:

Hope that helps 🙂


-

That's probably it, since I'm using the linuxserver.io version.

----------------------------------------------------------------------------

Just as an addition: you can also solve the SVG problem with the linuxserver.io version, using the Docker Mods that come with the linuxserver.io containers. This way you can install the "imagemagick-svg" package afterwards:

https://github.com/linuxserver/docker-mods/tree/universal-package-install

You can learn how Docker Mods work in this video:

The mods I'm loading now are "linuxserver/mods:universal-docker|linuxserver/mods:universal-package-install". The video explains well how it works in general.

-

Hello, maybe a silly question: when I add this post argument, I get the following error message.

On 2/3/2024 at 4:56 PM, EliteGroup said:

```
&& docker exec -u 0 Nextcloud /bin/bash -c "apt update && apt install -y libmagickcore-6.q16-6-extra ffmpeg libbz2-dev && docker-php-ext-install bz2"
```

```
/bin/bash: line 1: apt: command not found
```

Apparently my Unraid doesn't know the apt command, and the only thing I found about it is the advice not to use it at all:

Where is the flaw in my thinking?

-

I have the same error with the missing SVG support.

@blaine07 do I understand the GitHub page correctly that it will be added later? I'm a bit confused about how to fix the issue, or whether I need to fix it at all.

Edit:

I found the answer in the linuxserver.io Discord, from Driz: you can install the needed package via Docker Mods.

Quote: "with that said, we do not include imagemagick-svg (and never have) you can install it using https://github.com/linuxserver/docker-mods/tree/universal-package-install

package name looks like imagemagick-svg"

-

Yesterday I also did some more reading on this. To get a correctly configured yaml, the recommendation is to first generate it with a local installation and then copy it into the Docker container.

-


Unfortunately, I'm also stuck on the problem that the yaml is created as a folder instead of a file.
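One common cause of this is an assumption about the setup, because the thread doesn't name the file or container. When a host path passed to `docker run -v` doesn't exist yet, Docker creates it as an empty directory. Creating the file on the host before the first container start avoids that. This is a minimal sketch; the function name `ensure_file` and any path you pass to it are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: make sure a host path exists as a regular file
# before it is bind-mounted with `docker run -v`. If the path is missing,
# Docker would create a directory there instead.
ensure_file() {
  local path=$1
  if [ -d "$path" ]; then
    # Already a directory (e.g. from an earlier container start):
    # don't touch it; it has to be removed by hand first.
    echo "error: $path is a directory" >&2
    return 1
  fi
  mkdir -p -- "$(dirname -- "$path")"
  # Create an empty file only if nothing exists yet; keep existing content.
  [ -e "$path" ] || : > "$path"
}
```

After that, you can copy the yaml generated by a local installation over the empty file, as recommended in the post above.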

-

Hi Tuetenk0pp,

were you actually able to fix the issue? I'm currently running into the same issue with my Win 10 VM.

My Home Assistant VM is not affected.

-

After I updated the container, Community Apps tells me this about Photoprism:

Quote: "Attention: This application template has been deprecated"

Is that correct, @ich777?

-

Hi ich777,

first of all, thank you very much for the very good guide.

Everything worked right away.

I just have one question, which may already have been answered: which settings make sense for Lancache? For example, I'm surprised by the long retention period of 3650 days: `-e CACHE_MAX_AGE="3650d"`

Or am I misunderstanding something?

If I want to change it afterwards, e.g. to 300 days, what's the best way to do that?

For example, in the terminal with these commands (borrowed from update-containers.de):

```
# pull new container image
docker pull lancachenet/monolithic

# stop container
docker container stop LANCache

# remove container
docker container rm LANCache

# start container again
# LANCache Monolithic
docker run -d --name='LANCache' \
  --net='bridge' \
  -e CACHE_DISK_SIZE="1000000m" \
  -e CACHE_INDEX_SIZE="250m" \
  -e CACHE_MAX_AGE="300d" \
  -e TZ="Europe/Vienna" \
  -v '/mnt/lancache/cache':'/data/cache':'rw' \
  -v '/mnt/lancache/logs':'/data/logs':'rw' \
  -p 80:80 -p 443:443 \
  --no-healthcheck --restart=unless-stopped \
  'lancachenet/monolithic'

# remove dangling images (unlike "docker rmi $(docker images -f dangling=true -q)",
# this does not fail when there are no dangling images)
docker image prune -f

# clear access log from lancache monolithic
echo -n "" > /mnt/lancache/logs/access.log
```

What really bothered me regarding AdGuard is this note on the DNS blocklist by ph00lt0:

"We recommend against using the AdGuard DNS filter, they whitellist many tracking domains using @@ which overwrite your settings in the name of functionality. They allow for trackers in emails links and page ads in search results, something we do not compromise for."

And if you look at the DNS list, you really do find these entries, which override/whitelist all other rules:

```
@@||ad.10010.com^
@@||ad.abchina.com^
@@||ad.kazakinfo.com^
@@||ad.ourgame.com^
@@||adcdn.pingan.com^
@@||advert.kf5.com^
@@||api.ads.tvb.com^
@@||app-advertise.zhihuishu.com^
@@||buyad.bi-xenon.cn^
@@||captcha.su.baidu.com^
@@||img.ads.tvb.com^
```
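You can check a downloaded filter list for such entries yourself. In Adblock/AdGuard-style filter syntax, lines starting with `@@` are exception (allowlist) rules, so a plain `grep` finds them. This is a minimal sketch that assumes a local copy of the list (e.g. fetched with `curl -o`). The function names `list_exceptions` and `count_exceptions` are hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print every exception (allowlist) rule, i.e. every
# line starting with "@@", from a local Adblock/AdGuard-style filter list.
list_exceptions() {
  grep -E '^@@' "$1"
}

# Hypothetical helper: count those rules. grep -c prints 0 but exits 1
# when nothing matches, so "|| true" keeps the exit status at 0.
count_exceptions() {
  grep -cE '^@@' "$1" || true
}
```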

Pi-hole doesn't have anything like that. What do you think about it?

One last short question: have you enabled DNSSEC in AdGuard?

After all, Unbound can't send its queries encrypted, because the root name servers don't support the DNS over HTTPS (DoH) protocol (yet).

"Unbound does support DNS over TLS (DoT) and DNS over HTTPS (DoH), but the root name servers don't support these protocols (yet). So if we want to do without a DNS middleman, we also have to do without encrypting our DNS queries. Using DNSSEC with Unbound at least ensures that the returned data is genuine (authenticity) and unmodified (integrity)." kuketz-blog.de

-

I've dug a bit deeper into the dump command: could it be that the -s option is wrong here?

```
# pg_dump -U nextcloud -s > /var/lib/postgresql/data/bak.sql
```

According to the Postgres documentation:

Quote: "-s, --schema-only: Dump only the object definitions (schema), not data."

That would explain why the tables are empty after the import, and therefore why I can't log in with my credentials, right?

Edit: dumping the database without -s did the trick. Nextcloud is now running fine on V15:

```
pg_dumpall -U nextcloud > /var/lib/postgresql/data/bak14.sql
```
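Since the culprit was a schema-only dump, a quick check before importing can save a round trip. This is a minimal sketch that assumes a plain-text dump from `pg_dump`/`pg_dumpall` with default options. Such a dump writes table data as `COPY ... FROM stdin;` blocks, or as `INSERT INTO` statements when `--inserts` is used. A dump made with `-s` contains neither. The function name `dump_has_data` is hypothetical.

```bash
#!/usr/bin/env bash
# Hypothetical helper: exit 0 if a plain-text PostgreSQL dump contains
# table data, exit 1 if it looks like a schema-only (-s) dump.
# Data appears as "COPY ... FROM stdin;" lines or as "INSERT INTO"
# statements (the latter only when the dump was made with --inserts).
dump_has_data() {
  grep -qE '^(COPY .+ FROM stdin;|INSERT INTO )' "$1"
}
```

Usage: `dump_has_data /var/lib/postgresql/data/bak14.sql && echo "dump contains data"`.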

-

Hi jj9987,

I dumped all data from the old version and imported it into the new V15 container, the same way CryPt00n explained it. According to the logs, all connections seem to work, but I still can't log in to the web interface.

Could it be that the password encryption changed between V14 and V15?

Edit: I've checked the tables in Adminer. Unfortunately, they exist but are empty, so somehow the data isn't imported properly with the command

```
psql -U nextcloud -d nextcloud < /var/lib/postgresql/data/bak.sql
```

In case you're wondering: my database is simply named nextcloud.

-

On 11/25/2022 at 10:41 PM, CryPt00n said:

Hi, I don't know how it would work with Adminer; you should just be able to export from the old one and import into the new one. But this is how I migrated:

I've used the same port for the new database, so I switch between them by powering them on and off. I just didn't want to change the Nextcloud config.

- Shut down Nextcloud & Postgres and back up your appdata folders.

- Install the target Postgres container.

IMPORTANT: After starting the container, you need to create a user with the same credentials Nextcloud uses (you can find them in the Nextcloud config). Alternatively, add the user to the Postgres container template (easiest way).

- Start the target Postgres container. If you did not enter the Nextcloud user credentials in the template, you have to create the user now.

- Shut down the target Postgres container.

- Start the source Postgres container.

- Back up the database. Run in the source container:

```
pg_dumpall -U YOUR-NEXTCLOUD-DB-USER -s > /var/lib/postgresql/data/bak.sql
```

- In Unraid, move it to your target appdata folder (e.g. from /mnt/user/appdata/postgres13 to /mnt/user/appdata/postgres15).

- Stop the source container.

- Import the database. Run in the target container:

```
psql -U YOUR-NEXTCLOUD-DB-USER -d nextclouddb < /var/lib/postgresql/data/bak.sql
```

The database should now be imported, and you can start Nextcloud again and test it.

Thank you for your very clear explanation.

I've followed it, and everything seems fine as far as Nextcloud being able to connect to V15 when it's running.

Nevertheless, I can't log in to my Nextcloud web UI; it says the username or password is wrong.

If I switch back to V14, everything works again.

Any idea?

-

Thanks, that's working for me too.

-

Am I the only one getting an internal server error since yesterday's update?

```
Traceback (most recent call last):
  File "/usr/local/searxng/searx/webapp.py", line 1398, in <module>
    redis_initialize()
  File "/usr/local/searxng/searx/redisdb.py", line 56, in initialize
    _CLIENT.ping()
  File "/usr/lib/python3.10/site-packages/redis/commands/core.py", line 1194, in ping
    return self.execute_command("PING", **kwargs)
  File "/usr/lib/python3.10/site-packages/redis/client.py", line 1255, in execute_command
    conn = self.connection or pool.get_connection(command_name, **options)
  File "/usr/lib/python3.10/site-packages/redis/connection.py", line 1441, in get_connection
    connection.connect()
  File "/usr/lib/python3.10/site-packages/redis/connection.py", line 698, in connect
    sock = self.retry.call_with_retry(
  File "/usr/lib/python3.10/site-packages/redis/retry.py", line 46, in call_with_retry
    return do()
  File "/usr/lib/python3.10/site-packages/redis/connection.py", line 699, in <lambda>
    lambda: self._connect(), lambda error: self.disconnect(error)
  File "/usr/lib/python3.10/site-packages/redis/connection.py", line 1171, in _connect
    sock.settimeout(self.socket_timeout)
AttributeError: 'UnixDomainSocketConnection' object has no attribute 'socket_timeout'
unable to load app 0 (mountpoint='') (callable not found or import error)
*** no app loaded. going in full dynamic mode ***
--- no python application found, check your startup logs for errors ---
[pid: 15|app: -1|req: -1/1] 192.168.178.162 () {36 vars in 1944 bytes} [Sat Mar 25 11:50:20 2023] GET / => generated 21 bytes in 0 msecs (HTTP/1.1 500) 3 headers in 102 bytes (0 switches on core 0)
--- no python application found, check your startup logs for errors ---
[pid: 49|app: -1|req: -1/2] 192.168.178.162 () {36 vars in 1942 bytes} [Sat Mar 25 11:50:20 2023] GET /favicon.ico => generated 21 bytes in 0 msecs (HTTP/1.1 500) 3 headers in 102 bytes (0 switches on core 0)
```

-

Unraid shows that it can't get the current version when checking for updates. Has the container been retired?

-

In addition to the changes in my Fritzbox, I had to add the Local DNS Records [A/AAAA] in my Pi-hole.

You'll find them on the left side in Pi-hole under "Local DNS".

-

Does anyone else get this error in the settings overview after updating to the latest version, 24?

```
Technical information
=====================
The following list covers which files have failed the integrity check. Please read the previous linked documentation to learn more about the errors and how to fix them.

Results
=======
- core
	- INVALID_HASH
		- core/js/mimetypelist.js

Raw output
==========
Array
(
    [core] => Array
        (
            [INVALID_HASH] => Array
                (
                    [core/js/mimetypelist.js] => Array
                        (
                            [expected] => 94195a260a005dac543c3f6aa504f1b28e0078297fe94a4f52f012c16c109f0323eecc9f767d6949f860dfe454625fcaf1dc56f87bb8350975d8f006bbbdf14a
                            [current] => 1b07fb272efa65a10011ed52a6e51260343c5de2a256e1ae49f180173e2b6684ccf90d1af3c19fa97c31d42914866db46e3216883ec0d6a82cec0ad5529e78b1
                        )
                )
        )
)
```

It seems to be caused by the OnlyOffice app overwriting the default mimetypelist, which produces the mismatch, but I don't know how to fix it.

There are some posts about this issue on GitHub, but none of them seem to fix it:

https://github.com/nextcloud/server/issues/30732

https://github.com/ONLYOFFICE/onlyoffice-nextcloud/issues/600

I've never had this before, and to be honest I don't know whether I should bother or just wait for the next update to fix it.

-


On 1/7/2022 at 3:24 PM, Squid said:

OTOH, revert the changes you made that were in some thread that put the logs to RAM

```
Jan 7 14:30:01 KingServer docker: RAM-Disk synced
```

and if that solves it, ask about it in the relevant thread.

It seems that this was the issue. I'll ask in the corresponding thread, as you suggested. I didn't have the time yet and wanted to be sure that the issue is gone.

Thank you for your help!

-

Do you think it's coming from my RAM disk? I've been using it for at least a few months now and never had this issue.

I can disable it, but I don't think it's the root cause.

It's taken from this post: Ram-Disk

Anyway, I've removed the code from the go file, so there's no RAM disk anymore. Let's see.

-

Hi Squid, you mean two times the same container?

No - I have just one instance of each container.

It seems that it's also not limited to a specific container. It first happened with Postgres 14; then I deleted the Docker directory and reinstalled everything.

Then the same issue occurred with Minecraft, and the next time with tvheadend and Photoprism.

What I noticed is that after reinstalling all my previous containers, Postgres seems to be recognized as not installed, even though I've installed it and it's working.

I don't know if this is related.

-

Hi Guys,

for a few days now I've had an issue when updating my Docker containers. It seems that the container can't be stopped during the update, and then the update fails with something like:

"Server error - can not remove image"

After the failed update, when I try to start the containers, I get:

"Image can not be deleted, in use by other container(s)"

I never had this in the past. It started 3 days ago, and since then I've deleted the Docker directory (which I use instead of the image) and reinstalled the containers several times, but the issue keeps coming back. It doesn't happen with every update, and not always with the same containers.

Any idea where this could come from and what's broken?

I'm currently on RC2

-

8 hours ago, Tucubanito07 said:

I am experiencing the same. My Nextcloud gui instance is slow. Hopefully, this gets fixed with a Unraid update or a docker update.

I also reported this issue when RC1 was released, but even with some help from the community, I couldn't get to the root of it. On the other hand, I did find a user on RC1 or RC2 who is not seeing the reduced speed.

If someone has an idea how to track this down, I'm open to testing 🙂


-

Fixing Nextcloud security & setup warnings

in Anleitungen/Guides


As I understand it, the SVG issue isn't a real problem. You only need the matching tool for imagick installed if you want to process SVG files on Nextcloud. So it's at most an optimization for SVG, but certainly not a must.

The tool may matter if you want to use a custom icon:

So in most cases, doing the installation just gives you the satisfaction of no longer seeing the warning 😊