Posts: 498


Everything posted by coppit

-

Mover stops on this file, which is a named pipe:

    prw-rw---- 1 201 201 0 Feb 12 11:38 /mnt/disk2/appdata/netdata/cache/.netdata_bash_sleep_timer_fifo|

The fix is to log into the server, manually delete the pipe, hard reset the server, and rerun mover. You can kill mover and restart it without rebooting, but that leaves behind a "move" process that cannot be killed and that blocks the share from being unmounted during reboot, so a hard reset is then the only way to reboot. I'm on 6.12.8.
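The leading `p` in `prw-rw----` marks a FIFO (named pipe). To check for other pipes before rerunning mover, a short script can list them. This is a minimal Python sketch, not an Unraid tool: it walks a directory tree (for example `/mnt/disk2/appdata`, the path from the post) and reports every FIFO it finds. It only lists the pipes; deciding whether to delete them is up to you, and the function name `find_fifos` is hypothetical.

```python
import os
import stat
import sys


def find_fifos(root: str) -> list[str]:
    """Return the paths of all named pipes (FIFOs) under root."""
    fifos = []
    # os.walk does not descend into symlinked directories by default,
    # and unreadable directories are silently skipped.
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mode = os.lstat(path).st_mode  # lstat: do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable; skip it
            if stat.S_ISFIFO(mode):
                fifos.append(path)
    return fifos


if __name__ == "__main__" and len(sys.argv) > 1:
    for fifo in find_fifos(sys.argv[1]):
        print(fifo)
```

Run it as, for example, `python3 find_fifos.py /mnt/disk2/appdata` (the script name is arbitrary).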

-

I've been dealing with frequent SQLite DB errors in Sonarr, and I'm hopeful this was the cause. Thanks for posting the disk I/O errors; that helped me find this solution.

-

Just to follow up on this in case anyone else has similar problems: mprime would warn about hardware errors within 5 seconds of starting. (Memtest had run a complete test without any errors.) Two things helped:

1) I saw a video showing that Intel CPUs can become unstable if the cooler is tightened too much on an ASRock Taichi motherboard. I removed my cooler and reinstalled it, tightening just until I felt resistance. That stopped mprime from failing quickly, but after about 5 minutes I still got another error, and the parity check after doing this found about 60 errors.
2) I increased my CPU voltage by two steps (0.10 V, I think). That seems to have removed the last of the instability.

-

Okay, finally a breakthrough: the server hung at the BIOS boot screen. My theory is that there is a problem with my hyperthreaded CPU cores 0-15. Cores 16-31 are allocated to my VMs, while the lower cores are used by Docker and Unraid. Disabling Docker basically idles those cores, since Unraid itself doesn't use much CPU, which would explain why things are stable in that configuration. I'm going to try disabling hyperthreading to see if that helps. I might have to replace the CPU. Or maybe the motherboard. Ugh. Since my CPU temps never go above 41°C even under load, I don't think it's overheating.
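Before pinning or disabling hyperthreading, it can help to confirm which logical CPUs actually share a physical core (on hybrid Intel CPUs, the efficiency cores have no sibling). A minimal Python sketch, assuming the standard Linux sysfs layout `/sys/devices/system/cpu/cpuN/topology/thread_siblings_list`, which holds a kernel cpulist such as `0-1` or `0,16`. The helper names are hypothetical.

```python
import glob
import os


def parse_cpulist(text: str) -> list[int]:
    """Expand a kernel cpulist string such as "0-3,8,10-11" into CPU numbers."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def sibling_groups(sysfs_root: str = "/sys/devices/system/cpu") -> dict[int, list[int]]:
    """Map each logical CPU to the logical CPUs that share its physical core."""
    groups: dict[int, list[int]] = {}
    pattern = os.path.join(sysfs_root, "cpu[0-9]*", "topology", "thread_siblings_list")
    for path in glob.glob(pattern):
        # path is <root>/cpuN/topology/thread_siblings_list; recover "cpuN"
        cpu_dir = os.path.basename(os.path.dirname(os.path.dirname(path)))
        with open(path) as f:
            groups[int(cpu_dir[3:])] = parse_cpulist(f.read())
    return groups


if __name__ == "__main__":
    for cpu, siblings in sorted(sibling_groups().items()):
        label = "hyperthreaded" if len(siblings) > 1 else "no sibling"
        print(f"cpu{cpu}: {siblings} ({label})")
```

Each output line shows which CPU numbers share a physical core, so a range like 0-15 can be checked against the real topology rather than assumed.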

-

It's been another 5 days without a crash. This time I changed my NPM to use bridge networking instead of br0. Now I'm going to enable PiHole, which uses br0. If it crashes again then we'll know it's related to br0.

-

I woke up to a hung server. It seems Docker-related. Next I'll try enabling specific containers, starting with Plex due to popular demand. Any other suggestions on how to debug this would be welcome.

-

Oops, I missed that. Sorry. I disabled the Docker service, and the server ran for 5 days without any crashes. Before this, the longest it ran was about 2.5 days before crashing. Now I'm going to re-enable Docker, but only use containers with bridge or host networking. I suspect the problem is related to br0, so I've disabled Nginx Proxy Manager (NPM) and two PiHole containers. I'll let this soak for another 5 days. One reason I suspect br0 is that I've had trouble getting NPM to route requests to my bridge-mode containers. When I hopped into one of the containers, it couldn't ping the server. I figured I would debug this later, but I mention it in case it's relevant.

-

Okay, I ran it in safe mode for several days. After 53 hours it crashed. Syslog attached. I noticed that Docker was running; could it be related to that? I'm also getting intermittent warnings about errors on one of my pooled cache drives. Could that cause kernel crashes? I replaced my power supply, set all my fans to max, and the RAM passed Memtest86. The CPU temp is 29°C, and the motherboard is 37°C. Should I try replacing the CPU? The motherboard? Is there a way to isolate the cause? syslog-20240117-145920.txt

-

Here's another from last night. Instead of a null pointer dereference, this time there was a page fault for an invalid address. syslog-20240110-045742.txt
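To compare crashes across several syslogs, one option is to tally the oops type and the kernel function named on each `RIP:` line. If every crash faults in a different, unrelated function, that is often read as a hint of hardware instability rather than one buggy driver, though it is not proof. A minimal Python sketch, assuming the message wording of recent x86-64 kernels ("kernel NULL pointer dereference", "unable to handle page fault", "general protection fault", and `RIP: 0010:` for kernel-mode code); other kernel versions may phrase these differently, and the function name is hypothetical.

```python
import re
import sys
from collections import Counter

# Assumed oops wording from recent x86-64 kernels; adjust for other versions.
OOPS_PATTERNS = {
    "null_pointer": re.compile(r"kernel NULL pointer dereference"),
    "page_fault": re.compile(r"unable to handle (?:page fault|kernel paging request)"),
    "general_protection": re.compile(r"general protection fault"),
}
# Kernel-mode instruction pointer lines look like "RIP: 0010:func+0x1f/0x90";
# user-space frames use "RIP: 0033:" and are ignored.
RIP_PATTERN = re.compile(r"RIP: 0010:(\S+)")


def summarize_oopses(lines):
    """Count oops types and the kernel functions executing when they occurred."""
    kinds = Counter()
    functions = Counter()
    for line in lines:
        for kind, pattern in OOPS_PATTERNS.items():
            if pattern.search(line):
                kinds[kind] += 1
        match = RIP_PATTERN.search(line)
        if match:
            # Drop the "+offset/size" suffix to keep just the function name.
            functions[match.group(1).split("+")[0]] += 1
    return kinds, functions


if __name__ == "__main__" and len(sys.argv) > 1:
    for log_path in sys.argv[1:]:
        with open(log_path, errors="replace") as f:
            kinds, functions = summarize_oopses(f)
        print(log_path, dict(kinds), dict(functions))
```

Passing several syslogs at once (for example the ones attached in these posts) prints one summary line per file.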

-

I'm looking for some help with my server hanging. When it happens while I'm using it, things start failing one by one. The internet might fail first, while I can still SSH into the server from my VM. Then the VM hangs. Then I can't reach the web UI from my phone. At that point nothing responds, and I have to power cycle the server. More often, though, I come to the server and it's just frozen. This was happening 1-2 times per day. My memory passes Memtest86+, and replacing the power supply had no effect. I installed the driver for my RTL8125 NIC (even though my crashes don't look exactly like the RTL8125 crashes others have reported), and that had no effect either. I upgraded to 6.12.6 pretty much as soon as it came out. The crashes started happening in mid-December. I don't recall making any server changes around that time, but perhaps I did. As an experiment, I changed my VMs from br0 to virbr0, and now the crashes seem to happen only once every 2-3 days. (But now the VMs can't use PiHole DNS because they can't reach Docker.) So maybe the crashes are still related to my NIC? Attached is a syslog with a couple of call traces in it, as well as my system diagnostics. Any ideas would be appreciated. storage-diagnostics-20240109-1527.zip syslog-20231227-232940.txt

-

Unraid UI inaccessible but services still running

coppit replied to Craig Dennis's topic in General Support

@Craig Dennis did you resolve this? How?

[6.12.4] Server can't ping gateway or access internet

coppit commented on coppit's report in Stable Releases

I spent some time with SpaceInvaderOne this morning on this. We assigned the server a different IP, and everything started working again. Then, for fun, we assigned the old IP to an iPad, and it exhibited the same behavior. So it's definitely related to my TP-Link XE75 router, not the server. Ed's only recommendation was to factory reset the router and set it up again. So this can be closed as not a bug. Separately, I also had an issue where the VMs couldn't be reached but could reach out. (Intermittently, the VMs also had trouble reaching the server.) We switched to bridge networking, and that issue cleared up as well. I guess I was too eager to follow the release notes. There might be some issue with not using bridge networking, but at this point I don't want to delve into it.


It was fun decoding the .plg file to manually download the files with my laptop and install them. (Community Applications doesn't work without internet access.) Based on the output of "modinfo r8125", the driver seems to have installed correctly after I rebooted. I don't see any "network is unreachable" errors in the syslog, but that could be chance. Pinging the gateway still fails.
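Because Unraid plugin (.plg) files are XML, the download list can be pulled out programmatically instead of by hand. A minimal Python sketch, assuming the common layout where each download is a `<FILE Name="...">` element with a `<URL>` child, and shared values are declared as entities in the DOCTYPE (Python's XML parser expands internal entities such as `&name;`). `<FILE>` entries without a `<URL>` (for example inline scripts) are skipped, nothing from the plugin is executed, and the function name is hypothetical.

```python
import sys
import xml.etree.ElementTree as ET


def list_plugin_downloads(plg_data):
    """Return (destination path, URL) pairs for each <FILE> entry that has a <URL>.

    plg_data may be str or bytes; internal DOCTYPE entities are expanded by the parser.
    """
    root = ET.fromstring(plg_data)
    downloads = []
    for file_elem in root.iter("FILE"):
        url = file_elem.findtext("URL")
        if url and url.strip():
            downloads.append((file_elem.get("Name", ""), url.strip()))
    return downloads


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], "rb") as f:
        for dest, url in list_plugin_downloads(f.read()):
            print(f"{url} -> {dest}")
```

Each printed line gives a URL to fetch on another machine and the path where the plugin expects that file on the server.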


After rebooting into normal mode, sshd now fails to stay up, yet later in the log ntpd comes up fine. What the heck? System diagnostics attached. Based on the "Network is unreachable" errors, this thread suggested that I disable C-states, but that didn't help. I manually ran "/etc/rc.d/rc.sshd start" after the server was up to get sshd running again. Thank god for the GUI console feature. I feel like my server is falling apart before my eyes... storage-diagnostics-no-sshd-20231107-0006.zip


I stopped the Docker service entirely. Oddly, the Windows VM I was using lost connectivity to the Unraid host at that point. I hopped on my laptop and disabled the VM service as well. Then I rebooted into safe mode and started the array. The server still can't ping the gateway or 8.8.8.8. Safe mode diagnostics attached. This is with wg0, Docker, and KVM all disabled. storage-diagnostics-safe-mode-20231106-2338.zip


There's probably a lot of flotsam and jetsam in there dating back to the 5.x days. I disabled the tunnel and its autostart, and rebooted. No change. Oops, I forgot to try the other things. I'll reply in a few minutes with those results as well.


Thanks for the assistance. I see that the MTU is defaulting to 1500. Did you see somewhere that it was different? I got no warning from Fix Common Problems (FCP). I stopped VMs and Docker, and manually set the MTU to 1500. Then I re-enabled VMs and Docker, and rebooted for good measure. Still no luck.


Could someone please post a screenshot of their Docker settings page? Mine doesn't have the "Docker custom network type" setting referred to in the release notes.

The problem is fairly self-explanatory: the server itself can't ping the gateway or the internet, while VMs can ping other devices (including the server), the gateway, and the internet. Diagnostics attached. LMK if you need more info. storage-diagnostics-20231027-1254.zip

-

Maybe someone on 6.12.4 who set up a VM with vhost0 networking as per the release notes could post their routing table? That way I could compare. This seems very suspicious to me:

| Protocol | Route | Gateway | Metric |
|---|---|---|---|
| IPv4 | 10.22.33.0/24 | vhost0 | 0 |
| IPv4 | 10.22.33.0/24 | eth0 | 1003 |

-

Hi all, I recently upgraded to 6.12.4 and am trying to sort out networking for my server, VMs, WireGuard, and Docker containers. My server can't ping 8.8.8.8, which I'm guessing is due to a broken routing table. My home network is 10.22.33.X, and my WireGuard network is 10.253.0.X. Can someone with more networking experience help me debug this routing issue for 8.8.8.8? Also, I may have added the 10.253.0.3, .5, .6, .7, and .8 routes while trying to get WireGuard to see the VMs. Can I remove them?

| Protocol | Route | Gateway | Metric |
|---|---|---|---|
| IPv4 | default | 10.22.33.1 via eth0 | 1003 |
| IPv4 | 10.22.33.0/24 | vhost0 | 0 |
| IPv4 | 10.22.33.0/24 | eth0 | 1003 |
| IPv4 | 10.253.0.3 | wg0 | 0 |
| IPv4 | 10.253.0.5 | wg0 | 0 |
| IPv4 | 10.253.0.6 | wg0 | 0 |
| IPv4 | 10.253.0.7 | wg0 | 0 |
| IPv4 | 10.253.0.8 | wg0 | 0 |
| IPv4 | 172.17.0.0/16 | docker0 | 0 |
| IPv4 | 172.31.200.0/24 | br-e5184ce4c231 | 0 |
| IPv4 | 192.168.122.0/24 | virbr0 | 0 |

System diagnostics attached. storage-diagnostics-20231022-0703.zip
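To reason about which of these routes would be used, here is a simplified Python model of Linux route selection: among routes whose prefix contains the destination, the longest prefix wins, and among equally specific prefixes the lowest metric wins. It is only a sketch; it ignores policy routing, route scopes, and multiple routing tables, and the route list is transcribed by hand from the posted table (gateway addresses omitted). The helper names are hypothetical.

```python
import ipaddress
from collections import defaultdict
from typing import NamedTuple, Optional


class Route(NamedTuple):
    prefix: ipaddress.IPv4Network
    device: str
    metric: int


def route(dest: str, device: str, metric: int) -> Route:
    """Build a Route; "default" means 0.0.0.0/0 and a bare host address becomes a /32."""
    return Route(ipaddress.ip_network("0.0.0.0/0" if dest == "default" else dest), device, metric)


# Transcribed from the posted routing table.
ROUTES = [
    route("default", "eth0", 1003),
    route("10.22.33.0/24", "vhost0", 0),
    route("10.22.33.0/24", "eth0", 1003),
    *(route(f"10.253.0.{host}", "wg0", 0) for host in (3, 5, 6, 7, 8)),
    route("172.17.0.0/16", "docker0", 0),
    route("172.31.200.0/24", "br-e5184ce4c231", 0),
    route("192.168.122.0/24", "virbr0", 0),
]


def select_route(routes: list[Route], address: str) -> Optional[Route]:
    """Longest matching prefix wins; ties are broken by the lowest metric."""
    addr = ipaddress.ip_address(address)
    candidates = [r for r in routes if addr in r.prefix]
    if not candidates:
        return None
    return min(candidates, key=lambda r: (-r.prefix.prefixlen, r.metric))


def duplicate_prefixes(routes: list[Route]) -> dict:
    """Return prefixes that are routed via more than one device."""
    devices = defaultdict(set)
    for r in routes:
        devices[r.prefix].add(r.device)
    return {prefix: sorted(devs) for prefix, devs in devices.items() if len(devs) > 1}


if __name__ == "__main__":
    print(select_route(ROUTES, "8.8.8.8").device)     # eth0 (only the default route matches)
    print(select_route(ROUTES, "10.22.33.1").device)  # vhost0 (metric 0 beats eth0's 1003)
    print(duplicate_prefixes(ROUTES))                 # {IPv4Network('10.22.33.0/24'): ['eth0', 'vhost0']}
```

In this model the lookup for 8.8.8.8 itself looks normal, but a packet addressed directly to the gateway 10.22.33.1 would match the vhost0 route, which is why the duplicate 10.22.33.0/24 entry stands out. On the server, `ip route get 10.22.33.1` asks the kernel for its actual choice.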

-

Hi, I'm trying to pass the "UHD Graphics 770" from my "13th Gen Intel(R) Core(TM) i9-13900K" to a Windows 10 VM. At first I tried passing the physical GPU directly to the VM, but I got the Code 43 error. (I don't know if it's relevant, but I've been running two gaming VMs with discrete GPUs for a while now; this one is meant to be a headless VM using the integrated GPU.) Then I found this thread and thought I'd try passing a virtualized iGPU. I've unbound 00:02.0 from VFIO and installed this plugin, but it looks like the driver says my iGPU is not supported:

    $ dmesg | grep i915
    [ 48.309332] i915 0000:00:02.0: enabling device (0006 -> 0007)
    [ 48.310076] i915 0000:00:02.0: [drm] VT-d active for gfx access
    [ 48.310102] i915 0000:00:02.0: [drm] Using Transparent Hugepages
    [ 48.311491] mei_hdcp 0000:00:16.0-b638ab7e-94e2-4ea2-a552-d1c54b627f04: bound 0000:00:02.0 (ops i915_hdcp_component_ops [i915])
    [ 48.312329] i915 0000:00:02.0: [drm] Finished loading DMC firmware i915/adls_dmc_ver2_01.bin (v2.1)
    [ 48.978485] i915 0000:00:02.0: [drm] failed to retrieve link info, disabling eDP
    [ 49.067476] i915 0000:00:02.0: [drm] GuC firmware i915/tgl_guc_70.bin version 70.5.1
    [ 49.067481] i915 0000:00:02.0: [drm] HuC firmware i915/tgl_huc.bin version 7.9.3
    [ 49.070461] i915 0000:00:02.0: [drm] HuC authenticated
    [ 49.070910] i915 0000:00:02.0: [drm] GuC submission enabled
    [ 49.070911] i915 0000:00:02.0: [drm] GuC SLPC enabled
    [ 49.071369] i915 0000:00:02.0: [drm] GuC RC: enabled
    [ 49.071980] mei_pxp 0000:00:16.0-fbf6fcf1-96cf-4e2e-a6a6-1bab8cbe36b1: bound 0000:00:02.0 (ops i915_pxp_tee_component_ops [i915])
    [ 49.072029] i915 0000:00:02.0: [drm] Protected Xe Path (PXP) protected content support initialized
    [ 49.073624] [drm] Initialized i915 1.6.0 20201103 for 0000:00:02.0 on minor 0
    [ 49.137484] i915 0000:00:02.0: [drm] fb0: i915drmfb frame buffer device
    [ 49.154410] i915 0000:00:02.0: [drm] Unsupported device. GVT-g is disabled

Thanks for any help!

-

Docker containers using br0 can't access the UnRAID IP address

coppit replied to CorruptComputer's topic in General Support

Thanks @strend. I already had "allow access to custom networks" enabled, but NPM also needed to run as privileged.

Updating to 6.12.1 brought br0 back. I guess I'll chalk this up to 6.12.0 RC weirdness.

-

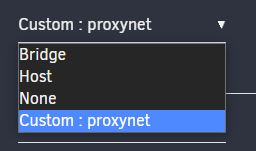

Hi all, recently I noticed my Docker containers weren't auto-starting. I figured it might be a glitch in the 6.12.0 RCs and didn't think much about it. But yesterday I noticed that my PiHole containers weren't starting even after I clicked "start all". Digging a little more, I found that they had lost their IP addresses and that br0 wasn't listed in the available networks (see the image above). I tried rebooting, and also following the Docker network rebuild instructions in this thread, but neither helped. I'll attach my system diagnostics, as well as my Docker and network settings. Any help would be appreciated. Things I've done recently: installed various 6.12.0 RCs, installed a gluetun container to experiment with (which I think created a proxynet), and booted the computer with the network cable unplugged. storage-diagnostics-20230623-0849.zip