stottle

Members, 162 posts


Everything posted by stottle

-

The changes to add the app to Community Applications went live this morning, and I just pushed a batch of changes to the image. It now starts with two example notebooks:

* `chat_example.ipynb` provides a running chat example
* `diffusion_example.ipynb` provides (wait for it...) a running Stable Diffusion example

It currently requires an NVIDIA GPU.
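Since the notebooks need an NVIDIA GPU, it can help to confirm the container actually sees one before opening them. The sketch below is a minimal, hypothetical helper (it is not part of the image) that runs `nvidia-smi -L` and parses its `GPU <n>: <name> (UUID: <id>)` lines. It assumes `nvidia-smi` is on the container's PATH, which is typical when the NVIDIA runtime is enabled but is not something stated here.

```python
import re
import shutil
import subprocess

# Matches lines such as "GPU 0: NVIDIA GeForce RTX 3090 (UUID: GPU-...)".
GPU_LINE = re.compile(r"^GPU (\d+): (.+?) \(UUID: ([^)]+)\)$")


def parse_gpu_list(output: str) -> list:
    """Return (index, name, uuid) tuples parsed from `nvidia-smi -L` output."""
    gpus = []
    for line in output.splitlines():
        match = GPU_LINE.match(line.strip())
        if match:
            gpus.append((int(match.group(1)), match.group(2), match.group(3)))
    return gpus


def visible_gpus() -> list:
    """Run `nvidia-smi -L`; return [] if the tool is missing or reports an error."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(["nvidia-smi", "-L"], capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_list(result.stdout)


if __name__ == "__main__":
    gpus = visible_gpus()
    if not gpus:
        print("No NVIDIA GPU visible; the example notebooks need one.")
    for index, name, _uuid in gpus:
        print(f"GPU {index}: {name}")
```

Running it from a notebook cell or terminal prints one line per visible GPU, or a message when none is exposed to the container.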

-

Overview: Support for the JupyterLabNN (Neural Network) Docker image from the bstottle repo

Application: JupyterLab - https://jupyter.org

DockerHub: https://hub.docker.com/r/bstottle42/python_base/

GitHub: https://github.com/bstottle/python_base

There are two goals for this container:

1) Provide an interactive (via Jupyter notebooks) Python environment set up for evaluating AI/ML applications, including Large Language Models (LLMs).
2) Provide a common _base_ image for LLM user interfaces like InvokeAI or text-generation-webui that leverage Python for the actual processing.

-

I didn't see an answer posted, but according to a post in another netdata support thread, the nvidia-smi support was moved from python.d.conf to go.d.conf (and set to "no" instead of "yes"). I was able to get NVIDIA visualizations by changing `# nvidia_smi: no` to `nvidia_smi: yes` in go.d.conf. I also needed to add the variable `NVIDIA_VISIBLE_DEVICES` with the value `all` to the Docker template and append `--runtime=nvidia` to Extra Parameters. I created/edited the config file using the steps from the post linked above.
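The edit itself amounts to turning the commented-out `# nvidia_smi: no` entry under `modules:` into `nvidia_smi: yes`. As a hypothetical Python sketch of that edit (it is not the method from the linked post), the function below rewrites the config text. The helper name `enable_go_d_module` and the two-space indentation under `modules:` are assumptions, and reading/writing the real file (for example `/etc/netdata/go.d.conf` inside the container, also an assumption) is left to the caller.

```python
import re


def enable_go_d_module(config_text: str, module: str, indent: str = "  ") -> str:
    """Return go.d.conf text with `<module>: yes` under the top-level `modules:` key."""
    entry = f"{indent}{module}: yes"
    # An existing entry, commented out or not, e.g. "#  nvidia_smi: no" or "  nvidia_smi: no".
    # [ \t] is used instead of \s so a match never spans more than one line.
    existing = re.compile(rf"^[ \t]*#?[ \t]*{re.escape(module)}[ \t]*:.*$", re.MULTILINE)
    if existing.search(config_text):
        return existing.sub(lambda _match: entry, config_text, count=1)
    # No entry yet: add one directly below "modules:", or create that key at the end.
    header = re.compile(r"^modules:[ \t]*$", re.MULTILINE)
    match = header.search(config_text)
    if match:
        return config_text[: match.end()] + "\n" + entry + config_text[match.end():]
    return config_text.rstrip("\n") + f"\nmodules:\n{entry}\n"
```

The container still needs `NVIDIA_VISIBLE_DEVICES=all` and `--runtime=nvidia` as described above; enabling the module does not by itself give netdata access to the GPU.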

-

Understanding exclusive shares - not working in some dockers/VMs

stottle replied to stottle's topic in General Support

OK. That does make the folders accessible, but it seems only slightly better than mounting from /mnt/cache/<share>.

-

Understanding exclusive shares - not working in some dockers/VMs

stottle replied to stottle's topic in General Support

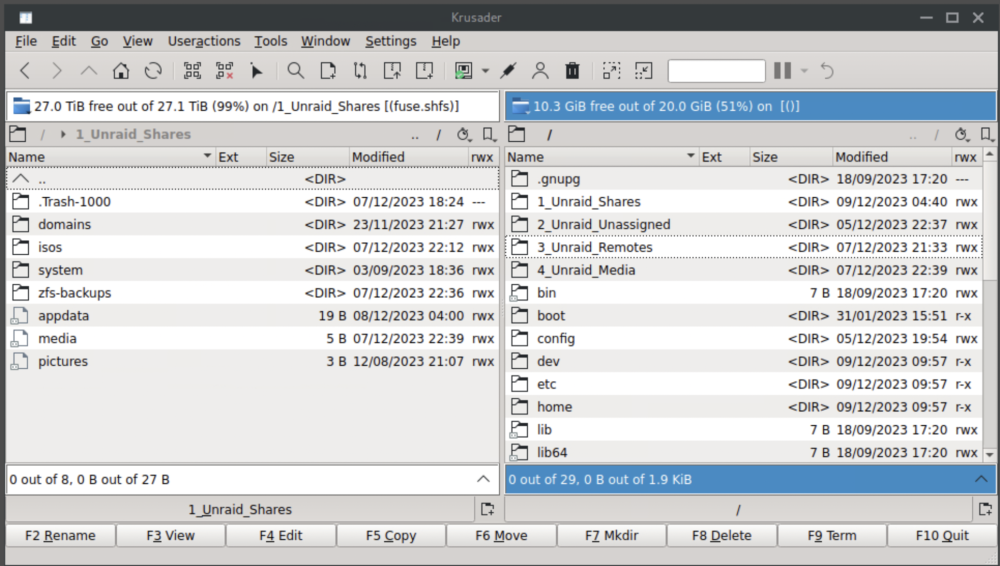

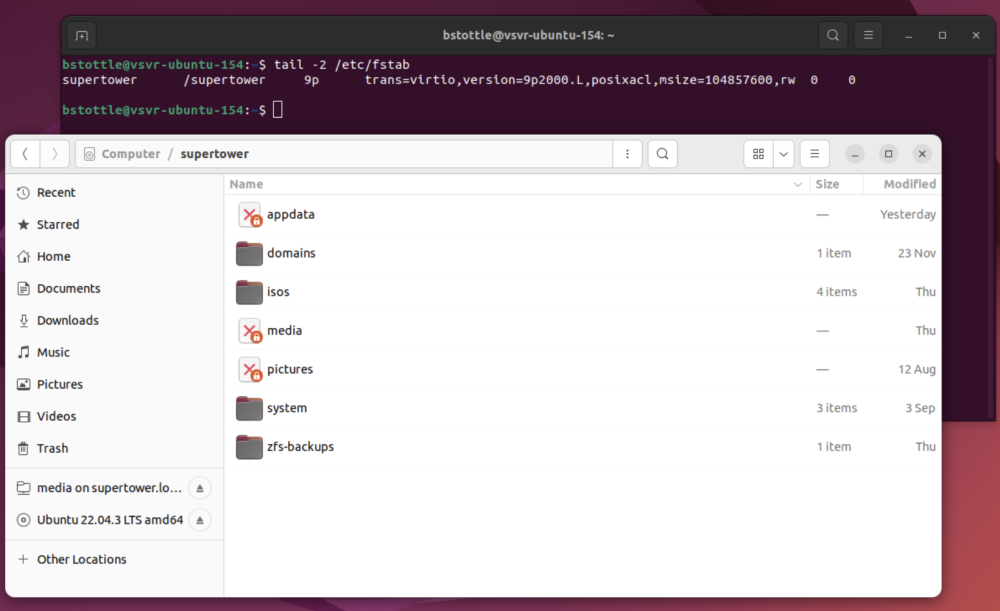

Sorry if my original post wasn't clear. I have 7 shares, and with the exclusive shares setting enabled, 3 of them became exclusive shares:

* Exclusive: appdata, media, pictures
* Non-exclusive: domains, isos, system, zfs-backup

In the top image (binhex-krusader), the left side of the window shows a folder view of /mnt/user as exposed to the container (all of the shares). The three exclusive shares are shown with a different icon and are not accessible as folders in the container. The non-exclusive folders are accessible and behave as expected.

The situation is similar for the Ubuntu VM. I manually expose /mnt/user to the VM, but only the non-exclusive folders show up as folders; the exclusive ones have an icon with an "X" and don't work (bottom image). I've tried both 9p and virtiofs with the same results.

Note: I don't expect any files to move automatically. I was expecting to be able to use the container/VM to test whether write speed improved (via drag-and-drop in those systems) by bypassing FUSE.

Hopefully this is clearer? Are you successfully using exclusive shares? If so, can you describe what you are doing that works?

-

Understanding exclusive shares - not working in some dockers/VMs

stottle replied to stottle's topic in General Support

I didn't have an issue getting shares to be recognized as "Exclusive Access". I had trouble getting both the Docker containers and the VM to use the shares once they were set to exclusive access. Was that not clear from the screenshots?

-

Understanding exclusive shares - not working in some dockers/VMs

stottle replied to stottle's topic in General Support

Alrighty. `Permit exclusive shares: No`

-

Understanding exclusive shares - not working in some dockers/VMs

stottle replied to stottle's topic in General Support

Is no one else having similar issues? If there is a fix posted somewhere else, can someone share a link?

-

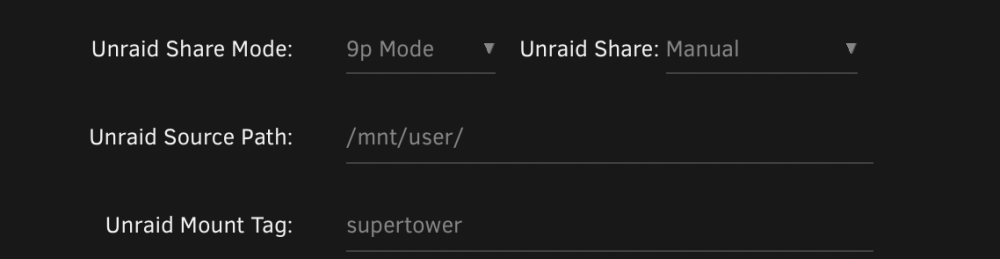

Hi, I've enabled exclusive shares, and I have several shares on a ZFS cache pool that show as having exclusive access. Looking at /mnt/user/ in a terminal, I do see three folders that are symlinks. From this, I believe my configuration is correct and working, with `appdata` being one of the exclusive-access shares. The few Docker containers I've tested all seem to start and run, but results are mixed:

* `code-server` is able to open both exclusive and non-exclusive share folders
* `binhex-krusader`, set up per spaceinvader1's video: only non-exclusive shares are accessible (note the last 3 folders on the left are grayed out)
* Ubuntu VM: only non-exclusive shares are accessible via the VM mount

Here is the section from the VM config:

Here is the fstab config and a folder view of the mount showing the 3 exclusive folders as inaccessible:

It seems like different ways of accessing the shares are hit or miss. Are these bugs? Is there something I need to change in the config? Thanks!
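Since three entries under /mnt/user are symlinks, one plausible explanation (an assumption, not confirmed in this thread) is that each link points at an absolute pool path such as `/mnt/cache/appdata`, and a container or VM that only has /mnt/user mapped has nothing at that path to follow. The hypothetical helpers below list the symlinked shares in a directory and report which link targets fall outside the paths available inside a container. The function names and the example pool path are illustrative only, and the check assumes host paths are mapped into the container at the same location.

```python
import os
from pathlib import Path


def find_symlinked_shares(user_dir: str) -> dict:
    """Map each symlinked entry directly under user_dir to its (unresolved) link target."""
    links = {}
    for entry in sorted(Path(user_dir).iterdir()):
        if entry.is_symlink():
            links[entry.name] = os.readlink(entry)
    return links


def unreachable_in_container(links: dict, container_paths: list) -> list:
    """Return share names whose absolute link target lies outside every path the container sees.

    Assumes host paths are mapped at the same location inside the container,
    so a target like /mnt/cache/appdata only resolves if /mnt/cache (or a
    parent of it) is mapped too. Requires Python 3.9+ for is_relative_to.
    """
    missing = []
    for name, target in links.items():
        target_path = Path(target)
        if not any(target_path.is_relative_to(path) for path in container_paths):
            missing.append(name)
    return missing
```

For example, `unreachable_in_container(find_symlinked_shares("/mnt/user"), ["/mnt/user"])` would list the exclusive shares if their links point at a pool path that is not also mapped into the container.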

-

DiskSpeed, hdd/ssd benchmarking (unRAID 6+), version 2.10.8

stottle replied to jbartlett's topic in Docker Containers

What operations are safe/non-destructive? Are all operations in this container safe, i.e., no damage to existing content? The first post says "Disk Drives with platters are benchmarked by reading the drive at certain percentages", but "Solid State drives are benchmarked by writing large files to the device and then reading them back". I have two NVMe drives pooled as my cache, which makes me think testing them individually could corrupt the data on the pool. Is it correct to assume that benchmarking spinning disks is safe on a running server, but testing _pooled_ solid state drives is not? If only some tests are safe, what about testing controllers? Apologies if this has been answered before; I read the first 4 pages of the topic, searched for "safe", and didn't find anything that answered my question. Thanks!

-

Looks like it was a temporary issue that resolved itself. I tried a new USB drive with a clean 6.12.6 install, and that worked fine. I was installing 6.12.5 (just to check) and tried the original USB drive while that was installing; networking worked with it as well. I had tried resetting various parts of the network yesterday before posting this topic, but it hadn't helped. Today, without actively changing anything, everything was working. While I'd love to say I knew what the root cause was, I'm good with a working system. Thanks for the help!

-

I have two Unraid servers (which had been working for a while, until today); they have different IP addresses and different names. There is another topic with the same title and a solution related to NPM, which I have been messing with, but I don't see the same message in the logs, and I'm having issues even when the array is stopped.

With one server powered off and the other booted into the GUI with the array stopped, Unraid Connect shows the powered-on server (the second one shows as off). With a display/keyboard/mouse connected directly to the server, I see the GUI. But the device isn't showing up on the network, and Unraid Connect can't connect to it.

The ifconfig command shows no inet address for bond0, eth0, and eth1, and x.x.x.142 for br0. I don't know what ifconfig showed before I started having issues, though. The server shows as x.x.x.142 on the network in Google Home (I have Nest mesh Wi-Fi), which lists connected wired and Wi-Fi devices, but pinging x.x.x.142 times out, and I can't reach the GUI from the local web address either.

I didn't change any hardware configuration, but I was messing with NPM and Cloudflare tunnels while also updating both machines from 6.12.4 to 6.12.5. I was trying to change the NPM config and restart it when it failed because the IP was taken, and it looks like for a while the Unraid server (maybe?) changed its IP address from x.x.x.142 to x.x.x.2 (the static address I assigned to NPM). The other server, which isn't running NPM, seemed to have the same connection issues with 6.12.5. Please help!
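To compare ifconfig output before and after changes, a small parser can summarize which interfaces hold an IPv4 address. The sketch below is illustrative only and assumes the net-tools layout (`<iface>: flags=...` header lines followed by indented `inet <addr>` lines). In a bonded and bridged setup the address typically sits on br0 alone, so empty entries for bond0, eth0, and eth1 are not by themselves a sign of a fault.

```python
import re
from typing import Dict, Optional

# "br0: flags=4163<UP,...>  mtu 1500" starts a new interface block (net-tools format).
IFACE_HEADER = re.compile(r"^(\S+?):\s+flags=")
# "        inet 192.168.1.142  netmask ..." (IPv4 only; "inet6" lines do not match).
INET_LINE = re.compile(r"^\s+inet\s+(\d{1,3}(?:\.\d{1,3}){3})\b")


def ipv4_by_interface(ifconfig_output: str) -> Dict[str, Optional[str]]:
    """Map each interface in `ifconfig` output to its first IPv4 address, or None."""
    addresses: Dict[str, Optional[str]] = {}
    current = None
    for line in ifconfig_output.splitlines():
        header = IFACE_HEADER.match(line)
        if header:
            current = header.group(1)
            addresses[current] = None
            continue
        inet = INET_LINE.match(line)
        if inet and current is not None and addresses[current] is None:
            addresses[current] = inet.group(1)
    return addresses
```

The input can be captured with `subprocess.run(["ifconfig"], capture_output=True, text=True).stdout` and saved, so a known-good snapshot is available for comparison next time.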

-

Hi, I've got AdGuard running in a Docker container, which lets me map my domain name to an IP address (DNS rewrite is another term for this, I believe). The intent is to be able to use the same URLs from my LAN as externally, where the IP address I use would be the IP of the reverse proxy. The issue is that NginxProxyManager seems to require 8080 for HTTP and 4443 for HTTPS, not the default 80/443. This means that even if I set a static IP for the container, the URLs won't work. If I try to port forward 80/443, that conflicts with the web UI.

The solution I've been pondering is creating an additional host IP address and then port forwarding to that. Pipework seems to be an option, or manually creating an interface alias with ifconfig. Settings->Network Settings->Routing Table seems like it could help as well. Right now I'm using a Cloudflare tunnel (wildcard) and not port forwarding, but ideally the IP would be something I could port forward to if desired (which I think means needing a MAC address). Is there a recommended approach? Other alternatives to consider? TIA
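The conflict described above comes down to two services wanting the same (address, port) pair, which is why a second host IP could help. A minimal sketch of that check, using hypothetical service names and addresses, is below. It also treats a binding on `0.0.0.0` (all IPv4 addresses) as clashing with any other binding on the same port: if the web UI listens on every interface, a new alias IP would not free up port 80 by itself. Whether the web UI binds that way is not established here.

```python
from itertools import combinations

WILDCARD = "0.0.0.0"  # a listener on all IPv4 addresses


def find_port_conflicts(bindings: list) -> list:
    """Return (service_a, service_b, port) for every pair that wants the same socket.

    Each binding is (service, ip, port). Two bindings clash when the ports match
    and either the IPs match or one side listens on the wildcard address.
    """
    conflicts = []
    for (svc_a, ip_a, port_a), (svc_b, ip_b, port_b) in combinations(bindings, 2):
        same_address = ip_a == ip_b or WILDCARD in (ip_a, ip_b)
        if port_a == port_b and same_address:
            conflicts.append((svc_a, svc_b, port_a))
    return conflicts


if __name__ == "__main__":
    # Hypothetical addresses: the server's main IP and a proposed alias for NPM.
    host_ip, alias_ip = "192.168.1.10", "192.168.1.11"
    print(find_port_conflicts([("webui", host_ip, 80), ("npm", host_ip, 80)]))  # [('webui', 'npm', 80)]
    print(find_port_conflicts([("webui", host_ip, 80), ("npm", alias_ip, 80)]))  # []
```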

-

I've got Seagate SAS drives and an LSI HBA. It sounds like some combinations of Seagate drives and controllers don't work, but the UI/log seems to show my drives spinning down. However, they almost immediately spin back up. Before I installed this plugin, requesting a spin-down of one of the SAS drives appeared to be a no-op (the green circle would not change). After installation (actually: setting the spin-down delay to never, rebooting, setting it to 1 hour, rebooting, and then installing the plugin), the drive now shows a spinner, then turns gray, but quickly spins back up. The log simply says:

```
Aug 13 12:17:40 SuperTower emhttpd: spinning down /dev/sdd
Aug 13 12:17:40 SuperTower SAS Assist v2022.08.02: Spinning down device /dev/sdd
Aug 13 12:17:57 SuperTower emhttpd: read SMART /dev/sdd
```

I have turned off Docker and VMs, and there is basically no data on these drives yet, so nothing should be trying to access them other than Unraid itself. I'm on 6.12.3 if that matters. Any suggestions for fixing or troubleshooting this?
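The log lines above show the SMART read arriving 17 seconds after the spin-down. To measure that gap across a longer syslog, a small parser can pair each `emhttpd` spin-down of a device with the next `emhttpd` entry that mentions it. This is a hypothetical sketch that assumes the classic `Mon DD HH:MM:SS host process: message` layout shown in the post. It only measures timing; it does not establish whether the SMART read causes the spin-up or merely follows it.

```python
import re
from datetime import datetime

# "<Mon> <day> <HH:MM:SS> <host> <process>: <message>" (classic syslog layout).
SYSLOG = re.compile(r"^(\w{3}\s+\d{1,2} \d{2}:\d{2}:\d{2}) \S+ (.+?): (.*)$")


def _parse_time(stamp: str) -> datetime:
    # Syslog omits the year; a fixed placeholder year is fine for computing deltas.
    return datetime.strptime("2000 " + " ".join(stamp.split()), "%Y %b %d %H:%M:%S")


def spin_up_delays(log_text: str, device: str) -> list:
    """Pair each emhttpd spin-down of `device` with the next emhttpd entry mentioning it.

    Returns (seconds_elapsed, message) tuples.
    """
    results = []
    spun_down_at = None
    for line in log_text.splitlines():
        match = SYSLOG.match(line.strip())
        if not match:
            continue
        stamp, process, message = match.groups()
        if process != "emhttpd" or device not in message:
            continue
        when = _parse_time(stamp)
        if message.startswith("spinning down"):
            spun_down_at = when
        elif spun_down_at is not None:
            results.append(((when - spun_down_at).total_seconds(), message))
            spun_down_at = None
    return results
```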

-

1) USB port: it is in the same 2.0 port it has been in for a very long time, with no issues until now.
2) Re-format: what is on the flash drive vs. elsewhere on the system? Are the flash contents backed up somewhere so I can restore them if I re-format the flash drive? FWIW, the UI came up (although in what looked like a daytime theme instead of the usual nighttime theme), and I could start the array, so most functionality seems to be working. The root of this question is if/how I can keep my system intact if I re-format the flash drive.
3) Replace: same as #2, but the root of the question is if/how I can keep my system intact if I replace the flash drive.

I appreciate the help.

-

I upgraded from 6.9.3 to 6.11.5 yesterday. Everything I tested (mostly Plex and a Windows VM) looked to be working fine. Then I started getting the subject message in the UI. The requested diagnostics are attached. If it matters: when I started getting this error, I was messing with a Nextcloud Docker container that I hadn't touched in a while, and I had run Settings->Management Access->Provision to try to get rid of ssh warnings when accessing the UI. What are the recommended steps here? TIA

tower2-diagnostics-20221225-1146.zip

-

I've followed Spaceinvaderone's video for setting up SWAG, but the container is giving an error:

```
Requesting a certificate for <mySubDomain>.duckdns.org
Certbot failed to authenticate some domains (authenticator: standalone). The Certificate Authority reported these problems:
Domain: <mySubDomain>.duckdns.org
Type: unauthorized
Detail: Invalid response from http://<mySubDomain>.duckdns.org/.well-known/acme-challenge/U9o-N70woR3z5jnFl0cEVPWd711PJT8SAqRPiZLYAXc [<My IP>]: "<html>\r\n<head><title>404 Not Found</title></head>\r\n<body>\r\n<center><h1>404 Not Found</h1></center>\r\n<hr><center>nginx</center>\r\n"

Hint: The Certificate Authority failed to download the challenge files from the temporary standalone webserver started by Certbot on port 80. Ensure that the listed domains point to this machine and that it can accept inbound connections from the internet.

Some challenges have failed.
```

I have two gateways, AT&T for the ISP and a Google WiFi mesh, but I believe I have the port forwarding set up correctly, for two reasons:

1) I can see my Plex server, so the two-hop forwarding to that container is working.
2) I was getting timeout errors in the log, but those have now changed to this unauthorized/404 error.

For SWAG, I have AT&T forwarding 80 and 443 directly (the only option I saw), and Google changing the ports to 180 and 1443. SWAG is set up for 180 and 1443. I'm trying to get HTTP auth working, as that seemed like the best place to start; I need to understand the other options better, too. Any tips for debugging?
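One way to sanity-check a two-hop setup like this is to trace a port through each forwarding rule and confirm it ends up on the port SWAG listens on inside the container. The sketch below models each hop as a dict. The first two hops mirror the post (AT&T passing 80/443 through, Google Wi-Fi mapping them to 180/1443); the final Docker mapping of host 180/1443 to container 80/443 is an assumption about the template. The ACME HTTP-01 challenge always arrives on external port 80, so that is the port that must resolve. If any hop lands on a different host port, some other web server may answer, which would produce a 404 rather than a timeout.

```python
from typing import Dict, List, Optional


def resolve_forwarding(port: int, hops: List[Dict[int, int]]) -> Optional[int]:
    """Follow a port through successive forwarding rules; None if some hop has no rule for it."""
    for rules in hops:
        if port not in rules:
            return None
        port = rules[port]
    return port


# Rules as described in the post; the last hop (Docker host port -> SWAG container port)
# is an assumption about the container template.
ATT_GATEWAY = {80: 80, 443: 443}
GOOGLE_WIFI = {80: 180, 443: 1443}
SWAG_CONTAINER = {180: 80, 1443: 443}

if __name__ == "__main__":
    hops = [ATT_GATEWAY, GOOGLE_WIFI, SWAG_CONTAINER]
    # The HTTP-01 challenge always arrives on external port 80.
    print("external 80 ->", resolve_forwarding(80, hops))
    print("external 443 ->", resolve_forwarding(443, hops))
```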

-

Thanks for all of the work here. I've got Nextcloud/letsencrypt working with DuckDNS, which I wouldn't have tried without the support and tutorials here. One annoyance: is there an easy way to get unset URLs (https://mydomain.duckdns.org/random_garbage) to return a 404 instead of the default "Welcome to our server" page? Google searches for 404 and "welcome to our server" don't help...

-

VM won't start after update to 6.3.0 and restart

stottle replied to stottle's topic in VM Engine (KVM)

Balance failed, and there are other errors. Here's a snippet:

```
Feb 15 18:30:52 Tower2 emhttp: shcmd (147): set -o pipefail ; /sbin/btrfs balance start -dconvert=raid1 -mconvert=raid1 /mnt/cache |& logger &
Feb 15 18:30:52 Tower2 emhttp: shcmd (148): sync
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1937353211904 flags 1
Feb 15 18:30:52 Tower2 emhttp: shcmd (149): mkdir /mnt/user0
Feb 15 18:30:52 Tower2 emhttp: shcmd (150): /usr/local/sbin/shfs /mnt/user0 -disks 62 -o noatime,big_writes,allow_other |& logger
Feb 15 18:30:52 Tower2 emhttp: shcmd (151): mkdir /mnt/user
Feb 15 18:30:52 Tower2 emhttp: shcmd (152): /usr/local/sbin/shfs /mnt/user -disks 63 2048000000 -o noatime,big_writes,allow_other -o remember=0 |& logger
Feb 15 18:30:52 Tower2 emhttp: shcmd (153): cat - > /boot/config/plugins/dynamix/mover.cron <<< "# Generated mover schedule:#01240 3 * * * /usr/local/sbin/mover |& logger#012"
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1936279470080 flags 1
Feb 15 18:30:52 Tower2 emhttp: shcmd (154): /usr/local/sbin/update_cron &> /dev/null
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1935205728256 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1934131986432 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1933058244608 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1931984502784 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1930910760960 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1929837019136 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1928763277312 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1927689535488 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1926615793664 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1925542051840 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1924468310016 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1923394568192 flags 1
Feb 15 18:30:52 Tower2 emhttp: Starting services...
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1922320826368 flags 1
Feb 15 18:30:52 Tower2 kernel: BTRFS info (device sdb1): relocating block group 1921247084544 flags 1
Feb 15 18:30:52 Tower2 kernel: ata8.00: exception Emask 0x10 SAct 0x70000 SErr 0x400000 action 0x6 frozen
Feb 15 18:30:52 Tower2 kernel: ata8.00: irq_stat 0x08000000, interface fatal error
```

Diagnostics attached: tower2-diagnostics-20170216-1754.zip

-

VM won't start after update to 6.3.0 and restart

stottle replied to stottle's topic in VM Engine (KVM)

Something seems to be wrong. The GUI is running very slowly (several seconds to load each page), and I've clicked "Balance" twice without it changing anything on the screen. In other words, it still looks like the image I sent previously and doesn't indicate that a balance operation is running. I'm seeing the following repeated in the logs:

```
Feb 15 18:57:41 Tower2 root: ERROR: unable to resize '/var/lib/docker': Read-only file system
Feb 15 18:57:41 Tower2 root: Resize '/var/lib/docker' of 'max'
Feb 15 18:57:41 Tower2 emhttp: shcmd (461): /etc/rc.d/rc.docker start |& logger
Feb 15 18:57:41 Tower2 root: starting docker ...
Feb 15 18:57:51 Tower2 emhttp: shcmd (463): umount /var/lib/docker |& logger
Feb 15 18:58:09 Tower2 php: /usr/local/emhttp/plugins/dynamix/scripts/btrfs_balance 'start' '/mnt/cache' '-dconvert=raid1 -mconvert=raid1'
Feb 15 18:58:09 Tower2 emhttp: shcmd (475): set -o pipefail ; /usr/local/sbin/mount_image '/mnt/cache/docker.img' /var/lib/docker 20 |& logger
Feb 15 18:58:09 Tower2 root: truncate: cannot open '/mnt/cache/docker.img' for writing: Read-only file system
Feb 15 18:58:09 Tower2 kernel: BTRFS info (device loop1): disk space caching is enabled
Feb 15 18:58:09 Tower2 kernel: BTRFS info (device loop1): has skinny extents
```

-

VM won't start after update to 6.3.0 and restart

stottle replied to stottle's topic in VM Engine (KVM)

It doesn't look like a balance started automatically. Under Main->Cache Devices, both SSDs were listed. When I ran blkdiscard and refreshed, Cache 2's icon turned from green to blue, so I started the array. Now the cache details look like the attached image, and no balance seems to be running. Diagnostics are attached as well: tower2-diagnostics-20170215-1843.zip

-

VM won't start after update to 6.3.0 and restart

stottle replied to stottle's topic in VM Engine (KVM)

@johnnie.black, thanks for your help and patience. I've updated to 6.3.1, powered down, disconnected cache2, and restarted. Then I started the array. At that point, the cache mounts to /mnt/cache. So far so good. Should I just add the second drive and then balance, or do you have other suggestions in this case? FYI:

```
root@Tower2:~# btrfs dev stats /mnt/cache
[devid:1].write_io_errs 441396
[devid:1].read_io_errs 407459
[devid:1].flush_io_errs 2047
[devid:1].corruption_errs 0
[devid:1].generation_errs 0
[/dev/sdb1].write_io_errs 0
[/dev/sdb1].read_io_errs 0
[/dev/sdb1].flush_io_errs 0
[/dev/sdb1].corruption_errs 0
[/dev/sdb1].generation_errs 0
```

I assume the errors are leftovers, as I didn't zero the stats before I removed the second cache drive. NB: the serial matches cache1, the drive that wasn't seeing errors (S21HNXAGC11924P).

-
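For `btrfs dev stats` output like the one in the reply above, a small parser makes it easy to see which device has nonzero counters. The counters are cumulative until reset (for example with `btrfs device stats -z`), which fits the assumption that the `[devid:1]` errors are leftovers. The helper names below are hypothetical, and the parser assumes the `[device].counter value` layout shown in the post.

```python
import re
from typing import Dict

# One counter per line, e.g. "[/dev/sdb1].write_io_errs    0" or "[devid:1].read_io_errs 407459".
STAT_LINE = re.compile(r"^\[(?P<device>[^\]]+)\]\.(?P<counter>\w+)\s+(?P<value>\d+)$")


def parse_dev_stats(output: str) -> Dict[str, Dict[str, int]]:
    """Group `btrfs device stats` counters by device."""
    stats: Dict[str, Dict[str, int]] = {}
    for line in output.splitlines():
        match = STAT_LINE.match(line.strip())
        if match:
            stats.setdefault(match["device"], {})[match["counter"]] = int(match["value"])
    return stats


def devices_with_errors(stats: Dict[str, Dict[str, int]]) -> Dict[str, Dict[str, int]]:
    """Keep only devices with at least one nonzero counter, and only those counters."""
    return {
        device: {name: value for name, value in counters.items() if value}
        for device, counters in stats.items()
        if any(counters.values())
    }
```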

VM won't start after update to 6.3.0 and restart

stottle replied to stottle's topic in VM Engine (KVM)

<swearing> So I tried changing the SATA port for the problematic drive on my mobo. The array and cache drives looked OK on reboot, so I tried stats and got:

```
root@Tower2:~# btrfs dev stats /mnt/cache
ERROR: cannot check /mnt/cache: No such file or directory
ERROR: '/mnt/cache' is not a mounted btrfs device
```

Hmm, OK. Maybe the cache isn't available until I start the array, so I started the array. It now says "Unmountable disk present: Cache • Samsung_SSD_850_EVO_500GB_S21HNXAGC11924P (sdb)". I immediately stopped the array. Apologies, but I don't want to touch anything until I get some advice. Diagnostics attached. Main->Cache Devices shows both drives. How do I recover the cache pool? Note: it is the good drive that isn't being recognized.

tower2-diagnostics-20170213-1908.zip