steini84


Posts posted by steini84


Updated for unRAID 6.8.3 (kernel 4.19.107)

-


Yeah it's 0.19.1

-

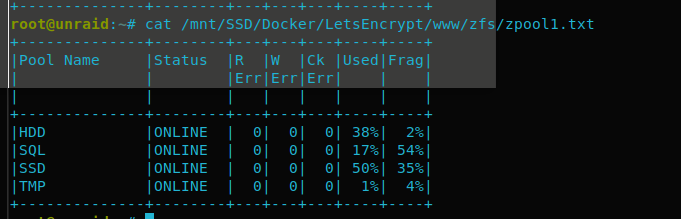

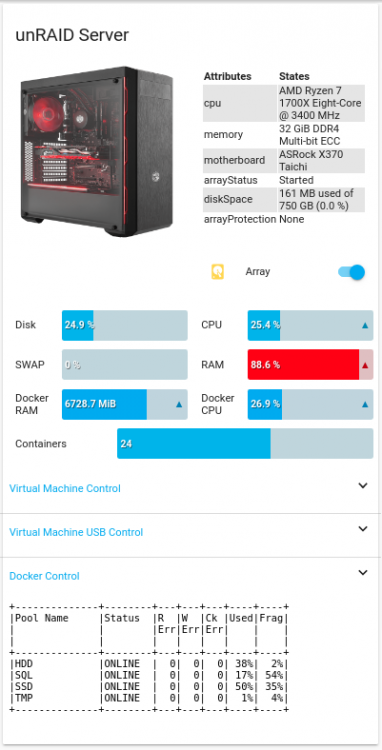

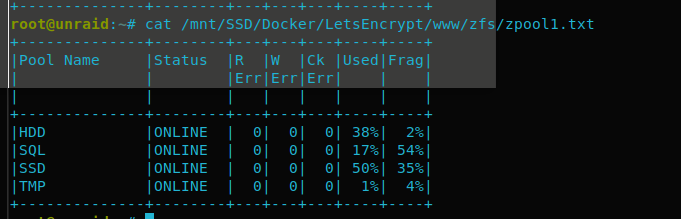

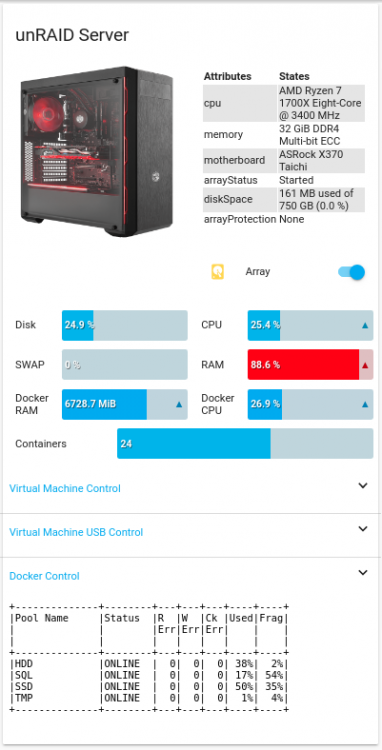

Here is an update: a script that gives an overview of your pools. I run it on a cron schedule with the User Scripts plugin and write the output to an nginx web server; Home Assistant grabs that data and displays it:

    # Paths: temporary file, and the file served by the nginx web server
    logfile="/tmp/zpool_report.tmp"
    ZPOOL="/mnt/SSD/Docker/LetsEncrypt/www/zfs/zpool.txt"
    pools=$(zpool list -H -o name)
    # Capacity thresholds (percent) and the symbols used to flag a pool
    usedWarn=75
    usedCrit=90
    warnSymbol="?"
    critSymbol="!"

    (
      echo "+--------------+--------+---+---+---+----+----+"
      echo "|Pool Name     |Status  |R  |W  |Ck |Used|Frag|"
      echo "|              |        |Err|Err|Err|    |    |"
      echo "|              |        |   |   |   |    |    |"
      echo "+--------------+--------+---+---+---+----+----+"
    ) > "${logfile}"

    for pool in $pools; do
      frag="$(zpool list -H -o frag "$pool")"
      status="$(zpool list -H -o health "$pool")"
      # Rows of the status table: NAME STATE READ WRITE CKSUM
      errors="$(zpool status "$pool" | grep -E "(ONLINE|DEGRADED|FAULTED|UNAVAIL|REMOVED)[[:space:]]+[0-9]+")"

      # Sum each error column; an abbreviated value such as "1.2K" means more than 999
      readErrors=0
      for err in $(echo "$errors" | awk '{print $3}'); do
        if echo "$err" | grep -E -q "[^0-9]+"; then
          readErrors=1000
          break
        fi
        readErrors=$((readErrors + err))
      done
      writeErrors=0
      for err in $(echo "$errors" | awk '{print $4}'); do
        if echo "$err" | grep -E -q "[^0-9]+"; then
          writeErrors=1000
          break
        fi
        writeErrors=$((writeErrors + err))
      done
      cksumErrors=0
      for err in $(echo "$errors" | awk '{print $5}'); do
        if echo "$err" | grep -E -q "[^0-9]+"; then
          cksumErrors=1000
          break
        fi
        cksumErrors=$((cksumErrors + err))
      done
      if [ "$readErrors" -gt 999 ]; then readErrors=">1K"; fi
      if [ "$writeErrors" -gt 999 ]; then writeErrors=">1K"; fi
      if [ "$cksumErrors" -gt 999 ]; then cksumErrors=">1K"; fi

      used="$(zpool list -H -p -o capacity "$pool")"

      if [ "$status" = "FAULTED" ] \
         || [ "$used" -gt "$usedCrit" ]
      then
        symbol="$critSymbol"
      elif [ "$status" != "ONLINE" ] \
         || [ "$readErrors" != "0" ] \
         || [ "$writeErrors" != "0" ] \
         || [ "$cksumErrors" != "0" ] \
         || [ "$used" -gt "$usedWarn" ]
      then
        symbol="$warnSymbol"
      else
        symbol=" "
      fi

      printf "|%-12s %1s|%-8s|%3s|%3s|%3s|%3s%%|%4s|\n" \
        "$pool" "$symbol" "$status" "$readErrors" "$writeErrors" "$cksumErrors" \
        "$used" "$frag" >> "${logfile}"
    done

    echo "+--------------+--------+---+---+---+----+----+" >> "${logfile}"
    cat "${logfile}" > "$ZPOOL"

Cool integration. Custom card for Home Assistant?

-

1 hour ago, Marshalleq said:

@steini84 Also, there is yet another version of unRAID out with a number of security fixes.

Upgraded to zfs-0.8.3

Updated for unRAID 6.8.2 (kernel 4.19.98)


-

Updated for unRAID 6.8.1 (kernel 4.19.94)

-

7 minutes ago, Yros said:

There's no absolute benefit to a GUI other than being able to monitor the ZFS pool (in real time?) and maybe some additional perks. Either way, in my humble opinion, it's better to have a separate space for the zpool(s) rather than having them aggregated with the Unassigned Devices plugin and risking some mishap down the line due to an erroneous mount in unRAID or something.

As for the script, I'll have a look, though I don't promise anything (my level is basically first-year IT college programming... I was more of a design guy at the time), and I'm still trying to figure out why my GPU won't pass through @.@

I use check_mk to monitor my pools and it lets me know if there is a problem:
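For anyone wanting something similar: Checkmk can run "local checks", small scripts that print one status line per service in the form `<state> <service> <perfdata> <text>`. The function below is a minimal sketch under that assumption, not necessarily the check used here. It reads `zpool list` in scripted mode and maps each pool to OK/WARN/CRIT, reusing the 75%/90% capacity thresholds from the report script above; the function name and service naming are hypothetical.

```shell
#!/bin/bash
# Hypothetical Checkmk local check for ZFS pools (a sketch, not a tested plugin).
# Output per pool: <state> <service> <perfdata> <text>
# state: 0=OK 1=WARN 2=CRIT; perfdata is capacity=value;warn;crit
zfs_pool_local_check() {
  local warn="${1:-75}" crit="${2:-90}"   # capacity thresholds in percent
  local tab name health cap state
  tab="$(printf '\t')"
  # -H: no header, tab-separated fields; -p: capacity as a plain number (no % sign)
  zpool list -H -p -o name,health,capacity |
    while IFS="$tab" read -r name health cap; do
      case "$health" in
        ONLINE)   state=0 ;;
        DEGRADED) state=1 ;;
        *)        state=2 ;;  # FAULTED, UNAVAIL, OFFLINE, REMOVED, ...
      esac
      # Capacity can raise the state but never lowers it
      if [ "$cap" -gt "$crit" ]; then
        state=2
      elif [ "$cap" -gt "$warn" ] && [ "$state" -eq 0 ]; then
        state=1
      fi
      printf '%d ZFS_pool_%s capacity=%d;%d;%d %s is %s, %d%% used\n' \
        "$state" "$name" "$cap" "$warn" "$crit" "$name" "$health" "$cap"
    done
}
```

To use it, the function would go into an executable file in the agent's local-check directory (commonly `/usr/lib/check_mk_agent/local/` on Linux) followed by a line that calls `zfs_pool_local_check`.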

-

9 minutes ago, Marshalleq said:

This is one place I'd somewhat disagree. I can use it fine on the command line, but I think a GUI would also be fantastic. @steini84 did you mean to say we don't need to update for every build of ZFS, or don't need to update it for every build of unRAID? Thanks.

You don't need to update the plugin every time, but I still have to push new builds for new kernels.

I changed the wording in the post a little to explain it better... but what I meant is that I don't see any benefits for me, so I won't take the time to try to put something together.

-

Updated for 6.8.1-rc1

The plugin was redesigned so that you do not need to update it for every new build of ZFS. (ZFS still needs to be built for every new kernel; you just don't have to upgrade the plugin itself.)

I have done some testing (using this small script), but please let me know if something breaks for you during the install. You can still get the old version here if you need it: old version

I am open for ideas on the best way for users to know if the latest build is available before an update, but for now I will continue to build and announce it in this thread.
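Because the ZFS module has to be built for the exact running kernel, one quick check after an unRAID upgrade is to compare `uname -r` with the version reported by the loaded module in `/sys/module/zfs/version` (that file only exists while the module is loaded). This is a minimal sketch and not part of the plugin; the function name is hypothetical.

```shell
#!/bin/bash
# Sketch: report whether a ZFS module is loaded for the running kernel.
# The optional argument overrides the sysfs path (handy for testing).
zfs_module_report() {
  local version_file="${1:-/sys/module/zfs/version}"
  local kernel
  kernel="$(uname -r)"
  if [ -r "$version_file" ]; then
    echo "kernel $kernel: zfs module $(cat "$version_file") loaded"
  else
    # No module loaded: possibly no ZFS build exists yet for this kernel
    echo "kernel $kernel: zfs module NOT loaded" >&2
    return 1
  fi
}
```

Running `zfs_module_report` right after a reboot into a new unRAID release would show immediately whether a matching build was picked up.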

@Yros I don't personally see the benefit of a GUI for my use case (then I would just move back to Napp-IT or FreeNAS for my ZFS), but if you want to dust off your programming skills, the install script could use some polishing. The GitHub repo is here: https://github.com/Steini1984/unRAID6-ZFS so please take a look.

-

To quote the creators of the program: “If you have a question, head over to the ZnapZend section on serverfault.com and tag your question with 'znapzend'.”

http://serverfault.com/questions/tagged/znapzend

-

Thanks! That did it. It is now mounted. Do you have any suggestions on how to copy the data off this drive?

You can use cp or rsync, or if you like a GUI you can use mc (Midnight Commander).
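A rough sketch of the rsync route, assuming the pool is imported and mounted at its default mountpoint `/<poolname>` (for example `/Red8tb`; `zfs get mountpoint Red8tb` shows the real path) and that the destination is an unRAID user share. The function name and paths are illustrative only; it falls back to `cp -a` when rsync is not installed.

```shell
#!/bin/bash
# Sketch: copy the contents of a mounted pool (or any directory) to a share.
copy_pool_data() {
  local src="$1" dst="$2"
  if [ ! -d "$src" ]; then
    echo "source $src is not a directory" >&2
    return 1
  fi
  mkdir -p "$dst"
  if command -v rsync >/dev/null 2>&1; then
    # -a keeps permissions, times and symlinks; the trailing slash copies
    # the contents of src rather than src itself
    rsync -a "$src"/ "$dst"/
  else
    cp -a "$src"/. "$dst"/
  fi
}
```

Example: `copy_pool_data /Red8tb /mnt/user/media`. With rsync, re-running the same command after an interruption only transfers files that are missing or changed; the `cp` fallback copies everything again.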

-

I'm moving from FreeNAS to unRAID. I currently have unRAID up and running with three 8TB drives (one used for parity). I have three drives from my FreeNAS ZFS system (8TB, 6TB, and 3TB) with data on them that I would like to move to my new unRAID pool. All three drives have data on them separately and were not part of a ZFS pool. I've tried to piece together a solution from previous posts, but I'm stuck. I have added the ZFS and Unassigned Devices plugins, and have tried to import one of my ZFS discs using the terminal in unRAID and the command "zpool import -f". I get this:

       pool: Red8tb
         id: 14029892406868332685
      state: ONLINE
     status: The pool was last accessed by another system.
     action: The pool can be imported using its name or numeric identifier and
            the '-f' flag.
        see: http://zfsonlinux.org/msg/ZFS-8000-EY
     config:

            Red8tb      ONLINE
              sde       ONLINE

I'm not sure if the disc is successfully mounted yet, and if it is I'm not sure how to access its data. Thanks for any help you can give.

What is the output from zpool list?

You should be able to force the import with zpool import -f Red8tb
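Putting those two suggestions together: a small sketch, assuming the pool name from the output above, that force-imports the pool and then lists every dataset with its mountpoint, which is where the data can be reached. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: import a pool last used by another system (e.g. FreeNAS)
# and show where its datasets are mounted.
import_foreign_pool() {
  local pool="$1"
  # -f is required because the pool was last accessed by another host (ZFS-8000-EY)
  zpool import -f "$pool" || return 1
  zpool list "$pool"
  # name<TAB>mountpoint for the pool and all child datasets
  zfs list -H -r -o name,mountpoint "$pool"
}
```

Example: `import_foreign_pool Red8tb`, then browse or copy from the mountpoint printed in the last column.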

-


How is the stability of ZFS under unRAID?

How about CIFS speeds, using 10GbE?

I was just about to move away from unRAID to FreeNAS for the striped array speed increases when I noticed this.

I really like the unRAID ecosystem, but the array write speed is a killer for me; using striped disks via ZFS might plug that gap!

Stability has been great for me; I have been running the same pool for 4 years.

I can’t speak to 10GbE speeds since I’m only on 1GbE, but it’s just straight-up ZFS on Linux, so it’s really a Linux vs FreeBSD question.

ZFS on unRAID does not work for array drives, so if you want to go all in on ZFS, IMO FreeNAS makes more sense.

Is a cache drive not enough for you (do all the slow writes during off hours)?

-

Hmm

Can you send me the output from these commands:

    cat /var/log/syslog | head
    cat /var/log/znapzend.log | head
    ls -la /boot/config/plugins/unRAID6-ZnapZend/auto_boot_on
    ps aux | grep -i znapzend

-

Updated for 6.8 final

-


Okay, ignore my previous post; I gave up on 9p. Someone on the Ubuntu forums showed me a guide for mounting NFS shares in fstab, and I was able to get that working.

I have a new question now. How do I get ZFS to automatically publish the ZFS shares on boot and on array start? My VMs seem to start up before the shares are available, which doesn't make them happy. If I enter "zfs share -a" in the unRAID console and restart the VMs, they all work fine.

I've tried:

- using the User Scripts plugin to run "zfs share -a" on array start, but it either doesn't work or runs too late
- adding "zfs share -a" to /boot/config/go (not 100% sure I did this correctly, though)
- manually adding the shares to /etc/exports, but they got overwritten the next time I restarted unRAID

I also did not figure out the 9p problem, but it's great that you have figured out NFS.

You could try adding

    zfs share -a

after zpool import -a in your plugin file, and then restart. You can edit the plugin file with:

    nano /boot/config/plugins/unRAID6-ZFS.plg

If that works for you, I could add it to the plugin.

...or you could try to delay the startup of the VMs until your command has run in the go file, or even start the VMs from the go file?

Here is some discussion about this topic

https://forums.unraid.net/topic/78454-boot-orderpriority-for-vms-and-dockers/
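The "wait until the pools are there, then share" idea could be sketched as a function for the go file or a User Scripts job. It is an untested assumption that a non-empty `zpool list` is a good enough readiness signal, and the 60-second default timeout is arbitrary; the function name is hypothetical.

```shell
#!/bin/bash
# Sketch: wait until at least one pool is imported, then publish ZFS shares.
zfs_share_when_ready() {
  local timeout="${1:-60}" waited=0
  # zpool list -H -o name prints nothing until a pool has been imported
  while [ -z "$(zpool list -H -o name 2>/dev/null)" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "no ZFS pool imported after ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
  zfs share -a
}
```

In /boot/config/go it would probably be best started in the background, e.g. `zfs_share_when_ready 120 &`, so it cannot hold up the rest of the boot.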

-

Updated to RC9

-

Updated for RC8

-

11 hours ago, Dtrain said:

Did you find a solution for this? I am in the same boat...

2 hours ago, Marshalleq said:

I'm well experienced in SMB, though not so much in ZFS. However, I did this, and the first trick was that all the shares are set in the SMB extras portion of the SMB icon within unRAID. I didn't actually need to do anything with ZFS. There are a few enhancements you could make with ZFS / SMB if you read the SMB manual, but I don't think any of them are mandatory. I think the misconception is that ZFS does the SMB sharing; it doesn't, it just has the capability to work with it built in. At least that's how I understand it.

If I remember correctly, ZFS does not include an SMB server; it relies on an SMB configuration that is written to work with the ZFS tools. I want to steal this sentence: "let ZFS take care of the file system and let Samba take care of SMB sharing."

Here you can find a great guide for SMB: https://forum.level1techs.com/t/zfs-on-unraid-lets-do-it-bonus-shadowcopy-setup-guide-project/148764

-

On 11/25/2019 at 10:36 PM, Zoroeyes said:

That sounds great steini84, appreciate it and will look forward to it.

Finally found some time and rewrote the original post with a small guide. Hope that helps

-

I should be able to put something together for you next weekend if someone has not already done that by then

-

Excellent, thanks steini84, I didn’t realise that had been implemented yet. I had read about it being available in some implementations, but didn’t for a second think it’d be the one I actually wanted to use!

So with auto trim, is it ‘set and forget’? No periodic commands etc?

Yes that is the idea. If you have an hour you can check this out:

-

RC7 is now out (kernel 5.3.12)

Updated for RC7

-

Apologies if this is covered elsewhere, but if I wanted to use this to create a 4x NVMe vdev with 1 disk of parity (like RAID 5), how would I deal with TRIM, and would this become an issue?

You can turn on autotrim:

    zpool set autotrim=on poolname
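For the 4x NVMe case specifically, autotrim can also be set when the pool is created. A minimal sketch, assuming ZFS 0.8 or newer (where the `autotrim` pool property was introduced); the pool name, device paths and function names are placeholders.

```shell
#!/bin/bash
# Sketch: single-parity raidz1 pool (similar to RAID 5) with autotrim on from the start,
# plus a helper that enables it on an existing pool and prints the result.
create_nvme_raidz1() {
  local pool="$1"
  shift
  # -o sets a pool property at creation time
  zpool create -o autotrim=on "$pool" raidz1 "$@"
}

enable_autotrim() {
  local pool="$1"
  zpool set autotrim=on "$pool" || return 1
  # print only the property value, e.g. "on"
  zpool get -H -o value autotrim "$pool"
}
```

Example: `create_nvme_raidz1 nvme /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1 /dev/nvme3n1`. If you ever want a one-off TRIM on top of that, `zpool trim poolname` starts one and `zpool status -t poolname` shows its progress per device.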

-

ZFS plugin for unRAID

in Plugin Support


Updated for unRAID 6.9.0-beta1 (kernel 5.5.8)