Posts: 251

About Tophicles

- Birthday: 05/04/1973
- Gender: Male
- URL: http://thelocalstream.ca
- Location: Windsor, ON, Canada

Tophicles's Achievements

Contributor (5/14)

Reputation: 2

-

-

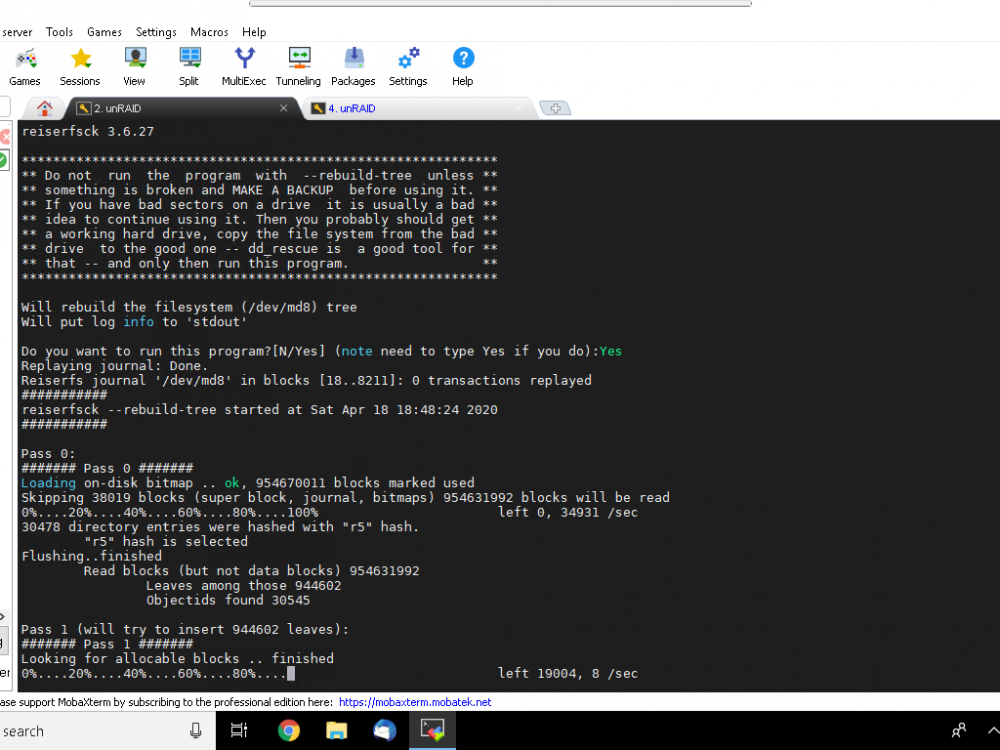

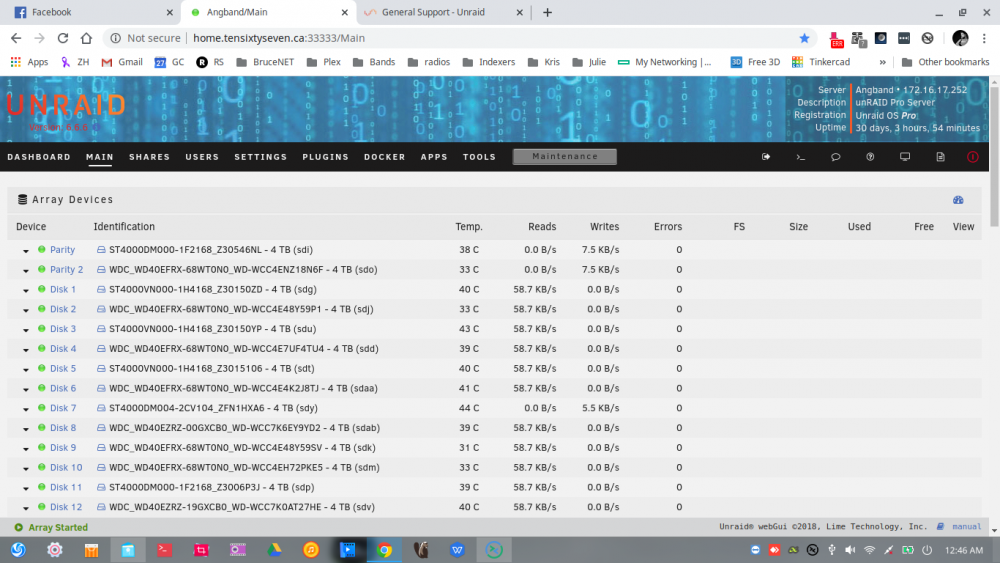

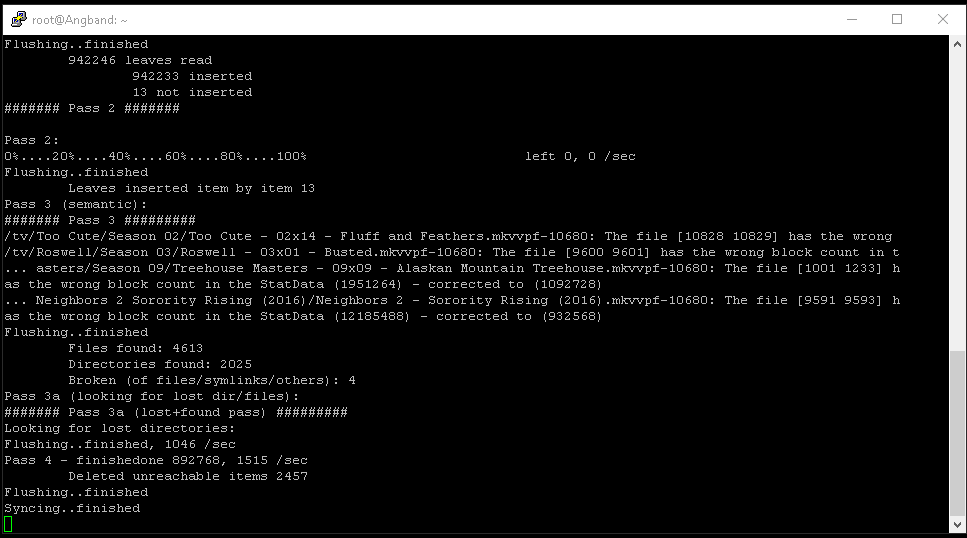

I have a failed disk (disk 16, not that it matters) that crapped out during a reiserfsck. I know, I know, I should have my disks on XFS. Anyway, it was going through a reiserfsck --rebuild-tree when the system crashed (power outage past UPS runtime). Afterward it showed as "unmountable, no file system", so to be safe I swapped it with a new disk and let it go through the disk rebuild. Now the new disk is also showing as "unmountable, no file system". I suppose the rebuild transferred the wrecked file system over to the new drive. I'd rather avoid taking the entire array offline to scan/fix the disk (if that will even work) and, when trolling the intertubes, I saw someone saying you don't need to unmount the entire array, just the problematic drive. Is this correct?

-

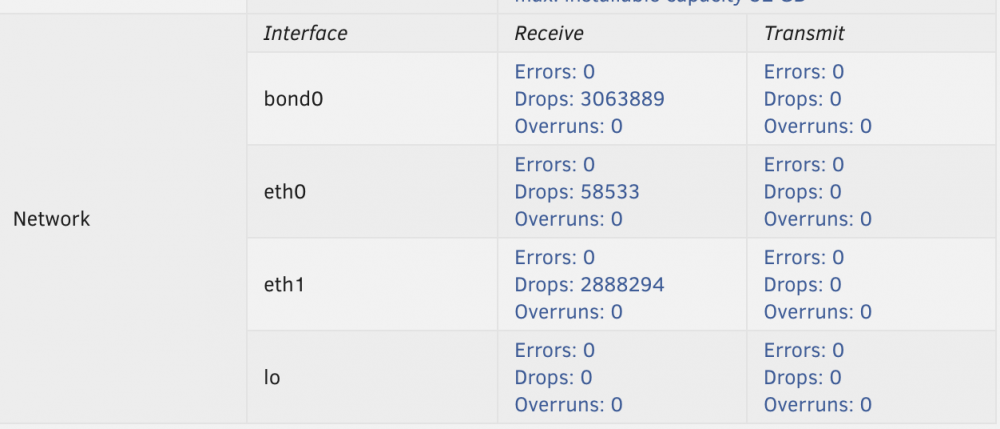

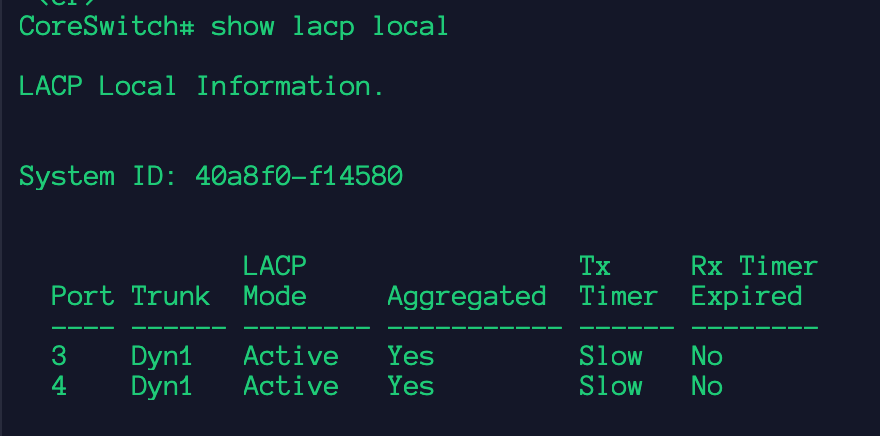

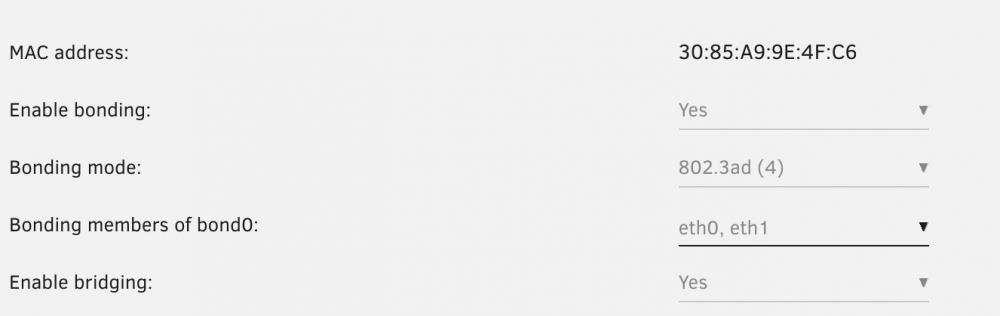

Just wondering if this is "normal" behaviour? I see many conflicting suggestions (using Google-fu), so I thought I should ask the gurus. Screenshots of the Dashboard report, the LACP config on the Aruba switch, and the bond0 config in unRAID:

-

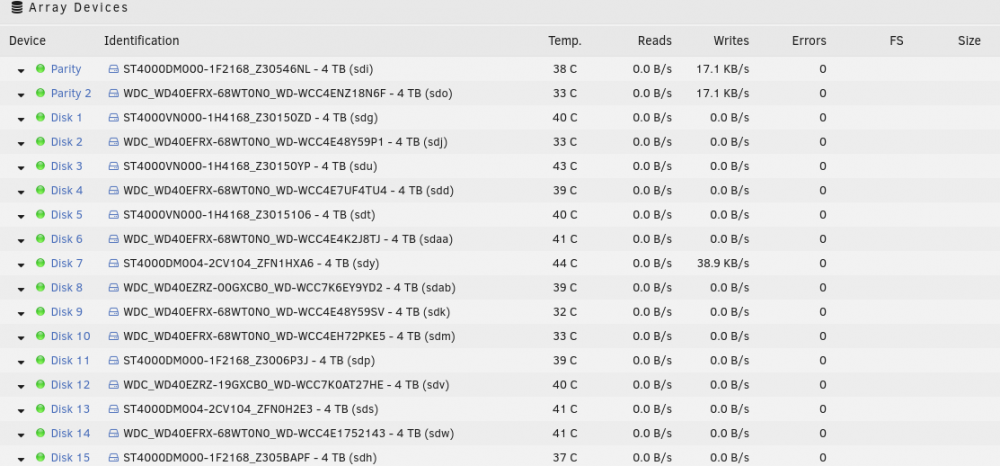

So, I've realized that I need to convert my disks from RFS to XFS. The problem is, I have dual parity and no "free" slots on my controllers. My understanding is that I'll have to break the dual parity anyway (if I'm reading the wiki correctly), so should I un-assign one of my parity drives and use it as the "transfer" slot/drive? For example, un-assign the second parity drive, then mount that drive as a "new" data drive and follow the rsync procedure? Frankly, I'm worried about losing data. It's not the end of the world, but why rebuild if I don't have to, right? On the other hand, I do have another controller. Should I install it just for the purposes of drive migration? Do I still lose the dual parity?

-

Understood... I'm just a bit nervous about the "conversion" process... I wish there were a way to just swap a data drive and have it rebuild as the new FS (I know, not possible). The other thing that would be amazing (again, probably not possible) would be to have the disks in "error" complete their processes WHILE the contents are emulated, then have the corrected data updated somehow afterward. Again, I know it's not possible, just the extremely exhausted, delirious ramblings of a worried mind.

-

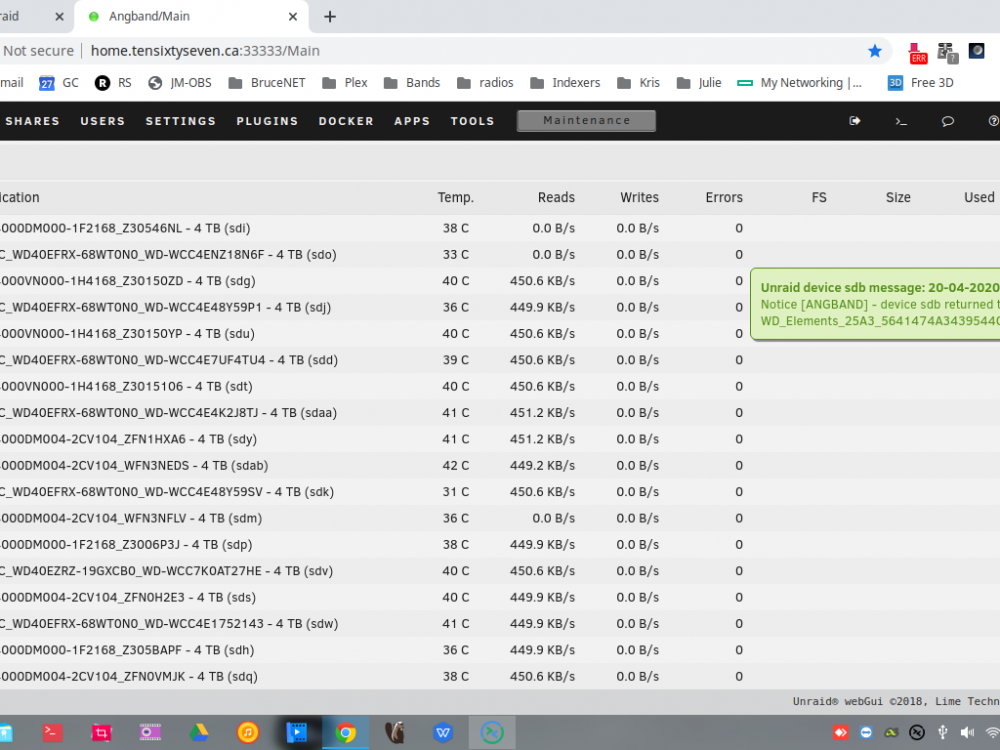

So I'm running a reiserfsck --rebuild-tree on two data disks. Just wondering if anyone knows why it always sloooooooooows to a crawl at the end? There are no errors in the log, the /s rate just drops. It starts at 36/s or so in stage two and then drops to 8/s (see image). There is, obviously, disk activity in the GUI, but it's taking DAYS to complete the check, during which users get upset because the shares are offline. I know ReiserFS is deprecated and I should be moving to XFS, but the process for that scares the crap outta me. Anyway, just wondering if anyone has a guess why this occurs. Is it a configuration issue on my end? Hardware? Or is it "just the way" it is? Please don't take this as a criticism or complaint, just curiosity. angband-diagnostics-20200420-0731.zip

-

Thanks for the reply.... it eventually DID finish, just took 3.5 days... I think I'll replace the disk? I dunno.... very weird.

-

So, I had a disk go read-only (not marked offline and no errors in the log). I took the array into Maintenance Mode three days ago and ran reiserfsck --check, and it told me to rebuild the tree. So I did. It ran (took forever) and then it finished with "Flushing finished.... Syncing finished... " Since then it's been sitting at that window, not "Completed" (i.e., no prompt, still running), and the dashboard shows the disk being written to (along with parity) for the last two days. Le ugh. Do I just let it limp along? Diagnostics attached. angband-diagnostics-20200401-2323.zip

-

100% agreed. I will have to investigate how to do that. I think I'm out of ports so I'll have to figure out how to migrate data.

-

Hmm, interesting... that reboot was because I replaced the Marvell controller with an LSI, and after MONTHS of disk8 saying it's a read-only file system, it now does not. I can make/remove dirs without issue. So, the file system check does require the array to be offline though, correct? I'll have to do that when users are not using it, since it takes like 20+ hours to test.

-

Here are the unRAID diagnostics... angband-diagnostics-20200208-0313.zip

-

Yes, the disk has been disabled. Now, the thing to note here is that I was using Marvell controllers (now switched to LSI), so there have been "bad" disks before that weren't bad. I realize rebuilding won't fix the corruption; that's why I was thinking that the rebuild would simply put the "bad" filesystem on the new drive. I'll post the diags....

-

... but I'll ask anyway. I have a disk that's been marked bad, and it's in "read only" for that one disk. If I pull it and put in a new disk, I'm under the impression that the data rebuild will write back the read-only filesystem, correct? So I have to pull the array offline while the disk scans (approx 20 hours, ugh). So would the correct procedure be:

1) stop the array, run reiserfsck on the disk (/dev/md8)
2) correct the file system errors
3) replace the disk

OR

1) replace the disk
2) have the data rebuild commence and complete
3) run reiserfsck on the new disk to remove the read-only file system?

Or can I replace the disk and have the array rebuild the data? I am just trying to avoid downtime. Again, probably a stupid question. Be kind.
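For reference, the filesystem-check steps in either ordering boil down to the same two reiserfsck invocations against the array device. Below is a minimal dry-run sketch in Python that only prints the command lines and never runs them. It assumes the array has been started in Maintenance Mode and that disk 8 maps to /dev/md8 as in the post; `reiserfsck_plan` is a made-up helper name, and the `--check` and `--rebuild-tree` options are the ones mentioned in these posts.

```python
import shlex


def reiserfsck_plan(disk_number, rebuild_tree=False):
    """Return the reiserfsck command lines for one array disk (dry run only).

    Assumption: array disk N is exposed as /dev/mdN, e.g. /dev/md8.
    """
    device = f"/dev/md{disk_number}"
    # Always start with the read-only check.
    commands = [["reiserfsck", "--check", device]]
    if rebuild_tree:
        # Only add this step if the check output recommends rebuilding the tree.
        commands.append(["reiserfsck", "--rebuild-tree", device])
    return [shlex.join(cmd) for cmd in commands]


if __name__ == "__main__":
    # Print the plan for disk 8; nothing is executed.
    for line in reiserfsck_plan(8, rebuild_tree=True):
        print(line)
```

Printing the plan first makes it easy to double-check the device name before anything touches the disk.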

-

Exactly what I thought. Like I said, just double-checking. Thank you, Johnnie.

-

I think I know the answer, but better safe than sorry ;) I am finally able to move some drives from a Marvell controller to an LSI controller. The drives are assigned via UUID, correct? So moving to a new controller should be no problem since they are controller-agnostic? I'll make sure I snap a screenshot of the current assignments, just in case, but there really should be no big cause for concern, correct?