chickensoup

Members

Posts: 527

About chickensoup

- Birthday: 05/09/1987
- Gender: Male
- Location: Brisbane, Australia


chickensoup's Achievements

Enthusiast (6/14)

Reputation: 1

Unraid 6.10, permission denied from docker containers

chickensoup replied to ChuskyX's topic in General Support

Had the same issue, mostly with Plex, which was completely broken. The log pointed to permission problems. I tried migrating from Plex-Media-Server to a fresh binhex-plex container, but the error persisted. The appdata folders didn't look like they had been taken over by root, but the whole lot was a giant mess. I'm in the process of rolling back to 6.9.2 now and will keep an eye on progress, since I don't have enough time to spend mucking around with it right now.

Trying to determine the cause of ongoing parity corrections

chickensoup replied to chickensoup's topic in General Support

Disk 2 passed an extended SMART check.

chickensoup started following Trying to determine the cause of ongoing parity corrections

-

I ran a correcting parity check recently and it fixed 13 parity sync errors. I wasn't expecting this, so I ran another (non-correcting) check the next night, which picked up another 9 parity sync errors. I'm not sure whether this is related to my recent migration of disks to XFS (see below) or whether I have a disk on the way out.

There aren't any SMART warnings that concern me at this stage. I did a zero-byte search against my shares and found two TV episodes with zero bytes, but this might be unrelated. On another share, however (different disk set), I have 227 zero-byte files. Some are expected (random HTML and config placeholders), but there is also some music and some old documents, which wouldn't be expected. Almost all of these appear to originate on Disk 2, so I'm running a full SMART check on that disk now.

The disk is pretty old and so is the data; could this be bitrot? Any suggestions on what might be causing this? If the disk checks out OK, I'm wondering whether a new config might be in order. I'm just worried about potential data loss at this stage.

Background on the filesystem changes: I recently migrated a bunch of disks from ReiserFS to XFS, following what guides I could find. I wasn't overly concerned about maintaining perfect metadata or even parity during the moves, just that the files moved OK. I used a Krusader Docker container to shuffle the data onto a blank XFS-formatted disk, then changed the filesystem on the old, now blank Reiser disk to XFS, let unRAID format it, and ran a parity check. With each move about 12 or 13 parity errors were corrected, which I attributed to shuffling ~2-3 TB of data. I wasn't overly concerned at the time, but now I'm wondering if I should have been.

unraid-diagnostics-20190811-0100.zip
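For anyone wanting to repeat the zero-byte search per disk rather than per share, here is a minimal Python sketch. It assumes the array disks are mounted individually at `/mnt/disk1`, `/mnt/disk2`, and so on, and the helper names (`find_zero_byte_files`, `count_by_disk`) are made up for illustration. A zero-byte file only tells you the file is empty; it doesn't by itself say whether that came from a failed copy, disk trouble, or the file genuinely being empty.

```python
import glob
import os
import stat


def find_zero_byte_files(root):
    """Walk `root` recursively and return sorted paths of regular files that are 0 bytes."""
    hits = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                # lstat: don't follow symlinks, so a dangling link can't raise here
                st = os.lstat(path)
            except OSError:
                continue  # vanished or unreadable; skip it
            if stat.S_ISREG(st.st_mode) and st.st_size == 0:
                hits.append(path)
    return sorted(hits)


def count_by_disk(disk_roots):
    """Return {mount point: number of zero-byte files found under it}."""
    return {root: len(find_zero_byte_files(root)) for root in disk_roots}


if __name__ == "__main__":
    # Assumption: array disks are mounted individually at /mnt/disk1, /mnt/disk2, ...
    # Scanning per disk (rather than /mnt/user) shows which physical disk holds each file.
    for disk, count in count_by_disk(sorted(glob.glob("/mnt/disk[0-9]*"))).items():
        print(f"{disk}: {count} zero-byte files")
```

Scanning the individual disk mounts instead of the merged user shares is what lets you see that most of the empty files sit on one disk.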

-

What sort of symptoms? I've had SATA cables tied like this for years and never had a problem. I guess I just want to know what I should look out for.

-

chickensoup started following Custom icon on dashboard

-

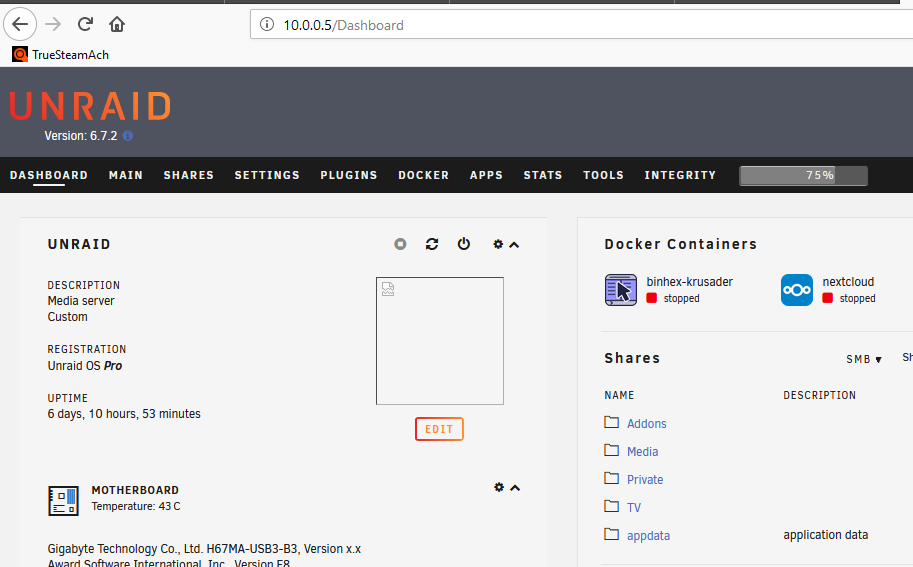

Happened again today; this might be useful. The image location is listed as "http://10.0.0.5/%3Cbr%20/%3E%3Cb%3EWarning%3C/b%3E:%20%20filemtime():%20stat%20failed%20for%20/usr/local/emhttp/webGui/images/case-model.png%20in%20%3Cb%3E/usr/local/emhttp/plugins/dynamix/include/Helpers.php%3C/b%3E%20on%20line%20%3Cb%3E248%3C/b%3E%3Cbr%20/%3E/webGui/images/case-model.png?v="

unraid-diagnostics-20190727-1306.zip
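The image location above is percent-encoded HTML. One quick way to read it is to decode it, for example with Python's standard `urllib.parse.unquote` (the `extract_php_warning` helper below is just an illustrative name). Decoded, it reads as a PHP warning: `filemtime()` failed to stat `/usr/local/emhttp/webGui/images/case-model.png` in `Helpers.php` on line 248. My unconfirmed guess is that the custom `case-model.png` was missing when the dashboard rendered, so the warning text ended up in the `src` attribute and `?v=` was left empty.

```python
import re
from urllib.parse import unquote

# The broken image src copied from the dashboard (percent-encoded).
BROKEN_SRC = (
    "http://10.0.0.5/%3Cbr%20/%3E%3Cb%3EWarning%3C/b%3E:%20%20filemtime():"
    "%20stat%20failed%20for%20/usr/local/emhttp/webGui/images/case-model.png"
    "%20in%20%3Cb%3E/usr/local/emhttp/plugins/dynamix/include/Helpers.php%3C/b%3E"
    "%20on%20line%20%3Cb%3E248%3C/b%3E%3Cbr%20/%3E/webGui/images/case-model.png?v="
)


def extract_php_warning(url):
    """Percent-decode `url`, strip HTML tags, and return (message, file, line) of a PHP warning, or None."""
    text = re.sub(r"<[^>]+>", "", unquote(url))
    m = re.search(r"Warning:\s+(.+?) in (\S+) on line (\d+)", text)
    if m is None:
        return None  # no embedded PHP warning found
    return m.group(1), m.group(2), int(m.group(3))


if __name__ == "__main__":
    print(extract_php_warning(BROKEN_SRC))
```

Run against the URL above, it returns the `filemtime()` message, the `Helpers.php` path, and line 248; a normal image URL returns `None`.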

-

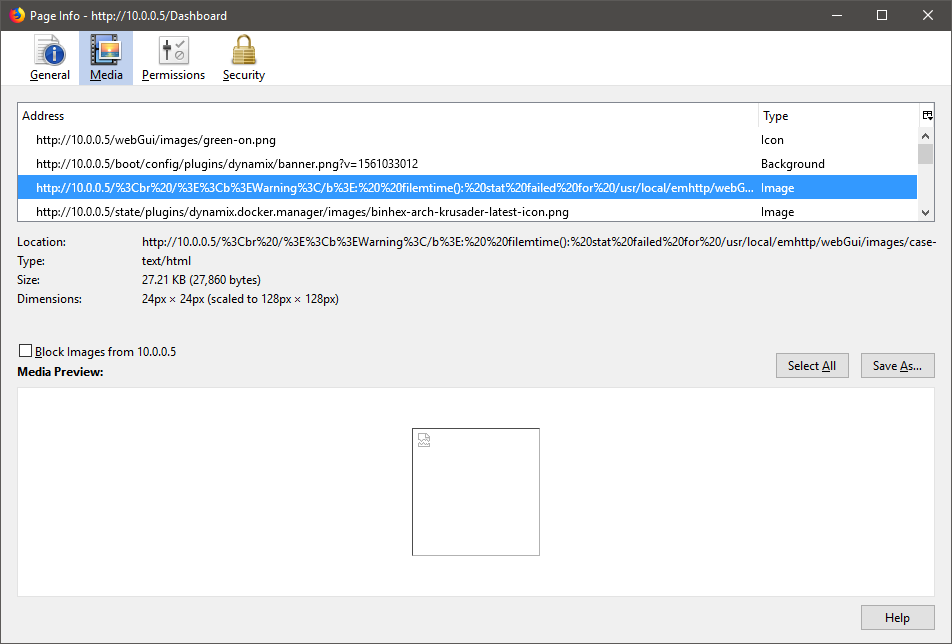

Lian-Li PC-201A, the silver version of the 201B; it was discontinued a long time ago. I managed to stumble across one second-hand for $50. This is what it looks like outside, and this is the inside:

-

chickensoup started following Minor: Review of e-mail notifications and 16p SAS controller recommendation

-

Hey y'all,

Looking for advice and suggestions on 16-channel controller cards, and whether they'll need any special firmware flashing, etc.

FYI, I'm probably going to change motherboards so I can add a GPU for pass-through. My Hyper-V server is using a Gigabyte Z77X-D3H, which has more PCI-E lanes.

Current setup, however, is using all SATA ports (photo below); I'll probably need longer breakout cables than what would be included anyway:
- Gigabyte H67MA-USB3 (6 SATA onboard)
- 2 x Adaptec 1430SA (4-port SATA2)

The ones that look to be suitable candidates so far are:
- Adaptec ASR-71605: $56.99 USD = $80.95 AUD + shipping
- Another ASR-71605 which includes breakout cables: $95.98 USD = $136.33 AUD + shipping
- LSI 9201-16i: $128.68 USD = $182.77 AUD + shipping

There are cheaper options if I stick with SATA2:
- LSI MegaRAID MR SAS 84016E: $55.00 USD = $78.12 AUD + shipping
- HP ATTO H30F: there are heaps of these without cables for < $50 AUD

I'm located in Brisbane, Australia, so anything domestic would be preferable. Shipping stuff over from the US is pretty expensive right now; our dollaridoo sucks.

Thanks.

-

Just wanted to add that I've noticed this as well. It just displays a broken image link. I can't really pinpoint when it happens; between reboots, maybe? I'm running 6.7.2, and re-selecting the image fixes it immediately. If/when it happens again I'll see if I can post a screenshot, but I'm not sure a small torn icon image is going to be much help =]

-

Keeping this alive with my +1 as well. Bonus points, too, if we can add a little of our own flair, just like with the banner.

-

Minor: Review of e-mail notifications

chickensoup replied to chickensoup's topic in Feature Requests

I just checked my work inbox, since notifications go to both addresses. Looks like that's my mistake: I did get one reporting errors (33), and it must have gotten lost in the noise. For some reason I got the pending-sector email twice, once at 4.46am and again at 5.07am; same disk, same count.

Minor: Review of e-mail notifications

chickensoup replied to chickensoup's topic in Feature Requests

Just to add: I had an e-mail saying one of my drives had 1 current pending sector. I wasn't really concerned, since I had just copied 1.97TB to the 2TB disk and the count hadn't increased. I checked the UI today and noticed that there are apparently 30,000 errors on the drives, but there was no e-mail notification?

Hey Tom,

I have more of a personal/career question to throw in. I have worked in IT my whole life and primarily fall into the technical support / systems administration category. I have limited Unix experience, but I'm comfortable navigating my way around now, and I have a strong interest in hardware and storage at the firmware level. What advice would you give to further my knowledge and experience in these areas, and have you found there is much industry demand for these kinds of skills?

Side question: you have an interesting background, and I would love to one day shake the imposter syndrome. Do you ever still feel this way? I mean, you've practically built an operating system 🙂

Thanks,
B

-

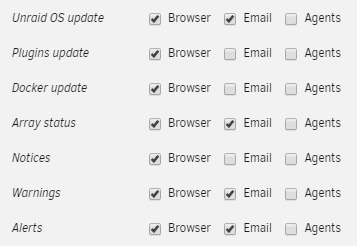

Hey LT,

I just started using e-mail notifications, and they seem to be working well, but they are a little excessive with some of the classifications. Some of the 'alerts' I get that I would argue should be reclassified are as follows:
- Importance: Warning, "Parity check started" (this is a monthly scheduled check). This should be a Notice.
- Importance: Normal, "Parity check in progress (3%) - 6 sync errors detected". Shouldn't sync errors trigger a Warning?
- Importance: Warning, "Docker application updates". Again, this should probably be a Notice, not a Warning sent with High Priority. It also came immediately after an OS update, which is why the Docker containers were out of date.

These are just a few; I'm sure they could all do with a check over. Perhaps LT could jazz them up a bit with some of that new branding flair at the same time? Obviously an option to choose between fancy and plain text would keep most people happy, I expect.

Just some thoughts. Keep up the great work, guys.

B

-

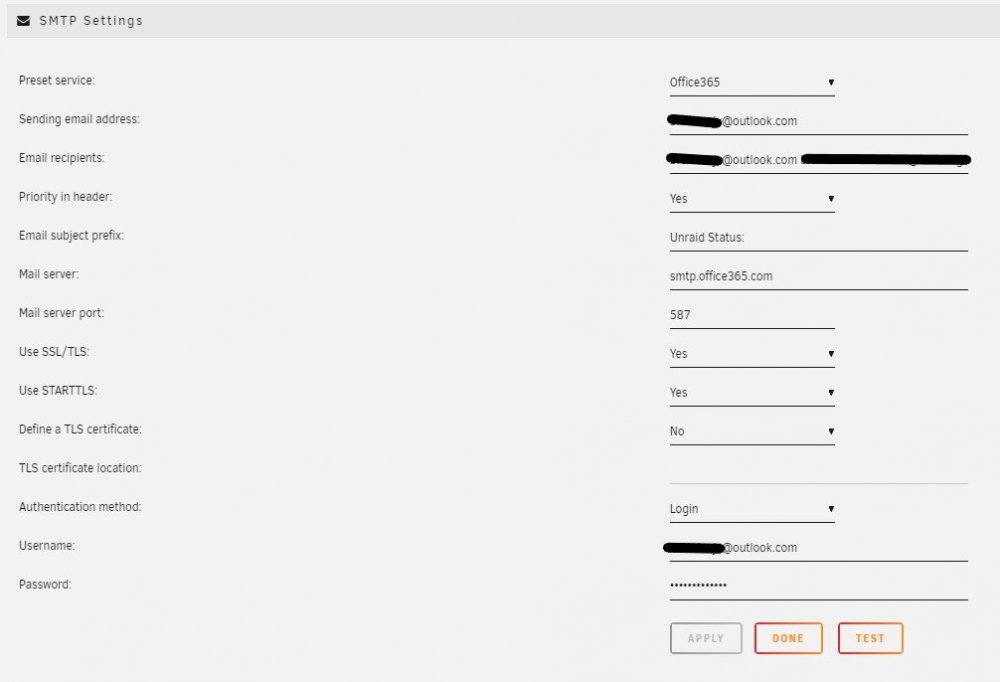

Can't figure out email notifications - gotta be simple

chickensoup replied to khager's topic in General Support

I actually set this up very recently and it works, but I was about to suggest some changes to the classification of certain messages when I found your post. I have an @outlook email address, but these settings work for me.

Made one last night, inspired by a wallpaper I really like; thought I'd keep it simple. I'd recommend changing "Header custom text color" to FFFFFF as well.