Chugalug

Members
Posts: 27
Gender: Undisclosed


I've been having some network issues on my server. All of my Docker containers were showing the version as unknown, and I could ping the server but packets were being dropped. I rebooted the server and it was better for a while, but now it is starting again. I see the following in the log, which I find weird, especially as the IP addresses it shows are not on my network (my subnet is 10.10.20.x, e.g. 10.10.20.1). Any help would be appreciated.

Sep 6 08:02:15 Unraid avahi-daemon[9910]: Joining mDNS multicast group on interface vetha2baa46.IPv6 with address fe80::401a:ebff:fe30:e7d1.
Sep 6 08:02:15 Unraid avahi-daemon[9910]: New relevant interface vetha2baa46.IPv6 for mDNS.
Sep 6 08:02:15 Unraid avahi-daemon[9910]: Registering new address record for fe80::401a:ebff:fe30:e7d1 on vetha2baa46.*.
Sep 6 08:02:28 Unraid kernel: veth62aff59: renamed from eth0
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethdb6932f) entered disabled state
Sep 6 08:02:28 Unraid avahi-daemon[9910]: Interface vethdb6932f.IPv6 no longer relevant for mDNS.
Sep 6 08:02:28 Unraid avahi-daemon[9910]: Leaving mDNS multicast group on interface vethdb6932f.IPv6 with address fe80::40bc:caff:fea8:811e.
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethdb6932f) entered disabled state
Sep 6 08:02:28 Unraid kernel: device vethdb6932f left promiscuous mode
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethdb6932f) entered disabled state
Sep 6 08:02:28 Unraid avahi-daemon[9910]: Withdrawing address record for fe80::40bc:caff:fea8:811e on vethdb6932f.
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethe633046) entered blocking state
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethe633046) entered disabled state
Sep 6 08:02:28 Unraid kernel: device vethe633046 entered promiscuous mode
Sep 6 08:02:28 Unraid kernel: IPv6: ADDRCONF(NETDEV_UP): vethe633046: link is not ready
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethe633046) entered blocking state
Sep 6 08:02:28 Unraid kernel: br-8ae76cee11aa: port 9(vethe633046) entered forwarding state
Sep 6 08:02:28 Unraid kernel: eth0: renamed from vethbcca532
Sep 6 08:02:28 Unraid kernel: IPv6: ADDRCONF(NETDEV_CHANGE): vethe633046: link becomes ready
Sep 6 08:02:30 Unraid avahi-daemon[9910]: Joining mDNS multicast group on interface vethe633046.IPv6 with address fe80::fc2a:2eff:febf:fc33.
Sep 6 08:02:30 Unraid avahi-daemon[9910]: New relevant interface vethe633046.IPv6 for mDNS.
Sep 6 08:02:30 Unraid avahi-daemon[9910]: Registering new address record for fe80::fc2a:2eff:febf:fc33 on vethe633046.*.
Sep 6 08:23:49 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 08:23:49 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
Sep 6 08:51:15 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 08:51:15 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
Sep 6 09:18:43 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 09:18:43 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
Sep 6 09:46:49 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 09:46:49 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
Sep 6 09:59:18 Unraid emhttpd: shcmd (1511): /usr/local/sbin/mover &> /dev/null &
Sep 6 10:13:45 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 10:13:45 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
Sep 6 10:41:30 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 10:41:30 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
Sep 6 11:09:20 Unraid dnsmasq-dhcp[12916]: DHCPREQUEST(virbr0) 192.168.122.178 52:54:00:d7:c3:5d
Sep 6 11:09:20 Unraid dnsmasq-dhcp[12916]: DHCPACK(virbr0) 192.168.122.178 52:54:00:d7:c3:5d DESKTOP-DAUHJ0O
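For reference, the dnsmasq-dhcp entries are leases handed out on virbr0, the virtual bridge libvirt creates for VMs, and libvirt's default NAT network is 192.168.122.0/24; the fe80:: addresses are IPv6 link-local addresses on the Docker veth interfaces. A minimal sketch using Python's standard ipaddress module to sort the logged addresses into those groups, assuming the LAN is 10.10.20.0/24 (the post only says "10.10.20.1 etc") and that virbr0 uses the libvirt default network:

```python
import ipaddress

# Assumption: the LAN is 10.10.20.0/24 (the post only says "10.10.20.1 etc").
LAN = ipaddress.ip_network("10.10.20.0/24")
# Assumption: virbr0 uses libvirt's default NAT network.
LIBVIRT_DEFAULT = ipaddress.ip_network("192.168.122.0/24")


def classify(addr: str) -> str:
    """Say which known network, if any, an address from the log belongs to."""
    ip = ipaddress.ip_address(addr)
    # Membership tests between IPv4 and IPv6 objects simply return False.
    if ip in LAN:
        return "lan"
    if ip in LIBVIRT_DEFAULT:
        return "libvirt-vm"
    if ip.is_link_local:
        return "link-local"
    return "other"


if __name__ == "__main__":
    # Addresses taken from the log excerpt above, plus one LAN address.
    for addr in ["192.168.122.178", "fe80::401a:ebff:fe30:e7d1", "10.10.20.1"]:
        print(addr, "->", classify(addr))
```

Under those assumptions, an address landing in "libvirt-vm" belongs to a VM running on the server rather than to another device on the LAN.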

-

I followed the Spaceinvaderone video guide on getting everything up and running, and I am getting the following error in the log when trying to start the rocketchat container. MongoDB seems to start OK from what I can tell. Any ideas?

}
/app/bundle/programs/server/node_modules/fibers/future.js:280
throw(ex);
^

MongoParseError: Unescaped at-sign in authority section
    at parseConnectionString (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/core/uri_parser.js:589:21)
    at connect (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/operations/connect.js:272:3)
    at /app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/mongo_client.js:221:5
    at maybePromise (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/utils.js:714:3)
    at MongoClient.connect (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/mongo_client.js:217:10)
    at Function.MongoClient.connect (/app/bundle/programs/server/npm/node_modules/meteor/npm-mongo/node_modules/mongodb/lib/mongo_client.js:427:22)
    at new MongoConnection (packages/mongo/mongo_driver.js:206:11)
    at new MongoInternals.RemoteCollectionDriver (packages/mongo/remote_collection_driver.js:4:16)
    at Object.<anonymous> (packages/mongo/remote_collection_driver.js:38:10)
    at Object.defaultRemoteCollectionDriver (packages/underscore.js:784:19)
    at new Collection (packages/mongo/collection.js:97:40)
    at new AccountsCommon (packages/accounts-base/accounts_common.js:23:18)
    at new AccountsServer (packages/accounts-base/accounts_server.js:23:5)
    at packages/accounts-base/server_main.js:7:12
    at module (packages/accounts-base/server_main.js:19:1)
    at fileEvaluate (packages/modules-runtime.js:336:7) {
  name: 'MongoParseError',
  [Symbol(mongoErrorContextSymbol)]: {}
}
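This parser error is typically raised when the authority part of a mongodb:// connection string (the credentials and host, which Rocket.Chat usually takes from its MONGO_URL variable) contains an '@' that is not percent-encoded, for example inside a password. MongoDB's connection-string format requires such characters in the username and password to be percent-encoded. A minimal sketch, with a hypothetical user, password, host and database, that builds an escaped URI using urllib.parse.quote with safe="" so that '@', ':' and '/' are all encoded:

```python
from urllib.parse import quote


def mongo_uri(user: str, password: str, host: str, port: int, db: str) -> str:
    """Build a mongodb:// URI with the username and password percent-encoded.

    quote(..., safe="") encodes every reserved character, including '/',
    which quote() would otherwise leave alone, so an '@' or ':' inside
    the password can no longer be mistaken for a URI separator.
    """
    return (
        f"mongodb://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{db}"
    )


if __name__ == "__main__":
    # Hypothetical credentials, host and database, for illustration only.
    print(mongo_uri("rocketchat", "p@ss:word", "mongo", 27017, "rocketchat"))
    # -> mongodb://rocketchat:p%40ss%3Aword@mongo:27017/rocketchat
```

Only the credential parts are encoded; the host, port and database name stay as written.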

-

A bunch of IP addresses trying to access my server

Chugalug replied to Chugalug's topic in General Support

It appears I did have SSH open, so I have closed it and those attempts have stopped now. Thank you so much!

-

Hi everyone, I just looked in my logs and I am seeing a bunch of different IP addresses trying to gain access to my server; looking the addresses up, they are mainly from China. What can I do about this? I'm freaking out a little bit. Here is a small snippet of the log, but there are a bunch of different ones trying to gain access. Thank you.

Apr 20 09:05:28 Tower sshd[1077]: Invalid user dnsmasq from 62.210.125.29 port 57258
Apr 20 09:05:28 Tower sshd[1077]: error: Could not get shadow information for NOUSER
Apr 20 09:05:28 Tower sshd[1077]: Failed password for invalid user dnsmasq from 62.210.125.29 port 57258 ssh2
Apr 20 09:05:29 Tower sshd[1077]: Received disconnect from 62.210.125.29 port 57258:11: Bye Bye [preauth]
Apr 20 09:05:29 Tower sshd[1077]: Disconnected from invalid user dnsmasq 62.210.125.29 port 57258 [preauth]
Apr 20 09:05:36 Tower sshd[1269]: Failed password for root from 206.189.229.112 port 50756 ssh2
Apr 20 09:05:36 Tower sshd[1269]: Received disconnect from 206.189.229.112 port 50756:11: Bye Bye [preauth]
Apr 20 09:05:36 Tower sshd[1269]: Disconnected from authenticating user root 206.189.229.112 port 50756 [preauth]
Apr 20 09:05:49 Tower sshd[2154]: Failed password for root from 49.88.112.71 port 37939 ssh2
Apr 20 09:05:49 Tower sshd[2154]: Failed password for root from 49.88.112.71 port 37939 ssh2
Apr 20 09:05:50 Tower sshd[2154]: Failed password for root from 49.88.112.71 port 37939 ssh2
Apr 20 09:05:50 Tower sshd[2154]: Received disconnect from 49.88.112.71 port 37939:11: [preauth]
Apr 20 09:05:50 Tower sshd[2154]: Disconnected from authenticating user root 49.88.112.71 port 37939 [preauth]
Apr 20 09:06:32 Tower sshd[2767]: Invalid user rt from 159.138.65.33 port 38316
Apr 20 09:06:32 Tower sshd[2767]: error: Could not get shadow information for NOUSER
Apr 20 09:06:32 Tower sshd[2767]: Failed password for invalid user rt from 159.138.65.33 port 38316 ssh2
Apr 20 09:06:33 Tower sshd[2767]: Received disconnect from 159.138.65.33 port 38316:11: Bye Bye [preauth]
Apr 20 09:06:33 Tower sshd[2767]: Disconnected from invalid user rt 159.138.65.33 port 38316 [preauth]
Apr 20 09:06:54 Tower sshd[3124]: Accepted none for adm from 62.112.11.88 port 49806 ssh2
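To see how widespread this is, a minimal sketch that tallies the sshd "Failed password" lines per source address and also pulls out any "Accepted" lines, which sshd logs when a login succeeds (such as the last line of the excerpt). The regular expressions are written against the message formats in this excerpt and are an assumption; other sshd versions may word things differently.

```python
import re
import sys
from collections import Counter
from typing import Iterable, List, Tuple

# Patterns written against the sshd messages above (an assumption for other
# sshd versions). "invalid user" appears when the account doesn't exist.
FAILED = re.compile(
    r"sshd\[\d+\]: Failed password for (?:invalid user )?(\S+) from (\S+) port \d+"
)
ACCEPTED = re.compile(r"sshd\[\d+\]: Accepted (\S+) for (\S+) from (\S+) port \d+")


def failed_by_ip(lines: Iterable[str]) -> Counter:
    """Count failed password attempts per source IP."""
    counts: Counter = Counter()
    for line in lines:
        match = FAILED.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts


def accepted_logins(lines: Iterable[str]) -> List[Tuple[str, str, str]]:
    """Return (user, source IP, auth method) for every successful login line."""
    logins = []
    for line in lines:
        match = ACCEPTED.search(line)
        if match:
            method, user, ip = match.groups()
            logins.append((user, ip, method))
    return logins


if __name__ == "__main__":
    # Usage: python3 ssh_attempts.py /var/log/syslog  (script name is hypothetical)
    if len(sys.argv) > 1:
        with open(sys.argv[1], errors="replace") as log:
            lines = log.readlines()
        for ip, count in failed_by_ip(lines).most_common():
            print(f"{count:5d} failed  {ip}")
        for user, ip, method in accepted_logins(lines):
            print(f"accepted: user={user} from={ip} method={method}")
```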

-

Formatted cache drive from BTRFS to XFS still getting BTRFS errors


Sorry, this is the start of the error I meant:

Feb 21 19:19:36 Tower kernel: blk_update_request: I/O error, dev sdh, sector 1026147647
Feb 21 19:19:36 Tower kernel: XFS (sdh1): metadata I/O error: block 0x3d29c4ff ("xlog_iodone") error 5 numblks 64
Feb 21 19:19:36 Tower kernel: XFS (sdh1): xfs_do_force_shutdown(0x2) called from line 1200 of file fs/xfs/xfs_log.c. Return address = 0xffffffff812b37d1
Feb 21 19:19:36 Tower kernel: XFS (sdh1): Log I/O Error Detected. Shutting down filesystem
Feb 21 19:19:36 Tower kernel: XFS (sdh1): Please umount the filesystem and rectify the problem(s)
Feb 21 19:19:36 Tower kernel: sd 7:0:0:0: [sdh] tag#7 UNKNOWN(0x2003) Result: hostbyte=0x04 driverbyte=0x00
Feb 21 19:19:36 Tower kernel: sd 7:0:0:0: [sdh] tag#7 CDB: opcode=0x2a 2a 00 01 ba 3c 98 00 00 10 00
Feb 21 19:19:36 Tower kernel: blk_update_request: I/O error, dev sdh, sector 28982424
Feb 21 19:19:36 Tower kernel: sdh: detected capacity change from 1050214588416 to 0
Feb 21 19:19:36 Tower kernel: Buffer I/O error on dev sdh1, logical block 3622795, lost async page write
Feb 21 19:19:36 Tower kernel: Buffer I/O error on dev sdh1, logical block 3622796, lost async page write
Feb 21 19:25:28 Tower kernel: blk_update_request: I/O error, dev loop0, sector 175584
Feb 21 19:25:28 Tower kernel: BTRFS error (device loop0): bdev /dev/loop0 errs: wr 0, rd 1, flush 0, corrupt 0, gen 0
Feb 21 19:25:28 Tower kernel: blk_update_request: I/O error, dev loop0, sector 2272736
Feb 21 19:25:28 Tower kernel: BTRFS error (device loop0): bdev /dev/loop0 errs: wr 0, rd 2, flush 0, corrupt 0, gen 0
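Lining the excerpt up by device makes the order of events easier to follow: sdh starts reporting I/O errors and its capacity drops to 0 at 19:19:36, and the loop0 errors only appear at 19:25:28. A minimal sketch that extracts, per device, the time of its first I/O error, plus any device whose reported capacity changed to 0. The patterns are written against these kernel messages and are an assumption for other kernel versions.

```python
import re
import sys
from typing import Dict, Iterable, List

# Patterns written against the kernel messages in the excerpt above.
TIMESTAMP = re.compile(r"^(\w{3}\s+\d+ \d\d:\d\d:\d\d)")
# Matches "I/O error, dev sdh, sector ..." and "Buffer I/O error on dev sdh1, ..."
IO_ERROR = re.compile(r"I/O error(?:, | on )dev (\w+)")
CAPACITY_ZERO = re.compile(r"(\w+): detected capacity change from \d+ to 0\b")


def first_io_error(lines: Iterable[str]) -> Dict[str, str]:
    """Map each device to the timestamp of its first I/O error, in log order."""
    first: Dict[str, str] = {}
    for line in lines:
        match = IO_ERROR.search(line)
        stamp = TIMESTAMP.match(line)
        if match and stamp and match.group(1) not in first:
            first[match.group(1)] = stamp.group(1)
    return first


def dropped_devices(lines: Iterable[str]) -> List[str]:
    """Devices whose reported capacity went to 0, i.e. the kernel lost them."""
    return [m.group(1) for m in map(CAPACITY_ZERO.search, lines) if m]


if __name__ == "__main__":
    # Usage: python3 io_errors.py syslog.txt  (script name is hypothetical)
    if len(sys.argv) > 1:
        with open(sys.argv[1], errors="replace") as log:
            lines = log.readlines()
        for dev, stamp in first_io_error(lines).items():
            print(f"{stamp}  first I/O error on {dev}")
        for dev in dropped_devices(lines):
            print(f"{dev}: capacity changed to 0")
```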


-


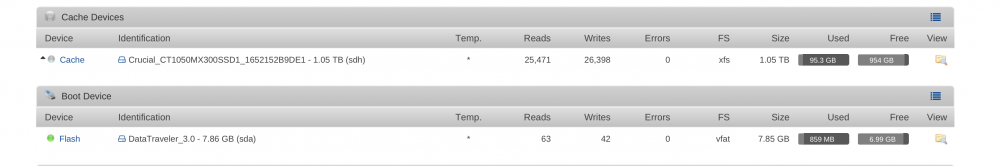

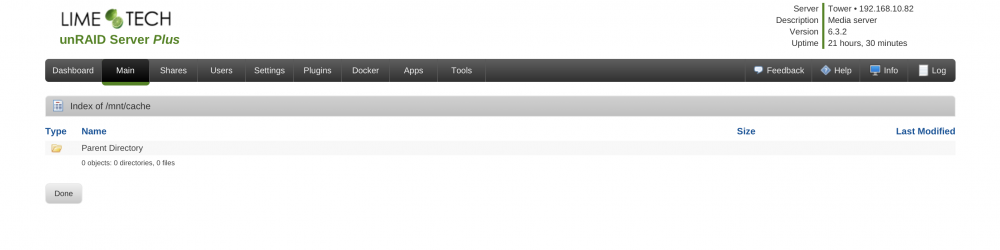

I tried deleting my Docker image and recreating it, but I'm still getting errors, as well as a bunch of other concerning errors, and I'm wondering if I have motherboard issues. It's weird that there are errors relating to BTRFS when the cache was reformatted to XFS. The error below causes my cache drive to show no directories: it looks normal on the Main page, but when I click into it, it shows nothing on it. syslog-4.txt

-


OK, thanks for the info. I'm going to delete my Docker image, try creating a new one, and see. I will report back. Cheers

-

Hello, I upgraded to a new, larger cache drive and it automatically got formatted as BTRFS. I figured I would give it a go and transferred all of my apps etc. over to it. When writing data to the cache drive I was getting a lot of BTRFS error messages in the log, so I figured I would switch to XFS. I transferred my data off, reformatted to XFS and transferred all of the data back, but I am still getting BTRFS errors. Things seem fine, but I would like to figure out the root cause and whether there is a fix for it. The errors are along these lines, and I have no BTRFS drives in my system anymore:

Feb 19 20:36:26 Tower kernel: BTRFS error (device loop1): bad fsid on block 20987904
Feb 19 20:36:26 Tower kernel: BTRFS error (device loop1): bad fsid on block 20987904
Feb 19 20:36:26 Tower kernel: BTRFS error (device loop1): failed to read chunk root
Feb 19 20:36:26 Tower kernel: BTRFS error (device loop1): open_ctree failed
Feb 19 20:36:26 Tower root: mount: wrong fs type, bad option, bad superblock on /dev/loop1,
Feb 19 20:36:26 Tower root: missing codepage or helper program, or other error
Feb 19 20:36:26 Tower root:
Feb 19 20:36:26 Tower root: In some cases useful info is found in syslog - try
Feb 19 20:36:26 Tower root: dmesg | tail or so.
Feb 19 20:36:26 Tower root: mount error
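The errors refer to loop devices (loop1 here, loop0 in a later excerpt) rather than to a physical disk. A loop device presents a file as a block device, so a BTRFS error on loop1 is about the filesystem inside whatever file is attached to it, not about the drive that file is stored on; on Unraid the Docker image file (docker.img) is commonly BTRFS-formatted and attached this way. A minimal sketch that maps each attached loop device to its backing file by reading the Linux sysfs attribute /sys/block/<loopN>/loop/backing_file (the same information losetup -a shows):

```python
import os
from typing import Dict


def loop_backing_files(sys_block: str = "/sys/block") -> Dict[str, str]:
    """Map attached loop devices (e.g. 'loop1') to the file behind them.

    Linux exposes the backing file of an attached loop device at
    /sys/block/<loopN>/loop/backing_file; a loop device with nothing
    attached typically has no 'loop' subdirectory and is skipped.
    """
    result: Dict[str, str] = {}
    for name in sorted(os.listdir(sys_block)):
        if not name.startswith("loop"):
            continue
        path = os.path.join(sys_block, name, "loop", "backing_file")
        try:
            with open(path) as f:
                result[name] = f.read().strip()
        except FileNotFoundError:
            continue  # device node exists but no file is attached
    return result


if __name__ == "__main__":
    if os.path.isdir("/sys/block"):
        for dev, backing in loop_backing_files().items():
            print(f"/dev/{dev} -> {backing}")
```

If the device named in the errors points at an image file, that file (rather than the reformatted cache drive) is the BTRFS filesystem the messages are about.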

-

It looks like I got the plugins to run: the plugininstallfile.done file is in there now, and it showed the following at the end:

Installing homebridge-LogitechHarmony
Loaded plugin: homebridge-philipshue
Registering platform 'homebridge-philipshue.PhilipsHue'
---
Loaded plugin: homebridge-sonos
Registering accessory 'homebridge-sonos.Sonos'
---
Loaded plugin: homebridge-wink
Registering platform 'homebridge-wink.Wink'

But it still won't keep the docker running; when I refresh the page it shows the red stop button.

-

OK, thank you. Did you leave only the ones you wanted to use in the config file, i.e. only the ones you're going to list in the plugin install file? And do I need to do anything besides having the config file and the plugin install file? Is there a step I'm missing?

-

I have put the config.json file and the pluginsInstallList file into the folder /mnt/cache/appdata/homebridge, which is the host path in the docker settings. A folder called persist was created and the pluginsInstallList file was renamed to pluginsInstallList.done. I'm not sure if that sounds correct, and I'm not sure what else to try.
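One thing worth ruling out when a container starts and then stops immediately with an empty log is a config.json that doesn't parse, since Homebridge reads it at startup; this is a guess at a possible cause, not something the posts confirm. A minimal sketch that checks the file with Python's json module, reports the line and column of any syntax error, and checks that the "accessories" and "platforms" sections, if present, are lists as in Homebridge's usual layout. The default path is the host path from the post and is only an example:

```python
import json
import sys
from typing import List


def check_homebridge_config(path: str) -> List[str]:
    """Return problems found in a Homebridge-style config.json (empty list = none found)."""
    try:
        with open(path, encoding="utf-8") as f:
            config = json.load(f)
    except FileNotFoundError:
        return [f"{path} does not exist"]
    except json.JSONDecodeError as err:
        # lineno/colno point at where parsing stopped, e.g. right after a trailing comma.
        return [f"invalid JSON at line {err.lineno}, column {err.colno}: {err.msg}"]

    if not isinstance(config, dict):
        return ["the top level of config.json should be a JSON object"]
    problems = []
    # Assumption: these sections hold lists in Homebridge's usual layout.
    for key in ("accessories", "platforms"):
        if key in config and not isinstance(config[key], list):
            problems.append(f"'{key}' should be a list")
    return problems


if __name__ == "__main__":
    # Example default: the host path mentioned in the post; pass another path to override.
    target = sys.argv[1] if len(sys.argv) > 1 else "/mnt/cache/appdata/homebridge/config.json"
    for line in check_homebridge_config(target) or ["no problems found"]:
        print(line)
```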

-

I can't seem to get this docker to start at all. I click the start button and it says started, with the green play button, but when I refresh the page it says it's stopped, with the red stop button on it. The log on the docker page doesn't show anything. I'm not sure what I am doing wrong here; does anyone have any suggestions?

-

Grey Triangle on Drive and Missing Parity Check Function


Thanks, Squid. I did what you said and it is rebuilding the disk now; I will report back to let you know how it all worked out. Cheers.

-


I've tried spinning it up and that doesn't do anything either. I'm not sure which way to go with this; I'm scared of losing data.

-


Thank you for the reply. That is totally possible, as I didn't notice until after a reboot, so I'm not sure whether it was red before. Here is the SMART report: smart_report.txt