-

Posts

332 -

Joined

-

Last visited

About ratmice

- Birthday 08/28/4

Retained

-

Member Title

SubGenius

Converted

-

Gender

Undisclosed

-

Location

West O' Boston

-

Personal Text

Give me slack!, or give me death.


ratmice's Achievements

Contributor (5/14)

2

Reputation

-

On the page with the color selection might be good, maybe right near the color picker? While we're at it, would it be possible to have different color schemes for the disk view and the heat map view? That would be awesome.

-

Thanks for replying so quickly. I did indeed change some colors on the heat map. Resetting the colors set things right. BTW, the reset colors option is on the tray allocation tab, for those trying to find it.


-

I just installed this plugin and really like it. However, I cannot seem to get the Main page to use the drive colors I set on the configuration page. I changed them, but the old colors remain, or in some cases entirely new colors appeared. One category changed to black and I can't read any info at all 😆

-

So I am preclearing a 6TB disk I had lying around, and the speeds are abysmal (2-3 MB/s). I notice a lot of these blocks within the log:

Apr 30 11:40:31 Tower kernel: ata8.00: exception Emask 0x0 SAct 0x80e00000 SErr 0x0 action 0x0
Apr 30 11:40:31 Tower kernel: ata8.00: irq_stat 0x40000008
Apr 30 11:40:31 Tower kernel: ata8.00: failed command: READ FPDMA QUEUED
Apr 30 11:40:31 Tower kernel: ata8.00: cmd 60/40:a8:00:94:73/05:00:00:00:00/40 tag 21 ncq dma 688128 in
Apr 30 11:40:31 Tower kernel: res 41/40:00:00:94:73/00:00:00:00:00/00 Emask 0x409 (media error) <F>
Apr 30 11:40:31 Tower kernel: ata8.00: status: { DRDY ERR }
Apr 30 11:40:31 Tower kernel: ata8.00: error: { UNC }
Apr 30 11:40:31 Tower kernel: ata8.00: configured for UDMA/133
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=DRIVER_OK cmd_age=11s
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 Sense Key : 0x3 [current]
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 ASC=0x11 ASCQ=0x4
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 CDB: opcode=0x88 88 00 00 00 00 00 00 73 94 00 00 00 05 40 00 00
Apr 30 11:40:31 Tower kernel: I/O error, dev sdo, sector 7574528 op 0x0:(READ) flags 0x84700 phys_seg 168 prio class 0
Apr 30 11:40:31 Tower kernel: ata8: EH complete

Is this due to a failing drive, and does it also explain the speeds being in the toilet?
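For anyone trying to read a block like this, here is a rough sketch of decoding the CDB bytes from the log by hand. It relies on the standard READ(16) layout (opcode 0x88, an 8-byte big-endian LBA, then a 4-byte block count). The function name `decode_read16` is made up for illustration, and the 512-byte logical sector size is an assumption, not something the log states. It only shows which sectors the failed read was asking for; it does not say anything about the health of the drive by itself.

```python
# CDB bytes copied from the "CDB: opcode=0x88 ..." syslog line.
cdb_hex = "88 00 00 00 00 00 00 73 94 00 00 00 05 40 00 00"

SECTOR_BYTES = 512  # assumption: 512-byte logical sectors


def decode_read16(cdb_hex: str) -> dict:
    """Split a READ(16) CDB into starting LBA, block count, and byte length."""
    cdb = bytes.fromhex(cdb_hex)
    if len(cdb) != 16 or cdb[0] != 0x88:
        raise ValueError("not a 16-byte READ(16) CDB")
    lba = int.from_bytes(cdb[2:10], "big")      # bytes 2-9: starting LBA
    blocks = int.from_bytes(cdb[10:14], "big")  # bytes 10-13: transfer length
    return {"lba": lba, "blocks": blocks, "bytes": blocks * SECTOR_BYTES}


info = decode_read16(cdb_hex)
print(info)  # {'lba': 7574528, 'blocks': 1344, 'bytes': 688128}
```

The decoded LBA matches the sector in the "I/O error, dev sdo, sector 7574528" line, and the byte length matches the "ncq dma 688128 in" figure, so both lines describe the same failed read.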

-

Thanks for pointing me in the right direction, I really appreciate it.

-

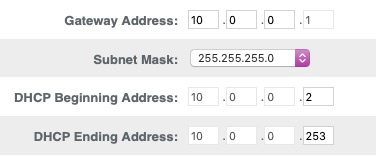

So, I'm guessing if I change the current static IP address to 10.0.0.88 (assuming it's currently not used) and change the port mappings in the docker settings to reflect that change, I might be good to go. I assume I will also need to change the default gateway to 10.0.0.1, as well.
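A quick way to sanity-check that plan is a few lines of Python with the standard `ipaddress` module. This is only a sketch: the /24 netmask is an assumption about the new router's LAN, and `usable_on_lan` is a hypothetical helper name. It checks that the proposed address sits in the same subnet as the gateway; it cannot tell whether another device already holds that address.

```python
import ipaddress

# Assumption: the router hands out a 10.0.0.0/24 LAN (netmask 255.255.255.0).
lan = ipaddress.ip_network("10.0.0.0/24")
gateway = ipaddress.ip_address("10.0.0.1")
proposed = ipaddress.ip_address("10.0.0.88")


def usable_on_lan(addr, network, gw):
    """True if addr is a host address in network and is not the gateway."""
    reserved = (network.network_address, network.broadcast_address, gw)
    return addr in network and addr not in reserved


print(usable_on_lan(proposed, lan, gateway))  # True
print(usable_on_lan(ipaddress.ip_address("192.168.1.88"), lan, gateway))  # False
```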

-

It's an Xfinity XB8 router, which I believe is a Technicolor CGM4981COM Wi-Fi 6E under the hood. Its admin page is accessed at the typical 10.0.0.1 IP address. My MacPro seems to be happy with an IP address of xx.xx.xx.190, if that helps. Note: this thing has been installed for only a few hours, so I haven't had much of a chance to check everything out. I started with UnRAID as that is my priority. I found this, too:

-

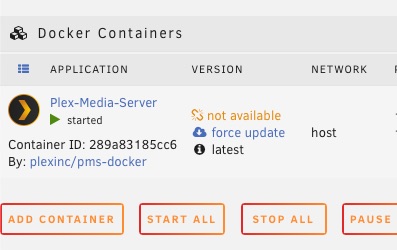

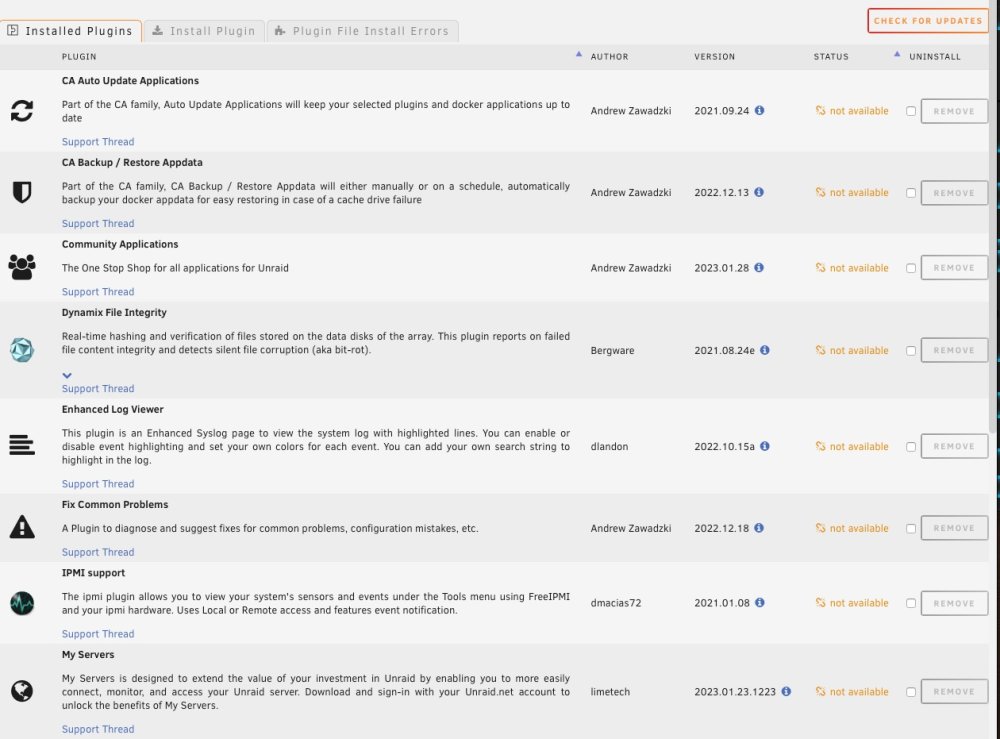

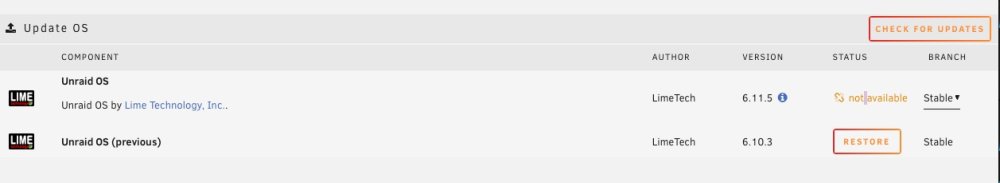

So, I was out of town for a couple of months and came home to a dead router, which has now been replaced. After getting things set up, I am now seeing a host of problems with UnRAID. I was able to start my array without issue, and can see my shares from my home network, but that's where it seems to end. Updating dockers, plugins, and even the UnRAID OS will not work. Also, the server is no longer registering in My Servers. The common factor is that the versions of all the aforementioned things (plugins, dockers, OS) do not show up, so the updates cannot occur. I am not super computer savvy and am having a bit of trouble even knowing where to begin. I am currently on UnRAID 6.11.5. I will attach a few screenshots of what I am seeing. It seems likely that there is still some setup to do on the new router, but I thought I would begin to try to get things back in order. To be honest, the old router, and UnRAID itself, were set up over a decade ago, so my memory of what was necessary to get UnRAID running has long since disappeared. Any guidance will be much appreciated. tower-diagnostics-20230407-1817.zip

-

One last thing. I am going to add a second parity disk. I assume that this should happen after I rebuild the disabled disk and update the OS, correct?

-

Hello, all. I have returned home after an extended absence (months), and found a disk disabled (disk 2). I have procured a replacement disk, and need some advice as to how to proceed. My array is currently running on 6.9.2, and I see there is an update to 6.10.0 available. Also, I cannot access any SMART data for the disabled disk, and to my eye the syslog does not help (me, anyway) determine the actual state of the disk in question. My questions are: should I update the UnRAID OS first, or proceed with the rebuild first? Can anyone shed light on anything of interest in the diagnostics that my untrained eye doesn't grok? Any other words of wisdom? Thanks in advance. tower-diagnostics-20220522-1057.zip

-

Thanks. This PSU is about 10 yrs old, but it appears that it is, indeed, missing a -5V rail. Good news!

-

Don't really have a spare 'puter around to test on, but I can bring it to a friend's house. I did a bit of sleuthing and it seems that the -5V rail is deprecated in the ATX specs, as seen in the last paragraph of this document (http://www.playtool.com/pages/psurailhistory/rails.html). I am not sure whether this PS is one that never had that voltage available, even when new.

-

I recently ran into a problem whereby my server would not power up. I am planning to renovate the server now. I purchased a little power supply tester box. When plugging in the MB connector everything checks out except the -5V LED does not light up. All other connectors test out fine. I went online to see what this could mean and noticed that more expensive, and presumably better testers, do not even report the -5V signal. My question is: what does this even mean, and is this reason to get another PS? If I had a different tester, I might not even know about the -5V "problem".

-

Great to hear that. Thanks for the reply.