Posts: 332
Everything posted by ratmice

-

On the page with the color selection might be good, maybe right near the color picker? While we're at it, would it be possible to have different color schemes for the disk view and the heat map view? That would be awesome.

-

Thanks for replying so quickly. I did indeed change some colors on the heat map. The reset colors option worked to set things right. BTW, reset colors is on the tray allocation tab, for those trying to find it.

-

I just installed this plugin and really like it. However, I cannot seem to get the Main page to use the drive colors from the configuration page. I changed them, but the old colors remain, or the page even starts using entirely new colors. One category changed to black and I can't read any info at all 😆

-

So, I am preclearing a 6TB disk I had lying around, and the speeds are abysmal (2-3 MB/s). I notice a lot of these blocks within the log:

Apr 30 11:40:31 Tower kernel: ata8.00: exception Emask 0x0 SAct 0x80e00000 SErr 0x0 action 0x0
Apr 30 11:40:31 Tower kernel: ata8.00: irq_stat 0x40000008
Apr 30 11:40:31 Tower kernel: ata8.00: failed command: READ FPDMA QUEUED
Apr 30 11:40:31 Tower kernel: ata8.00: cmd 60/40:a8:00:94:73/05:00:00:00:00/40 tag 21 ncq dma 688128 in
Apr 30 11:40:31 Tower kernel: res 41/40:00:00:94:73/00:00:00:00:00/00 Emask 0x409 (media error) <F>
Apr 30 11:40:31 Tower kernel: ata8.00: status: { DRDY ERR }
Apr 30 11:40:31 Tower kernel: ata8.00: error: { UNC }
Apr 30 11:40:31 Tower kernel: ata8.00: configured for UDMA/133
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 UNKNOWN(0x2003) Result: hostbyte=0x00 driverbyte=DRIVER_OK cmd_age=11s
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 Sense Key : 0x3 [current]
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 ASC=0x11 ASCQ=0x4
Apr 30 11:40:31 Tower kernel: sd 9:0:0:0: [sdo] tag#21 CDB: opcode=0x88 88 00 00 00 00 00 00 73 94 00 00 00 05 40 00 00
Apr 30 11:40:31 Tower kernel: I/O error, dev sdo, sector 7574528 op 0x0:(READ) flags 0x84700 phys_seg 168 prio class 0
Apr 30 11:40:31 Tower kernel: ata8: EH complete

Is this due to a failing drive, and does it also explain the speeds being in the toilet?
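For anyone trying to read a block like the one above, the CDB line can be cross-checked against the rest of the log. Opcode 0x88 is a SCSI READ(16), which carries an 8-byte starting LBA and a 4-byte block count. The following is only a minimal sketch, assuming 512-byte logical sectors; the function name is made up for illustration. It confirms that the CDB, the `sector 7574528` in the I/O error line, and the `dma 688128` byte count all describe the same read. It does not say anything by itself about the health of the drive.

```python
def decode_read16_cdb(cdb_hex: str) -> tuple[int, int]:
    """Return (starting LBA, block count) from a READ(16) CDB given as hex bytes."""
    cdb = bytes.fromhex(cdb_hex)  # whitespace between bytes is ignored
    if len(cdb) != 16 or cdb[0] != 0x88:
        raise ValueError("not a 16-byte READ(16) CDB")
    lba = int.from_bytes(cdb[2:10], "big")      # bytes 2..9: logical block address
    blocks = int.from_bytes(cdb[10:14], "big")  # bytes 10..13: transfer length
    return lba, blocks


# CDB bytes copied from the log line in the post
CDB_FROM_LOG = "88 00 00 00 00 00 00 73 94 00 00 00 05 40 00 00"
SECTOR_BYTES = 512  # assumption: 512-byte logical sectors

lba, blocks = decode_read16_cdb(CDB_FROM_LOG)
print(lba, blocks, blocks * SECTOR_BYTES)  # prints: 7574528 1344 688128
```

The starting LBA matches the sector in the I/O error line, so the unreadable area is at or just after the start of that 1344-sector request.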

-

Thanks for pointing me in the right direction, I really appreciate it.

-

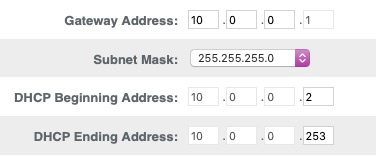

So, I'm guessing that if I change the current static IP address to 10.0.0.88 (assuming it's not currently in use) and change the port mappings in the docker settings to reflect that change, I might be good to go. I assume I will also need to change the default gateway to 10.0.0.1.
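The reasoning above (pick an unused address on the new router's network and point the gateway at the router) can be sanity-checked with a few lines of Python. This is a rough sketch under a stated assumption: the new router's LAN is taken to be 10.0.0.0/24, which the post does not confirm. The helper name and the 192.168.1.88 address are hypothetical, used only for illustration.

```python
import ipaddress

# Assumption: the new router's LAN is a /24 (netmask 255.255.255.0)
LAN = ipaddress.ip_network("10.0.0.0/24")
GATEWAY = ipaddress.ip_address("10.0.0.1")  # router admin address from the thread


def usable_static_ip(candidate: str, in_use: frozenset[str] = frozenset()) -> bool:
    """True if candidate is on the LAN, is not reserved, and is not already taken."""
    ip = ipaddress.ip_address(candidate)
    return (
        ip in LAN
        and ip not in (GATEWAY, LAN.network_address, LAN.broadcast_address)
        and candidate not in in_use
    )


print(usable_static_ip("10.0.0.88"))     # True
print(usable_static_ip("192.168.1.88"))  # False: hypothetical old-subnet address
print(usable_static_ip("10.0.0.1"))      # False: that is the gateway itself
```

A server left on a static address outside the router's subnet may still be reachable in some setups but has no route to the internet, which would be consistent with update checks failing.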

-

It's an Xfinity XB8 router, which I believe is a Technicolor CGM4981COM Wi-Fi 6E under the hood. Its admin page is accessed at the typical 10.0.0.1 IP address. My MacPro seems to be happy with an IP address of xx.xx.xx.190, if that helps. Note: this thing has been installed for only a few hours, so I haven't had much of a chance to check everything out. I started with UnRAID as that is my priority. I found this, too:

-

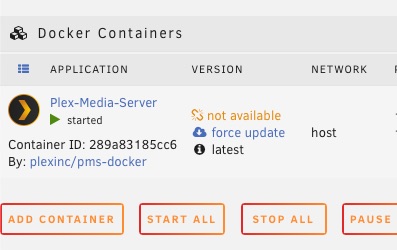

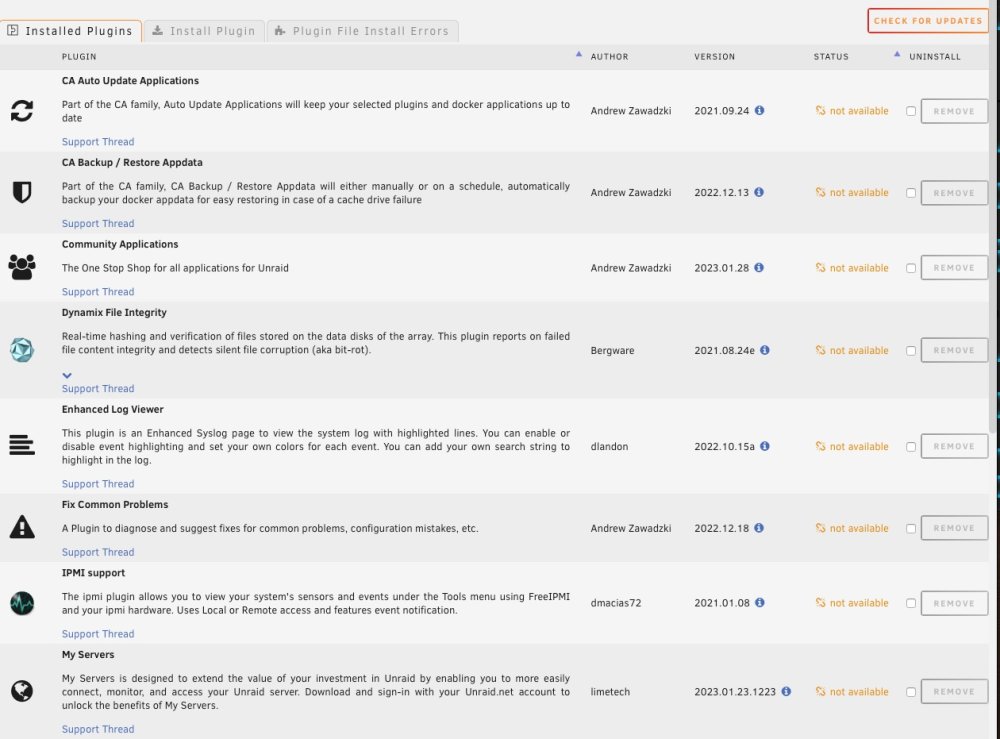

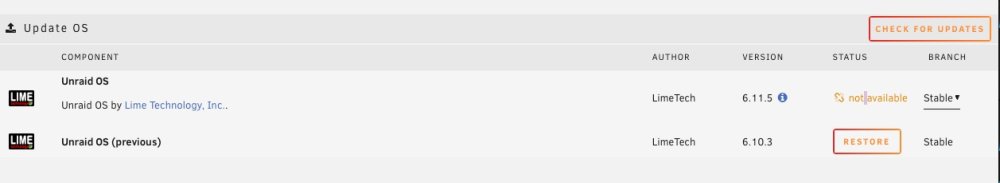

So, I was out of town for a couple of months and came home to a dead router, which has now been replaced. After getting things set up, I am now seeing a host of problems with UnRAID. I was able to start my array without issue, and can see my shares from my home network, but that's where it seems to end. Updating dockers, plugins, and even the UnRAID OS will not work. Also, the server is no longer registering in My Servers. The common factor is that the versions of all the aforementioned things (plugins, dockers, OS) do not show up, so the updates cannot occur. I am not super computer savvy and am having a bit of trouble even knowing where to begin. I am currently on UnRAID 6.11.5. I will attach a few screenshots of what I am seeing. It seems likely that there is still some setup to do on the new router, but I thought I would begin to try to get things back in order. To be honest, the old router, and UnRAID itself, were set up over a decade ago, so my memory of what was necessary to get UnRAID running has long since disappeared. Any guidance will be much appreciated. tower-diagnostics-20230407-1817.zip

-

One last thing. I am going to add a second parity disk. I assume that this should happen after I rebuild the disabled disk and update the OS, correct?

-

Hello, all. I have returned home after an extended absence (months), and found a disk disabled (disk 2). I have procured a replacement disk, and need some advice as to how to proceed. My array is currently running on 6.9.2, and I see there is an update to 6.10.0 available. Also, I cannot access any SMART data for the disabled disk, and to my eye the syslog does not help (me, anyway) determine the actual state of the disk in question. My questions are: should I update the UnRAID OS first, or proceed with the rebuild first? Can anyone shed light on anything of interest in the diagnostics that my untrained eye doesn't grok? Any other words of wisdom? Thanks in advance. tower-diagnostics-20220522-1057.zip

-

Thanks. This PSU is about 10 yrs old, but it appears that it is, indeed, missing a -5V rail. Good news!

-

Don't really have a spare 'puter around to test on, but I can bring it to a friend's house. I did a bit of sleuthing and it seems that the -5V rail is deprecated in the ATX specs, as seen in the last paragraph of this document (http://www.playtool.com/pages/psurailhistory/rails.html). I am not sure if this PS is one that did not have that voltage available when new.

-

I recently ran into a problem whereby my server would not power up. I am planning to renovate the server now. I purchased a little power supply tester box. When plugging in the MB connector, everything checks out except the -5V LED does not light up. All other connectors test out fine. I went online to see what this could mean and noticed that more expensive, and presumably better, testers do not even report the -5V signal. My question is: what does this even mean, and is this reason to get another PS? If I had a different tester, I might not even know about the -5V "problem".

-

Great to hear that. Thanks for the reply.

-

Hi team. Have been away for a few days and came home to a dead server (deets in sig); it just will not spin up at all. Pulled the power supply, and it tests out fine, so it appears to be a MB/CPU issue. This server has been running mostly trouble free for about 10 years, so it owes me nothing at this point. One of the NICs also died a couple of months ago. I have a new MB (Supermicro X11SSM-F), CPU (Xeon E3-1245), and memory (Nemix 32GB Kit DDR4-2666MHz PC4-21300 ECC Unbuffered Server Memory) en route. Needless to say, I'm a bit sleep deprived from freaking out about it and figuring out the new components, and I know just enough about this topic to make myself dangerous. Is there some best practice advice for how to go ahead and effect the rebuild, or is it just: plug the stuff in, replace the UnRAID USB drive, and turn her back on? I am slightly peeved that I can't find my most recent copy of the disk assignments from the server, but I am hoping that the system is smart enough to see that everything is still in the same location in my Norco 4220 chassis. Any words of wisdom would be most appreciated.

-

thx

-

So I noticed that one of my data disks (disk 7) and my cache disk are both showing read errors. The data disk SMART report shows a few CRC errors from a long time ago (I think). The cache disk seems like it's dying. Would someone be kind enough to see if my assessment of the 2 disks is correct? Other recommendations would be greatly appreciated, as well. Thanks for the help. One add'l question: can I use btrfs for cache (in case I want to create a pool later) while using XFS for data, or does that cause any problems? tower-diagnostics-20210110-1407.zip

-

OK, thanks. I think I will just not tempt fate and leave everything alone until the new controllers get here. That should be an adventure, as well. O_o

-

So, one last question. Now that the repair has proceeded and lots of items have been placed in Lost + Found (indicating, I think, that there was a lot of corruption), should I bother to try mounting it? Or will that screw things up when I go and try to rebuild the disk? My thinking here is that if it is actually mountable, then unraid will think that its current state is what it's supposed to be and adjust parity accordingly, thus giving me a screwed up rebuild. As it is, it appears that the emulated disk works OK.

-

That's what I thought. I can wait a few days for the controllers to arrive. Here goes nothing. Thanks again.

-

So, I attempted to run xfs_repair and got this output; seems like the disk is really borked. However, is there a way to attempt mounting a single disk while in maintenance mode, or is starting the array and having it choke on this disk enough to just jump to ignoring the log while repairing? I am too *nix illiterate to know this.

root@Tower:~# xfs_repair -v /dev/md17
Phase 1 - find and verify superblock...
- block cache size set to 349728 entries
Phase 2 - using internal log
- zero log...
zero_log: head block 449629 tail block 449625
ERROR: The filesystem has valuable metadata changes in a log which needs to be replayed. Mount the filesystem to replay the log, and unmount it before re-running xfs_repair. If you are unable to mount the filesystem, then use the -L option to destroy the log and attempt a repair. Note that destroying the log may cause corruption -- please attempt a mount of the filesystem before doing this.

Also, 2 new controllers are on the way. If this attempt to repair disk 17 is unsuccessful, would the best course of action be to shut down the array, install the new controllers, and then try to rebuild disk 17 to a new drive? Or would it be OK to do that now?
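The decision the xfs_repair message describes (try a mount first to replay the log, and only fall back to `-L` if the mount fails) can be written down as a small check on saved output. This is just an illustrative sketch: the function name is hypothetical, and it only looks for the wording quoted in the post above, not for every message xfs_repair can produce.

```python
# Wording taken from the xfs_repair output quoted in the post
DIRTY_LOG_MARKER = "valuable metadata changes in a log which needs to be replayed"


def log_needs_replay(xfs_repair_output: str) -> bool:
    """True if the saved output contains the dirty-log error quoted above."""
    # Collapse whitespace first, since terminal output may wrap mid-sentence
    flat = " ".join(xfs_repair_output.split())
    return DIRTY_LOG_MARKER in flat


sample = """Phase 2 - using internal log
ERROR: The filesystem has valuable metadata changes in a log which needs to
be replayed. Mount the filesystem to replay the log, and unmount it before
re-running xfs_repair."""

if log_needs_replay(sample):
    print("Try mounting to replay the log; use -L only if the mount fails.")
```

The printed advice simply restates what the tool itself says: `-L` discards the log and may cause corruption, so it is the fallback rather than the first step.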

-

Thanks again, have a great day.

-

Thanks for the prompt reply. As always, Johnny, you are a superb asset to the forums. I am going to replace the controllers ASAP. I currently have a SuperMicro X8SIL-F motherboard. Looks like I'm limited to x8 PCI-e cards (but it seems the SASLP are x4 cards). Any recommendations for direct replacements for the SASLP controllers? Seems like the "LSI 9211-8i P20 IT Mode for ZFS FreeNAS unRAID Dell H310 6Gbps SAS HBA" might be a reasonable replacement; I'm just a bit fuzzy on the bus/lane deal. Are there more stable, proven replacements that have the SFF-8087 SAS connector so I can just plug and play?

-

Here are the post-reboot diagnostics. Also, the disabled disk has a note that it is "unmountable: no file system". Not sure if that is SOP for disabled disks, or not. tower-diagnostics-20200625-1540.zip