Everything posted by sansoo22

-

Great set of dockers. I have my sender on an Intel NUC running pihole in a docker and my receiver on my unRAID server. I should probably flip them for data redundancy's sake since I run a 3-disk SSD cache pool on unRAID, but whatever, I did it backwards. I followed @geekazoid's instructions with one caveat. For whatever reason I had to create my symlinks first and then map those as volumes. If I did it the other way around, the sender kept syncing the actual symlink to the receiver, which of course doesn't work because of mismatched directory structures between unRAID and Ubuntu Server 22.04. Other than that the setup was pretty straightforward and easy.

-

@MsDarkDiva - If you're on Win10 you might give Visual Studio Code a try for editing config files, especially anything YAML. VS Code is free, easy to set up, and for YAML it does cool things like auto-indent 2 spaces whenever you hit the tab key. It would have even highlighted the word "password" in your example above as an error.
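If installing an editor isn't an option, a quick check along the same lines can be scripted. YAML does not allow tab characters for indentation, so the minimal sketch below just flags lines whose leading whitespace contains a tab. The function name and the sample config are made up for illustration, and it is not a full YAML validator.

```python
def find_tab_indented_lines(yaml_text: str) -> list[int]:
    """Return 1-based line numbers whose leading whitespace contains a tab."""
    bad_lines = []
    for number, line in enumerate(yaml_text.splitlines(), start=1):
        # Everything before the first non-space, non-tab character is the indent.
        indent = line[: len(line) - len(line.lstrip(" \t"))]
        if "\t" in indent:
            bad_lines.append(number)
    return bad_lines


# Hypothetical config snippet: the third line is indented with a tab.
sample = "server:\n  host: example\n\tport: 8080\n"
print(find_tab_indented_lines(sample))
```

Any line number it reports is a place where the tab should be swapped for spaces.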

-

I don't need support. I just wanted to say thanks for this container and its continuous maintenance. I started with Aptalca's container then switched to the linuxserver.io container. It's been close to 3 yrs of rock solid performance. I often forget it's even running. I thought about switching to the Nginx Proxy Manager for the nice GUI and the fact that the nginx syntax makes me commit typo errors for whatever reason. However the lack of fail2ban in that container has kept me away. I'm so glad you guys decided to bake that in. You can watch what I assume are bots getting blocked daily and it's a nice peace of mind. This container works great with my firewalled "docker" VLAN using Custom br0. Between the firewall and fail2ban I feel my little home setup is about as secure as I can get it. As a fellow dev I know we don't always hear a peep from users in regards to appreciation for our hours of hard work. So thanks again for keeping this container going. I really do appreciate it.

-

It's been a couple months so not sure if you still need help, but you can get this error if mongo was restarted while Rocket Chat was still running. It happened to me a few times. The other thing I did, since I was running a single node replica set, was run rs.slaveOk() on my primary node. Making sure Rocket Chat is shut down while fiddling with mongo, plus the above command, has kept me going for almost a week without that error.

-

My steps were a bit different to get this all working with authorization set up in MongoDB. I used the same mongo.conf file with one adjustment to get authorization working.

First make sure to shut down your RocketChat docker if it's running. It will get angry when we do the rest of this if you don't.

Next we need to create some users in MongoDB. Each command below needs to be run in the terminal of your MongoDB container separately.

    // switch to admin db
    use admin

    // first user
    db.createUser({ user: "root", pwd: "somePasswordThatIsReallyHard", roles: [{ role: "root", db: "admin" }] })

    // second user
    db.createUser({ user: "rocketchat", pwd: "someOtherReallyHardPassword", roles: [{ role: "readWrite", db: "local" }] })

    // switch to rocketchat db
    use rocketchat

    // create local db user
    db.createUser({ user: "rocketchat", pwd: "iCheatedAndUsedSamePwdAsAbove", roles: [{ role: "dbOwner", db: "rocketchat" }] })

Now we need to modify the mongo.conf file

    // this
    #security:

    // should be this
    security:
      authorization: "enabled"

Next bounce the MongoDB docker and it should start up with authorization enabled. You can check by running the "mongo" command in terminal. If the warnings about authorization being off are gone then you have it set. You could also run "mongo -u root -p yourRootPwd" to verify.

Finally we need to modify the connection strings in the RocketChat docker

    // MONGO_URL:
    // this is the rocketchat user we created in the rocketchat database
    mongodb://rocketchat:[email protected]:27017/rocketchat?replicaSet=rs01

    // MONGO_OPLOG_URL
    // this is the rocketchat user we created in the admin database
    mongodb://rocketchat:[email protected]:27017/local?authSource=admin&replicaSet=rs01

Fire up your RocketChat instance and hope for the best. If it works send a middle finger emoji to a random user and go relax. If not, sorry I couldn't help out.
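One thing that can trip up the connection strings: if a password contains characters like "@" or "/", they need to be percent-encoded inside a mongodb:// URL. Here is a rough sketch of building both strings that way. The helper name, the host `mongo.example.lan`, and the password are placeholders I made up, not values from my setup.

```python
from urllib.parse import quote_plus, urlencode


def build_mongo_url(user, password, host, database, port=27017, **options):
    """Assemble a mongodb:// URL, percent-encoding the credentials."""
    credentials = f"{quote_plus(user)}:{quote_plus(password)}"
    query = f"?{urlencode(options)}" if options else ""
    return f"mongodb://{credentials}@{host}:{port}/{database}{query}"


# Hypothetical host and password, for illustration only.
mongo_url = build_mongo_url(
    "rocketchat", "p@ss/word", "mongo.example.lan", "rocketchat",
    replicaSet="rs01",
)
oplog_url = build_mongo_url(
    "rocketchat", "p@ss/word", "mongo.example.lan", "local",
    authSource="admin", replicaSet="rs01",
)
print(mongo_url)
print(oplog_url)
```

The two printed values are what would go into MONGO_URL and MONGO_OPLOG_URL respectively.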

-

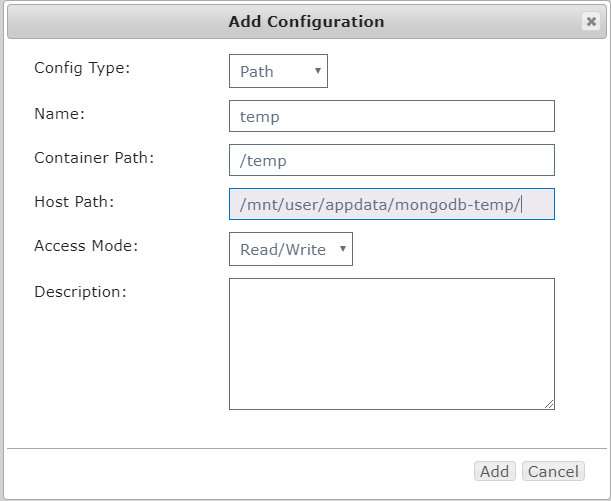

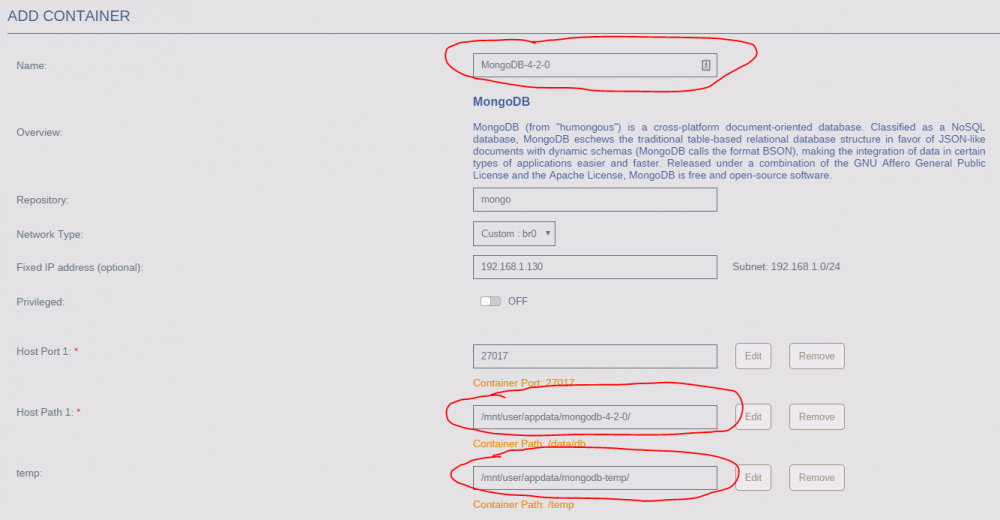

Posting my procedure for upgrading the MongoDB image from 3.6 to 4.0/4.2 because it was a pain to figure out.

Before you begin it is highly recommended to open a terminal window to your current MongoDB instance and run...

    mongod --version

Write that version number down somewhere. Mine was 3.6.14 and corresponds with a docker image tag if this whole process goes tits up and you need to revert.

Back up your old MongoDB volume:
1. Open a terminal into unRAID
2. Run the following command assuming you have a cache drive. If not, modify your path to wherever your appdata is stored

    cp -avr /usr/mnt/appdata/mongodb /usr/mnt/appdata/mongodb-bak

Mount a new volume to your current MongoDB container:
1. Create a new directory in /usr/mnt/cache/appdata called mongodb-temp
2. Open your container's configuration
3. Click the + to add new path, variable, etc
4. Fill out the form as follows and click add
5. Save your docker configuration

Dump current MongoDB database to temp directory:
These next steps assume your MongoDB instance is running on the default port and you mounted a volume named temp.
1. Open a terminal window to your MongoDB container
2. Run the following command

    mongodump -v --host localhost:27017 --out=/temp

The -v flag is for verbose so you should see a bunch of stuff scroll by, and when it's done it should report successful on the last line printed in the terminal.

Upgrading MongoDB!!!
This next step can be done one of two ways. The first method is to nuke your current container and install brand new. The second method requires you to have the "Enable Reinstall Default" setting set to "Yes" in your Community Application Settings. This write-up will not cover turning that setting on.

Upgrade Option 1:
1. Open the MongoDB container menu and choose remove
2. Un-check "also remove image"
3. Click Yes and watch it go bye-bye
   a. Delete the contents of your old /appdata/mongodb directory
4. Go to Community Apps and re-install MongoDB
5. Remember to add the /temp path to this new container just like in the screen shot above
6. Skip down to Restoring MongoDB data

Upgrade Option 2:
This is the one I chose to use because it left my old MongoDB instance completely intact.
1. Shut down your current MongoDB container
2. With "Enable Reinstall Default" turned on in Community Apps go to your "Installed" apps page
3. Click the first icon on the MongoDB listing to reinstall from default
4. Be sure to CHANGE the NAME and HOST PATH 1 values to create a new duplicate container. And don't forget to remap the /temp volume so we can do the restore later. If using custom br0 feel free to use the same IP address since your other container is now dormant
5. Click Apply to create the container
6. Move on to Restoring MongoDB data

Restoring MongoDB data:
1. Open a terminal window into your current running MongoDB instance
2. Enter the following command

    mongorestore -v --host localhost:27017 /temp

3. Assuming you mounted the volume named /temp and everything went ok you should see a giant list of stuff scroll by the terminal window. At the bottom will be a report of successful and failed imports
4. You can now leave the /temp volume mapped for later use or remove it if you like

I created this process for myself last night after attempting to upgrade from 3.6 to 4.2 and running into a pesky "Invalid feature compatibility version" error. You can read about that here. I tried all the steps in the mongo documentation but being installed in a docker made things a bit trickier. I found it easier just to back up, dump, recreate the image, and restore my data.
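For anyone who would rather script the dump and restore than type them by hand, here is a minimal sketch that only assembles the two commands used above. The function names are mine, and the defaults assume the same default port and /temp mount as this write-up.

```python
import shlex


def mongodump_command(out_dir="/temp", host="localhost:27017"):
    """Argument list for the verbose dump used in this write-up."""
    return ["mongodump", "-v", "--host", host, f"--out={out_dir}"]


def mongorestore_command(dump_dir="/temp", host="localhost:27017"):
    """Argument list for the verbose restore used in this write-up."""
    return ["mongorestore", "-v", "--host", host, dump_dir]


# Print the commands for review instead of running them.
print(shlex.join(mongodump_command()))
print(shlex.join(mongorestore_command()))
```

Each list could be handed to `subprocess.run(..., check=True)` from inside the MongoDB container, but printing first makes it easy to double check the paths before anything touches your data.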

-

Recently I was given an i7-4790 for free. My current unRAID box is running an FX-8320e. My heaviest docker is currently Emby. It doesn't transcode often but when it does the CPU gets hammered pretty hard. I don't run any VMs at the moment but I would like the option to spin up at least 1 x Win10 and 1 x Ubuntu VM and have them run relatively smoothly. I don't have a socket 1150 board in the bone yard so I would need to purchase that, and I'm unclear if I will get much net gain with the i7. I know all of the benchmarks out there say it's a much better CPU for gaming but I haven't been able to find anything regarding virtualization. Any additional information would be greatly appreciated before I go out spending money I may not need to spend. Full docker list and current system specs listed below.

Full Docker List:
- Emby
- Rocket Chat
- nginx
- MongoDB
- MariaDB
- NextCloud
- Unifi
- Pihole (backup DNS)
- MQTT

Full unRAID spec:
- CPU: FX-8320e w/ Corsair H110i v2
- RAM: 32GB DDR3
- HDD: 5 x Toshiba 3TB P300
- SSD: 2 x Samsung 860 EVO
- SAS: HP H220 in IT Mode
- PSU: Seasonic X850
- GPU: GT210(?)

-

Finally got around to getting the HP H220 card installed. My girlfriend is a teacher and was using my Emby server to binge watch Criminal Minds before starting school again. I've learned not to upset her in that last week or two before school. Looks like the "UDMA CRC Error count" issue can finally be put behind me.

I replaced the Seagate drive that had a couple "187 Reported Uncorrect" errors on it as well. I thought it had died because there were about 200 write errors to it when trying to spin up a VM and unRAID disabled it. So I put the new drive in, let it rebuild, and the same thing happened again. Disk 2 was disabled by unRAID and I had to remove it, start the array, stop the array, and it's currently rebuilding onto itself. It's a brand new disk that I ran a full extended SMART test on before installing, with 0 errors. I even ran another short test from within unRAID when it was disabled and it's all green. So something with that old VM that used to have the 2 1060s assigned to it is so borked it's causing a disk to have a ton of bad writes. I was quite shocked to see this behavior.

Anyway the original point of this topic is solved and the answer is don't use onboard SATA. Hit up eBay and get a SAS card.

-

I'm in the process of building a landing page that has built-in authentication and Google reCAPTCHA support. I need to port my custom PHP framework to PHP7 and it relies on Composer for package management. My goal is to build something simple yet secure with bcrypt hashing and support for MySQL or MongoDB. If all goes well I will be hosting the code on GitHub for others to use.

Planned features:
- Authentication with username/password
- MongoDB and MySQL support
- Admin CMS for adding links and icons
- Landing page that looks similar to the Chrome Apps view
- User management
- Activation email support (not sure how this will work just yet)

Nice to haves:
- Google account linking with 2 factor authentication

Probably some lofty goals but being a developer by trade I already have most of the code ready to go. Just need help getting Composer set up. I'm not sure which install method would be best suited for a docker environment. Should I install directly to this container or stand up a Composer docker and map its volumes to letsencrypt? Any help would be greatly appreciated.

-

Can't seem to catch a break right now. Was going to install the new LSI card but then woke up to a new error of "187 Reported Uncorrect" on disk 2. That popped during a parity check and I don't have a spare drive at the moment, so I'm waiting on a new drive to show up before I tear the server down. The count on that error is 2 and there are no bad sectors reported on the drive, but I don't want to run the risk of a drive going bad while I'm swapping around components.

-

Card is here but still waiting on cables. They said they would be here today but USPS is a crap shoot in my city. Looks like they sent them to the wrong hub and they are on their way to the correct hub and may show up tomorrow. So hopefully this weekend I can get this thing installed and see how it does. Just wanted to say I haven't abandoned the post. Just (im)patiently waiting for USPS to figure out logistics.

-

Using the documentation here https://github.com/diginc/docker-pi-hole I managed to 50% get IPv6 to work. I say 50% because so far it has only been tested and confirmed working on a Windows 10 PC. I assume Linux will work as well since you can configure DNS entries for it.

Steps:
1. Make sure your unRAID server's network settings have IPv4 + IPv6 set
2. Add a new variable to the piHole docker configuration. I got the IPv6 from my router and just copy pasted it into the Value field.
3. Save the new field
4. Click apply and let the container rebuild
5. Edit your network connection in your PC as follows for "Internet Protocol Version 6"

Unfortunately I can't get my router to use this new IPv6 address as its default IPv6 DNS and only clients with configurable DNS entries seem to work right now.
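Since step 2 relies on copy pasting an address from the router, it may be worth a quick sanity check that the pasted text really is a bare IPv6 address (no prefix length, not an IPv4 address). A minimal sketch using Python's standard `ipaddress` module follows; the function name and the sample addresses are just for illustration.

```python
import ipaddress


def is_ipv6_address(value: str) -> bool:
    """True if the text parses as a single IPv6 address without a /prefix."""
    try:
        ipaddress.IPv6Address(value.strip())
    except ValueError:
        return False
    return True


# Documentation-range sample addresses, not real ones from my network.
for candidate in ["2001:db8::1", "2001:db8::1/64", "192.168.1.10"]:
    print(candidate, is_ipv6_address(candidate))
```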

-

@Frank1940 I haven't overclocked anything in the server except for the video cards in the VM that was running them. I'm not much of an overclock guy anyway. I prefer peace of mind in all my systems. I'm not too sad to see the mining operation go. It was merely an experiment to see if a server could pay its own utilities. It was a success until the brutally hot summer we are having in the Midwest on top of the market for crypto falling apart.

@Stan464 thanks for the heads up. The card and cables just shipped out today. My case stays really cool when the dual 1060s aren't running. Even then the AIO liquid cooler does a great job with the CPU. The Fractal Design XL R2 is a huge case with plenty of space around components. If the LSI card gets hot I thought about putting in one of these: https://www.amazon.com/GeLid-SL-PCI-02-Slot-120mm-Cooler/dp/B00OXHOQVU/ref=cm_cr_arp_d_product_top?ie=UTF8

-

Thanks @johnnie.black for the confirmation. Looks like the mining operation in this server has come to an end. May keep one of the 1060s in for a dedicated gaming VM. The Shield TV can do steam link now so maybe something to play with for some lighter gaming in the living room.

-

I think I saw that doc from another post. I've checked and all my drives have a firm click when inserting the locking cables. I took one of the Samsung drives out when I was switching ports and checked how firmly the cable is seated. It won't come out with the locking cables if you pull on it. I'm sure if you pulled hard enough it would. Anyway, still getting errors, so port switching didn't work.

Hit up eBay and ordered one of these https://www.ebay.com/itm/162862201664. It's an HP flashed to latest LSI firmware in IT Mode. Also picked up plenty of breakout cables, both locking and non-locking. I couldn't confirm if the card is PCIe 2.0 compatible but I'm rolling the dice on it. Next build will be PCIe 3.0 so I'm trying to be a little future proofed. If it isn't, the LSI 9211-8i in IT Mode is readily available on eBay from reputable sellers.

-

Finally had time to shut down the server and swap SATA cables around. Moved the cache disks to the 3 and 4 ports on the motherboard. For a test I copied one of my dev projects over to a share that uses cache. The project folder with all of its node packages contained 18,792 files. With that test I saw a +2 error count on Cache 1 and a +1 error count on Cache 2. As a follow-up I then deleted that directory and saw no increase in error counts. That should roughly simulate what the Mongo database will hit the disk with in a day when Rocket Chat is active during normal business hours. While I'd still like to see a 0 rise in this error count I know it's not a data threatening error. I guess call it solved for now. Next step is to find an LSI card to see if it rules out any drive specific problems.
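If you want to track this kind of before/after delta without eyeballing the GUI, something like the sketch below could work on saved `smartctl -A` output. It assumes the usual ten-column attribute table where the CRC error count is attribute ID 199 and the raw value is the last column. The sample rows and their numbers are made up for illustration, not taken from my drives.

```python
from typing import Optional


def crc_error_count(smart_attributes: str) -> Optional[int]:
    """Return the raw value of SMART attribute 199 (CRC error count), if present."""
    for line in smart_attributes.splitlines():
        fields = line.split()
        # Match on the attribute ID since vendors name the attribute differently.
        if len(fields) >= 10 and fields[0] == "199":
            return int(fields[9])
    return None


# Illustrative rows in the layout printed by `smartctl -A`; values are invented.
before = "199 UDMA_CRC_Error_Count 0x003e 100 100 000 Old_age Always - 97"
after = "199 UDMA_CRC_Error_Count 0x003e 100 100 000 Old_age Always - 99"
print(crc_error_count(after) - crc_error_count(before))
```

Capture the attribute table before the copy test and again afterwards, and the difference is the number of new CRC errors the test caused.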

-

I had looked at getting one but was never able to find which models were best with unRAID. So instead I turned my unRAID machine into a mining rig. I figured if it was on 24/7 I may as well slap in a couple 1060s and have it make some money for me. If you are familiar with that market at all you know that mining on a small scale isn't really profitable anymore. Looks like it might be time to hit up eBay to sell off the 1060s and invest those proceeds in an LSI card. Although I do loathe the scammer gauntlet one must traverse in order to sell anything on eBay anymore. Tonight I will be taking unRAID offline to switch the cache drives to different ports and will report back how that works out. Any advice on keeping the LSI card cool in a normal tower case? I've read that most of these were designed for forced air rack mounted servers.

-

Thanks @Frank1940. I was wondering if the last 2 ports were the cause of the problem after seeing in the BIOS that they can be configured differently than the others. Since my initial post Cache 1 = 106 errors and Cache 2 = 102 errors. So something with turning off the eSATA port made a pretty big difference. You may have just found the problem for me. I've done some testing with large single file reads/writes and it doesn't seem to be causing any errors. While I still don't like the idea of having a couple suspect ports, if they are assigned to my 2 large media disks it won't hurt anything. Like you said it's not fatal and only hampers performance. Emby isn't that fast to begin with so I doubt I would ever notice. Is there anything I need to know about moving disks / ports around? Will unRAID even notice if I move them around? Or should I just follow the process I did on my last mobo upgrade and screenshot the disk assignments in the array and ensure all the disks are assigned to the same slots? Thanks again for the help.

-

After using this link http://lime-technology.com/wiki/Replace_A_Cache_Drive to replace my cache drives I started getting quite a few CRC error count warnings. I had never seen these before on any drives. And it is still only affecting the cache drives. I have followed just about every post I can find on this error about replacing SATA cables and rerouting them. I am on my third set of cables and they are now nowhere near any power cables. The new SSD cache pool has been running for 14+ hours now and both drives are sitting around 97 to 100 on the CRC error count. Originally they were connecting at SATA 1 speeds even though I had verified SATA 3 cables. I turned off the onboard eSATA port in the BIOS, booted unRAID, and now have 6 gig speeds. This slowed down the number of errors but hasn't fully resolved my issue.

System:
- unRAID Version: 6.5.3
- Gigabyte GA-990FX-UD3 R5 motherboard
- AMD FX8320E CPU
- 32GB Crucial Ballistix DDR3 RAM
- 3 x Seagate 3TB 7200rpm drive
- 1 x Seagate 2TB 7200rpm drive
- 2 x Samsung 500GB 860 EVO SSDs
- 1 x GeForce 210 GPU
- 2 x Nvidia 1060 GPU
- Seasonic X-850 850 watt PSU

My old cache drives were Toshiba OCZ TR150 120GB SSDs. They don't seem to report the CRC error count. I put one in an external enclosure and ran CrystalDiskInfo on my desktop and this code is present in the report. So it's quite possible I've had this error for a long time and didn't know about it.

Things I have tried:
- Changed SATA cables 3 times now
- Rerouted SATA and power cables to keep them at least 1/2 inch apart
- Turned off eSATA port in mobo and for whatever reason this gave me SATA3 speeds

Things I haven't done yet:
- Update BIOS on the motherboard
- Move MongoDB off of the cache drives since it is the database for a Rocket Chat instance and is very chatty with disk I/O

Here is my full diagnostic file if this helps. If I missed any helpful info in my post let me know and I will try to provide it ASAP. Thanks.

tower-diagnostics-20180717-1432.zip

-

[Plugin] CA Docker Autostart Manager - Deprecated

sansoo22 replied to Squid's topic in Plugin Support

Just updated to 6.5.3 and settings are still not being saved. I uninstalled and reinstalled after the update just to be certain.

/flash/config/plugins/ca.docker.autostart/settings.json

    [
      {
        "name": "MongoDB",
        "delay": 5
      },
      {
        "name": "Rocket.Chat",
        "delay": " ",
        "port": "",
        "IP": false
      }
    ]

The value I entered for port was 27017 and the delay was 90. Neither appears to have been saved. Not sure what other troubleshooting steps need to be taken. Thanks

-
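To make the symptom easier to spot in a bigger settings file, here is a small sketch that lists which fields came back blank for each container. I don't know the plugin's actual schema, so treating an empty or whitespace-only string as "not saved" is purely my assumption based on the file above, and the function name is made up.

```python
import json


def unsaved_fields(settings_json: str) -> dict:
    """Map container name to the fields holding empty or whitespace-only strings."""
    problems = {}
    for entry in json.loads(settings_json):
        blank = [
            key for key, value in entry.items()
            if isinstance(value, str) and not value.strip()
        ]
        if blank:
            problems[entry.get("name", "?")] = blank
    return problems


# Same content as the settings.json shown above.
settings = '''[
  {"name": "MongoDB", "delay": 5},
  {"name": "Rocket.Chat", "delay": " ", "port": "", "IP": false}
]'''
print(unsaved_fields(settings))
```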

[Plugin] CA Docker Autostart Manager - Deprecated

sansoo22 replied to Squid's topic in Plugin Support

Yep, make change, hit apply, get dialog confirming changes were saved. Reload page and settings are gone. I'm still on 6.3.3 with an update scheduled in the next couple days. Ironically I was attempting to install this plugin to help manage startup of Dockers after the upgrade. I will report back once I'm on 6.5.3 to see if that clears it up.

-

[Plugin] CA Docker Autostart Manager - Deprecated

sansoo22 replied to Squid's topic in Plugin Support

Having a similar problem. Every time I save the configuration and reload the page, or navigate away and then back, all my settings are missing. I'm not receiving any errors when I save. I have attached a diagnostic zip if it helps.

tower-diagnostics-20180702-1117.zip