Posts posted by Arbadacarba

-

-

What is your network Type set to? Host? or something else?

If you have it set to have its own IP address you must "Enable Bridging" in network settings.

-

I think I found a solution... Copying a 25GB file to the ram drive and then copying that file to each of my drives.

I figure this is giving me a fairly consistent view of the write performance of each of my drives:

Spinning Rust Drives:

rsync --progress --stats -v Test.mkv /mnt/disk1/Backup

25,775,133,173 100% 96.26MB/s 0:04:15 (xfr#1, to-chk=0/1)

100,512,382.22 bytes/sec

rsync --progress --stats -v Test.mkv /mnt/disk2/Backup

25,775,133,173 100% 105.59MB/s 0:03:52 (xfr#1, to-chk=0/1)

110,412,959.48 bytes/sec

rsync --progress --stats -v Test.mkv /mnt/disk3/Backup

25,775,133,173 100% 105.43MB/s 0:03:53 (xfr#1, to-chk=0/1)

110,412,959.48 bytes/sec

rsync --progress --stats -v Test.mkv /mnt/disk4/Backup

25,775,133,173 100% 100.77MB/s 0:04:03 (xfr#1, to-chk=0/1)

105,445,505.27 bytes/sec

rsync --progress --stats -v Test.mkv /mnt/disk5/Backup

25,775,133,173 100% 87.66MB/s 0:04:40 (xfr#1, to-chk=0/1)

91,585,882.91 bytes/sec

rsync --progress --stats -v Test.mkv /mnt/disk6/Backup

25,775,133,173 100% 93.33MB/s 0:04:23 (xfr#1, to-chk=0/1)

97,842,224.06 bytes/sec

Single Cache SSD:

rsync --progress --stats -v Test.mkv /mnt/cache/Backup

25,775,133,173 100% 604.45MB/s 0:00:40 (xfr#1, to-chk=0/1)

636,578,420.72 bytes/sec

NVME:

rsync --progress --stats -v Test.mkv /mnt/config/Backup

25,775,133,173 100% 1.78GB/s 0:00:13 (xfr#1, to-chk=0/1)

1,663,317,808.97 bytes/sec

I'll be combining the 2 SSDs together and testing them again.
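For anyone wanting to sanity-check figures like these, the rate can be recomputed from the byte count and the elapsed time that rsync prints. This is only a rough sketch: `mib_per_sec` is a helper name made up for this example, and it reports MiB/s on the assumption that rsync's MB/s figure is 1024-based. The elapsed time is rounded to whole seconds, so small differences from rsync's own number are expected.

```shell
# mib_per_sec BYTES ELAPSED
#   BYTES   - byte count as printed by rsync (commas allowed)
#   ELAPSED - elapsed time in h:mm:ss form
# Prints the average rate in MiB/s (hypothetical helper, not part of rsync).
mib_per_sec() {
    awk -v b="$1" -v t="$2" 'BEGIN {
        gsub(/,/, "", b)                      # strip thousands separators
        split(t, p, ":")                      # h, mm, ss
        secs = p[1] * 3600 + p[2] * 60 + p[3]
        if (secs <= 0) exit 1                 # avoid dividing by zero
        printf "%.2f\n", b / secs / 1048576
    }'
}

# Example with the disk1 line from the post:
# mib_per_sec "25,775,133,173" "0:04:15"    # prints 96.40
```

That lands close to the 96.26MB/s rsync showed for disk1, which suggests the progress figure and the elapsed time agree with each other.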

-

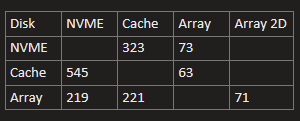

Take a look at my results from my setup... I'm told they are about what to expect:

I'm just trying to decide on some changes to mine but I'm generally pretty happy with the speeds I'm getting

-

Trying to do a little further testing...

I want to repeat this process but get as isolated results as I can.

My previous tests copied files from one drive to another... What if I stored a file on the ram disk (say 20GB), then copied it using the above methods to each storage type?

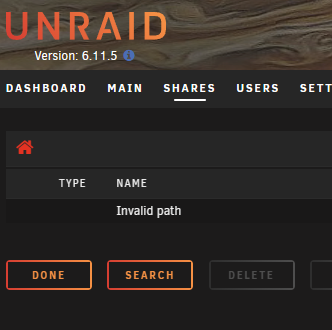

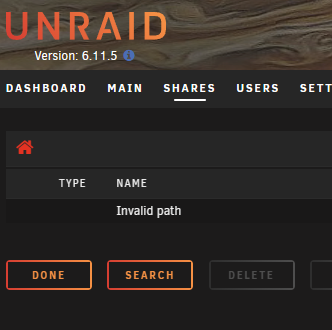

Since I am unfamiliar with the available tools, I am trying to make a share available on the ram disk (/dev/shm), but I'm having no luck. I tried creating a softlink (ln -s /dev/shm/test/ /mnt/user/Backup/Test2) within another share to a folder at /dev/shm/test, but I get an invalid path error when I try to look in it on the device:

Am I just barking up the wrong tree or am I making a minor mistake somewhere?
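One way to sidestep the share question is to stage the test file on the ram disk from a terminal and copy from there, without exposing /dev/shm as a share at all. A minimal sketch, assuming /dev/shm is RAM-backed and has enough free memory for the file; the function name `make_test_file` and the file name Test.bin are made up for this example.

```shell
# make_test_file DIR SIZE_MIB
#   Writes SIZE_MIB MiB of random data to DIR/Test.bin and prints the path.
#   Hypothetical helper; DIR would be something like /dev/shm/test.
make_test_file() {
    dir=$1
    size_mib=$2
    mkdir -p "$dir" || return 1
    # Random data so compression on the target cannot flatter the result
    head -c "$((size_mib * 1048576))" /dev/urandom > "$dir/Test.bin" || return 1
    echo "$dir/Test.bin"
}

# Example (not run here): a 20GB file, then the same rsync call as elsewhere
# src=$(make_test_file /dev/shm/test 20480)
# rsync --progress --stats -v "$src" /mnt/disk1/Backup
```

Reading from RAM takes the source drive out of the picture, so the number you see should mostly reflect the write speed of the target.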

WHY----------------

I have upgraded my two SSD drives a little to a pair of 4TB Samsung EVO drives... It occurs to me that I might be smarter keeping my system folder on those and moving my Cache to the 2TB NVME drive...

My gaming VM is using another 2TB NVME so I don't need that much raw speed for my pfsense router - Home Assistant Server - and various test machines. But the added size and the fact that they are duplicated would add redundancy that I am currently going without.

Thanks for any help

Arbadacarba

-

Love this idea myself... Though it couldn't work in a Docker container, could it? Why not a plugin in the base OS?

-

I've had similar problems, but it turned out I was causing them by removing Drives physically without dismounting them first.

-

If the helium is there for a reason and it is actually depleted for some reason, how could it not endanger the drive...

I agree with itimpi - RMA the drive if you can.

I think his answer implies that he thinks it could endanger the drive.

-

When you say not simultaneously, does that involve a reboot or just not having the VM running?

-

I'm currently running my Game VM using 5 dedicated cores on my 10850K with a dedicated NVME drive and a 4080 card... If I'm being honest, I don't really game all that often. Between family (I have a 5 year old boy) and work (I run a small IT company) I just have other demands on my time. My Unraid server is used as a Media Server and Home Assistant server far more than for gaming.

Is there any way to free up the 4080 for transcoding or other projects when the VM is not running?

I'm currently passing it through without the use of a "Graphics ROM BIOS" and have it Bound to VFIO at boot...

Thanks

Arbadacarba

-

I'm wondering if anyone has tried accessing a DVD drive in Unraid. I have a LARGE number of disks with various files on them and would like to be able to access them in Unraid. Maybe from within Krusader? Has anyone tried this?

I've done some searching and come up empty (or with so many irrelevant hits that I can't find anything).

I've used Ripper in the past so I know the drive is working, but I want to be able to check disks and copy folders into my archives.

Thanks

-

Have you got a monitor hooked up to the server? You should see its current IP on the login screen... Then you can either temporarily set a computer to an address in the right range, or boot the server with the GUI to reconfigure.

-

If they show up as two different IOMMU groups, you should be able to pass a pair of them through. Have you tried it yet?

I'd be more concerned if they will work properly in pfsense... (I've been hurt before)
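If you want to check the grouping from a terminal, the kernel lists each group under /sys/kernel/iommu_groups. A small sketch that prints one line per device; `list_iommu_groups` is just a name picked for this example, and the optional argument exists only so it can be pointed at a different directory for testing.

```shell
# list_iommu_groups [ROOT]
#   Prints "group N: <pci address>" for every device found under ROOT
#   (defaults to /sys/kernel/iommu_groups). Hypothetical helper.
list_iommu_groups() {
    root=${1:-/sys/kernel/iommu_groups}
    for dev in "$root"/*/devices/*; do
        [ -e "$dev" ] || continue        # nothing matched, or IOMMU is off
        group=${dev#"$root"/}            # drop the leading root path
        group=${group%%/*}               # keep only the group number
        echo "group $group: ${dev##*/}"
    done
}

# Example (not run here):
# list_iommu_groups
```

If the two ports you care about print with different group numbers, that matches the condition above for passing them through.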

-

Could just have the last step of the survey show the complete text of what is uploaded, so there is no question of what is being collected.

-

Really curious if this helps... and if so which direction.

-

+1 Absolutely

-

Adding my vote to this... I have a fair number of drives but still have to scroll to see info in column 1

-

Is it possible you just have kworker going haywire?

-

When I had a similar situation, I ran the experiment of comparing Array Stopping Times with the VM engine shut down and with the Docker engine shut down.

Turned out in my case that the pfsense VM was not shutting down...

I solved the same issue on a Proxmox server by installing the QEMU Guest Agent, and have done so on most of my VMs to facilitate better integration for shutdowns etc.

-

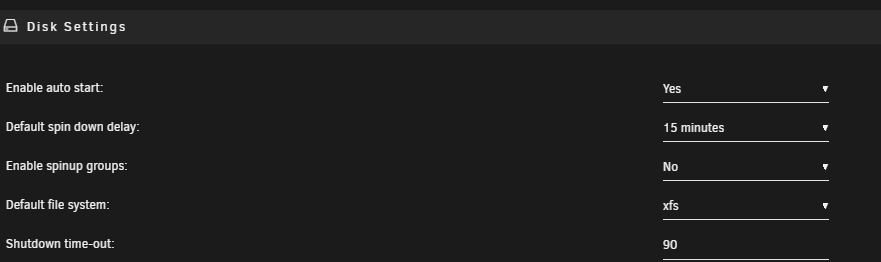

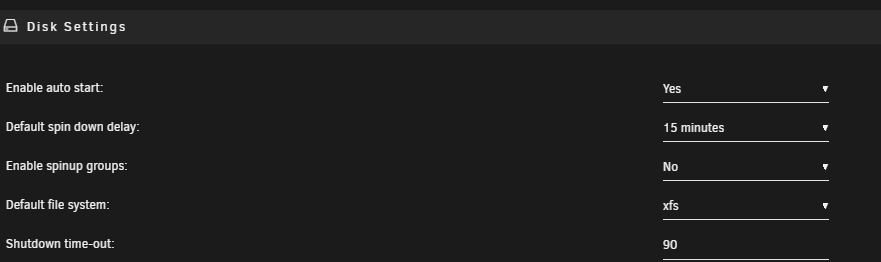

You mentioned that the shutdown, after which it ran a Parity Check, was clean; but you also mentioned that shutting down the array took a very long time... If the array does not shut down in 90 seconds (that's what mine is set for) it will force the issue and do an unclean shutdown.

-

Is your phone asking for permission to share files or is it in "File Transfer" mode?

-

Hmm, I might try that... Would that just affect the transfers to the array?

And would not spin them all up if I were just reading from one or another?

But how about the numbers I'm getting? Are they reasonable?

Thanks JorgeB

Arbadacarba

-

I tried looking for this but get so many confounding variables that I can't find the information I'm looking for.

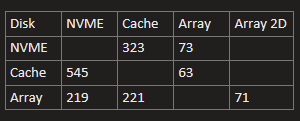

I have 3 pools:

Array - 6 Disks Plus Parity where individual Shares are locked to specific Drives (Television Shows on Disk 1, Music on Disk 3, etc. - So that the movie disk can stay spun down when I'm mostly listening to Music, and my AudioBook disk might be spun down for weeks at a time)

Config - 1 2TB NVME disk with VMs and system files on it (I wish I had named this pool System, but I think I saw a reason not to do so)

Cache - 2 SSDs (Containing my Download folder, and of course, cache)

My question is, what transfer speeds are reasonable if I tell Krusader to Move a large file from my Cache over to the Array (etc.)? I'm assuming the Write Speed of the receiving pool/disk is the deciding factor, and everything seems to be working great, but I just want to know if I am getting the Speeds I should expect.

I assume when I move a file to the array, it goes to the Cache first... I'm trying to run a few tests and have included the results below:

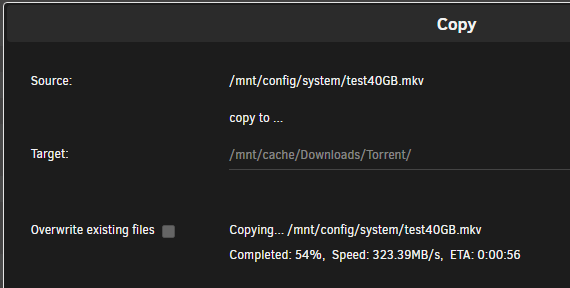

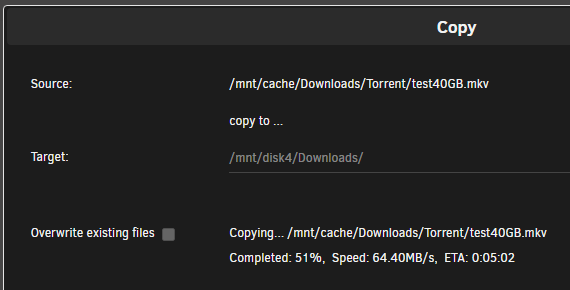

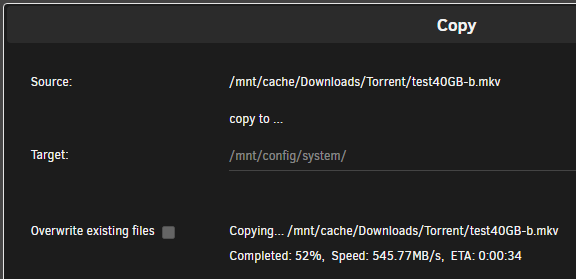

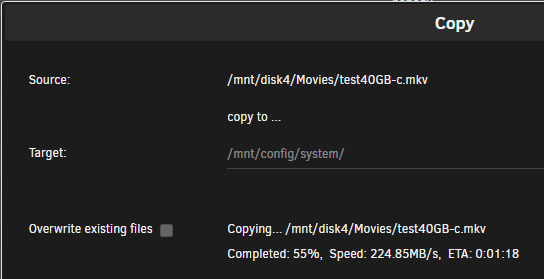

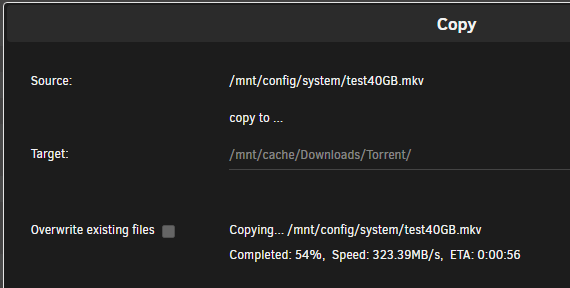

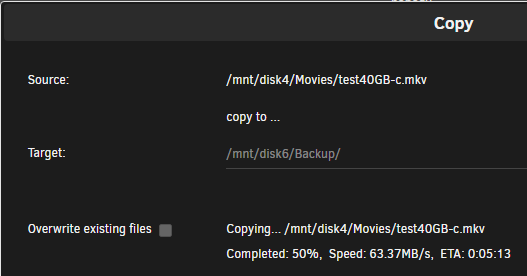

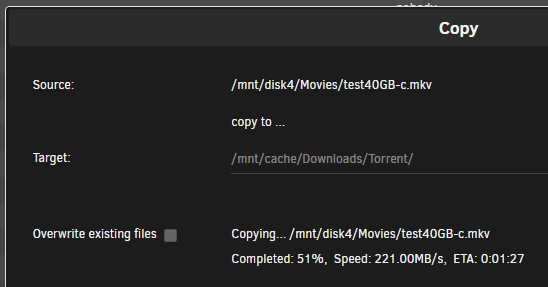

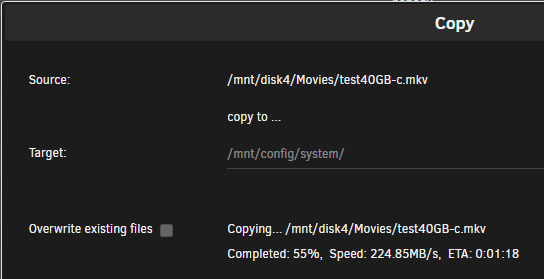

Copy 40GB file from NVME to Cache

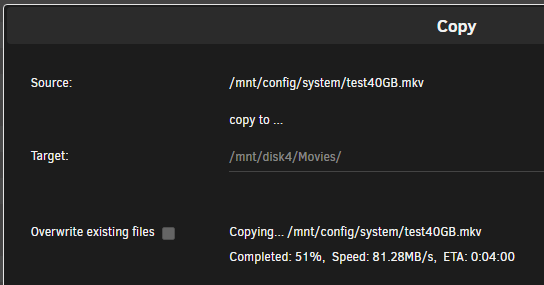

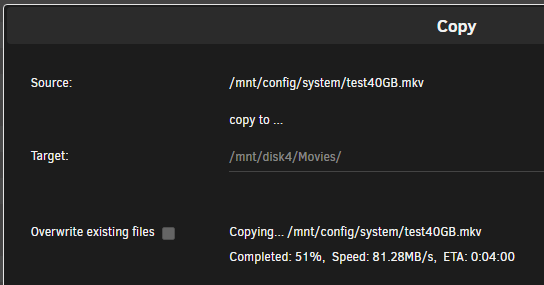

Copy 40GB file from NVME to Array (Non Caching)

Bounced between 60 and 85

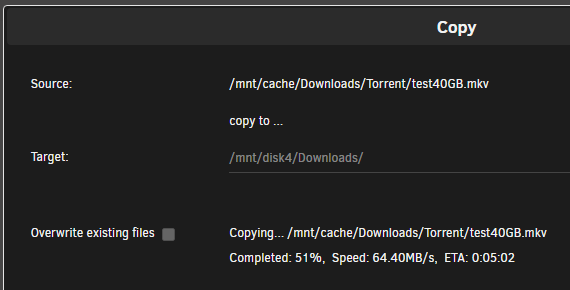

Copy 40GB file from Cache to Array (Non Caching)

Bounced between 50 and 75

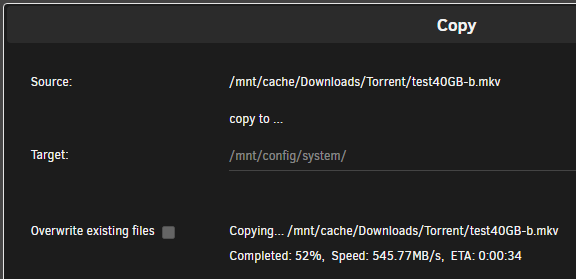

Copy 40GB file from Cache to NVME

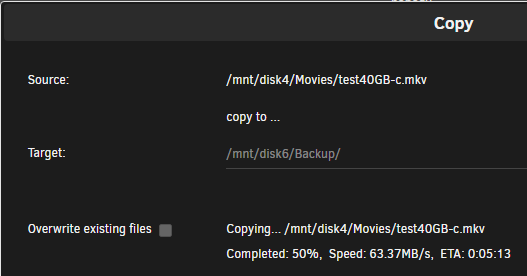

Copy 40GB file from Array (Non Caching) to Array (Non Caching) (2nd Disk)

Bounced between 63 and 81

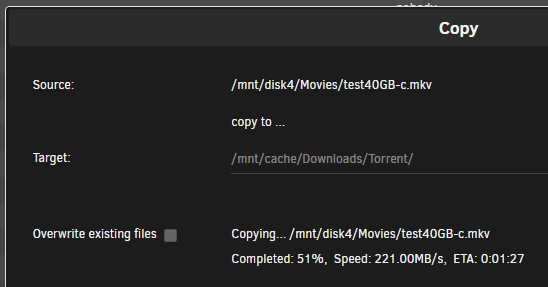

Copy 40GB file from Array (Non Caching) to Cache

Bounced between 218 and 224

Copy 40GB file from Array (Non Caching) to NVME

Bounced between 213 and 224

Methodology:

- I'm running File Copy from inside the Shares page.

- I feel like I'm seeing virtually the same results whether I Move or Copy, but if I run the tests from within Windows, I see a difference between Move and Copy.

- Moving 1 Large File versus Several Small Files

- I took note at about 50% because the numbers had seemingly normalized at that point

Are these numbers about right?

OR have I got something horribly misconfigured?

Again, it is working great... The only concern I have is the occasional CRC Issue on the Cache Disks
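For comparison with the GUI numbers, a similar test can be timed from a terminal. The sketch below copies one file, flushes buffered writes with sync before stopping the clock (otherwise data still sitting in RAM can make the target look faster than it is), and prints an average rate. `timed_copy` is a name made up for this example, and whole-second timing means it is only meaningful for large files.

```shell
# timed_copy SRC DEST
#   Copies SRC to DEST, waits for the data to be flushed, then prints the
#   average rate in MiB/s. Hypothetical helper for rough comparisons only.
timed_copy() {
    src=$1
    dest=$2
    bytes=$(wc -c < "$src") || return 1
    start=$(date +%s)
    cp "$src" "$dest" || return 1
    sync                                  # include the flush in the timing
    end=$(date +%s)
    secs=$((end - start))
    [ "$secs" -gt 0 ] || secs=1           # tiny files finish in under a second
    awk -v b="$bytes" -v s="$secs" 'BEGIN { printf "%.2f MiB/s\n", b / s / 1048576 }'
}

# Example (paths are placeholders, not run here):
# timed_copy /mnt/cache/Backup/Test.mkv /mnt/disk1/Backup/Test.mkv
```

Because this averages over the whole copy, it will not match a reading taken at the 50% mark exactly, but it should be in the same ballpark.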

-

If you were to try and Add a drive does it offer you the drives that are in the system?

Perhaps you need to create a new config and add the drives back into the array?

Should retain the data if you create a new config

-

I've seen this and the solution was to clear the browser cache. Worth a try.

-

Pool Naming

in General Support

Can I name a pool "System" and then store the system files on it? Will that cause any issues?

Thanks