Posts: 264

Posts posted by Iker

-


Hi @Ter, thanks for your feedback; let me take a closer look at this and get back to you. The problem with the "-a" option is that it mounts all datasets, not just the children, which can be a little problematic for other users.
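If only the children of one dataset need mounting, one option is to list them recursively with `zfs list -r` and mount each one individually instead of calling `zfs mount -a`. This is a minimal sketch, not what the plugin does; the function names `children_of` and `mount_children` are hypothetical, and datasets with `canmount=off` or legacy mountpoints are not handled.

```shell
#!/usr/bin/env bash
# Sketch: mount only the child datasets of PARENT, instead of every
# dataset on the system as "zfs mount -a" would. Function names are hypothetical.

# children_of PARENT: read dataset names (one per line) on stdin and
# print only those strictly below PARENT.
children_of() {
  local parent="$1" name
  while IFS= read -r name; do
    case "$name" in
      "$parent"/*) printf '%s\n' "$name" ;;
    esac
  done
}

# mount_children PARENT: list PARENT recursively (parents come before their
# children in this output) and mount each child that is not mounted yet.
mount_children() {
  local parent="$1" ds
  zfs list -H -o name -r "$parent" | children_of "$parent" |
    while IFS= read -r ds; do
      if [ "$(zfs get -H -o value mounted "$ds")" != "yes" ]; then
        zfs mount "$ds"
      fi
    done
}
```

The filter matches on `PARENT/`, so a sibling such as `tank/database` is not picked up when the parent is `tank/data`.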

-

You probably need the feature enabled in your BIOS (Global C-State Control, CPPC, and several other options). I don't recall the exact parameters, and they vary between manufacturers, so a quick Google search is worth a try.

-

Which Unraid version are you using? I don't have the AMD CPU governor enabled; Unraid lags a lot with it, and generally speaking the performance-vs-power trade-off is not very good.

-

I got this working with the following options:

1. Add the blacklisting and P-State parameters to the syslinux config (this is possible from the GUI):

modprobe.blacklist=acpi_cpufreq amd_pstate.shared_mem=1

2. Include the following line in your go file to load the kernel module:

modprobe amd_pstate

3. Reboot and enjoy your new CPU frequency driver.
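After rebooting, it can be worth confirming that both parameters reached the kernel and that the new driver is actually in use. A minimal sketch, assuming the standard `/proc/cmdline` and cpufreq sysfs paths; the function name `check_pstate` is hypothetical, and the file paths can be passed in so it can be tried against test files.

```shell
#!/usr/bin/env bash
# Sketch: confirm the kernel parameters were applied and report which
# CPU frequency driver is active. check_pstate is a hypothetical name.
check_pstate() {
  local cmdline_file="${1:-/proc/cmdline}"
  local driver_file="${2:-/sys/devices/system/cpu/cpu0/cpufreq/scaling_driver}"
  local param driver
  for param in modprobe.blacklist=acpi_cpufreq amd_pstate.shared_mem=1; do
    # Split the command line into one token per line and look for an exact match.
    if ! tr ' ' '\n' < "$cmdline_file" | grep -qxF -- "$param"; then
      echo "missing kernel parameter: $param"
      return 1
    fi
  done
  driver=$(cat "$driver_file" 2>/dev/null)
  echo "active driver: ${driver:-unknown}"
  # amd_pstate reports itself as "amd-pstate" (newer kernels may show "amd-pstate-epp").
  [[ "$driver" == amd-pstate* ]]
}
```

Running `check_pstate` with no arguments checks the live system; a non-zero exit status means either a parameter is missing or another driver (for example `acpi-cpufreq`) is still in charge.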

-

Not returned, but created by a plugin.

Endpoint examples:

- https://unraidserver/Main/ZFS/dataset - accepts PUT, GET and DELETE requests
- https://unraidserver/Main/ZFS/snapshot - accepts PUT, GET and DELETE requests
- https://unraidserver/Main/ZFS/pool - accepts GET and DELETE requests

-

Hi guys, is it possible to develop a plugin with multiple endpoints? I want to expose multiple endpoints for ZFS administration programmatically, but I need help finding a proper way to do this. On the other hand, I'd prefer to avoid creating a separate Docker container for this.
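The endpoint examples in this thread boil down to a table of resources and the HTTP methods each one accepts. A minimal sketch of that table as a lookup, so a handler could reject unsupported methods; the function names, and the use of 200/404/405 as "allowed / unknown resource / method not allowed", are assumptions for illustration, not how Unraid plugins actually route requests.

```shell
#!/usr/bin/env bash
# Sketch: the endpoint/method table expressed as a lookup.
# Paths mirror the example URLs; everything else is hypothetical.
allowed_methods() {
  case "$1" in
    /Main/ZFS/dataset|/Main/ZFS/snapshot) echo "PUT GET DELETE" ;;
    /Main/ZFS/pool) echo "GET DELETE" ;;
    *) return 1 ;;
  esac
}

# route METHOD PATH: print the HTTP status a handler would return.
route() {
  local method="$1" path="$2" methods
  if ! methods=$(allowed_methods "$path"); then
    echo 404
  elif [[ " $methods " == *" $method "* ]]; then
    echo 200
  else
    echo 405
  fi
}
```

Keeping the table in one place means adding an endpoint is a single new `case` branch.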

-

This lazy-load functionality has been on my mind for quite a while now, and it will help a lot with load times in general. That's why I'm focusing on rewriting a portion of the backend so every functionality becomes a microservice; however, given the Unraid UI architecture, it's a bit more complicated than I anticipated. I understand your issue, but please hang in there for a little while.

-

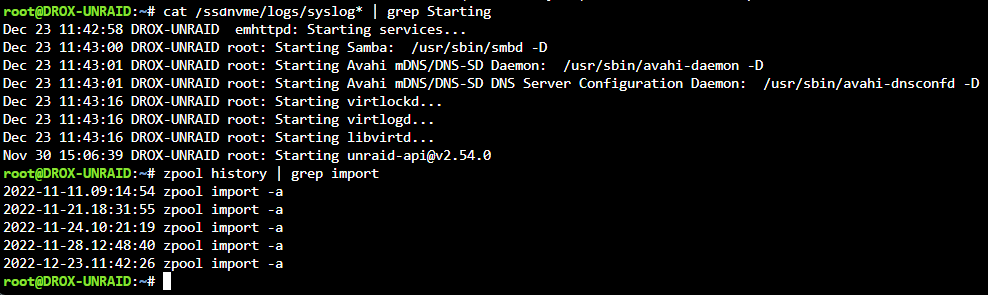

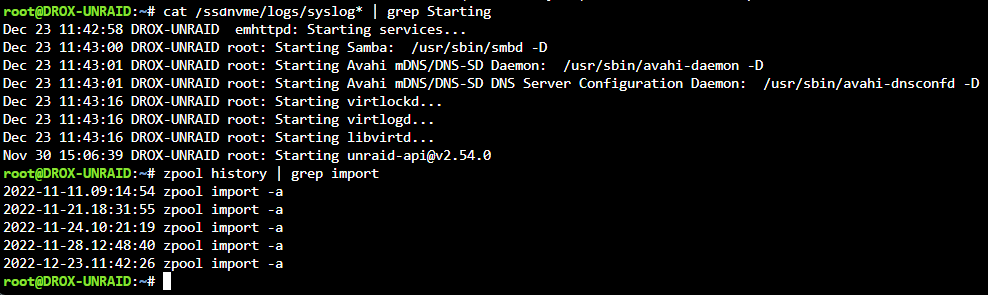

I dug into this issue a little using the logs available on my system and found that the command is issued every time Unraid reboots. I'm not sure if that's your case, @1WayJonny, but it seems to be correct, at least in my case.

-

Hi @1WayJonny, plugin author here. I'm not sure you are in the correct forum, but in case you are, it seems you got a couple of things wrong:

- The plugin doesn't implement any commands related to importing pools; the source code is available, and you can check in the repo that there is no "zpool import" command.

- No actions are performed automatically or in the background by the plugin, besides refreshing the info every 30 seconds (or the interval you configure) while you are on the Main tab.

- The zfs program you correctly identified is the refresh action; it only runs when you interact with the Main tab.

- Something else is messing with your pools. I'm not sure what, but I suggest you uninstall all ZFS-related plugins and then check the command history.
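`zpool history` records the commands that changed a pool (read-only commands such as the plugin's `zfs list` refresh should not appear there), so filtering it for imports shows when and how often a pool was imported. A minimal sketch; the function name `import_events` is hypothetical and `POOL` is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: read "zpool history" output on stdin and keep only the lines
# that record a "zpool import". import_events is a hypothetical name.
import_events() {
  grep -E '^[^ ]+ zpool import( |$)'
}

# Usage on a live system (POOL is a placeholder):
#   zpool history POOL | import_events
```

If imports show up at times that match reboots, something in the boot process (rather than the plugin) is issuing them.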

Best,

-

On 11/25/2022 at 10:25 AM, SuperW2 said:

It had been working perfectly without error for almost a year, and I initially created the pool with the same TrueNAS instance; I'm not sure whether that's related or not.

Just keep in mind that when you import a pool, ZFS (or TrueNAS) may upgrade it to the latest feature set; to me, it looks quite likely that this is related to a specific attribute or feature that TrueNAS introduced to the pool. I mean, if the pool works with TrueNAS but not with vanilla ZFS (Unraid), there isn't much room for other kinds of errors.
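One way to check this theory is to list which feature flags are "active" on the pool and compare them with what the Unraid ZFS build supports (`zpool upgrade -v` lists the supported features). A minimal sketch; the function name `active_features` is hypothetical and `POOL` is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: list the feature flags that are "active" on a pool, reading
# "zpool get -H all POOL" output (tab-separated NAME PROPERTY VALUE SOURCE) on stdin.
active_features() {
  awk -F '\t' '$2 ~ /^feature@/ && $3 == "active" { sub(/^feature@/, "", $2); print $2 }'
}

# Usage (POOL is a placeholder):
#   zpool get -H all POOL | active_features
```

Features that are merely "enabled" have not been used yet; an "active" feature that the other system does not know about is the more likely culprit.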

-

7 hours ago, SuperW2 said:

Have been running ZFS on my Unraid now for most of this year with no issues. Completed update to 6.11.5 (had previously been applying all point releases fairly quickly after release)... After the last update I'm getting a PANIC at boot directly after "Starting Samba:" on the 4th line "/usr/sbin/winbindd -D".

If I start Unraid in safe mode it boots just fine... I renamed the unRAID6-ZFS .PLG file and it boots normally as expected... Has anyone else run into any issues with the ZFS plugin and newer 6.11 versions of UnRaid? Any ideas? I completely removed the ZFS plugin and reinstalled and get the error messages in the live SysLog...

I've imported my pool into a TrueNAS boot that I've used to add vdevs, and it appears healthy with no issues that I can identify. Scrub completed normally. I also downgraded to previous 6.11 versions (I was running 6.11.4 before with no issue) and no change, same PANIC error.

Any Ideas or anyone else with similar issues?

SW2

As you mentioned TrueNAS, maybe it is related to that, based on this:

https://github.com/openzfs/zfs/issues/12643

-

19 hours ago, anylettuce said:

My own doing: I moved from Unraid+ZFS to TrueNAS to TrueNAS SCALE and back to Unraid. Surprisingly, everything seems to be there, credit to ZFS. However, 2 of my disks show the format option and don't show a ZFS partition....

sdw and sdv are the drives with the format option.

This is due to the pool import process and how the Unassigned Devices plugin works; it has nothing to do with your pool, and the only way I'm aware of to solve it is by detaching and re-attaching the disk (detach only works for disks that are part of a mirror) using:

zpool detach mypool sdd
zpool attach mypool <existing-mirror-device> /dev/disk/by-id/<id-of-sdd>
<wait until zpool status shows the resilver has finished...>

An easier way could be:

zpool export mypool
zpool import -d /dev/disk/by-id mypool
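Both approaches need the `/dev/disk/by-id` name of a disk that currently shows up as `sdd`. A minimal sketch that resolves every by-id symlink and prints the ones pointing at the given device; the function name `byid_for` is hypothetical, and partition links (`-part1` and so on) are skipped because they resolve to `sdd1`, not `sdd`.

```shell
#!/usr/bin/env bash
# Sketch: print the by-id names that point at DEVICE (e.g. /dev/sdd).
#   byid_for DEVICE [DIR]   (DIR defaults to /dev/disk/by-id)
byid_for() {
  local dev="$1" dir="${2:-/dev/disk/by-id}" target link
  target=$(readlink -f "$dev")
  for link in "$dir"/*; do
    [ -L "$link" ] || continue
    # Resolve the symlink fully and compare with the resolved device path.
    if [ "$(readlink -f "$link")" = "$target" ]; then
      basename "$link"
    fi
  done
}
```

Usage: `byid_for /dev/sdd` typically prints an `ata-...` and/or `wwn-...` name, either of which can stand in for `<id-of-sdd>`.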

-

5 minutes ago, ncceylan said:

Hi, I created a raidz-1 pool test using your zfs plugin. Now I want to add new hard drives to this pool, but it doesn't work. The command I use is zpool attach test raidz1-0 sdd. But it was not successful, prompting me "can only attach to mirrors and top-level disks". But I see others are successful, how this is achieved.

Please be aware that there are some differences between "add" and "attach":

https://openzfs.github.io/openzfs-docs/man/8/zpool-attach.8.html

https://openzfs.github.io/openzfs-docs/man/8/zpool-add.8.html
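In short: `attach` adds a device next to an existing mirror member or single top-level disk, which is why it refuses a raidz vdev with "can only attach to mirrors and top-level disks"; on the OpenZFS releases of that era, growing a raidz pool means adding a whole new raidz vdev with `add`. A minimal sketch that only prints the matching command; the function name `grow_cmd` is hypothetical, and `-n` makes `zpool add` display the resulting layout without changing the pool.

```shell
#!/usr/bin/env bash
# Sketch: print (not run) the command that fits the layout of the vdev being grown.
#   grow_cmd POOL mirror|disk EXISTING NEW
#   grow_cmd POOL raidz1|raidz2|raidz3 NEW1 NEW2 ...
grow_cmd() {
  local pool="$1" layout="$2"
  shift 2
  case "$layout" in
    mirror|disk)
      # attach puts NEW alongside EXISTING (a single disk becomes a mirror).
      echo "zpool attach $pool $1 $2" ;;
    raidz1|raidz2|raidz3)
      # Add a new raidz vdev made of the new disks; -n is a dry run.
      echo "zpool add -n $pool $layout $*" ;;
    *)
      echo "unknown layout: $layout" >&2
      return 1 ;;
  esac
}
```

For the pool in the question, `grow_cmd test raidz1 sdd sde sdf` prints the dry-run `zpool add` for a second raidz1 vdev; removing `-n` would apply it, and a top-level vdev added this way is hard to undo.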

-

9 hours ago, daver898 said:

Hi,

I installed the update and could not see any difference. Does it only work for unraid 6.11.n

Hi daver, the new functionality is mostly associated with snapshots; for example, batch deletion and cloning are only available in the snapshot admin dialog.

-

Last update of the year (probably):

2022.11.12

- Fix - Error on dialogs and input controls

- Add - Clone capabilities for snapshots

- Add - Promote capabilities for datasets

General advice: be careful when using clones and promoting datasets; things can get messy quickly.

-

Hi guys, a new update is live with the following changelog:

2022.11.05

- Fix - Error on pools with snapshots but without datasets

- Fix - Dialogs not sizing properly

- Add - Snapshot Batch Deletion

Be aware that in the Snapshots Admin dialog, the delete and other operations are now reported through the status message; however, the dialog doesn't refresh the info, so you have to close it, hit the refresh button and open it again.

I will work on one more feature (cloning snapshots and promoting them) before the year ends; after that, I will focus on rewriting a portion of the backend so every functionality becomes a microservice. That should open the door to a good API (automation is coming...).
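For reference, the command-line analogue of batch deletion is a single `zfs destroy` with a comma-separated list of snapshot names after `@`; this is not necessarily what the plugin runs internally. A minimal sketch that builds such a command as a dry run (`-n` reports what would be destroyed, `-v` lists it); the function name `batch_destroy_cmd` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: build one "zfs destroy" call for several snapshots of one dataset.
#   batch_destroy_cmd DATASET SNAP1 [SNAP2 ...]
batch_destroy_cmd() {
  local dataset="$1" list
  shift
  [ "$#" -gt 0 ] || return 1          # nothing to destroy
  list=$(IFS=,; echo "$*")            # join snapshot names with commas
  echo "zfs destroy -nv $dataset@$list"
}
```

Once the dry-run output looks right, dropping `-n` performs the deletion.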

Best

-


The options already configured in your BIOS look okay. First, make sure you are on the latest version of Unraid (6.11.1 at the time of writing); then add the following options to the syslinux config:

modprobe.blacklist=acpi_cpufreq amd_pstate.shared_mem=1

After that, and just to be sure, add this to your go file:

modprobe amd_pstate

Reboot, and that's it. But, as I have stated, it's probably not worth all the trouble; the power reduction doesn't compensate for the latency problems.
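If the go file is edited by a script rather than by hand, it helps to make the change idempotent so repeated runs don't pile up duplicate lines. A minimal sketch, assuming the go file lives at `/boot/config/go`; the function name `ensure_go_line` is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: append "modprobe amd_pstate" to the go file only if it is missing.
ensure_go_line() {
  local go_file="${1:-/boot/config/go}" line="modprobe amd_pstate"
  if grep -qxF -- "$line" "$go_file" 2>/dev/null; then
    return 0   # already present, nothing to do
  fi
  # Make sure an existing last line is newline-terminated before appending.
  if [ -s "$go_file" ] && [ -n "$(tail -c1 "$go_file")" ]; then
    printf '\n' >> "$go_file"
  fi
  printf '%s\n' "$line" >> "$go_file"
}
```

To undo the change, delete that line from the go file and remove the two kernel parameters from the syslinux config before rebooting.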

-


I have the same processor and a similar BIOS config; the only way to reach C6 states is by using AMD P-State. However, in my testing, Unraid lags a lot with this config because the driver doesn't schedule priority tasks very well; one example is Telegraf+Prometheus+InfluxDB2, where some metrics get missed. So I ended up reverting to acpi_cpufreq. For reference, power use with AMD P-State was reduced by between 15% and 20%.
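To see whether the deeper idle states are actually being reached, the kernel's cpuidle entries in sysfs expose each state's name and an entry counter. A minimal sketch for CPU0; the function name `idle_usage` is hypothetical, and the directory can be overridden for testing. Note that the names are the ones the idle driver reports, so the deepest hardware state may not literally be labeled "C6".

```shell
#!/usr/bin/env bash
# Sketch: print each idle state's name and how many times CPU0 entered it.
idle_usage() {
  local base="${1:-/sys/devices/system/cpu/cpu0/cpuidle}" state
  for state in "$base"/state*; do
    [ -r "$state/name" ] || continue
    printf '%s %s\n' "$(cat "$state/name")" "$(cat "$state/usage")"
  done
}
```

Running it twice a few minutes apart and comparing the counters shows which states are in use under the current driver.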

-


5 hours ago, fwiler said:

The info is the same as what the "zpool list" and "zfs list" commands print. With that being said, for the pool, "size" reports the entire size including parity (that's for raidz1; for a mirror it's just the size of one disk). The used and free columns include parity as well, so no, from the pool you can't tell how much space is available for data.

For datasets, things are different: "used" should report the actual amount of data in the dataset, and free space reports the remaining usable space or the quota for the dataset. So yes, you should be able to use the entire 4.51 TB.
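As a rough worked example of the pool-level numbers: raidz1 over N equal disks keeps one disk's worth of parity, so the data capacity is roughly SIZE × (N − 1) / N. A minimal sketch of that arithmetic; the function name is hypothetical, and it deliberately ignores slop space, allocation padding and metadata, so `zfs list` on the pool's root dataset remains the number to trust.

```shell
#!/usr/bin/env bash
# Rough estimate: usable ~ SIZE * (N-1) / N for raidz1 over N equal disks.
# Integer arithmetic; SIZE can be in any unit (GB, bytes, ...).
raidz1_usable_estimate() {
  local size="$1" disks="$2"
  [ "$disks" -ge 2 ] || return 1
  echo $(( size * (disks - 1) / disks ))
}
```

For example, `raidz1_usable_estimate 3000 3` prints 2000: three 1000 GB disks show about 3000 GB in `zpool list`, but only about 2000 GB can hold data.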


-

1 hour ago, thany said:

Can I just ask the one stupid question?

I don't have any pools yet, so how do I actually create one?..

Unfortunately, that is not currently possible through this plugin; you must use the command line to create the pool.
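A minimal sketch of the command-line route, assuming disks are referenced by their `/dev/disk/by-id` names (which survive `sdX` renumbering). It only prints the command, and `-n` makes `zpool create` display the layout it would build without creating anything; the function name `create_pool_cmd` and the disk IDs are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: assemble a "zpool create" command from by-id disk names.
#   create_pool_cmd POOL LAYOUT ID1 ID2 ...   (LAYOUT e.g. mirror, raidz1)
create_pool_cmd() {
  local pool="$1" layout="$2" id cmd
  shift 2
  cmd="zpool create -n $pool $layout"
  for id in "$@"; do
    cmd+=" /dev/disk/by-id/$id"
  done
  echo "$cmd"
}
```

After reviewing the dry-run output, running the same command without `-n` creates the pool.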

-

6 hours ago, jamesy829 said:

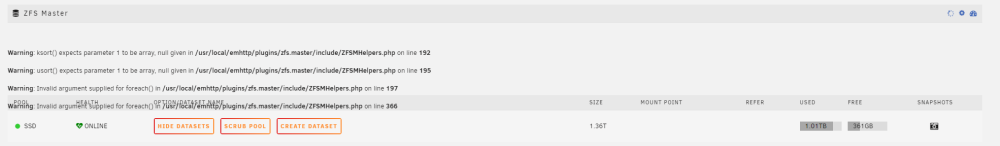

I'm using ZFS Master version 2022.08.21.54

Is it possible that one of your pools doesn't have any datasets? That's the only thing that comes to mind that could cause that error. If that's not the case, please send me a PM with the output (as text) of the following command:

zpool list -v
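To check the "no datasets" theory locally, a minimal sketch that reads the dataset list and prints every pool whose only dataset is its root; the function name `pools_without_datasets` is hypothetical, and `-t filesystem,volume` keeps snapshots out of the list.

```shell
#!/usr/bin/env bash
# Sketch: read "zfs list -H -o name -t filesystem,volume" output on stdin and
# print each pool that has no child datasets.
pools_without_datasets() {
  awk -F/ '
    NF == 1 { order[++n] = $1; next }   # a pool root dataset
    { has_child[$1] = 1 }               # any child marks its pool
    END { for (i = 1; i <= n; i++) if (!(order[i] in has_child)) print order[i] }
  '
}

# Usage:
#   zfs list -H -o name -t filesystem,volume | pools_without_datasets
```

Empty output means every pool has at least one child dataset, which would rule this cause out.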


-

7 hours ago, Jimbo7136 said:

I hit the "scrub pool" button in main. The scrub completed, but now my data seems to be AWOL. zpool list shows the same capacity and used space, but when I access the share either by samba or by winscp the directory is empty.

Oddly the info shown by "properties" under windows of the share is half the size of the pool (2.68T as opposed to 7.27TB shown by zpool list).

This seems to be more of a ZFS problem than a plugin problem; please post the output of your zpool commands in the ZFS Plugin thread.

-

9 hours ago, jamesy829 said:

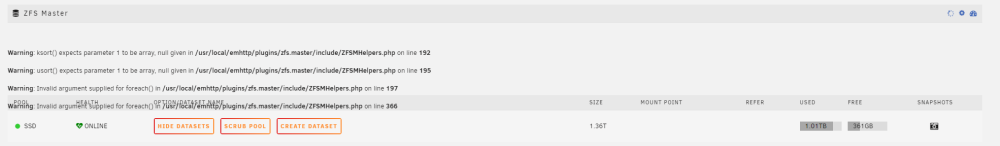

Hi @Iker, I was on v6.10.3 and seeing the issue below, so I reinstalled the plugin, but I'm still seeing the error. I tried upgrading to Unraid v6.11.0, but the error persisted.

Any recommendation?

Which plugin version are you using?

-

5 hours ago, TheThingIs said:

Other plugins install fine. The only thing that stands out to me is that there was a requirement to install zfs plugin which I already had installed so maybe it can't see that it's already installed.

Thanks for looking into it though, I'll post in the 6.11 thread as you suggested.

Indeed, there is a requirement that the ZFS Plugin be installed; however, it is only declared in the ZFS Master manifest and not enforced in any way (at least not in my code).

-

[Solved] Modprobe blacklisting and AMD P-STATE (in General Support)

Nicely done! It's weird, as everything you did has the same effect as the kernel parameters, but anyway, it's nice to have a new method.

As for the last question, I didn't have any instability issues; they were latency problems. I have multiple services running every X seconds, and in the morning the governor changes to powersave. With the new driver the cores go down to 500 MHz, which is actually nice, but it also causes some of the scheduled tasks to fail with timeouts, and generally speaking the cores didn't scale up very well. Obviously, you save a buck or two with the new driver, but if you need performance it doesn't work very well.
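When a governor switch like the morning powersave change is involved, it helps to confirm which governor each core is actually running. A minimal sketch that counts CPUs per governor from the cpufreq sysfs files; the function name `governor_summary` is hypothetical, and the base directory can be overridden for testing.

```shell
#!/usr/bin/env bash
# Sketch: count how many CPUs use each cpufreq governor.
governor_summary() {
  local base="${1:-/sys/devices/system/cpu}" f
  for f in "$base"/cpu[0-9]*/cpufreq/scaling_governor; do
    [ -r "$f" ] && cat "$f"
  done | sort | uniq -c | awk '{ print $2 ": " $1 " cpu(s)" }'
}
```

Running it before and after the scheduled switch shows whether every core actually changed governor.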