Posts: 23
Joined: -
Last visited:
Converted: -
Personal Text: unRAID Version: 6.9.0-beta30
Recent Profile Visitors: 854 profile views
Starli0n's Achievements: Noob (1/14)
Reputation: 3

-

NVME passthrough causes bluescreen and repair mode in Windows

Starli0n replied to Starli0n's topic in VM Engine (KVM)

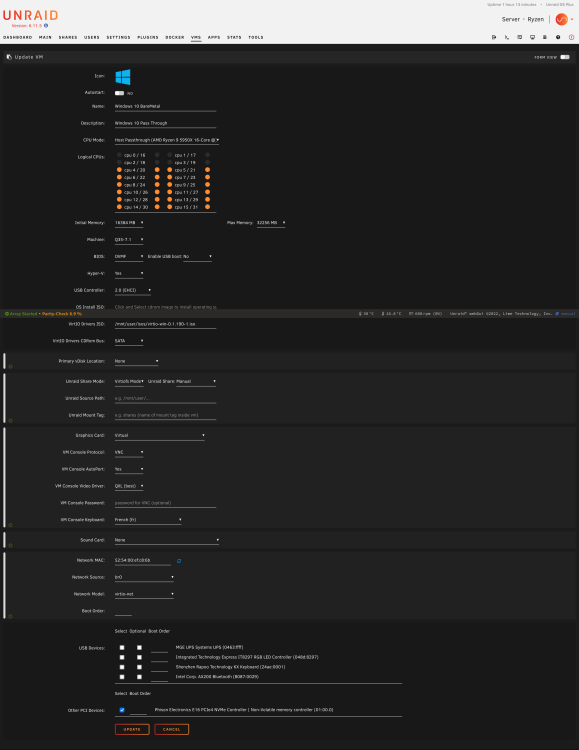

I am reviving my old post, hoping to find some help here by giving more details. I have been following this tutorial:

To sum up the issue: I can boot Windows 10 from my NVMe drive on bare metal (without Unraid), but when I create a VM template for it and boot it in Unraid, I systematically get a blue screen with error code 0xc000021a. When I then reboot directly outside Unraid, everything is fine.

I have tried various configurations, such as not passing through the graphics card so that I can see the VNC console. I have switched the machine type between i440fx-7.1 and Q35-7.1 (I learnt that Q35 is better for GPU passthrough) and toggled the Hyper-V setting (Yes / No), but I am not able to figure out the issue...

I do not know exactly what to share so that you can help me properly, so here are some pieces of my configuration:

- The VM Manager:
  PCIe ACS override: Both
  VFIO allow unsafe interrupts: Yes
- The System Device: the NVMe I am trying to pass through is in IOMMU group 14
- The vfio-pci log: I noticed two errors, but I do not know whether they matter or how to correct them
- The boot log:
- The VNC log:

    2023-05-17 23:55:11.098+0000: starting up libvirt version: 8.7.0, qemu version: 7.1.0, kernel: 5.19.17-Unraid, hostname: Ryzen
    LC_ALL=C \
    PATH=/bin:/sbin:/usr/bin:/usr/sbin \
    HOME='/var/lib/libvirt/qemu/domain-1-Windows 10 BareMetal' \
    XDG_DATA_HOME='/var/lib/libvirt/qemu/domain-1-Windows 10 BareMetal/.local/share' \
    XDG_CACHE_HOME='/var/lib/libvirt/qemu/domain-1-Windows 10 BareMetal/.cache' \
    XDG_CONFIG_HOME='/var/lib/libvirt/qemu/domain-1-Windows 10 BareMetal/.config' \
    /usr/local/sbin/qemu \
    -name 'guest=Windows 10 BareMetal,debug-threads=on' \
    -S \
    -object '{"qom-type":"secret","id":"masterKey0","format":"raw","file":"/var/lib/libvirt/qemu/domain-1-Windows 10 BareMetal/master-key.aes"}' \
    -blockdev '{"driver":"file","filename":"/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd","node-name":"libvirt-pflash0-storage","auto-read-only":true,"discard":"unmap"}' \
    -blockdev '{"node-name":"libvirt-pflash0-format","read-only":true,"driver":"raw","file":"libvirt-pflash0-storage"}' \
    -blockdev '{"driver":"file","filename":"/etc/libvirt/qemu/nvram/46630960-32f8-d2d3-0e31-dd5839cf0c2e_VARS-pure-efi.fd","node-name":"libvirt-pflash1-storage","auto-read-only":true,"discard":"unmap"}' \
    -blockdev '{"node-name":"libvirt-pflash1-format","read-only":false,"driver":"raw","file":"libvirt-pflash1-storage"}' \
    -machine pc-q35-7.1,usb=off,dump-guest-core=off,mem-merge=off,memory-backend=pc.ram,pflash0=libvirt-pflash0-format,pflash1=libvirt-pflash1-format \
    -accel kvm \
    -cpu host,migratable=on,topoext=on,hv-time=on,hv-relaxed=on,hv-vapic=on,hv-spinlocks=0x1fff,hv-vendor-id=none,host-cache-info=on,l3-cache=off \
    -m 32256 \
    -object '{"qom-type":"memory-backend-ram","id":"pc.ram","size":33822867456}' \
    -overcommit mem-lock=off \
    -smp 24,sockets=1,dies=1,cores=12,threads=2 \
    -uuid 032e02b4-0499-053c-f806-d90700080009 \
    -display none \
    -no-user-config \
    -nodefaults \
    -chardev socket,id=charmonitor,fd=35,server=on,wait=off \
    -mon chardev=charmonitor,id=monitor,mode=control \
    -rtc base=localtime \
    -no-hpet \
    -no-shutdown \
    -boot strict=on \
    -device '{"driver":"pcie-root-port","port":8,"chassis":1,"id":"pci.1","bus":"pcie.0","multifunction":true,"addr":"0x1"}' \
    -device '{"driver":"pcie-root-port","port":9,"chassis":2,"id":"pci.2","bus":"pcie.0","addr":"0x1.0x1"}' \
    -device '{"driver":"pcie-root-port","port":10,"chassis":3,"id":"pci.3","bus":"pcie.0","addr":"0x1.0x2"}' \
    -device '{"driver":"pcie-root-port","port":11,"chassis":4,"id":"pci.4","bus":"pcie.0","addr":"0x1.0x3"}' \
    -device '{"driver":"pcie-root-port","port":12,"chassis":5,"id":"pci.5","bus":"pcie.0","addr":"0x1.0x4"}' \
    -device '{"driver":"pcie-root-port","port":13,"chassis":6,"id":"pci.6","bus":"pcie.0","addr":"0x1.0x5"}' \
    -device '{"driver":"ich9-usb-ehci1","id":"usb","bus":"pcie.0","addr":"0x7.0x7"}' \
    -device '{"driver":"ich9-usb-uhci1","masterbus":"usb.0","firstport":0,"bus":"pcie.0","multifunction":true,"addr":"0x7"}' \
    -device '{"driver":"ich9-usb-uhci2","masterbus":"usb.0","firstport":2,"bus":"pcie.0","addr":"0x7.0x1"}' \
    -device '{"driver":"ich9-usb-uhci3","masterbus":"usb.0","firstport":4,"bus":"pcie.0","addr":"0x7.0x2"}' \
    -device '{"driver":"virtio-serial-pci","id":"virtio-serial0","bus":"pci.2","addr":"0x0"}' \
    -blockdev '{"driver":"file","filename":"/mnt/user/isos/virtio-win-0.1.190-1.iso","node-name":"libvirt-1-storage","auto-read-only":true,"discard":"unmap"}' \
    -blockdev '{"node-name":"libvirt-1-format","read-only":true,"driver":"raw","file":"libvirt-1-storage"}' \
    -device '{"driver":"ide-cd","bus":"ide.1","drive":"libvirt-1-format","id":"sata0-0-1"}' \
    -netdev tap,fd=36,id=hostnet0 \
    -device '{"driver":"virtio-net","netdev":"hostnet0","id":"net0","mac":"52:54:00:f5:39:78","bus":"pci.1","addr":"0x0"}' \
    -chardev pty,id=charserial0 \
    -device '{"driver":"isa-serial","chardev":"charserial0","id":"serial0","index":0}' \
    -chardev socket,id=charchannel0,fd=34,server=on,wait=off \
    -device '{"driver":"virtserialport","bus":"virtio-serial0.0","nr":1,"chardev":"charchannel0","id":"channel0","name":"org.qemu.guest_agent.0"}' \
    -audiodev '{"id":"audio1","driver":"none"}' \
    -device '{"driver":"vfio-pci","host":"0000:0c:00.0","id":"hostdev0","bus":"pci.3","addr":"0x0"}' \
    -device '{"driver":"vfio-pci","host":"0000:0c:00.1","id":"hostdev1","bus":"pci.4","addr":"0x0"}' \
    -device '{"driver":"vfio-pci","host":"0000:01:00.0","id":"hostdev2","bus":"pci.5","addr":"0x0"}' \
    -sandbox on,obsolete=deny,elevateprivileges=deny,spawn=deny,resourcecontrol=deny \
    -msg timestamp=on
    char device redirected to /dev/pts/0 (label charserial0)
    2023-05-17T23:55:14.579083Z qemu-system-x86_64: vfio: Cannot reset device 0000:0c:00.1, depends on group 36 which is not owned.
    2023-05-17T23:55:26.952967Z qemu-system-x86_64: terminating on signal 15 from pid 7750 (/usr/sbin/libvirtd)
    2023-05-17 23:55:27.576+0000: shutting down, reason=shutdown

- The VM template (XML view):

    <?xml version='1.0' encoding='UTF-8'?>
    <domain type='kvm'>
      <name>Windows 10 BareMetal</name>
      <uuid>bdb3ec3a-eb3f-2619-7603-f2836cadd078</uuid>
      <description>Windows 10 Pass Through</description>
      <metadata>
        <vmtemplate xmlns="unraid" name="Windows 10" icon="windows.png" os="windows10"/>
      </metadata>
      <memory unit='KiB'>33030144</memory>
      <currentMemory unit='KiB'>16777216</currentMemory>
      <memoryBacking>
        <nosharepages/>
      </memoryBacking>
      <vcpu placement='static'>24</vcpu>
      <cputune>
        <vcpupin vcpu='0' cpuset='4'/>
        <vcpupin vcpu='1' cpuset='20'/>
        <vcpupin vcpu='2' cpuset='5'/>
        <vcpupin vcpu='3' cpuset='21'/>
        <vcpupin vcpu='4' cpuset='6'/>
        <vcpupin vcpu='5' cpuset='22'/>
        <vcpupin vcpu='6' cpuset='7'/>
        <vcpupin vcpu='7' cpuset='23'/>
        <vcpupin vcpu='8' cpuset='8'/>
        <vcpupin vcpu='9' cpuset='24'/>
        <vcpupin vcpu='10' cpuset='9'/>
        <vcpupin vcpu='11' cpuset='25'/>
        <vcpupin vcpu='12' cpuset='10'/>
        <vcpupin vcpu='13' cpuset='26'/>
        <vcpupin vcpu='14' cpuset='11'/>
        <vcpupin vcpu='15' cpuset='27'/>
        <vcpupin vcpu='16' cpuset='12'/>
        <vcpupin vcpu='17' cpuset='28'/>
        <vcpupin vcpu='18' cpuset='13'/>
        <vcpupin vcpu='19' cpuset='29'/>
        <vcpupin vcpu='20' cpuset='14'/>
        <vcpupin vcpu='21' cpuset='30'/>
        <vcpupin vcpu='22' cpuset='15'/>
        <vcpupin vcpu='23' cpuset='31'/>
      </cputune>
      <os>
        <type arch='x86_64' machine='pc-q35-7.1'>hvm</type>
        <loader readonly='yes' type='pflash'>/usr/share/qemu/ovmf-x64/OVMF_CODE-pure-efi.fd</loader>
        <nvram>/etc/libvirt/qemu/nvram/bdb3ec3a-eb3f-2619-7603-f2836cadd078_VARS-pure-efi.fd</nvram>
        <boot dev='hd'/>
      </os>
      <features>
        <acpi/>
        <apic/>
        <hyperv mode='custom'>
          <relaxed state='on'/>
          <vapic state='on'/>
          <spinlocks state='on' retries='8191'/>
          <vendor_id state='on' value='none'/>
        </hyperv>
      </features>
      <cpu mode='host-passthrough' check='none' migratable='on'>
        <topology sockets='1' dies='1' cores='12' threads='2'/>
        <cache mode='passthrough'/>
        <feature policy='require' name='topoext'/>
      </cpu>
      <clock offset='localtime'>
        <timer name='hypervclock' present='yes'/>
        <timer name='hpet' present='no'/>
      </clock>
      <on_poweroff>destroy</on_poweroff>
      <on_reboot>restart</on_reboot>
      <on_crash>restart</on_crash>
      <devices>
        <emulator>/usr/local/sbin/qemu</emulator>
        <disk type='file' device='cdrom'>
          <driver name='qemu' type='raw'/>
          <source file='/mnt/user/isos/virtio-win-0.1.190-1.iso'/>
          <target dev='hdb' bus='sata'/>
          <readonly/>
          <address type='drive' controller='0' bus='0' target='0' unit='1'/>
        </disk>
        <controller type='usb' index='0' model='ich9-ehci1'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x7'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci1'>
          <master startport='0'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x0' multifunction='on'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci2'>
          <master startport='2'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x1'/>
        </controller>
        <controller type='usb' index='0' model='ich9-uhci3'>
          <master startport='4'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x07' function='0x2'/>
        </controller>
        <controller type='sata' index='0'>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x1f' function='0x2'/>
        </controller>
        <controller type='pci' index='0' model='pcie-root'/>
        <controller type='pci' index='1' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='1' port='0x10'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x0' multifunction='on'/>
        </controller>
        <controller type='pci' index='2' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='2' port='0x11'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x1'/>
        </controller>
        <controller type='pci' index='3' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='3' port='0x12'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x2'/>
        </controller>
        <controller type='pci' index='4' model='pcie-root-port'>
          <model name='pcie-root-port'/>
          <target chassis='4' port='0x13'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x02' function='0x3'/>
        </controller>
        <controller type='virtio-serial' index='0'>
          <address type='pci' domain='0x0000' bus='0x02' slot='0x00' function='0x0'/>
        </controller>
        <interface type='bridge'>
          <mac address='52:54:00:ef:c8:6b'/>
          <source bridge='br0'/>
          <model type='virtio-net'/>
          <address type='pci' domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
        </interface>
        <serial type='pty'>
          <target type='isa-serial' port='0'>
            <model name='isa-serial'/>
          </target>
        </serial>
        <console type='pty'>
          <target type='serial' port='0'/>
        </console>
        <channel type='unix'>
          <target type='virtio' name='org.qemu.guest_agent.0'/>
          <address type='virtio-serial' controller='0' bus='0' port='1'/>
        </channel>
        <input type='tablet' bus='usb'>
          <address type='usb' bus='0' port='1'/>
        </input>
        <input type='mouse' bus='ps2'/>
        <input type='keyboard' bus='ps2'/>
        <graphics type='vnc' port='-1' autoport='yes' websocket='-1' listen='0.0.0.0' keymap='fr'>
          <listen type='address' address='0.0.0.0'/>
        </graphics>
        <audio id='1' type='none'/>
        <video>
          <model type='qxl' ram='65536' vram='65536' vgamem='16384' heads='1' primary='yes'/>
          <address type='pci' domain='0x0000' bus='0x00' slot='0x01' function='0x0'/>
        </video>
        <hostdev mode='subsystem' type='pci' managed='yes'>
          <driver name='vfio'/>
          <source>
            <address domain='0x0000' bus='0x01' slot='0x00' function='0x0'/>
          </source>
          <address type='pci' domain='0x0000' bus='0x03' slot='0x00' function='0x0'/>
        </hostdev>
        <memballoon model='none'/>
      </devices>
    </domain>

- The Unraid boot configuration:

That's it, I hope I have been exhaustive enough 🙂

-
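The vfio line in the log says that 0000:0c:00.1 "depends on group 36 which is not owned", while the NVMe is reported in IOMMU group 14. A quick way to see which devices share each group is to walk /sys/kernel/iommu_groups. Below is a minimal bash sketch of that; the function names are hypothetical (not an Unraid tool), and the optional path arguments only exist so the functions can be tried on a fake directory tree.

```bash
#!/usr/bin/env bash
# Sketch: inspect IOMMU groups through sysfs.
# The optional path arguments default to the standard sysfs locations;
# they only exist so the functions can be tried on a fake directory tree.

# Print every IOMMU group followed by the PCI addresses it contains.
list_iommu_groups() {
  local root="${1:-/sys/kernel/iommu_groups}"
  local restore group dev
  restore="$(shopt -p nullglob)" || true  # remember the caller's setting
  shopt -s nullglob                       # empty globs expand to nothing
  for group in "$root"/*; do
    echo "IOMMU group ${group##*/}:"
    for dev in "$group"/devices/*; do
      echo "  ${dev##*/}"
    done
  done
  eval "$restore"
}

# Print the IOMMU group number of one PCI device, e.g. 0000:0c:00.1.
# In sysfs, <device>/iommu_group is a symlink whose last component is
# the group number.
iommu_group_of() {
  local addr="$1" pci_root="${2:-/sys/bus/pci/devices}" link
  link="$(readlink "$pci_root/$addr/iommu_group")" || return 1
  echo "${link##*/}"
}
```

On the server, `list_iommu_groups` prints all groups and `iommu_group_of 0000:0c:00.1` prints the group of that one function; `lspci -nns <address>` gives a readable name for any address listed. An empty listing generally means the IOMMU is not active at all.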

Hi, I had a working setup with both NVMe and GPU passthrough. But since I recently upgraded my CPU, I had to upgrade my BIOS as well, which caused a blue screen and triggered repair mode in Windows. To isolate the issue, I temporarily disabled GPU passthrough, and I still have the same issue. The thing is, when I reboot Windows in Safe Mode, the NVMe passthrough works, so that is encouraging. As I reset my BIOS settings during the upgrade, that might be the cause, but I do not know which setting I missed. Maybe something related to UEFI... I attached my diagnostics zip. Does anyone have a lead on this? ryzen-diagnostics-20210729-1035.zip

-

Add TLS kernel module (ktls) on Unraid 6.9.1

Starli0n replied to Starli0n's topic in Feature Requests

Thank you, I did not know about the 'm' option, so I used this config:

    export CONFIG_TLS=y
    export CONFIG_TLS_DEVICE=m

But the file does not seem to be generated, nor do any "*.ko" files. I attached the log in case someone can help me. build-tls.log

-
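For reference, a kernel option set to y is compiled into the kernel image, while m builds it as a separate loadable .ko file. In the mainline net/tls/Kconfig, CONFIG_TLS is a tristate, but CONFIG_TLS_DEVICE is, as far as I can tell, a bool that depends on it; so a separate tls.ko would only be expected with CONFIG_TLS=m, and CONFIG_TLS_DEVICE=m would not produce a module. The hypothetical helper below just reports what an option ended up as in a generated .config (where that file sits in the build output is an assumption to adapt).

```bash
#!/usr/bin/env bash
# Sketch: report how a kernel option ended up in a generated .config file.
#   y -> compiled into the kernel image (no separate .ko file)
#   m -> compiled as a loadable module (a separate .ko file)

kconfig_state() {
  local config="$1" option="$2" line
  # .config lines look like "CONFIG_TLS=m" or "# CONFIG_TLS is not set".
  # If an option is assigned more than once, keep the last assignment.
  line="$(grep -E "^${option}=" "$config" | tail -n 1)"
  case "${line#*=}" in
    "") echo "not set" ;;
    y)  echo "built-in" ;;
    m)  echo "module" ;;
    *)  echo "value: ${line#*=}" ;;
  esac
}
```

For example, `kconfig_state /path/to/linux/.config CONFIG_TLS` prints built-in, module or not set.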

Add TLS kernel module (ktls) on Unraid 6.9.1

Starli0n replied to Starli0n's topic in Feature Requests

@ich777, I just realized that you are the author of the module. I built the kernel in Custom mode and added the two variables at the beginning of the script:

    export CONFIG_TLS=y
    export CONFIG_TLS_DEVICE=y

It seems to work fine, but I do not see the TLS module as a separate file in the output directory. Does that mean something went wrong, or are the modules included directly in the main files? My aim is not to replace the whole kernel but only to install/add the compiled ktls module. Do you have any advice on this?

-
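If a tls.ko is built separately and loaded into the stock kernel, it has to target that exact kernel. One quick precondition check is to compare the module's vermagic string (read with `modinfo -F vermagic`) against `uname -r`. This is a sketch with hypothetical function names; a matching release string is necessary but not sufficient, since the kernel configuration and symbol versions must also be compatible.

```bash
#!/usr/bin/env bash
# Sketch: check that a separately built module targets the running kernel.
# A matching release string is necessary, not sufficient: the kernel
# configuration and symbol versions must be compatible as well.

# Keep only the release part of a vermagic string,
# e.g. "5.10.21-Unraid SMP mod_unload" -> "5.10.21-Unraid".
vermagic_release() {
  echo "${1%% *}"
}

# Return 0 if the module's vermagic release equals the given release
# (default: the running kernel), 1 if it differs, 2 if modinfo fails.
vermagic_matches() {
  local ko="$1" release="${2:-$(uname -r)}" magic
  magic="$(modinfo -F vermagic "$ko")" || return 2
  if [[ "$(vermagic_release "$magic")" == "$release" ]]; then
    return 0
  fi
  echo "module built for '$magic', running kernel is '$release'" >&2
  return 1
}
```

If `vermagic_matches ./tls.ko` succeeds, `insmod ./tls.ko` is one way to try loading it without copying it into /lib/modules. I have not verified this on Unraid, and it assumes everything the module itself depends on is already present in the running kernel.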

Add TLS kernel module (ktls) on Unraid 6.9.1

Starli0n replied to Starli0n's topic in Feature Requests

Thank you @ich777. I was hoping to avoid rebuilding the kernel since I only want one module, but I think I will not be able to do otherwise. Are you sure that activating this feature could result in a kernel panic? It seems that ktls is enabled out of the box on other distros.

-

Add TLS kernel module (ktls) on Unraid 6.9.1

Starli0n replied to Starli0n's topic in Feature Requests

Thank you @doron, I did not know this command -

Hi, I do not know how to activate the TLS kernel module (ktls) on Unraid 6.9.1. I already found some posts, but they seem to be outdated. I tried:

    $ modprobe tls
    modprobe: FATAL: Module tls not found in directory /lib/modules/5.10.21-Unraid

So it seems that Unraid 6.9.1 is based on Linux kernel 5.10.21. The TLS feature seems to have been available since Linux kernel 4.13 and should be enabled with these flags (CONFIG_TLS=y and CONFIG_TLS_DEVICE=y). I learnt that Unraid is based on https://packages.slackware.com, but I do not know which version, nor whether it is 64-bit or not. Does anyone have an idea how to achieve that? Thanks
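The modprobe error only says that no tls module file exists for 5.10.21-Unraid. A small sketch (hypothetical helper; the defaults are the standard Linux locations) can tell the cases apart: loaded (listed in /proc/modules), built in (listed in modules.builtin), available as a module file (tls.ko, possibly compressed), or missing.

```bash
#!/usr/bin/env bash
# Sketch: classify the "tls" module for a kernel release as
# loaded, built-in, available (module file present) or missing.
# The path arguments default to the standard locations and only exist
# so the function can be tried against a fake tree.

tls_status() {
  local release="${1:-$(uname -r)}"
  local modroot="${2:-/lib/modules}"
  local proc_modules="${3:-/proc/modules}"
  local dir="$modroot/$release"

  if grep -q '^tls ' "$proc_modules" 2>/dev/null; then
    # Loadable modules that are currently loaded appear in /proc/modules.
    echo "loaded"
  elif grep -q '/tls\.ko$' "$dir/modules.builtin" 2>/dev/null; then
    # Built-in modules are listed in modules.builtin (kernel/net/tls/tls.ko).
    echo "built-in"
  elif [[ -n "$(find "$dir" -name 'tls.ko*' 2>/dev/null | head -n 1)" ]]; then
    # A module file exists, possibly compressed (.ko.xz, .ko.gz, ...).
    echo "available"
  else
    echo "missing"
  fi
}
```

Running `tls_status` with no arguments checks the running kernel.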

-

Permissions are not taken into account in /mnt/user/share folders

Starli0n replied to Starli0n's topic in General Support

Wow, thank you for sharing; that is an interesting behavior, to say the least. So the '/mnt/cache' folder respects the permissions whereas '/mnt/user' doesn't... I could reproduce it on my side. Is there a chance to fix this?

-
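One possible explanation, not confirmed in this thread: /mnt/user is served by Unraid's FUSE user-share filesystem, while /mnt/cache is a regular filesystem. For FUSE, the kernel only enforces the usual mode bits when the mount uses the default_permissions option; otherwise the access decision is left to the FUSE daemon. The hypothetical helper below just reads /proc/mounts to compare how the two paths are mounted.

```bash
#!/usr/bin/env bash
# Sketch: compare how two paths are mounted, e.g. /mnt/user and /mnt/cache.
# The mounts file defaults to /proc/mounts; it is a parameter only so the
# function can be tried on sample data.

# Print "<fstype> <options>" for an exact mount point (last entry wins,
# as a later mount on the same point hides earlier ones).
mount_info() {
  local target="$1" mounts="${2:-/proc/mounts}"
  # /proc/mounts fields: device mountpoint fstype options dump pass
  awk -v t="$target" '$2 == t { info = $3 " " $4 }
                      END { if (info != "") print info }' "$mounts"
}

# Flag FUSE mounts that do not use the default_permissions option.
check_permission_mode() {
  local target="$1" mounts="${2:-/proc/mounts}" info fstype opts
  info="$(mount_info "$target" "$mounts")"
  if [[ -z "$info" ]]; then
    echo "$target: not a mount point"
    return 1
  fi
  fstype="${info%% *}"
  opts="${info#* }"
  if [[ "$fstype" == fuse* && ",$opts," != *",default_permissions,"* ]]; then
    echo "$target: $fstype without default_permissions"
  else
    echo "$target: $fstype"
  fi
}
```

For example, run `check_permission_mode /mnt/user` and `check_permission_mode /mnt/cache`; pass the mount point itself rather than a subfolder such as /mnt/user/system.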

Permissions are not taken into account in /mnt/user/share folders

Starli0n replied to Starli0n's topic in General Support

Thank you for your answer. I understand that Unraid is not made for that, but I do not understand why it fails specifically on /mnt/user/share. Is it linked to SMB? I do not want to create a Linux VM, as I want to keep as many resources available as possible. I just want a dev account to manage my Docker containers, and to be able to share this account while keeping the other shares private.

-

Hi, I do not know whether it is a bug or a feature, but it seems that permissions are not taken into account in /mnt/user/share folders. I created a user named 'coder'. In the home folder, it behaves as expected:

    # in /home/coder
    $ ls -pla foo
    ---------- 1 root root 4 Mar 23 03:13 foo
    $ cat foo
    cat: foo: Permission denied

But in a shared folder, with the same file:

    # in /mnt/user/system
    $ ls -pla foo
    ---------- 1 root root 4 Mar 23 03:12 foo
    $ cat foo
    bar

This is strange behavior. How can I apply correct permissions in shared folders?

-

Starli0n changed their profile photo

-

So, to get a clean sshd_config file, simply move the file to a backup:

    mv /boot/config/ssh/sshd_config /boot/config/ssh/sshd_config.bak

At this point, /boot/config/ssh/sshd_config should no longer exist. Then restart sshd:

    /etc/rc.d/rc.sshd restart

Finally, you should have the following files:

    /etc/ssh/sshd_config
    /etc/ssh/sshd_config.bak

with /etc/ssh/sshd_config set to its default configuration. (This assumes it works the same way in the stable version, as I am using the beta.)
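The reset steps above can be wrapped in a small function. This is a hypothetical sketch; the only addition to the described procedure is that it refuses to overwrite an existing sshd_config.bak.

```bash
#!/usr/bin/env bash
# Sketch of the reset steps: move the persistent sshd_config out of the
# way (never overwriting an older backup). Restarting sshd is left to the
# caller, e.g.:
#   backup_persistent_sshd_config && /etc/rc.d/rc.sshd restart

backup_persistent_sshd_config() {
  local dir="${1:-/boot/config/ssh}"
  local cfg="$dir/sshd_config" bak="$dir/sshd_config.bak"
  if [[ ! -e "$cfg" ]]; then
    echo "nothing to do: $cfg does not exist"
    return 0
  fi
  if [[ -e "$bak" ]]; then
    echo "refusing to overwrite existing $bak" >&2
    return 1
  fi
  mv "$cfg" "$bak"
  echo "moved $cfg to $bak"
}
```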

-

Thanks @ken-ji, your method works like a charm 👌 (once I understood that /boot/config/ssh/root.pubkeys was a file and not a directory 🙄).

That being said, I am using Unraid Version 6.9.0-beta30, and as far as I can tell there is this symlink (I do not know whether it is present in the stable version):

    /root/.ssh/ -> /boot/config/ssh/root/

So you can keep /root/.ssh/authorized_keys, the default setting in the /etc/ssh/sshd_config file. You just have to put your public keys in this file:

    /boot/config/ssh/root/authorized_keys

so that they show up in the usual location:

    /root/.ssh/authorized_keys

Therefore, there is no need to copy /etc/ssh/sshd_config to /boot/config/ssh/sshd_config to modify it. Then restart sshd:

    /etc/rc.d/rc.sshd restart

By the way, restarting copies the files from /boot/config/ssh/ to /etc/ssh/, BUT not the directories inside that folder. Also, it keeps files that were already present in /etc/ssh/ even if they were deleted from /boot/config/ssh/. To get rid of those, a reboot is required, since the RAM is flushed.
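As a sketch of the key setup described above (hypothetical function name; the default path is the one given in this post), appending keys to the persistent file without creating duplicates could look like this:

```bash
#!/usr/bin/env bash
# Sketch: append public keys to the persistent authorized_keys file on the
# flash drive, skipping keys that are already there, so that they appear in
# /root/.ssh/authorized_keys through the /root/.ssh symlink.

add_authorized_keys() {
  local pubkey_file="$1"
  local target="${2:-/boot/config/ssh/root/authorized_keys}"
  local key
  mkdir -p "$(dirname "$target")"
  touch "$target"
  # Make sure an existing last line ends with a newline before appending.
  if [[ -s "$target" && -n "$(tail -c 1 "$target")" ]]; then
    echo >> "$target"
  fi
  while IFS= read -r key || [[ -n "$key" ]]; do
    [[ -z "$key" ]] && continue   # skip blank lines
    # -x: whole-line match, -F: fixed string (keys contain '+' and '/').
    grep -qxF -- "$key" "$target" || printf '%s\n' "$key" >> "$target"
  done < "$pubkey_file"
}
```

For example, after copying your public key to the server: `add_authorized_keys ./id_ed25519.pub`. Running it twice with the same file leaves the target unchanged.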