Posts posted by gyto6

-

2 hours ago, glockmane said:

I got a bunch new SSDs and HDDs and need to restructure my whole Homeserver.. Would you:

A) Do it now with latest stable Version

B) Wait for RC with ZFS

C) Use latest Beta

- Is there any way to migrate later to RC or Release Version (simple Update or with little manual effort e.g. exporting and importing config..)

I must say that I don't rely on the Server cause I don't even use it as it is but I want to start and the project is on hold since I heard the frst time of the ZFS Support in 6.12..

A) You can already set up your server with the ZFS plugin, if using ZFS is what you intend to do.

-

3 hours ago, bubbl3 said:

I believe the proper disclaimer to people buying unraid should be: do not make business decisions based on roadmaps.

Or :

-

On 2/28/2023 at 7:41 PM, Iker said:

I`m still working on the lazy load thing ( @HumanTechDesign ), a page for editing dataset properties, and make everything compatible with Unraid 6.12. The microservices idea is a big no, so probably I'm going to use websockets (nchan functionality) to improve load times, the Snapshots are going to be loaded on the background.

Looking forward to it with a certain enthusiasm. Thanks for your work. 💖

-

25 minutes ago, JonathanM said:

Unfortunately I think the energy and attention are on the new authentication process, so the silence here is expected.

I was thinking the same too (no joke). 😁

-

4 hours ago, custom90gt said:

All is quiet on the front, perhaps it's time for an RC? lol

I was thinking the same. 😁

-

1 hour ago, glockmane said:

Is it possible to upgrade 6.11.5 to beta and in the future upgrade to RC?

Betas are delivered outside the conventional release channel, so don't expect a beta release to guarantee any upgrade path to the RC.

This is an internal Limetech testing process.

The final beta, which is then packaged as the RC, is meant and coded to upgrade smoothly from the previous official releases.

That's the purpose of a beta.

-

1 minute ago, Xxharry said:

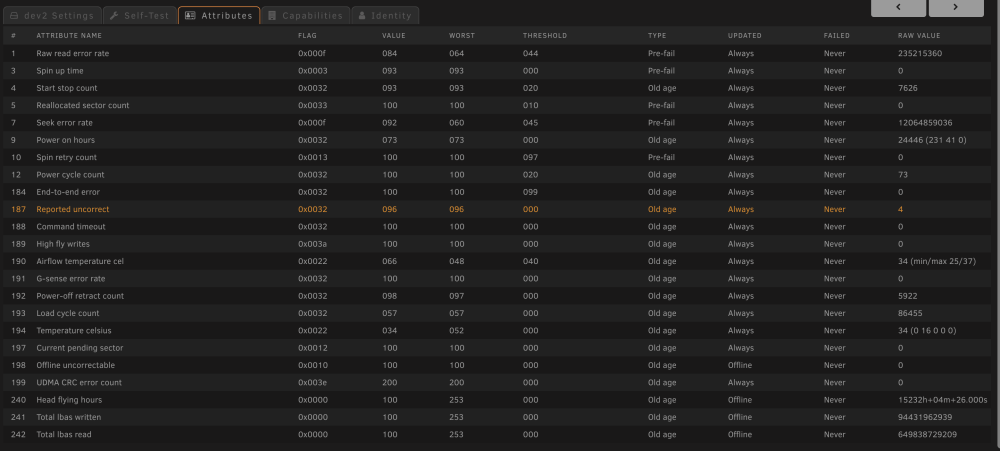

Hey Guys, how do I fix this? happened after the server went down due to sudden power loss.

    root@UnRAID:~# zpool status
      pool: citadel
     state: ONLINE
    status: One or more devices has experienced an unrecoverable error. An
            attempt was made to correct the error. Applications are unaffected.
    action: Determine if the device needs to be replaced, and clear the errors
            using 'zpool clear' or replace the device with 'zpool replace'.
       see: https://openzfs.github.io/openzfs-docs/msg/ZFS-8000-9P
      scan: scrub repaired 0B in 05:25:00 with 0 errors on Fri Feb 17 21:23:57 2023
    config:

            NAME                                          STATE     READ WRITE CKSUM
            citadel                                       ONLINE       0     0     0
              raidz1-0                                    ONLINE       0     0     0
                ata-ST4000VN008-2DR166_ZGY98G8E           ONLINE       0     0     0
                ata-ST4000VN008-2DR166_ZM40MTRB           ONLINE       0     0     0
                ata-ST4000VN008-2DR166_ZM40VDNZ           ONLINE       0     0    77
                ata-WDC_WD40EFRX-68N32N0_WD-WCC7K1XK022N  ONLINE       0     0     0

Check the SMART data for the affected disk (ata-ST4000VN008-2DR166_ZM40VDNZ, which shows 77 checksum errors). If everything looks fine, run "zpool clear" and then another scrub on the "citadel" pool.

If no more errors occur, you can keep using your pool safely.
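For anyone who wants to spot the affected disk without reading the table by eye, here is a minimal Python sketch. It assumes the config rows follow the `NAME STATE READ WRITE CKSUM` layout of the output quoted above and that the counters are plain integers (larger counts that `zpool status` abbreviates would need extra handling). The function name `devices_with_errors` is made up for this example.

```python
import re

# Config rows copied from the `zpool status` output quoted in this post.
SAMPLE = """\
        NAME                                          STATE     READ WRITE CKSUM
        citadel                                       ONLINE       0     0     0
          raidz1-0                                    ONLINE       0     0     0
            ata-ST4000VN008-2DR166_ZGY98G8E           ONLINE       0     0     0
            ata-ST4000VN008-2DR166_ZM40MTRB           ONLINE       0     0     0
            ata-ST4000VN008-2DR166_ZM40VDNZ           ONLINE       0     0    77
            ata-WDC_WD40EFRX-68N32N0_WD-WCC7K1XK022N  ONLINE       0     0     0
"""

# One config row: name, state, then the READ / WRITE / CKSUM counters.
# Assumption: counters are plain integers, as in the sample above.
ROW = re.compile(r"^\s*(\S+)\s+([A-Z]+)\s+(\d+)\s+(\d+)\s+(\d+)\s*$")


def devices_with_errors(config_text):
    """Return {name: (read, write, cksum)} for rows with a non-zero counter."""
    flagged = {}
    for line in config_text.splitlines():
        match = ROW.match(line)
        if not match:
            continue  # header row or anything that is not a device row
        name, _state, read, write, cksum = match.groups()
        counts = (int(read), int(write), int(cksum))
        if any(counts):
            flagged[name] = counts
    return flagged


if __name__ == "__main__":
    for device, (read, write, cksum) in devices_with_errors(SAMPLE).items():
        print(f"{device}: read={read} write={write} cksum={cksum}")
```

Once the SMART data for the flagged disk looks healthy, `zpool clear citadel` followed by `zpool scrub citadel` are the two commands the reply refers to.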

-

On 2/15/2023 at 5:50 PM, JorgeB said:

There are some known issues running a docker image (btrfs or xfs) on top of a zfs filesystem, AFAIK this is a zfs problem, not Unraid, solution for now is to create the image on a non zfs drive/pool or use the folder option.

As a workaround for this issue, you can put the docker image inside a ZVOL on a ZFS pool. A ZVOL lets you create an XFS, BTRFS or any other filesystem "partition" inside ZFS. Note the quotes: it is not a partition at all, but a block device backed by the pool.

My own server uses this to run entirely on ZFS without dedicating a full drive or partition to the docker files.
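A rough sketch of what that setup could look like, written as a Python helper that only builds the command lines and does not run anything. The pool name, volume name, size and mount point are placeholders invented for this example, not values from my server. `zfs create -V` creates the ZVOL, which then shows up under `/dev/zvol/` on Linux. How Unraid's docker settings are then pointed at the mount is left out.

```python
import shlex


def zvol_docker_commands(pool, name="docker", size="20G", fs="xfs",
                         mountpoint="/mnt/docker-zvol"):
    """Build (but do not run) the commands to create and mount a ZVOL.

    All default values are hypothetical placeholders.
    """
    volume = f"{pool}/{name}"
    device = f"/dev/zvol/{volume}"  # block device exposed for the ZVOL
    return [
        ["zfs", "create", "-V", size, volume],  # create the ZVOL
        [f"mkfs.{fs}", device],                 # format it (xfs, btrfs, ...)
        ["mkdir", "-p", mountpoint],
        ["mount", device, mountpoint],
    ]


if __name__ == "__main__":
    # "tank" is a made-up pool name; review the commands before running them.
    for command in zvol_docker_commands("tank"):
        print(shlex.join(command))
```

Printing the commands first, instead of running them, leaves room to check the pool name and size before anything is created.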

-

Indeed, this is not an official thread. We're just sharing our enthusiasm and questions about what might happen. And this "morse session" seems to have overflowed more than expected.

However, while some reactions were "childish", others were "grumpy". Feeling harassed or annoyed at this point doesn't make sense to me. I clearly don't spend all my time on this forum and hadn't witnessed such "craziness", so to speak, until now, so I am probably less affected than others.

-

·· −·−· ·− −· ··· · · − ···· · −− ·− − ·−· ·· −··− −· −−− ·−− ·−·−·−
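For readers who don't speak Morse, a small decoder sketch in Python. It assumes the dots and dashes in the post are typographic characters (middle dot, minus sign and a few common lookalikes) and that letters are separated by spaces. The message has no word gaps, so the letters come out run together; decoded letter by letter it reads "ICANSEETHEMATRIXNOW.".

```python
# International Morse code for A-Z plus the full stop.
MORSE = {
    ".-": "A", "-...": "B", "-.-.": "C", "-..": "D", ".": "E", "..-.": "F",
    "--.": "G", "....": "H", "..": "I", ".---": "J", "-.-": "K", ".-..": "L",
    "--": "M", "-.": "N", "---": "O", ".--.": "P", "--.-": "Q", ".-.": "R",
    "...": "S", "-": "T", "..-": "U", "...-": "V", ".--": "W", "-..-": "X",
    "-.--": "Y", "--..": "Z", ".-.-.-": ".",
}

# Assumption: the post uses typographic symbols rather than ASCII '.' and '-'.
# These lookalike code points are mapped back to plain ASCII before decoding.
NORMALIZE = str.maketrans({
    "\u00b7": ".", "\u2022": ".", "\u2219": ".",  # dot lookalikes
    "\u2212": "-", "\u2013": "-", "\u2014": "-",  # dash lookalikes
})


def decode(message):
    """Decode space-separated Morse letters; unknown symbols become '?'."""
    symbols = message.translate(NORMALIZE).split()
    return "".join(MORSE.get(symbol, "?") for symbol in symbols)


if __name__ == "__main__":
    print(decode(".. -.-. .- -. ... . ."))
```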

-

13 minutes ago, JorgeB said:

Just need to set a spin down timeout on the GUI, like for other pools.

Good to know. I have no HDDs in my setup, so I wasn't aware that the GUI has this functionality... 😅

-

5 hours ago, brandon3055 said:

Will it be possible to automatically spin down an entire raidz pool when inactive? Example use case would be a pool that is used for nightly backups.

Sure, hdparm can set a standby (spin-down) timeout on each HDD, and powertop can help with general power tuning.
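The value passed to `hdparm -S` is an encoded timeout rather than a number of minutes, so here is a small Python sketch of the conversion. It follows the encoding described in the hdparm man page (0 disables the timeout, 1 to 240 are multiples of 5 seconds, 241 to 251 are multiples of 30 minutes) and ignores the special values above 251. The function name is invented for this example, and it rounds up so the drive never spins down earlier than requested.

```python
import math


def hdparm_standby_value(minutes):
    """Map a spin-down timeout in minutes to a value for `hdparm -S`.

    Sketch only: covers 0 (disabled), 1-240 (units of 5 seconds) and
    241-251 (units of 30 minutes).
    """
    if minutes < 0:
        raise ValueError("timeout cannot be negative")
    if minutes == 0:
        return 0  # timeout disabled
    if minutes <= 20:
        # Up to 20 minutes: count of 5-second units, at least 1.
        return max(1, math.ceil(minutes * 60 / 5))
    units = math.ceil(minutes / 30)  # 30-minute units, rounded up
    if units > 11:
        raise ValueError("timeouts above 5.5 hours are not covered here")
    return 240 + units


if __name__ == "__main__":
    # /dev/sdX is a placeholder device name.
    print(f"hdparm -S {hdparm_standby_value(30)} /dev/sdX")
```

For a pool that only wakes up for nightly backups, something like a 30-minute timeout on each member disk would be a starting point to experiment with.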

-

1 minute ago, Iker said:

This lazy load functionality has been on my mind for quite a while now, and it will help a lot with load times in general; that's why I'm focusing on rewriting a portion of the backend, so every functionality becomes a microservice, but given the unRaid UI architecture, it's a little more complicated than I anticipated, I understand your issue but please hang in there for a little while.

Your concern is kind. Thanks anyway.

-

1 hour ago, JonathanM said:

What benefits of ZFS still apply to single drive volumes? I know many of the BTRFS features are unavailable.

ARC still applies to single-drive ZFS volumes (or vdevs). It keeps recently read data in RAM, along with the most frequently used data. Other ZFS-specific properties can also be managed.
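To see whether ARC is actually helping on a single-drive pool, the hit ratio can be worked out from the `hits` and `misses` counters that ZFS on Linux exposes in `/proc/spl/kstat/zfs/arcstats`. A minimal Python sketch, assuming the usual `name type data` layout of that file. The sample numbers below are invented for illustration, not taken from a real system.

```python
# Made-up sample in the `name type data` layout of arcstats.
SAMPLE = """\
name                            type data
hits                            4    900
misses                          4    100
"""


def parse_arcstats(text):
    """Return {counter_name: value} for every `name type data` row."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        # Keep only rows with three fields whose last field is an integer.
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


def arc_hit_ratio(stats):
    """Fraction of reads served from ARC (0.0 when there is no activity)."""
    total = stats["hits"] + stats["misses"]
    return stats["hits"] / total if total else 0.0


if __name__ == "__main__":
    # On a live system, read /proc/spl/kstat/zfs/arcstats instead of SAMPLE.
    print(f"ARC hit ratio: {arc_hit_ratio(parse_arcstats(SAMPLE)):.1%}")
```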

-

3 hours ago, orhaN_utanG said:

Same here. Bought a license, server is laying around waiting for official ZFS support

Uhhhh...

You can already use ZFS in Unraid without a GUI, instead of letting your server gather dust: Support Forum

-

11 hours ago, jortan said:

edit: That said, ZFS raidz expansion is now a thing,

I doubt it, as the PR is still open on GitHub.

But indeed, expansion is already available for mirrored vdevs.

Concerning ZFS support in Unraid, it's "coming" in Unraid 6.12:

Quote

Additional Feedback and Gift Card Winners

We heard a lot of feedback for ZFS. We hear you loud and clear and it's coming in Unraid OS 6.12!

-

1 hour ago, Jclendineng said:

That’s true, I could do that, I was more thinking about ha within unraid so running 2 instances of the sql docker so I can patch one and not take all the apps relying on it down.

In the configuration you describe, the SQL docker must run on its own storage datastore, independent of the storage pool used by the applications that depend on your database container.

But the only failure this covers is your SQL storage; what about the application storage? You would only be able to restore your system in that one situation. Not ideal, but it's a start.

In any case, HA is literally a copy of your whole system on several servers, so your scenario is still not ideal for full HA.

-

The only good DIY alternative would be to set up ZFS replication and use a Mikrotik router (cheaper and customizable) to run a "heartbeat", pinging your VM or your host every minute. If the VM or container stops answering, the Mikrotik can open an SSH session to boot the sibling VM/container on the backup server, but traffic also needs to be managed on the network side.

So that's another mess to script, depending on your needs.
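To give an idea of the logic involved, here is a minimal Python sketch of such a heartbeat loop. It is only an illustration of the idea, not a Mikrotik script: `probe` stands in for the ping, `failover` for the SSH call that boots the sibling VM/container, and every name and default value here is hypothetical.

```python
import time


def monitor(probe, failover, max_failures=3, checks=10, interval=60.0,
            sleep=time.sleep):
    """Call probe() every `interval` seconds, up to `checks` times.

    After `max_failures` consecutive failed probes, call failover() once
    and return True. Return False if the primary kept answering.
    """
    failures = 0
    for _ in range(checks):
        if probe():
            failures = 0  # primary answered, reset the counter
        else:
            failures += 1
            if failures >= max_failures:
                failover()  # e.g. boot the sibling on the backup server
                return True
        sleep(interval)
    return False


if __name__ == "__main__":
    # Simulated run: the primary answers once, then stops responding.
    answers = iter([True, False, False, False])
    triggered = monitor(lambda: next(answers),
                        lambda: print("starting sibling on backup server"),
                        checks=4, interval=0)
    print("failover triggered:", triggered)
```

Requiring several consecutive failures avoids booting the sibling on a single lost ping. Moving the traffic over to the backup server is a separate problem that this sketch does not touch.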

-

Don't expect to do HA with Unraid; it isn't designed for it.

ESXi with vCenter is the basic system for this. You can replicate every hour with a third server (better than a VM) hosting Veeam. vCenter replication can do so every minute and offers more automated actions in case of emergency.

Another solution, still with vCenter, is Datacore, but like all the other solutions I mentioned, you have to pay for it, and this one can't be cracked. Either way, it assumes a proper HA server and network.

You can find ESXi and vCenter keys intended for labs on GitHub. Host an Unraid VM on it and replicate according to the solution you're using. But if you're a rookie, I strongly recommend against it. It's easier to manage backups than to set up and maintain an HA solution.

Load balancing and failover also need to be managed on your router if you expect traffic from the WAN to keep working.

-

Reserved - ZFS and Usage

-

Reserved - Hardware Choices and how to skip bottleneck

-

Quote

"Moria. You fear to go into those mines. The Dwarves dug too greedily and too deep. You know what they awoke in the darkness of Khazad-dûm... shadow and flame."

Deep in my cave sits a silent server; only the spinning of its fans can be heard, like a whisper. This server is a SuperMicro 1018GR-T.

This 1U server is connected from the deepest places of my home to the brightest places of the internet by a Mikrotik RB4011-GS+ connected directly to the GPON of my French ISP (Orange).

Once the server is opened, most of its secrets show: its components are brighter than the shade around us.

First of all, the heart of the beast: a Micron e230 USB DOM. This tiny 4 GB card is equipped with ECC components that should ensure Unraid doesn't suffer from files corrupted by magnetic interference. How could that reach it in such a dark place, where no light seems to descend?

A second vital element then shows up: an SSD-DM06 from SuperMicro. A special SATA port gives it the power it needs to run. This card is dedicated to storing Unraid's logs.

Not far away, 8 sticks of RAM from Micron can be seen: the server is equipped with 128 GB of ECC RAM. Enough to run one Chrome tab under a bright sun, if the sun ever came down here.

The machine is equipped with 6 SATA SSDs from Kingston. With a combined capacity of 11.52 TB, this server could store a quarter of Star Citizen at the maximum SATA III speed of 600 MB/s. Thanks to their Power Loss Protection (PLP), the data cached in each drive's own RAM can safely be written to the NAND in case of a power outage.

Those 6 drives are connected to an AOC-S3108L-H8iR from SuperMicro. As the SATA SSDs would saturate the DMI, which also has to carry Ethernet packets, the motherboard needs this card so as not to suffocate.

Finally, a green card catches our eye. This Quadro RTX 4000 is more than enough to run Minecraft Java.

Then a less flashy card shows up. Its dark plate was hiding a silver one on the other side. It seems to hide something inside…

Once the card is dissected, some jewels catch our attention. An Optane P4801x! This memory is so fast that no RAM is required to access the data in its cells quickly. PLP is still present, as some capacitors appear on its body. They give the controller time to run the last instructions it received and store them in the 100 GB of storage.

Some more conventional flash cells also sit on the Asus Hyper M.2 card. Two Micron 7400 Pro drives with PLP are there, as long as the 110 mm Optane stick. A smaller 80 mm stick remains: a Crucial P5 with 2 TB of storage, as much as the Micron sticks.

Finally, a V7 1500VA UPS sits calmly at the bottom of the rack to keep everything running, even through a power outage of up to 40 minutes.

-

4 hours ago, ncceylan said:

Yes. I am an unraid user. I want to add new hard drives to the existing raidz1 pool. But the Internet said that it is currently not possible. Seems like it can be achieved with RAID Z Expansion.

ZFS raidz expansion is not available yet.

You must destroy the pool and create a new one that includes every disk. Copy your data to an external USB drive first, so it is kept safe while you create your new zpool.

Unraid 6.11.5 - Can't connect to Mothership Error

in Connect Plugin Support

Got the same error. I had to disconnect and reconnect several times to get it back to a normal state.