Posts posted by gyto6

-

-

Hi everyone,

I'm having trouble with one container. I need to pass a variable to it, but that variable is already set in the container's docker-compose.yml file, so I have to edit it at the source.

The problem is that I'm using the Docker vDisk file, and with all the Docker layers involved, I'd like to know the best way to edit this file.

I'm not very experienced with Docker, and using a vDisk may lead me to a dead end, but I'd still be very interested in any explanation. Thank you!

-

Server updated. No problem.

-

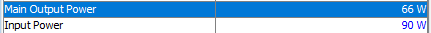

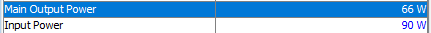

According to the IPMI, I'm drawing 90 W at idle... I expected better efficiency from this 1400 W PSU:

-

Done.

-

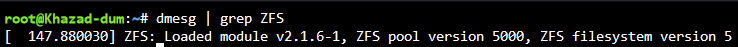

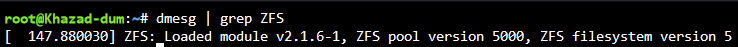

A quick note for ZFS users with NVMe devices.

While browsing the OpenZFS GitHub page, I discovered this PR from Brian Atkinson on the Direct I/O implementation in ZFS.

It is not suited to databases, but many other workloads should see a real benefit from it.

Keep in mind that Direct I/O bypasses the ARC and is very specific. So it's not a "performance booster" but a property that should be set according to your needs.

https://docs.google.com/presentation/d/1f9bE1S6KqwHWVJtsOOfCu_cVKAFQO94h/edit#slide=id.p1

-

18 hours ago, Sally-san said:

I see that "expand" has been added to ZFS for raidz configs, is this feature part of the ZFS plugin yet? Or do we have to wait for and update?

If it is part of the Unraid ZFS plugin already, how do we use it. I'm still a newb.

The ZFS "plugin" downloads the latest ZFS version from the OpenZFS GitHub, so the functionality is there.

-

15 minutes ago, Valiran said:

Yes if you have something to share, I may have something to read

Thanks !

First, you'll find a lot of information on Aaron Toponce's website: https://pthree.org/2012/04/17/install-zfs-on-debian-gnulinux/

Second, as a real-world scenario, I wrote this tutorial on optimizing ZFS for another application. I tried my best to explain ZFS, so it might be worth a look: https://github.com/photoprism/photoprism-contrib/tree/main/tutorials/zfs

Third, the ZFS/Unraid Compendium from @BVD: https://github.com/teambvd/UnRAID-Performance-Compendium/blob/main/README.md

Quote: ZFS, while powerful, isn't for everyone - you'll need not only have the desire/drive to do some of your own background research and learning, but just as importantly, the TIME to invest in that learning. It's almost comically easy to have a much worse experience with zfs than alternatives by simply copy/pasting something seen online, while not fully understanding the implications of what that exact command syntax was meant to do.

-

-

2 minutes ago, Valiran said:

So if I have to go ZFS, I better use TrueNAS, at least until full support is added to Unraid

I don't think there's any need to come back to Unraid if you only plan to use Plex and store personal data on your server.

-

4 minutes ago, Valiran said:

What you've linked if about command lines. The ZFS addons for Unraid are not graphical friendly?

Nope. If Unraid adds ZFS support, it should be a bit more graphical, but probably with many caveats, as there are tons of properties for customizing a zpool and a dataset.

-

The first thing that comes to mind: depending on the space you need, set up a raidz or raidz2 and dedicate your 18 TB drive as a spare.

https://docs.oracle.com/cd/E53394_01/html/E54801/gpegp.html
https://docs.oracle.com/cd/E19253-01/820-2315/gcvcw/index.html (French version)

Run a safe test, with no data in the array, to check that the 18 TB drive is automatically brought in when a drive fails. Just unplug a SATA cable to simulate a drive failure.

When creating the raidz, set the autoreplace property.
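A minimal sketch of that layout, assuming a hypothetical pool named `tank` and placeholder device names. The `ZPOOL` variable is only there for illustration: set it to `echo` to print the command instead of running it.

```shell
#!/bin/sh
# Hypothetical names: adjust the pool and device names to your own system.
ZPOOL="${ZPOOL:-zpool}"   # assumption: set ZPOOL=echo to preview the command only

# Create a raidz2 pool with one hot spare and autoreplace enabled at creation.
# $1 = pool name, $2 = spare device, remaining arguments = data devices
create_raidz2_with_spare() {
    pool="$1"
    spare="$2"
    shift 2
    "$ZPOOL" create -o autoreplace=on "$pool" raidz2 "$@" spare "$spare"
}
```

For example, `create_raidz2_with_spare tank sdf sdb sdc sdd sde` would use `sdf` (standing in for the 18 TB drive) as the spare. `zpool status tank` should then list it under "spares".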

-

-

26 minutes ago, Valiran said:

Is ZFS pertinent for a Plex Library?

I've moved from Unraid to Xpenology (virtualized on Unraid) because I've had performance issues with Plex libraries with standard Array

ZFS is not inherently better suited. But if you have the required hardware in your server, you can tune the file system for that use.

In any case, if you're running into performance issues, I don't think ZFS will magically solve them.

-

For those who want to use a ZVOL formatted with XFS to hold an XFS Docker disk image:

    zfs create -V 20G yourpool/datasetname   # -V creates a ZVOL; 20G is its size
    cfdisk /dev/zvol/yourpool/datasetname   # An easy way to create a partition
    mkfs.xfs /dev/zvol/yourpool/datasetname-part1   # Format the partition with the desired file system
    mount /dev/zvol/yourpool/datasetname-part1 /mnt/yourpool/datasetname/   # The expected mount point

-

On 10/22/2022 at 5:51 AM, fwiler said:

Confused.

1st line- is pool/docker already a location on your pool or are you creating a new one with this command?

3rd line- I read that xfs should be used instead of btrfs, so would I just use mkfs.xfs -q /

and I don't understand the docker-part1. Wouldn't you mkfs on /dev/pool/docker? When I try with /dev/pool/docker-part1 it says Error accessing specified device /dev/ssdpool/docker-part1 : No such file or directory. (Yes I did write after cfdisk create)

Update- never mind. The write in cfdisk didn't happen for some reason. I just used fdisk. Now I see docker-part1 is automatically created.

To explain:

zfs create: creates a dataset, so any path following this command refers to a ZFS pool (already created) and a dataset (or sub-dataset) to be created.

Do not confuse "zfs create" with "zpool create".

BTRFS or XFS: when I posted this, I was first trying to run a Docker disk image inside a ZVOL, so I started with BTRFS. Several recommendations tend to favor XFS over BTRFS.

Use an XFS Docker disk image in a ZVOL partitioned with XFS.

Error accessing specified device: /dev/pool/docker is a ZFS "file". /dev/pool/docker-part1 is the path to the partition contained in the ZVOL.

Once your partition is formatted with the appropriate file system, you're better off using a script to mount it at boot on a different path to enable writing. Here is my own:

mount /dev/zvol/pool/Docker-part1 /mnt/pool/Docker/
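If you want the boot script to be a little more defensive, here is a minimal sketch that only mounts when the partition device is actually present. The function name and the `MOUNT_BIN` override are my own assumptions for illustration, not something Unraid provides.

```shell
#!/bin/sh
# Hypothetical helper; the paths you pass in should match your own pool.
MOUNT_BIN="${MOUNT_BIN:-mount}"   # assumption: set to echo to preview the command

# Mount the ZVOL partition only if its block device exists.
# $1 = partition device, $2 = mount point
mount_zvol() {
    dev="$1"
    target="$2"
    if [ ! -b "$dev" ]; then
        echo "device $dev not found, is the pool imported?" >&2
        return 1
    fi
    mkdir -p "$target" || return 1
    "$MOUNT_BIN" "$dev" "$target"
}
```

In the boot script, the call would then be `mount_zvol /dev/zvol/pool/Docker-part1 /mnt/pool/Docker/`.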

-

-

I've had a similar issue, but with the Nvidia Driver plugin.

Anyway, the missing plugin was automatically downloaded right after the reboot.

-

-

As a diagram:

MYDOMAIN.COM -----> WAN ROUTER IP -----> PORT 80/443 FORWARDED TO NGINX -----> PRIVATE HOST FOR MYDOMAIN.COM
                                                                        -----> PRIVATE HOST FOR MYDOMAIN2.COM

-

If you want to host several websites behind one WAN IP, you must forward the relevant ports to a reverse proxy like Nginx, then assign a different domain name to each reachable host.

Once each host has a public domain name, declare them in Nginx, which will route incoming requests to the right host according to the requested domain name.
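To make this concrete, here is a minimal sketch that prints one Nginx server block per domain. The function name, the private IP addresses and the output path are hypothetical, and it only covers plain HTTP on port 80 (HTTPS needs certificates on top of this).

```shell
#!/bin/sh
# Print one Nginx server block that forwards a domain to a private host.
# $1 = public domain name, $2 = private host URL (both are example values)
nginx_vhost() {
    cat <<EOF
server {
    listen 80;
    server_name $1;

    location / {
        proxy_pass $2;
        proxy_set_header Host \$host;
    }
}
EOF
}
```

For example, `nginx_vhost mydomain.com http://192.168.1.10:8080 > mydomain.com.conf` and `nginx_vhost mydomain2.com http://192.168.1.11:8080 > mydomain2.com.conf`, with both files then placed wherever your Nginx instance loads its site configurations.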

-

54 minutes ago, Iker said:

Great! the new method to obtain datasets, volumes, snapshots, and their respective properties; is as good as it can be to prevent parsing errors and, overall, much quicker than the previous one. Now that I have finished with that and it is working (As far as I know) very good, I will focus on new functionality and improvements over the UI; the two you describe are on their way.

If I can help with deleting all of a dataset's snapshots:

    zfs destroy tank/docker@%

In ZFS, % separates the first and last snapshots of a range. With nothing on either side, it spans from the oldest to the newest snapshot, so it behaves like the more common * wildcard: every snapshot of the docker dataset will be removed.
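Since this is destructive, a dry run first is worth it. A minimal sketch, where the function names and the `ZFS` override are assumptions of mine (set `ZFS=echo` to only print the commands):

```shell
#!/bin/sh
ZFS="${ZFS:-zfs}"   # assumption: set ZFS=echo to print the commands only

# Build the "every snapshot of this dataset" argument, e.g. tank/docker@%
all_snapshots_of() {
    printf '%s@%%\n' "$1"
}

# Dry run: -n makes no changes, -v lists what would be destroyed.
preview_snapshot_cleanup() {
    "$ZFS" destroy -nv "$(all_snapshots_of "$1")"
}

# Real deletion; run the preview first.
destroy_all_snapshots() {
    "$ZFS" destroy -v "$(all_snapshots_of "$1")"
}
```

Run `preview_snapshot_cleanup tank/docker`, check the list, and only then `destroy_all_snapshots tank/docker`.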

-

-

1 hour ago, calvados said:

Add the User Scripts plugin and create a script with the relevant command:

    zpool scrub tank

Run it twice a month for consumer-grade drives and once a month for enterprise-grade drives.
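If you have more than one pool, the script can loop over them. A minimal sketch, with a hypothetical function name and a `ZPOOL` override that only exists to preview the commands:

```shell
#!/bin/sh
ZPOOL="${ZPOOL:-zpool}"   # assumption: set ZPOOL=echo to preview the commands

# Start a scrub on every pool passed as an argument.
scrub_pools() {
    for pool in "$@"; do
        "$ZPOOL" scrub "$pool" || echo "scrub failed to start on $pool" >&2
    done
}
```

The script would end with a call such as `scrub_pools tank`. For the schedule, a custom cron expression like `0 3 1,15 * *` gives twice a month and `0 3 1 * *` once a month (the 03:00 start time is just an example).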

-

-

On 7/30/2022 at 4:22 PM, BVD said:

I honestly dont see them including it in the base OS, instead opting to better support ZFS datasets within the UI for normal cache/share/etc operations. e.g. youd be able to create a zfs cache pool, but only if the optional zfs driver plugin is installed; otherwise, only btrfs and xfs are listed as options.

Thatd be how I'd do it anyway - forego any potential legal risk (no matter how small), limit memory footprint by leaving it out of the base for those who dont need/want it, and numerous other reasons.

Indeed, ZFS is a "bit" more complex, and I don't see it being integrated into Unraid as seamlessly as BTRFS/XFS were. Using a plugin, as is the case with "My Servers", is not something I expected, but it might indeed do the job. Allowing its integration with the disk management GUI would be the bare minimum, and we would finally no longer need a dummy USB key or disk to start the array.

11 hours ago, Marshalleq said:

Just to be clear - nothing in that post says ZFS will be available in 6.11. It only says they're laying the groundwork for it. Yes, that does officially elude that it's coming - but that's happened in a few places already.

Indeed, words have meaning. What do you mean by "that's happened in a few places already"? That other features have been announced but not released, or at least only in a later upgrade?

-

On 7/18/2022 at 11:00 PM, ich777 said:

TBH, even if it is implemented in Unraid directly I'm fine with it, as long as it is working and makes Unraid better for everyone I'm fine with it, I also don't see a reason why I should be mad about such a thing...

ZFS support is now official for Unraid 6.11. According to the release announcement:

This release includes some bug fixes and update of base packages. Sorry no major new feature in this release, but instead we are paying some "technical debt" and laying the groundwork necessary to add better third-party driver and ZFS support. We anticipate a relatively short -rc series.

@ich777 You'll finally get some rest from this thread in the near future.

ICE: Current ZFS beginners should audit their configuration so they are able to recreate their zpool with the upcoming Unraid 6.11. Save your "zpool create" command in a text file, and do the same for your datasets, to keep a record of all the parameters you used when creating your pool.

BACK UP ALL YOUR DATA TO EXTERNAL MEDIA. A SNAPSHOT IS NOT A BACKUP. A COPY IS.

The ZnapZend or Sanoid plugins will help, as will a simple copy of your data to backup storage with the cp or rclone commands.

Don't expect the update to happen without trouble.
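If you no longer remember the exact commands you used, ZFS keeps a history you can export. A minimal sketch, assuming a hypothetical pool name and output directory; the function name and the `ZPOOL`/`ZFS` overrides are mine and only exist so the commands can be previewed with `echo`.

```shell
#!/bin/sh
ZPOOL="${ZPOOL:-zpool}"   # assumption: override with echo to preview
ZFS="${ZFS:-zfs}"         # assumption: override with echo to preview

# Save what is needed to recreate a pool by hand.
# $1 = pool name, $2 = output directory (ideally not on the pool itself)
save_pool_config() {
    pool="$1"
    outdir="$2"
    mkdir -p "$outdir" || return 1
    # The pool history records the zpool/zfs commands that modified the pool.
    "$ZPOOL" history "$pool" > "$outdir/$pool-history.txt"
    # Current pool properties.
    "$ZPOOL" get all "$pool" > "$outdir/$pool-pool-properties.txt"
    # Dataset properties that were set explicitly (source "local"), recursively.
    "$ZFS" get -r -s local all "$pool" > "$outdir/$pool-dataset-properties.txt"
}
```

For example, `save_pool_config tank /boot/config/zfs-notes` (the destination is just an example; any location outside the pool will do).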

-

On 7/26/2022 at 7:32 PM, stuoningur said:

I finally tried this plugin and set everything up, 3x 4TB hdds with a raidz1 and I did run a command to test the speed from level1techs forum and I have abysmal performance. I get around 20MB/s read and writes, not sure how to troubleshoot this. In the example given in the forum he had around 150MB/s with 4 8TB hdds

The command is:

fio --direct=1 --name=test --bs=256k --filename=/dumpster/test/whatever.tmp --size=32G --iodepth=64 --readwrite=randrw

Please provide your HDD model references and your zpool properties so we can help you better.

-

Please, if you're using ZnapZend or Sanoid, DO NOT USE the script to remove your empty snapshots.

These tools manage snapshot retention for archiving purposes and keep some snapshots longer. If you use this script, a snapshot that was meant to be kept for a month can simply get removed...

-

31 minutes ago, Jack8COke said:

1. Is it possible to do the snapshots for a specific dataset (rescursive) only?

Sure, select yourpool/yourdataset instead of yourpool to apply specific commands and snapshots to this dataset only.
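On the command line, that comes down to passing the dataset path together with the recursive flag. A minimal sketch, where the function name, the timestamp format and the `ZFS` override (set it to `echo` to preview) are my own assumptions:

```shell
#!/bin/sh
ZFS="${ZFS:-zfs}"   # assumption: set ZFS=echo to print the command only

# Snapshot one dataset and all of its children (-r), leaving the rest of the pool alone.
# $1 = dataset, $2 = optional snapshot name (defaults to a timestamp)
snapshot_dataset() {
    name="${2:-$(date +%Y-%m-%d_%H%M)}"
    "$ZFS" snapshot -r "$1@$name"
}
```

For example, `snapshot_dataset yourpool/yourdataset before-upgrade` creates `yourpool/yourdataset@before-upgrade` plus a snapshot with the same name on every child dataset.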

31 minutes ago, Jack8COke said:

2. Is there a command so that empty snapshots get deleted automatically?

Some scripts seem to exist, but for safety reasons, "we don't do that here", as empty snapshots take (nearly) no space.

This script does the job, but stay cautious, as it can break with an upgrade: https://gist.github.com/SkyWriter/58e36bfaa9eea1d36460

31 minutes ago, Jack8COke said:

3. I use truenas on another server offsite. Is it possible to send the snapshot to this truenas server so that they are also visible in the gui of truenas?

Have you heard of Sanoid?
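Sanoid ships with syncoid, its replication tool, which sends snapshots to another machine over SSH. A minimal sketch, assuming SSH access to the remote server is already set up; the host name, the target dataset, the function name and the `SYNCOID` override are hypothetical. The received snapshots are ordinary ZFS snapshots, so they should show up on the TrueNAS side as well.

```shell
#!/bin/sh
SYNCOID="${SYNCOID:-syncoid}"   # assumption: set SYNCOID=echo to preview

# Replicate a dataset and its children to a remote ZFS server.
# $1 = local dataset, $2 = remote target in user@host:pool/dataset form
replicate_to_remote() {
    "$SYNCOID" --recursive "$1" "$2"
}
```

For example: `replicate_to_remote yourpool/yourdataset root@truenas.example:backup/yourdataset`.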

-

27 minutes ago, ich777 said:

No.

27 minutes ago, SimonF said:

This is just updating the plugin helper code not a change to zfs. So no need to reboot.

Thanks guys, ✌️

How to edit docker-compose.yml inside docker vDisk

in Docker Engine

Posted

Well, I agree with you. I'll get in touch with the container developer to find out why I can't pass the variable the usual way on Unraid.