poto2

Everything posted by poto2

-

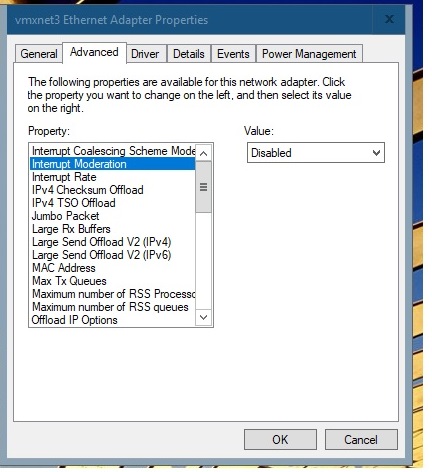

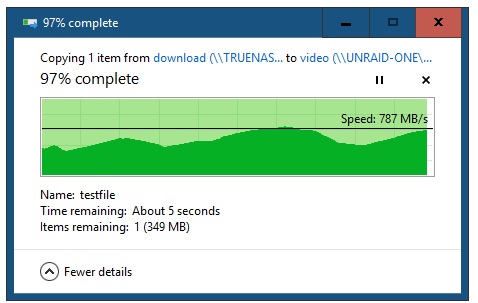

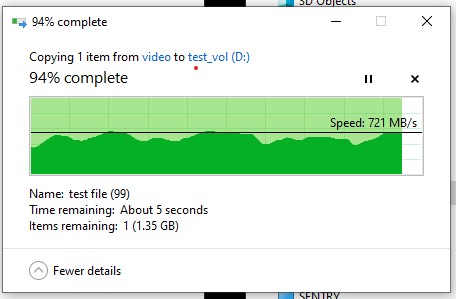

Did a bit more digging, and this forum already had the answer for the Windows PC network throttling: setting the Windows NIC driver's interrupt moderation to "disabled" resolved that side of the issue. For UnRaid, what worked for me was adjusting interrupt coalescing. My NIC driver didn't allow adjusting tx-usecs, but increasing rx-usecs did the trick:

root@unraid-one:~# ethtool -C eth0 rx-usecs 200 root@unraid-one:~# ethtool -c eth0 Coalesce parameters for eth0: Adaptive RX: n/a TX: n/a stats-block-usecs: n/a sample-interval: n/a pkt-rate-low: n/a pkt-rate-high: n/a rx-usecs: 200 rx-frames: n/a rx-usecs-irq: n/a rx-frames-irq: n/a tx-usecs: 0 tx-frames: n/a tx-usecs-irq: n/a tx-frames-irq: n/a rx-usecs-low: n/a rx-frame-low: n/a tx-usecs-low: n/a tx-frame-low: n/a rx-usecs-high: n/a rx-frame-high: n/a tx-usecs-high: n/a tx-frame-high: n/a CQE mode RX: n/a TX: n/a root@unraid-one:~#

That change increased file copy speed from the Win PC or TrueNAS to the UnRaid cache drive quite a bit. I still have some reading to do to figure out how to make the change permanent (user scripts?) and what the equivalent adjustment is for TrueNAS, but as far as I know the problem is solved. Thanks to @JorgeB for suggesting areas to investigate.
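Whatever ends up re-running `ethtool -C eth0 rx-usecs 200` at boot (User Scripts is only a guess at this point), it helps to confirm after a reboot that the value actually stuck. Below is a minimal Python sketch of that check. It assumes `ethtool -c` prints one `name: value` pair per line, as it does in a terminal; the forum flattened the transcript above. The function name is illustrative, and Python may not be installed on a stock Unraid box, so saved output can also be checked on another machine.

```python
import re
from typing import Optional

# Trimmed copy of the `ethtool -c eth0` output quoted above, restored to one
# parameter per line (the layout this parser expects).
SAMPLE = """\
Coalesce parameters for eth0:
Adaptive RX: n/a  TX: n/a
rx-usecs: 200
rx-frames: n/a
rx-usecs-irq: n/a
tx-usecs: 0
tx-frames: n/a
"""


def coalesce_value(ethtool_output: str, key: str) -> Optional[int]:
    """Return the integer value of `key` (e.g. "rx-usecs") from `ethtool -c`
    output, or None if the driver reports n/a or the key is absent."""
    # Anchor at line start so "rx-usecs" does not match "rx-usecs-irq".
    pattern = rf"^{re.escape(key)}:\s*(\S+)\s*$"
    match = re.search(pattern, ethtool_output, re.MULTILINE)
    if match is None or match.group(1) == "n/a":
        return None
    return int(match.group(1))


if __name__ == "__main__":
    # On the server you could pass live output instead, e.g.
    # subprocess.run(["ethtool", "-c", "eth0"], capture_output=True, text=True).stdout
    rx_usecs = coalesce_value(SAMPLE, "rx-usecs")
    if rx_usecs == 200:
        print("rx-usecs is 200; the coalescing setting is in effect")
    else:
        print(f"rx-usecs is {rx_usecs}; re-apply with: ethtool -C eth0 rx-usecs 200")
```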

-

Something is definitely wrong: single-stream iperf3 gets nowhere near 10Gb.

C:\download>cd iperf313 C:\download\iperf313>iperf3 -c unraid-one Connecting to host unraid-one, port 5201 [ 4] local 192.168.1.194 port 62698 connected to 192.168.1.162 port 5201 [ ID] Interval Transfer Bandwidth [ 4] 0.00-1.00 sec 169 MBytes 1.42 Gbits/sec [ 4] 1.00-2.00 sec 161 MBytes 1.35 Gbits/sec [ 4] 2.00-3.00 sec 166 MBytes 1.39 Gbits/sec [ 4] 3.00-4.00 sec 176 MBytes 1.47 Gbits/sec [ 4] 4.00-5.00 sec 146 MBytes 1.23 Gbits/sec [ 4] 5.00-6.00 sec 169 MBytes 1.41 Gbits/sec [ 4] 6.00-7.00 sec 200 MBytes 1.67 Gbits/sec [ 4] 7.00-8.00 sec 176 MBytes 1.48 Gbits/sec [ 4] 8.00-9.00 sec 144 MBytes 1.20 Gbits/sec [ 4] 9.00-10.00 sec 170 MBytes 1.43 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bandwidth [ 4] 0.00-10.00 sec 1.64 GBytes 1.41 Gbits/sec sender [ 4] 0.00-10.00 sec 1.64 GBytes 1.41 Gbits/sec receiver iperf Done.

C:\download\iperf313>iperf3 -c unraid-one -R Connecting to host unraid-one, port 5201 Reverse mode, remote host unraid-one is sending [ 4] local 192.168.1.194 port 62700 connected to 192.168.1.162 port 5201 [ ID] Interval Transfer Bandwidth [ 4] 0.00-1.00 sec 552 MBytes 4.63 Gbits/sec [ 4] 1.00-2.00 sec 544 MBytes 4.56 Gbits/sec [ 4] 2.00-3.00 sec 547 MBytes 4.59 Gbits/sec [ 4] 3.00-4.00 sec 540 MBytes 4.53 Gbits/sec [ 4] 4.00-5.00 sec 544 MBytes 4.56 Gbits/sec [ 4] 5.00-6.00 sec 542 MBytes 4.55 Gbits/sec [ 4] 6.00-7.00 sec 541 MBytes 4.54 Gbits/sec [ 4] 7.00-8.00 sec 543 MBytes 4.56 Gbits/sec [ 4] 8.00-9.00 sec 546 MBytes 4.58 Gbits/sec [ 4] 9.00-10.00 sec 549 MBytes 4.60 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bandwidth Retr [ 4] 0.00-10.00 sec 5.32 GBytes 4.57 Gbits/sec 0 sender [ 4] 0.00-10.00 sec 5.32 GBytes 4.57 Gbits/sec receiver iperf Done.
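iperf3 mixes units. The Transfer column is binary: "MBytes" here behaves as MiB, since 169 MiB in one second works out to the 1.42 Gbits/sec shown in the first interval. The rate column is in decimal bits. The short Python sketch below puts these rates on the MB/s scale that Windows file copies report; the helper names are illustrative.

```python
MIB = 1024 ** 2


def mib_to_gbits(mib: float, seconds: float = 1.0) -> float:
    """Convert an iperf3 'MBytes' (MiB) transfer over `seconds` to Gbit/s."""
    return mib * MIB * 8 / seconds / 1e9


def gbits_to_mb_per_s(gbits: float) -> float:
    """Convert Gbit/s (10**9 bit/s) to MB/s (10**6 byte/s)."""
    return gbits * 1e9 / 8 / 1e6


if __name__ == "__main__":
    # First 1-second interval of the Win10 -> unraid-one run: 169 MBytes.
    print(f"169 MiB in 1 s = {mib_to_gbits(169):.2f} Gbit/s")
    for label, gbits in [("Win10 -> unraid-one", 1.41),
                         ("unraid-one -> Win10 (-R)", 4.57)]:
        print(f"{label}: {gbits} Gbit/s ~ {gbits_to_mb_per_s(gbits):.0f} MB/s")
```

On that scale, the single-stream Win10 to unraid-one result of 1.41 Gbit/s is about 176 MB/s. That is roughly the same ballpark as the ~200MB/s copy speed described elsewhere in this thread.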

-

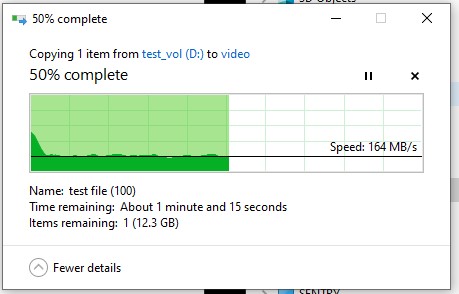

Understood on the iperf streams; I was just making sure the total bandwidth was available. The Win10<<>>UR file copies were done using a user share. I also cleared and reformatted the cache drive, then tried copying again via the user share. Same result: UR>>Win10 700MB/s, Win10>>UR 600MB/s for a couple of seconds, then 200MB/s. Grasping at straws, I also checked the router for duplicate IP addresses, but there was nothing out of the ordinary there. I also restarted the switches.

-

Eliminating TrueNAS from the equation was probably a good idea! Iperf3 between the bare-metal Win10 PC and UnRaid is much better: 9Gbps from UR and 6Gbps to UR with 4 streams. This is from a bare-metal Win10 PC with a 10Gb NIC and an Intel NVMe drive.

perf313>iperf3 -c unraid-one -P 4 -R Connecting to host unraid-one, port 5201 Reverse mode, remote host unraid-one is sending [ 4] local 192.168.1.194 port 50573 connected to 192.168.1.162 port 5201 [ 6] local 192.168.1.194 port 50574 connected to 192.168.1.162 port 5201 [ 8] local 192.168.1.194 port 50575 connected to 192.168.1.162 port 5201 [ 10] local 192.168.1.194 port 50576 connected to 192.168.1.162 port 5201 [ ID] Interval Transfer Bandwidth [ 4] 0.00-1.00 sec 262 MBytes 2.20 Gbits/sec [ 6] 0.00-1.00 sec 255 MBytes 2.14 Gbits/sec [ 8] 0.00-1.00 sec 230 MBytes 1.93 Gbits/sec [ 10] 0.00-1.00 sec 240 MBytes 2.01 Gbits/sec [SUM] 0.00-1.00 sec 987 MBytes 8.28 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 1.00-2.00 sec 277 MBytes 2.33 Gbits/sec [ 6] 1.00-2.00 sec 277 MBytes 2.33 Gbits/sec [ 8] 1.00-2.00 sec 260 MBytes 2.18 Gbits/sec [ 10] 1.00-2.00 sec 270 MBytes 2.26 Gbits/sec [SUM] 1.00-2.00 sec 1.06 GBytes 9.10 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 2.00-3.00 sec 279 MBytes 2.34 Gbits/sec [ 6] 2.00-3.00 sec 253 MBytes 2.12 Gbits/sec [ 8] 2.00-3.00 sec 311 MBytes 2.61 Gbits/sec [ 10] 2.00-3.00 sec 282 MBytes 2.37 Gbits/sec [SUM] 2.00-3.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 3.00-4.00 sec 304 MBytes 2.55 Gbits/sec [ 6] 3.00-4.00 sec 286 MBytes 2.40 Gbits/sec [ 8] 3.00-4.00 sec 274 MBytes 2.30 Gbits/sec [ 10] 3.00-4.00 sec 262 MBytes 2.20 Gbits/sec [SUM] 3.00-4.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 4.00-5.00 sec 277 MBytes 2.32 Gbits/sec [ 6] 4.00-5.00 sec 284 MBytes 2.39 Gbits/sec [ 8] 4.00-5.00 sec 289 MBytes 2.42 Gbits/sec [ 10] 4.00-5.00 sec 275 MBytes 2.30 Gbits/sec [SUM] 4.00-5.00 sec 1.10 GBytes 9.43 
Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 5.00-6.00 sec 268 MBytes 2.25 Gbits/sec [ 6] 5.00-6.00 sec 270 MBytes 2.26 Gbits/sec [ 8] 5.00-6.00 sec 312 MBytes 2.62 Gbits/sec [ 10] 5.00-6.00 sec 275 MBytes 2.30 Gbits/sec [SUM] 5.00-6.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 6.00-7.00 sec 274 MBytes 2.30 Gbits/sec [ 6] 6.00-7.00 sec 274 MBytes 2.30 Gbits/sec [ 8] 6.00-7.00 sec 288 MBytes 2.42 Gbits/sec [ 10] 6.00-7.00 sec 289 MBytes 2.43 Gbits/sec [SUM] 6.00-7.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 7.00-8.00 sec 272 MBytes 2.28 Gbits/sec [ 6] 7.00-8.00 sec 300 MBytes 2.51 Gbits/sec [ 8] 7.00-8.00 sec 285 MBytes 2.39 Gbits/sec [ 10] 7.00-8.00 sec 269 MBytes 2.26 Gbits/sec [SUM] 7.00-8.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 8.00-9.00 sec 295 MBytes 2.47 Gbits/sec [ 6] 8.00-9.00 sec 284 MBytes 2.38 Gbits/sec [ 8] 8.00-9.00 sec 274 MBytes 2.30 Gbits/sec [ 10] 8.00-9.00 sec 273 MBytes 2.29 Gbits/sec [SUM] 8.00-9.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 9.00-10.00 sec 276 MBytes 2.32 Gbits/sec [ 6] 9.00-10.00 sec 290 MBytes 2.43 Gbits/sec [ 8] 9.00-10.00 sec 269 MBytes 2.26 Gbits/sec [ 10] 9.00-10.00 sec 290 MBytes 2.43 Gbits/sec [SUM] 9.00-10.00 sec 1.10 GBytes 9.44 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bandwidth Retr [ 4] 0.00-10.00 sec 2.72 GBytes 2.34 Gbits/sec 0 sender [ 4] 0.00-10.00 sec 2.72 GBytes 2.34 Gbits/sec receiver [ 6] 0.00-10.00 sec 2.71 GBytes 2.33 Gbits/sec 0 sender [ 6] 0.00-10.00 sec 2.71 GBytes 2.33 Gbits/sec receiver [ 8] 0.00-10.00 sec 2.73 GBytes 2.35 Gbits/sec 0 sender [ 8] 0.00-10.00 sec 2.73 GBytes 2.34 Gbits/sec receiver [ 10] 0.00-10.00 sec 2.66 GBytes 2.29 Gbits/sec 0 sender [ 10] 0.00-10.00 sec 2.66 GBytes 2.29 Gbits/sec receiver [SUM] 0.00-10.00 sec 10.8 GBytes 9.30 Gbits/sec 0 
sender [SUM] 0.00-10.00 sec 10.8 GBytes 9.29 Gbits/sec receiver iperf Done. C:\download\iperf313>iperf3 -c unraid-one -P 4 Connecting to host unraid-one, port 5201 [ 4] local 192.168.1.194 port 50578 connected to 192.168.1.162 port 5201 [ 6] local 192.168.1.194 port 50579 connected to 192.168.1.162 port 5201 [ 8] local 192.168.1.194 port 50580 connected to 192.168.1.162 port 5201 [ 10] local 192.168.1.194 port 50581 connected to 192.168.1.162 port 5201 [ ID] Interval Transfer Bandwidth [ 4] 0.00-1.00 sec 180 MBytes 1.51 Gbits/sec [ 6] 0.00-1.00 sec 180 MBytes 1.51 Gbits/sec [ 8] 0.00-1.00 sec 201 MBytes 1.69 Gbits/sec [ 10] 0.00-1.00 sec 174 MBytes 1.46 Gbits/sec [SUM] 0.00-1.00 sec 735 MBytes 6.17 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 1.00-2.00 sec 166 MBytes 1.39 Gbits/sec [ 6] 1.00-2.00 sec 185 MBytes 1.55 Gbits/sec [ 8] 1.00-2.00 sec 260 MBytes 2.18 Gbits/sec [ 10] 1.00-2.00 sec 208 MBytes 1.75 Gbits/sec [SUM] 1.00-2.00 sec 820 MBytes 6.88 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 2.00-3.00 sec 144 MBytes 1.21 Gbits/sec [ 6] 2.00-3.00 sec 180 MBytes 1.51 Gbits/sec [ 8] 2.00-3.00 sec 180 MBytes 1.51 Gbits/sec [ 10] 2.00-3.00 sec 180 MBytes 1.51 Gbits/sec [SUM] 2.00-3.00 sec 684 MBytes 5.74 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 3.00-4.00 sec 182 MBytes 1.53 Gbits/sec [ 6] 3.00-4.00 sec 209 MBytes 1.75 Gbits/sec [ 8] 3.00-4.00 sec 179 MBytes 1.50 Gbits/sec [ 10] 3.00-4.00 sec 164 MBytes 1.38 Gbits/sec [SUM] 3.00-4.00 sec 734 MBytes 6.16 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 4.00-5.00 sec 150 MBytes 1.26 Gbits/sec [ 6] 4.00-5.00 sec 162 MBytes 1.36 Gbits/sec [ 8] 4.00-5.00 sec 189 MBytes 1.59 Gbits/sec [ 10] 4.00-5.00 sec 222 MBytes 1.87 Gbits/sec [SUM] 4.00-5.00 sec 724 MBytes 6.07 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 5.00-6.00 sec 167 MBytes 1.40 Gbits/sec [ 6] 5.00-6.00 sec 174 MBytes 1.46 Gbits/sec [ 8] 5.00-6.00 sec 172 MBytes 
1.45 Gbits/sec [ 10] 5.00-6.00 sec 205 MBytes 1.72 Gbits/sec [SUM] 5.00-6.00 sec 718 MBytes 6.03 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 6.00-7.00 sec 152 MBytes 1.28 Gbits/sec [ 6] 6.00-7.00 sec 190 MBytes 1.60 Gbits/sec [ 8] 6.00-7.00 sec 211 MBytes 1.77 Gbits/sec [ 10] 6.00-7.00 sec 196 MBytes 1.64 Gbits/sec [SUM] 6.00-7.00 sec 750 MBytes 6.29 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 7.00-8.00 sec 183 MBytes 1.53 Gbits/sec [ 6] 7.00-8.00 sec 175 MBytes 1.46 Gbits/sec [ 8] 7.00-8.00 sec 205 MBytes 1.72 Gbits/sec [ 10] 7.00-8.00 sec 196 MBytes 1.64 Gbits/sec [SUM] 7.00-8.00 sec 758 MBytes 6.36 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 8.00-9.00 sec 223 MBytes 1.87 Gbits/sec [ 6] 8.00-9.00 sec 211 MBytes 1.77 Gbits/sec [ 8] 8.00-9.00 sec 160 MBytes 1.35 Gbits/sec [ 10] 8.00-9.00 sec 162 MBytes 1.36 Gbits/sec [SUM] 8.00-9.00 sec 756 MBytes 6.35 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ 4] 9.00-10.00 sec 142 MBytes 1.19 Gbits/sec [ 6] 9.00-10.00 sec 167 MBytes 1.40 Gbits/sec [ 8] 9.00-10.00 sec 199 MBytes 1.67 Gbits/sec [ 10] 9.00-10.00 sec 169 MBytes 1.42 Gbits/sec [SUM] 9.00-10.00 sec 678 MBytes 5.69 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bandwidth [ 4] 0.00-10.00 sec 1.65 GBytes 1.42 Gbits/sec sender [ 4] 0.00-10.00 sec 1.65 GBytes 1.42 Gbits/sec receiver [ 6] 0.00-10.00 sec 1.79 GBytes 1.54 Gbits/sec sender [ 6] 0.00-10.00 sec 1.79 GBytes 1.54 Gbits/sec receiver [ 8] 0.00-10.00 sec 1.91 GBytes 1.64 Gbits/sec sender [ 8] 0.00-10.00 sec 1.91 GBytes 1.64 Gbits/sec receiver [ 10] 0.00-10.00 sec 1.83 GBytes 1.57 Gbits/sec sender [ 10] 0.00-10.00 sec 1.83 GBytes 1.57 Gbits/sec receiver [SUM] 0.00-10.00 sec 7.19 GBytes 6.17 Gbits/sec sender [SUM] 0.00-10.00 sec 7.18 GBytes 6.17 Gbits/sec receiver iperf Done. C:\download\iperf313>

File transfers are still puzzling, though.
UR to WS is as expected at 500-700MB/s, but WS to UR starts out around 600MB/s, then drops to a steady 200MB/s for the remainder of the transfer. I tried some additional steps to isolate the problem:

- reset UR network settings to "default"
- enabled disk shares and copied directly to a disk instead of a user share
- added a different NVMe drive to UR as a 2nd cache pool and tried file copies there

All trials continued to show writes to the UR cache drive(s) being throttled to around 200MB/s. The only thing I hadn't measured was the internal write speed of the NVMe cache, since I think the DiskSpeed docker only tests reads. So I ran a fio sequential write against the current and the added NVMe cache drives. Both results seem reasonable.

root@unraid-one:~# fio -direct=1 -iodepth=128 -rw=write -ioengine=libaio -bs=128k -numjobs=1 -time_based=1 -runtime=60 -group_reporting -filename=/dev/nvme1n1 -name=test test: (g=0): rw=write, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=128 fio-3.23 Starting 1 process Jobs: 1 (f=1): [W(1)][100.0%][w=626MiB/s][w=5010 IOPS][eta 00m:00s] test: (groupid=0, jobs=1): err= 0: pid=21979: Thu Sep 8 11:24:56 2022 write: IOPS=5028, BW=629MiB/s (659MB/s)(36.9GiB/60030msec); 0 zone resets slat (nsec): min=3327, max=60505, avg=9983.52, stdev=5930.32 clat (usec): min=333, max=58025, avg=25441.33, stdev=10088.11 lat (usec): min=340, max=58032, avg=25451.49, stdev=10088.09 clat percentiles (usec): | 1.00th=[ 6915], 5.00th=[ 9110], 10.00th=[12125], 20.00th=[15664], | 30.00th=[18482], 40.00th=[21365], 50.00th=[25297], 60.00th=[29230], | 70.00th=[32113], 80.00th=[35390], 90.00th=[39060], 95.00th=[41157], | 99.00th=[44827], 99.50th=[46400], 99.90th=[49546], 99.95th=[50594], | 99.99th=[52167] bw ( KiB/s): min=608512, max=690944, per=100.00%, avg=644709.46, stdev=18437.83, samples=119 iops : min= 4754, max= 5398, avg=5036.77, stdev=144.05, samples=119 lat (usec) : 500=0.01%, 750=0.01%, 1000=0.01% lat (msec) : 2=0.01%, 4=0.02%, 10=6.38%, 20=28.64%, 50=64.87% lat (msec) : 100=0.08% cpu : 
usr=4.09%, sys=5.33%, ctx=286419, majf=0, minf=10 IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0% submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0% complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1% issued rwts: total=0,301881,0,0 short=0,0,0,0 dropped=0,0,0,0 latency : target=0, window=0, percentile=100.00%, depth=128 Run status group 0 (all jobs): WRITE: bw=629MiB/s (659MB/s), 629MiB/s-629MiB/s (659MB/s-659MB/s), io=36.9GiB (39.6GB), run=60030-60030msec Disk stats (read/write): nvme1n1: ios=0/603433, merge=0/0, ticks=0/15330361, in_queue=15330361, util=100.00% root@unraid-one:~# fio -direct=1 -iodepth=128 -rw=write -ioengine=libaio -bs=128k -numjobs=1 -time_based=1 -runtime=60 -group_reporting -filename=/dev/nvme0n1 -name=test test: (g=0): rw=write, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=128 fio-3.23 Starting 1 process Jobs: 1 (f=1): [W(1)][100.0%][w=1806MiB/s][w=14.4k IOPS][eta 00m:00s] test: (groupid=0, jobs=1): err= 0: pid=979: Thu Sep 8 11:27:07 2022 write: IOPS=12.8k, BW=1605MiB/s (1683MB/s)(94.1GiB/60006msec); 0 zone resets slat (nsec): min=3089, max=70086, avg=7303.86, stdev=4234.12 clat (usec): min=187, max=86843, avg=9958.33, stdev=7533.10 lat (usec): min=192, max=86848, avg=9965.76, stdev=7533.19 clat percentiles (usec): | 1.00th=[ 379], 5.00th=[ 603], 10.00th=[ 857], 20.00th=[ 3032], | 30.00th=[ 6390], 40.00th=[ 7832], 50.00th=[ 8848], 60.00th=[ 9896], | 70.00th=[12387], 80.00th=[16909], 90.00th=[18482], 95.00th=[19530], | 99.00th=[33817], 99.50th=[46924], 99.90th=[64226], 99.95th=[69731], | 99.99th=[76022] bw ( MiB/s): min= 577, max= 1808, per=100.00%, avg=1606.06, stdev=328.10, samples=119 iops : min= 4616, max=14466, avg=12848.50, stdev=2624.81, samples=119 lat (usec) : 250=0.08%, 500=2.80%, 750=4.74%, 1000=5.09% lat (msec) : 2=6.73%, 4=0.82%, 10=40.31%, 20=35.23%, 50=3.81% lat (msec) : 100=0.39% cpu : usr=7.38%, sys=8.44%, 
ctx=548065, majf=0, minf=14 IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0% submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0% complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1% issued rwts: total=0,770638,0,0 short=0,0,0,0 dropped=0,0,0,0 latency : target=0, window=0, percentile=100.00%, depth=128 Run status group 0 (all jobs): WRITE: bw=1605MiB/s (1683MB/s), 1605MiB/s-1605MiB/s (1683MB/s-1683MB/s), io=94.1GiB (101GB), run=60006-60006msec Disk stats (read/write): nvme0n1: ios=45/768933, merge=0/0, ticks=9/7655367, in_queue=7655375, util=99.99% root@unraid-one:~#

I don't think the switch is bad; it's an unmanaged Netgear 8-port 10G copper switch (XS708). I could buy some Cat6 cables, but I hate to throw $$ at it without exhausting other options. Thanks for looking at this anyway; I'm open to any and all suggestions.
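The two fio runs above can be cross-checked from their own numbers. Bandwidth is IOPS times block size. By Little's law, the average number of I/Os in flight is IOPS times average latency, which should land close to the configured iodepth=128 if the drive stays saturated. The Python sketch below does that arithmetic. The helper names are illustrative. The second drive's IOPS (12840) is derived from its reported 1605 MiB/s divided by the 128 KiB block size, rather than read from the rounded "12.8k".

```python
from typing import Tuple

KIB = 1024
MIB = 1024 ** 2


def fio_bandwidth(iops: float, bs_kib: float) -> Tuple[float, float]:
    """Return (MiB/s, MB/s) for a given IOPS rate and block size in KiB."""
    bytes_per_s = iops * bs_kib * KIB
    return bytes_per_s / MIB, bytes_per_s / 1e6


def in_flight(iops: float, avg_lat_usec: float) -> float:
    """Little's law: average outstanding I/Os = completion rate x average latency."""
    return iops * avg_lat_usec / 1e6


if __name__ == "__main__":
    runs = {
        # device: (IOPS, average total latency "lat" in usec) from the fio output
        "nvme1n1": (5028, 25451.49),
        "nvme0n1": (12840, 9965.76),
    }
    for dev, (iops, lat) in runs.items():
        mib_s, mb_s = fio_bandwidth(iops, 128)
        print(f"{dev}: {mib_s:.1f} MiB/s = {mb_s:.0f} MB/s, "
              f"~{in_flight(iops, lat):.0f} I/Os in flight (iodepth=128)")
```

Both drives reproduce their reported BW figures (629 MiB/s = 659 MB/s and 1605 MiB/s = 1683 MB/s) and sit at about 128 outstanding I/Os. Even the slower drive writes several times faster than the ~200MB/s ceiling seen over the network.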

-

Still trying to eliminate possible problems here. I tried the following:

- changed NIC port
- changed switch port
- changed network cable
- removed the SMB multi-channel command from "SMB Extras", and made sure "Enable SMB Multi Channel" is set to "No" in SMB Settings
- changed NIC
- rebooted

Each run of iperf3 showed UnRaid>>TrueNAS maxing out the 10Gb connection, but TrueNAS>>UnRaid peaking around 2.8Gbps. As far as I know I'm using the default network configuration and the in-box NIC driver, so I'm really stumped here.

root@TrueNAS-02[~]# iperf3 -c 192.168.1.162 -P 4 -i 30 -t 30 -R Connecting to host 192.168.1.162, port 5201 Reverse mode, remote host 192.168.1.162 is sending [ 5] local 192.168.1.160 port 28353 connected to 192.168.1.162 port 5201 [ 7] local 192.168.1.160 port 17600 connected to 192.168.1.162 port 5201 [ 9] local 192.168.1.160 port 35904 connected to 192.168.1.162 port 5201 [ 11] local 192.168.1.160 port 34427 connected to 192.168.1.162 port 5201 [ ID] Interval Transfer Bitrate Retr Cwnd [ 5] 0.00-30.00 sec 2.49 GBytes 712 Mbits/sec [ 7] 0.00-30.00 sec 2.58 GBytes 737 Mbits/sec [ 9] 0.00-30.00 sec 2.61 GBytes 749 Mbits/sec [ 11] 0.00-30.00 sec 2.67 GBytes 764 Mbits/sec [SUM] 0.00-30.00 sec 10.3 GBytes 2.96 Gbits/sec - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bitrate Retr [ 5] 0.00-30.13 sec 2.49 GBytes 710 Mbits/sec 134864 sender [ 5] 0.00-30.00 sec 2.49 GBytes 712 Mbits/sec receiver [ 7] 0.00-30.13 sec 2.58 GBytes 735 Mbits/sec 131587 sender [ 7] 0.00-30.00 sec 2.58 GBytes 737 Mbits/sec receiver [ 9] 0.00-30.13 sec 2.62 GBytes 746 Mbits/sec 131321 sender [ 9] 0.00-30.00 sec 2.61 GBytes 749 Mbits/sec receiver [ 11] 0.00-30.13 sec 2.67 GBytes 761 Mbits/sec 131679 sender [ 11] 0.00-30.00 sec 2.67 GBytes 764 Mbits/sec receiver [SUM] 0.00-30.13 sec 10.4 GBytes 2.95 Gbits/sec 529451 sender [SUM] 0.00-30.00 sec 10.3 GBytes 2.96 Gbits/sec receiver iperf Done. 
root@TrueNAS-02[~]# iperf3 -c 192.168.1.162 -P 4 -i 30 -t 30 Connecting to host 192.168.1.162, port 5201 [ 5] local 192.168.1.160 port 21998 connected to 192.168.1.162 port 5201 [ 7] local 192.168.1.160 port 20626 connected to 192.168.1.162 port 5201 [ 9] local 192.168.1.160 port 50486 connected to 192.168.1.162 port 5201 [ 11] local 192.168.1.160 port 17773 connected to 192.168.1.162 port 5201 [ ID] Interval Transfer Bitrate Retr Cwnd [ 5] 0.00-30.00 sec 8.50 GBytes 2.43 Gbits/sec 160 113 KBytes [ 7] 0.00-30.00 sec 7.52 GBytes 2.15 Gbits/sec 149 142 KBytes [ 9] 0.00-30.00 sec 7.74 GBytes 2.22 Gbits/sec 145 162 KBytes [ 11] 0.00-30.00 sec 8.63 GBytes 2.47 Gbits/sec 143 174 KBytes [SUM] 0.00-30.00 sec 32.4 GBytes 9.28 Gbits/sec 597 - - - - - - - - - - - - - - - - - - - - - - - - - [ ID] Interval Transfer Bitrate Retr [ 5] 0.00-30.00 sec 8.50 GBytes 2.43 Gbits/sec 160 sender [ 5] 0.00-30.12 sec 8.50 GBytes 2.42 Gbits/sec receiver [ 7] 0.00-30.00 sec 7.52 GBytes 2.15 Gbits/sec 149 sender [ 7] 0.00-30.12 sec 7.52 GBytes 2.14 Gbits/sec receiver [ 9] 0.00-30.00 sec 7.74 GBytes 2.22 Gbits/sec 145 sender [ 9] 0.00-30.12 sec 7.74 GBytes 2.21 Gbits/sec receiver [ 11] 0.00-30.00 sec 8.63 GBytes 2.47 Gbits/sec 143 sender
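The Retr column in these two runs is easier to judge as a proportion. The rough Python sketch below turns each [SUM] retransmit count into a fraction of TCP segments sent. It rests on two assumptions. First, a 1448-byte MSS, which fits a 1500-byte MTU with TCP timestamps; jumbo frames would change it. Second, iperf3's "GBytes" is treated as GiB, consistent with its other columns. Because every segment is assumed to carry a full MSS, the segment count is a lower bound and the fraction an upper-end estimate.

```python
GIB = 1024 ** 3


def retransmit_fraction(transfer_gib: float, retransmits: int,
                        mss_bytes: int = 1448) -> float:
    """Rough share of TCP segments that were retransmitted.

    Assumes every segment carries a full MSS of payload, so the segment
    count is a lower bound and the returned fraction an upper-end estimate.
    """
    segments = transfer_gib * GIB / mss_bytes
    return retransmits / segments


if __name__ == "__main__":
    # [SUM] sender figures from the two TrueNAS-02 runs above.
    reverse = retransmit_fraction(10.4, 529451)  # -R run: 192.168.1.162 sending
    forward = retransmit_fraction(32.4, 597)     # forward run: TrueNAS-02 sending
    print(f"-R run:      {reverse:.2%} of segments retransmitted")
    print(f"forward run: {forward:.4%} of segments retransmitted")
```

Under these assumptions, the reverse (-R) run, where the transcript shows 192.168.1.162 sending, retransmits roughly 7% of its segments. The forward run's 597 retransmits over 32.4 GBytes are a tiny fraction of a percent.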

-

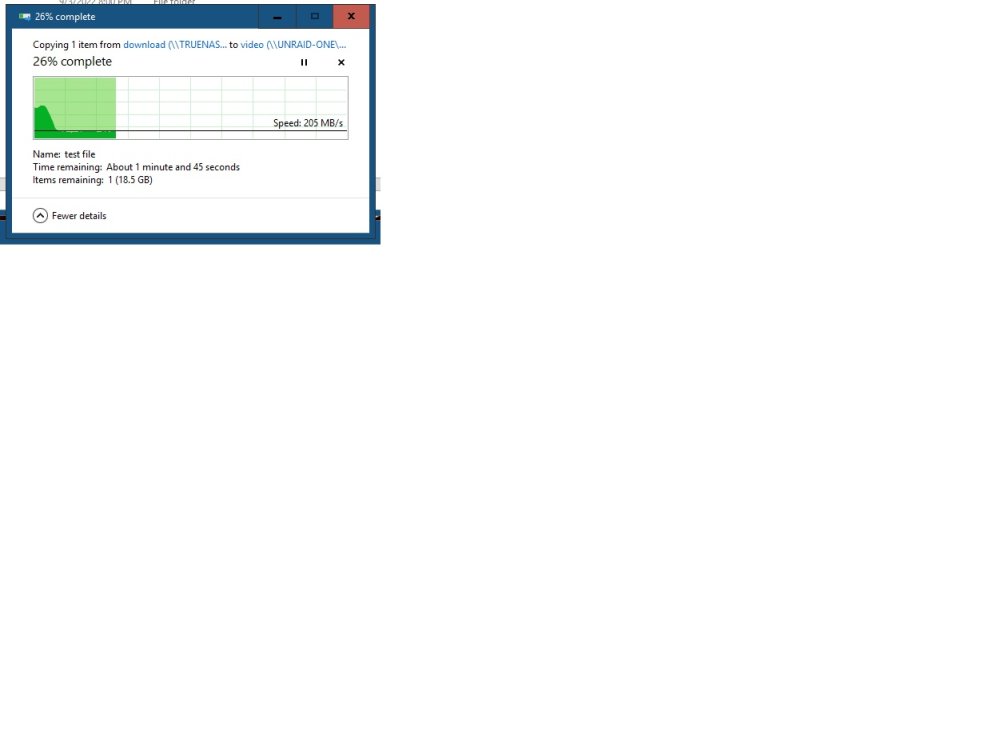

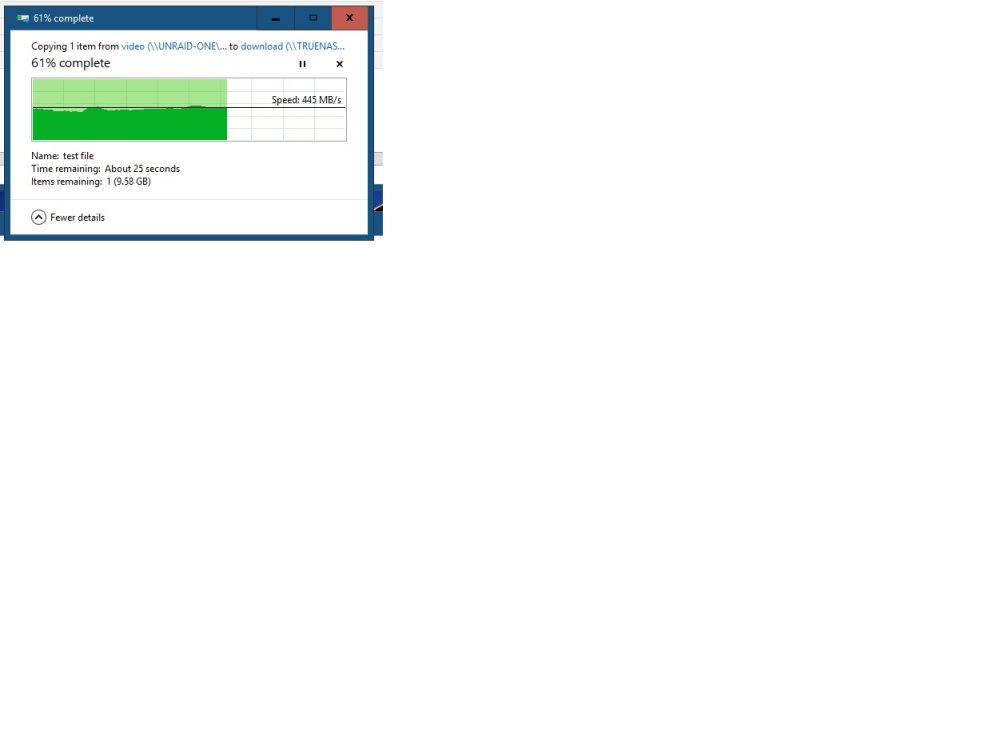

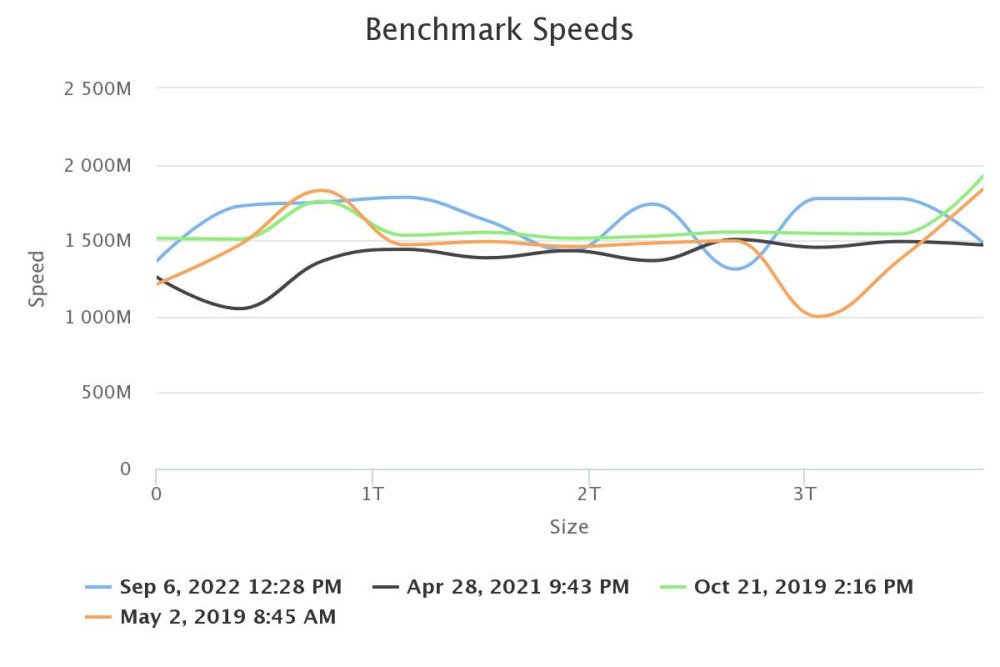

I'm seeing slower-than-expected copy speeds between the UnRaid NVMe cache drive and the TrueNAS ZFS array. Copies from UnRaid>>TrueNAS proceed at a steady 445MB/s; however, copies from TrueNAS>>UnRaid start out around 700MB/s, then drop to a steady 200MB/s. Iperf3 reflects the same speed discrepancy: traffic to TrueNAS maxes out the 10Gb connection, but traffic to UnRaid peaks at 2.8Gbps. Direct benchmarks of UnRaid and TrueNAS perform as expected, leading me to believe I have a networking issue. I've attached screenshots of the steps taken so far, as well as current diagnostics. I don't see any CPU cores at 100% during transfers. Network cables are Cat5e, 2m or less. If anyone with troubleshooting experience could take a look, I would appreciate it.

Cheers, John

Attachments: iperf3.txt, truenas-02 fio ssd array.txt, unraid-one-diagnostics-20220906-1342.zip

-

Thanks for refreshing my memory; I must have made that change on the main server and then forgot about it. Once ast.conf was created, GPU mode was enabled, as expected. I appreciate the assistance.👍

-

Here are the diagnostics for the system with local GUI mode working: unraid-one-diagnostics-20211202-2349.zip

-

I took the opportunity to pick up an extra license during the "Black Friday" special, and I'm having a bit of trouble setting up the spare installation. The flash drive is a 16GB SanDisk Ultra Fit. It boots fine in legacy mode to the Pro 6.9.2 text login, but I've had no luck booting to GUI mode. The motherboard is an SM X10SRL, with BIOS and BMC updated to the latest versions. I tried adding "nomodeset" to syslinux.cfg, but it did not help. The BMC is an Aspeed AST2400. The main Unraid file server uses an SM X10SRM with the same BMC version and firmware, and it boots to GUI mode with no problem. Hopefully someone with sharper eyes can point me toward a fix; diagnostics are attached. Thanks! unraid-three-diagnostics-20211202-1336.zip

-

The issue with the AutoFan plugin ignoring the "exclude" function for some NVMe drives has several requests going back almost a year. I moved on to the IPMI plugin; that may be an option for you, depending on your specific hardware.

-

Also requesting the addition of updated Aspeed AST2400 BMC drivers in the next release. Text character display mode still works, but GUI mode has returned a blank screen since the BMC was updated to address a security issue. The blank screen persists in both the IPMI console and the VGA monitor output when in GUI mode.

- MB: Supermicro X10SRM-TF
- BIOS: v3.2
- IPMI: v3.86
- Unraid: v6.8.2

-

CoolerMaster Centurion 590 mid-tower case

- used but serviceable condition; I've moved to rack-mount and don't use it anymore
- side/top vent covers can be removed as needed
- worn foam on the front bay covers can be replaced with sheets from a craft store
- 9 vertical 5 1/4" bays, great for 5-in-3 hot-swap modules

$30 via PayPal, local pickup available (Spartanburg, SC), or willing to ship to CONUS. I don't have the original boxes, so shipping would be $20 for fragile packing at the UPS Store, plus FedEx Ground. PayPal does not offer FedEx, so I have listed it on eBay to get the cheapest shipping. If you are interested and have your own shipper account, I will drop it off as needed. Shipping cost really limits this, but I hate to throw a great case in the dumpster!

-

Agreed, language is tricky and easily misunderstood. I regret my wording, as I only intended to specify which version of Unraid I was using to test the plugin. Hopefully the main part of the post, where I document the ongoing issue since April 2019, will receive similar attention.

-

I never stated that there is an incompatibility; I'm not sure where that is coming from. I'm just trying to determine whether it's worth waiting for @bonienl to address the previously reported issue with the autofan speed plugin, or to move on to a script substitute. I did read the notes on the apps, but a blanket warning that not all hardware is supported leaves a lot of gray area. Is it OK for a user to ask questions if they are not sure whether the problem is drivers, hardware, or an incompatibility with the plugin or OS? The hardware I'm running is not bleeding-edge: the Supermicro X9SCM-iiF and X10SRM-TF use AST2400 and Nuvoton WPCM450 BMCs, which are pretty mainstream. Unraid 6.7.0 + System Temp/Autofan works great; Unraid 6.7.2 + System Temp/Autofan = no sensors found. Neither combination ignores the HGST NVMe SSD when it is selected to be ignored. If the developer states they are too busy and it's not going to happen, no problem; I'll move on.

-

Would you recommend that users of the System Temp and Autofan plugins transition to script-based solutions at this time? The plugins are very much appreciated, but they have functional issues with Unraid 6.7.2. As long as the plugins are available for download, the nagging will continue.

-

Monthly "bump" regarding the Autofan control plugin. The issue still exists where the "exclude" checkbox in the plugin setup does not ignore the NVMe drive. The result is much higher fan speeds than necessary to cool an array of spinners. Hopefully the developer can address this when time permits. Original post with diagnostics linked below.
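For anyone weighing a script-based substitute, here is a minimal Python sketch of the behavior the "exclude" checkbox is expected to have. Excluded devices are dropped first, and only then is the hottest remaining drive temperature mapped onto a fan PWM value. The device names, thresholds, PWM range, and linear curve are all hypothetical and are not taken from the plugin's code. Reading real temperatures and writing the PWM value to hardware are left out.

```python
from typing import Dict, Iterable


def fan_pwm(temps_c: Dict[str, float], excluded: Iterable[str],
            low_c: float = 35.0, high_c: float = 45.0,
            pwm_min: int = 64, pwm_max: int = 255) -> int:
    """Map the hottest *non-excluded* drive temperature onto a PWM duty value.

    At or below low_c the fan runs at pwm_min, at or above high_c it runs at
    pwm_max, and in between the value is interpolated linearly.
    """
    skip = set(excluded)
    considered = [t for dev, t in temps_c.items() if dev not in skip]
    if not considered:
        # Nothing left to cool (e.g. only an excluded NVMe drive): idle speed.
        return pwm_min
    hottest = max(considered)
    if hottest <= low_c:
        return pwm_min
    if hottest >= high_c:
        return pwm_max
    fraction = (hottest - low_c) / (high_c - low_c)
    return round(pwm_min + fraction * (pwm_max - pwm_min))


if __name__ == "__main__":
    # Hypothetical readings: two spinning disks and one hot NVMe cache drive.
    temps = {"sdb": 34.0, "sdc": 36.0, "nvme0n1": 55.0}
    print("NVMe excluded:", fan_pwm(temps, {"nvme0n1"}))
    print("NVMe included:", fan_pwm(temps, set()))
```

With the NVMe drive excluded, the spinners alone set a low fan speed. If the exclusion is ignored, as described above, the hot NVMe drive pins the fans at maximum.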

-

The X9SCM-F only has x8 physical PCIe slots. The 2 closest to the CPU are PCIe 3.0, so they will work with either an x8 GPU (not sure if those exist) or some sort of accommodation for an x16 GPU. Either a physical x16>x8 adapter or an x16>x8 ribbon cable will work. Another option is opening up the end of an x8 slot, but then clearance of the onboard SMCs becomes a factor, as does the possibility of slot damage. I used an x16>x8 slot adapter with this motherboard, along with longer bracket screw standoffs. It works fine as long as you have a 3U/4U chassis to allow for the additional height of the adapter.

-

Bumping again, as another month has passed...

-

Bumping my previous post in hopes of future attention. The issue still remains where the fan speed plugin does not ignore the NVMe drive when it is "checked". Thanks!

-

It seems like rolling back to a version from before NVMe was supported could work, but I'm not sure how to do it. It's kind of sad that, after all the virtualization features that have been added, a core NAS feature is degraded. I don't mind waiting, or participating in testing/troubleshooting, but the sound of crickets is a bit discouraging...

-

Just checking in to keep hope alive for an update to the AutoFan Speed plugin. There is an ongoing issue with NVMe drive temps not being ignored, even when checked in the setup dialog. This results in fan speeds (and noise levels) much higher than needed, and a WAF much lower than desired. Details here: I realize that the plugins are supported in the free time of talented and generous community members, and they are very much appreciated. In that same spirit of generosity, some communication regarding the future direction of the plugin would be helpful. If this is the wrong channel to reach the right people, please redirect as needed.

-

Can anyone respond as to the status of Fan Auto Control? I'm not sure if the "owner" is busy with other projects, or if the plugin is no longer being supported/developed. I consider it integral to the function of UnRaid, just as important as UPS, parity check, mover, etc. I would be happy to help test any changes or donate funds to support getting the current issues addressed.