almarma


Everything posted by almarma

-

That right there solved my issue with Sonarr. To be more precise, I made the change only in Sonarr and Radarr (deleted the separate shares and used a single /mnt/ mapping, with /mnt/ inside the container as well); I didn't change my Transmission client. I also added a Remote Path Mapping in Sonarr as follows:

- Host: (the IP of my server)
- Remote Path: /downloads/ (the default download location of my Transmission container)
- Local Path: /mnt/user/MEDIA/downloads/ (the folder on the server that corresponds to that same download location)

Once I did that and restarted Sonarr, my downloads finally began working and Sonarr now moves them to the right folders.
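Conceptually, a remote path mapping rewrites the path the download client reports by swapping the remote prefix for the local one. A minimal sketch of that translation, assuming simple prefix matching and ignoring the Host field; `map_remote_path` is a hypothetical helper for illustration, not Sonarr's actual code:

```python
from pathlib import PurePosixPath


def map_remote_path(reported: str, remote_root: str, local_root: str) -> str:
    """Translate a path reported by the download client into the path
    the media manager can see, by swapping the remote prefix for the local one."""
    reported_p = PurePosixPath(reported)
    try:
        # relative_to raises ValueError if the path is not under remote_root
        rest = reported_p.relative_to(PurePosixPath(remote_root))
    except ValueError:
        # Not covered by this mapping: leave the path untouched
        return reported
    return str(PurePosixPath(local_root) / rest)


print(map_remote_path("/downloads/Show.S01E01.mkv", "/downloads/", "/mnt/user/MEDIA/downloads/"))
# prints /mnt/user/MEDIA/downloads/Show.S01E01.mkv
```

Matching is done per path component, so a folder such as /downloads-old/ is not mistaken for /downloads/.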

-

Thank you @Trurl. Last night I ran a parity check and it fixed the 3 remaining errors. I've also changed the docker.img path to a specific disk (it now reads /mnt/disk1/docker.img). I also stopped the array and set the docker.img size in the settings web interface to 20GB so it matches its real size, so it should be OK now. Thank you again for your help with my issue.

-

Hi Trurl. Thanks for your answer. I'm not really sure what you mean, but I'll investigate. Years ago I had to resize docker.img because I was running out of space (I think because of Plex, but I'm not sure). Something got messed up; I'll investigate it. I'm not sure why that is; I'll check that too. I don't have a cache drive, so I guess I should just point it to a specific drive and that would do it, right? Yes, I lost control of the server and used the terminal (it was the only icon visible in the web interface) to reboot it, but it seems it crashed during the restart and a parity check started automatically. However, every weekend I get a health status report for the server (I don't remember how I enabled it) and it says everything is OK. Should I run a new parity check anyway?

-

Can I bump this thread?

-

Hi, this is not the first time this has happened to me and I don't know why: I have around 7 Docker containers installed, and today a couple of them disappeared (Plex and DuckDNS). I have no clue why this happens. Should I be worried? Usually I just reinstall them, and since the appdata is still there nothing is lost, but it's scary. Any help would be really appreciated. galeon-diagnostics-20201108-1719.zip

-

Hi everybody. I'm having a weird issue with my server. It had been working great for some months after I replaced the parity drive and upgraded the RAM (which I tested without issues). But on Friday, and again today, after being on for some hours it became unresponsive: services like Time Machine backups couldn't connect, and neither could any Docker container. I could open the admin web interface, but only the top part was visible and the bottom part was blank on every tab. The console icon was visible, so I used it to reboot, but even so the reboot triggered a new parity check. Could this be caused by a Docker container or plugin misbehaving? Or is it a hardware issue? I've attached a diagnostics zip file I generated after rebooting: galeon-diagnostics-20201107-2137.zip

-

Thank you Johnnie. I forgot to mention that I followed this guide step by step and saw no errors during the process: https://wiki.unraid.net/The_parity_swap_procedure But I suspect the old damaged disk I replaced may have contained those errors, and they were "transmitted" to the new replacement. Anyway, I'll follow your advice, run another parity check this evening and see if it returns 0. Thank you again.

-

Hi, I replaced the old parity disk (3TB) with a new one (4TB, precleared), and replaced a data drive that had SMART issues with the old parity disk, following the official guide on the wiki (fantastic guide, by the way). Then I ran a parity check and 244188000 errors were detected and corrected (yes, 244 million!). The parity status is Valid. Other than that, the server is working great, with no other issues anywhere. I also replaced some SATA cables with better ones, as @johnnie.black recommended in another thread I opened when I began having array write issues, so everything is up and running as expected. Could these be "leftover issues" from the old damaged drive? Should I panic?
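One thing that may be worth checking: the error count lines up with the size of the extra space on the new parity disk. A back-of-the-envelope sketch, assuming both drives use the standard IDEMA LBA counts for 3 TB and 4 TB and assuming each corrected error corresponds to one 4 KiB block (neither assumption comes from the check report itself):

```python
SECTOR_BYTES = 512
BLOCK_BYTES = 4096  # assumption: one reported sync error per 4 KiB block


def idema_lba_count(capacity_gb: int) -> int:
    """Number of 512-byte LBAs for a drive of the given advertised
    capacity in decimal GB, per the IDEMA LBA1-03 formula (>= 50 GB)."""
    return 97_696_368 + 1_953_504 * (capacity_gb - 50)


old_parity = idema_lba_count(3000)  # 3 TB parity disk
new_parity = idema_lba_count(4000)  # 4 TB parity disk

# Bytes on the new parity disk beyond the end of the old 3 TB one
extra_bytes = (new_parity - old_parity) * SECTOR_BYTES
print(extra_bytes // BLOCK_BYTES)  # 244188000
```

If that reading holds, the corrections were in the last terabyte of the new parity disk, beyond the 3 TB range the data disks cover, rather than scattered across existing data.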

-

I totally agree. I think that, when creating a native File Explorer, it should be made very, very clear that mixing /mnt/user/ with /mnt/diskN will corrupt data. Thinking about the interface, users should be warned on first use, or it should be pointed out very clearly. Not everybody knows or understands Linux, or even what "mounting" is. To avoid beginner mistakes I would create "safe" shortcuts to the recommended places for manipulating files or folders. I'm thinking about the /mnt/user/ path specifically: I think it should be the default location, and if the user moves out of it (up to /mnt/) they should get a warning. It takes a while to understand that /user/ corresponds to the "Shares" tab in the UnRAID admin page, so maybe the best option is to call that shortcut "Shares", consistent with UnRAID's naming. I think a File Explorer is a great addition to UnRAID. Sometimes deleting or moving files or folders is much faster locally than over the LAN, and especially if something weird is going on with permissions, having a way to access the file system from the admin UI is very useful. I like Dolphin, but I miss:
- Shortcuts on the left side of the screen.
- A text file editor (to edit settings for some plugins or Docker containers that require it).
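The warning suggested above could start as a simple check of which view each side of a copy or move goes through. A minimal sketch, assuming the file manager sees the standard /mnt/user and /mnt/diskN mount points; `view_of` and `mixes_views` are hypothetical names, not part of any existing plugin:

```python
import re

# Hypothetical helpers: classify a path as a user-share view (/mnt/user/...)
# or a direct disk view (/mnt/diskN/...). /mnt/user0 and others count as "other".
USER_RE = re.compile(r"^/mnt/user(/|$)")
DISK_RE = re.compile(r"^/mnt/disk\d+(/|$)")


def view_of(path: str) -> str:
    if USER_RE.match(path):
        return "share"
    if DISK_RE.match(path):
        return "disk"
    return "other"


def mixes_views(src: str, dst: str) -> bool:
    """True when one side of an operation goes through /mnt/user and the
    other through /mnt/diskN, the combination that should trigger a warning."""
    return {view_of(src), view_of(dst)} == {"share", "disk"}


print(mixes_views("/mnt/disk3/Movies/a.mkv", "/mnt/user/Movies/a.mkv"))  # True
print(mixes_views("/mnt/user/Movies/a.mkv", "/mnt/user/TV/a.mkv"))  # False
```

A disk-to-disk or share-to-share operation passes silently; only the mixed case is flagged.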

-

How can you be so sure? I'm just asking out of curiosity, to learn. I ran the extended SMART test and it came back OK, and there's not a single error logged. Again, how did you figure that out? Thank you in advance.

-

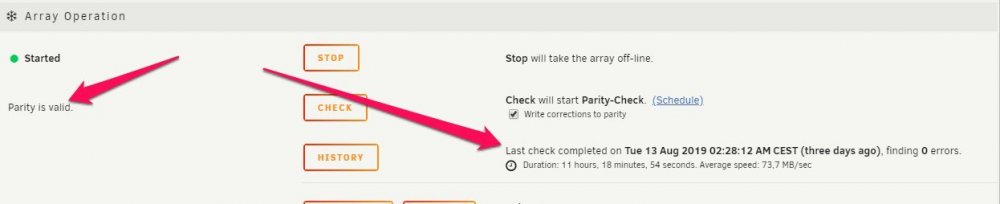

Thanks for your answer. But is it 100% certain that it's the disk and not the cable? What do you mean by "Parity is failing"? As far as I know, parity is still OK and no errors were corrected by the parity check:

-

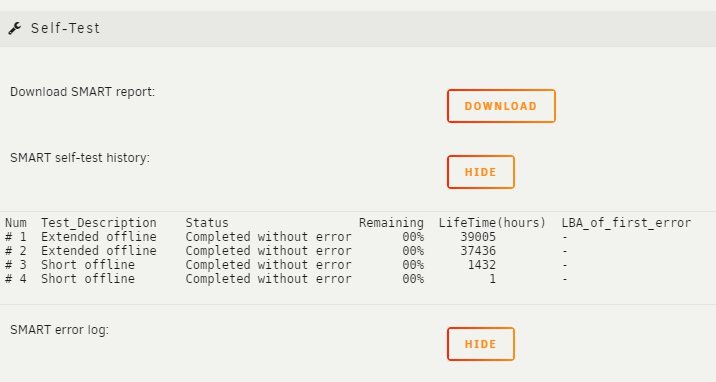

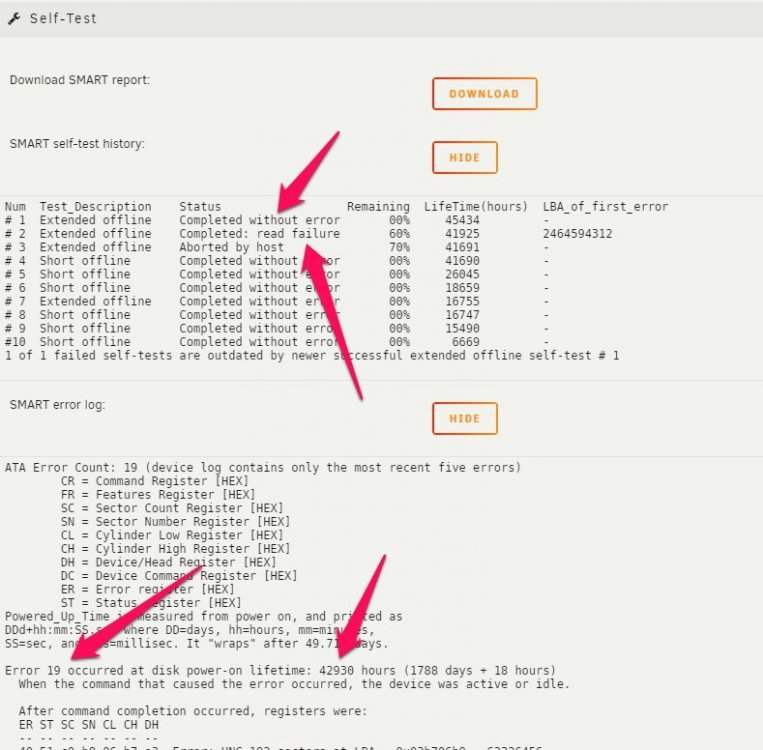

Hi everybody,

A few months ago I came here asking for help to disable a supposedly damaged disk. I followed all the instructions except the last one: actually removing the disk from the array. I held off because of the summer holidays; I didn't want to rush it or risk leaving the array partially broken while I was away with no access to the server.

Now I'm back, and last weekend a case fan became noisy, so I replaced it and rearranged the fans differently because the cables were too tight. After that a new array check ran, and now I see 17 errors on the Parity disk and none on the supposedly damaged one. What's going on here? I then ran a new extended SMART test on both disks, and now neither of them shows any issues (Disk3 reported issues before). Could it be the cable? Did I accidentally move the cables while rearranging the case fans, and now it's fine? It's a bit weird that the issue has jumped from one disk to another.

This is the array status reporting 17 errors (not parity errors; the array shows as healthy):

These are the test results from the Parity drive.

These are the test results from the Disk3 drive. Here you can see:
- The last test completed without error.
- The previous one (#2) completed with a read failure.
- Further down in the error log, Error 19 happened at 42930 power-on hours. No other error since then (about 2000 hours more).

I've also attached the diagnostics file from my server in case somebody wants to help. Thank you in advance. galeon-diagnostics-20190816-1010.zip

-

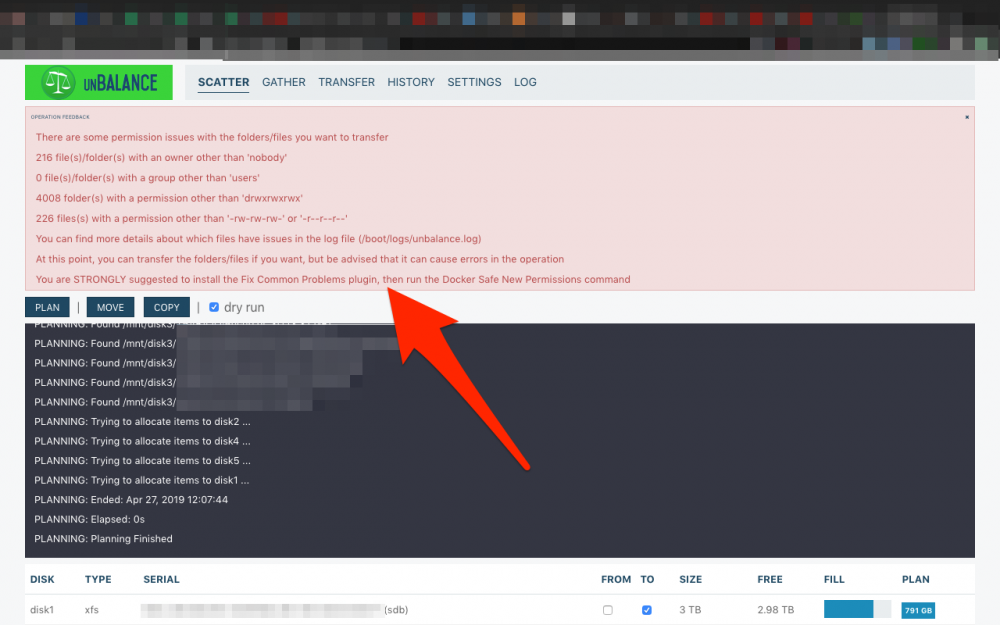

Thanks for your answer. I did run the Docker safe permissions script, but the error kept popping up, so I'm copying instead of moving so I don't end up with half-copied folders. Maybe the safest way to move data and delete it from the original source would be: copy everything, verify it, and only if 100% was copied correctly, delete the source. Is that how it's done?
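The copy, verify, then delete approach can be sketched like this. It's a minimal illustration under assumptions: `copy_verify_delete` is a hypothetical helper, source and destination are plain directories on the same kind of view (for example two /mnt/diskN paths, never /mnt/user mixed with /mnt/diskN), and the destination is not inside the source. It does not recreate empty directories, and `shutil.copy2` keeps timestamps and mode bits but not ownership:

```python
import hashlib
import shutil
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Checksum a file in 1 MiB chunks so large files don't fill memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def copy_verify_delete(src: Path, dst: Path) -> None:
    """Copy every file under src to dst, verify each copy by checksum,
    and delete src only if every file matched."""
    files = [p for p in src.rglob("*") if p.is_file()]
    for p in files:
        target = dst / p.relative_to(src)
        target.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(p, target)  # copies data plus timestamps/permissions
    mismatched = [
        p for p in files if sha256_of(p) != sha256_of(dst / p.relative_to(src))
    ]
    if mismatched:
        # Leave the source untouched so nothing is lost
        raise RuntimeError(f"{len(mismatched)} file(s) failed verification; source kept")
    shutil.rmtree(src)
```

Checking everything before deleting anything means an interrupted or failed run leaves the original data in place.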

-

I have a suggestion for this awesome plugin. I've noticed that before any operation it scans each share, folder, disk or whatever will be moved, to make sure the operation will succeed (which is great!). But when there are warnings or issues, they're very difficult to read, because they appear in a popup that disappears by itself after a few seconds, and you don't have time to read it all. I think that, in case of warnings or errors, this popup should stay open, perhaps with a "Close" or "OK" button. That way the user has time to read everything and make the corrections needed to continue. Here's a screenshot of a warning I get after the scan. Taking it was also the only way I found to read the message to the end:

-

I agree with you. The only reason I did that was because "Fix Common Problems" recommended it. I had it as a user share, but it told me that wasn't recommended.

-

OK. My progress so far:

- I was able to empty Disk1 completely.
- I was able to copy docker.img and /appdata/ from Disk3 to Disk1 (using the Dolphin Docker container with root access; I only copied them and haven't removed anything from Disk3 yet). Yes, I used a Docker app while the Docker service was still running, but since I only copied, it seems to have worked.
- Next I shut down the Docker service and changed its settings to use Disk1.
- Then I changed the share location to use Disk1 instead of Disk3.
- I turned the Docker service back on, checked all the apps one by one, and changed their settings to use Disk1 wherever I saw a path using Disk3.

Everything seems to be working fine, and in my opinion this was the most critical part. Next I'll:

- Rename docker.img and the /appdata/ folder (adding .backup or something) to check that nothing crashes and everything is still stable.
- Restart the Docker service to confirm the old files are not used at all.
- Reboot the server, for the same purpose.

If all goes as planned, I'll move the rest of the folders off Disk3 and rebuild the array without it. Does that sound OK? Am I missing a step?
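The step of checking every app for paths that still point at Disk3 can be partly automated by searching the saved container settings for the old path. A sketch under assumptions: the container templates are stored as XML files under /boot/config/plugins/dockerMan/templates-user (verify where yours live on your version), and `find_references` is a hypothetical helper:

```python
from pathlib import Path


def find_references(root: Path, needle: str, pattern: str = "*.xml") -> list[tuple[Path, int]]:
    """Return (file, line number) pairs for lines under root that contain needle."""
    hits: list[tuple[Path, int]] = []
    for path in sorted(root.rglob(pattern)):
        if not path.is_file():
            continue
        text = path.read_text(errors="replace")
        for lineno, line in enumerate(text.splitlines(), start=1):
            if needle in line:
                hits.append((path, lineno))
    return hits


if __name__ == "__main__":
    # Assumption: user container templates are stored here; adjust if yours differ.
    templates = Path("/boot/config/plugins/dockerMan/templates-user")
    if templates.is_dir():
        for path, lineno in find_references(templates, "/mnt/disk3"):
            print(f"{path}:{lineno}")
```

It only catches paths saved in those files, so checking each app in the web UI is still the safer confirmation.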

-

I have a question. Let's say, for simplicity, that I have one share called MOVIES assigned to Disk1, Disk2 and Disk3, and then, with the array stopped, I change its settings to use only Disk1 and Disk2. Will the content on Disk3 automatically be moved to the other two disks so that it's removed from Disk3? I know how it works when adding disks, but not when removing them from shares.

-

Thank you very much for the follow-up. OK, this is the point where I want to be sure I do it right. I know some basic Linux command-line tools, but it's been a while since I last used them. Isn't unBalance a good tool for this? Also, I guess Docker should be stopped before proceeding. Should the array be stopped too? I want to be sure before taking any step, to minimize stress and errors along the way.

-

Hi, I have an issue with one of my disks and I want to stop using it. Right now I'm on a very tight budget and can't afford to buy a new one. I think the broken disk is the most crucial one, because it stores docker.img and the /appdata/ folder. Let me first describe the situation:

- Parity disk: 3TB
- Disk1: 3TB (used mainly for Time Machine backups)
- Disk2: 1.5TB
- Disk3: THE BROKEN ONE. It holds shares plus docker.img and the /appdata/ folder
- Disk4: 750GB
- Disk5: 1TB

I can think of two possible solutions:

1. Stop using Disk1 for backups (I have a 2.5" USB disk that will solve that for now) and move all the data from Disk3 to Disk1.
2. Replace Disk3 with Disk1.

I've tried moving the /appdata folder and the docker.img file to Disk1, but I don't have the right permissions, so I'm not sure that's the right way to do it. For option 2, I'm not totally sure about the steps to do it while minimizing risk. Should I first empty Disk1, remove it from the array, and then use it to replace Disk3? Help would be much appreciated.

-

Thank you. Yes, much of the content is movies that can be re-downloaded. There are some backups from my Macs and some important stuff too, but it doesn't take up much space, so I'll do a big cleanup and remove the disk. I'm just wondering one thing: is it better for the array as a whole to remove it, or to keep using it for unimportant stuff? I mean, can it negatively affect the whole array, or can it still be useful for reconstructing the array in case of another failure?

-

Thank you. Here's the report galeon-diagnostics-20190419-1731.zip

-

I have 5 disks between 1TB and 3TB in size, plus the parity drive (3TB). The total size of the array is 9.3TB and usage is at 6.75TB (about 70%), but Disk3 (the one with a few read failures) is at 94% usage.
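A rough check on whether Disk3's contents would even fit on the other disks. This assumes the sizes from the disk layout listed in the other post (Disk1 3TB, Disk2 1.5TB, Disk4 750GB, Disk5 1TB) and derives Disk3's size from the 9.3TB total, since it isn't stated; the figures the web UI shows are rounded, so treat the result as approximate:

```python
# Sizes in TB. Other disk sizes are taken from the layout in the other post;
# Disk3's size is not stated, so it is derived from the 9.3 TB array total.
other_disks = {"disk1": 3.0, "disk2": 1.5, "disk4": 0.75, "disk5": 1.0}
array_total = 9.3
array_used = 6.75
disk3_fill = 0.94

disk3_size = array_total - sum(other_disks.values())  # about 3.05 TB
disk3_used = disk3_size * disk3_fill  # about 2.87 TB on Disk3
free_total = array_total - array_used  # 2.55 TB free in the whole array
free_elsewhere = free_total - (disk3_size - disk3_used)  # free space not on Disk3

print(f"Disk3 holds ~{disk3_used:.2f} TB; other disks have ~{free_elsewhere:.2f} TB free")
```

Under those assumptions Disk3 holds roughly half a terabyte more than the other disks have free, so some cleanup would likely be needed before everything could move off it.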

-

Thanks. I know, but I'm broke and unemployed right now and can't afford to buy one :(. I'll do it as soon as I can afford it. Meanwhile I need to minimize risks as much as I can, so I want to be gentle with that disk and reduce its usage. But maybe I'd end up stressing a different disk and what you describe would happen, so maybe it's better to leave things as they are. I'm not sure what to do now.

-

Hi everybody, I have a disk (Disk3) that seems to be slightly damaged. Nothing serious for now, because the array is still healthy, but since that's the disk where my docker.img and /appdata folder live, I'd prefer to move them to a different disk to reduce risk and also reduce stress on the worn-out disk. I'm trying to use unBalance, but it doesn't seem to move them even though it says the operation was successful. I guess it's a permissions problem, and if I try to change the user for unBalance from nobody to root, it reverts to nobody. Does anybody have any clue or idea that could help me?

-

CPU usage stuck at 100% in one core after upgrade to 6.6.7

almarma replied to almarma's topic in General Support

Thank you for your answer. It's very good to know this issue exists. I don't have Plex Pass, so I suspect I can't update to the same release you have yet. I'll keep an eye on it.