tiwing


Hi, thanks for the great work maintaining this - I've had to use restore recently and you saved my a$$. Is this the right spot for feature requests? If not, please feel free to move or delete. Ideally, I'm looking for an option (or even default behaviour?) to shut down a docker only when its own backup is about to start, then restart it immediately once that backup is complete. (This assumes the user has selected separate .tar backups.) My use case is that I use Agent-DVR and Zoneminder, which I'd love to have backed up, but I don't want to wait for the massive and time-consuming plex backup to complete before the DVR dockers restart. For now they are excluded and set not to stop. Thank you!!
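To make the request concrete, here is a rough sketch of the ordering I mean. It is not how the plugin works internally; the function name, the container names and the paths are all made up for illustration, and it assumes each docker's appdata lives in a folder named after the container. The only real commands it relies on are docker stop, docker start and tar.

```python
import subprocess
from pathlib import Path


def backup_one_at_a_time(containers, appdata_root, dest_dir, run=subprocess.run):
    """Stop, archive and restart each container in turn.

    Hypothetical helper: assumes appdata for each container is at
    <appdata_root>/<container name>. `run` is injectable so the
    sequence can be checked without touching real containers.
    """
    for name in containers:
        archive = Path(dest_dir) / f"{name}.tar"
        # Only this one container is down while its appdata is archived.
        run(["docker", "stop", name], check=True)
        try:
            run(["tar", "-cf", str(archive), "-C", str(appdata_root), name],
                check=True)
        finally:
            # Restart even if tar failed, so a bad backup does not
            # leave the container stopped.
            run(["docker", "start", name], check=True)
```

With this ordering, something like backup_one_at_a_time(["agent-dvr", "zoneminder", "plex"], "/mnt/user/appdata", "/mnt/user/backups") would have the DVR dockers back up long before the plex archive finishes. The paths there are placeholders too.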

-

Been using this plugin for years, and more than once it's saved my ass. Thank you for taking this on and keeping such a great piece of work alive and progressing. One question / suggestion - I have "create separate archives" set to Yes, but the plugin still stops all dockers at the start, then starts them at the end. Would it be possible to shut down a docker only while that docker's appdata is being backed up instead? In other words, I don't think Plex needs to be closed while krusader is being backed up. Thank you!

-

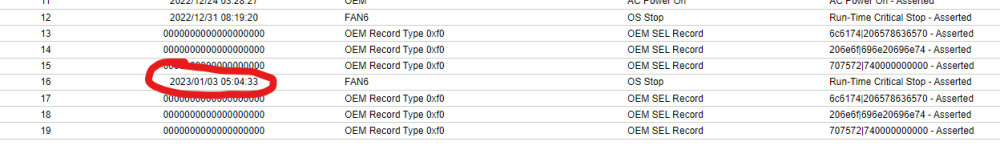

New syslog from the other server and diagnostics attached. The server is still going completely offline every few days, with the same machine event logged by IPMI. The syslog shows a gap on Jan 3 between 5am and 8:30am, with nothing recorded in that window. edit: is there any way to put v6.9 back on the box for testing purposes? I don't remember having this issue with 6.9.x... syslog-192.168.13.50-20230103_0928xx.log kscs-fvm2-diagnostics-20230103-0934.zip

-

tiwing started following OS Stop Run-Time Critical Stop

-

Hi, just experienced another one. Diagnostics and syslog attached. The server went completely non-responsive at 4:00:26 on Dec 19, and I powered it off and back on at 6:45am Dec 19. There's nothing in syslog immediately prior. I did look at diagnostics but ... I don't know what to look for - it would be awesome if some of you smart folks could say what kinds of things you go to first, so I can help contribute back to the group rather than just use your brains... !! kscs-fvm2-diagnostics-20221219-0735.zip syslog-192.168.13.50.log Thanks much! tiwing edit: I just realized .... I set up the syslog server on the same box that keeps failing. Perhaps it going offline means lines were not recorded in the syslog properly. I'll spin up my backup box, leave it running, and repoint the syslog to it. whoops??!

-

Bringing this topic back from the dead. Just started to get the exact same error 3 weeks ago. I get about 3 days before having to hard stop and restart - which of course triggers a parity check. Not a great way to live. X9DR3-LN4+ in a 36 bay superstore. Did anyone figure this out?? I threw a new motherboard at it, but after reading this it seems more likely to be an issue with my Unraid install?

-

[6.10.3] GUI flash/reload when clicking on unmountable cache drive

tiwing commented on tiwing's report in Stable Releases

It doesn't happen when accessing the cache drive page from my other server either, whether I click a link from any drive in a pool of multiple ssds or from a single ssd pool. What do you mean by whitelist the server - in Chrome, or ... something else?? If it's unique to me and can't be replicated elsewhere, feel free to close this bug! If it's just me I can accept "can't do that from that server" and hope I don't need to make changes.

[6.10.3] GUI flash/reload when clicking on unmountable cache drive

tiwing commented on tiwing's report in Stable Releases

update: I have replaced the cache disk with a good one, but the issue remains, so it's not related to the unmountable status. (Browser cache and history have all been cleared, and chrome was closed and relaunched.)

Consider adding some Extra Parameters to keep the docker from completely maxing out your resources. For my tdarr I have --cpu-shares=256 --cpus=8 in Extra Parameters in the docker. The first puts the docker at lower priority than pretty much everything else: cpu-shares is a relative weight with a default of 1024, so 256 gets a quarter of the weight of a default docker when CPU is contended. The second sets the maximum amount of CPU that can be used at any point in time. Personally I prefer this over pinning the cpus, as unraid just uses whatever cores it wants up to a maximum equivalent of 8 total CPUs. In my system that's 25% of available, so the system always has resources to do its thing.

The other thing I found, once I hit a certain threshold of number of dockers, reads and writes into them, etc., is that having appdata and the docker image on the same SSD actually caused me a world of issues. Splitting appdata and system (docker) onto separate physical disks using separate cache pools completely eliminated my system going unresponsive.
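If it helps to see what those two numbers do together, here is a rough back-of-envelope estimate in Python. It is a simplification, not how the kernel scheduler is implemented: it assumes every listed docker is trying to use as much CPU as it can, splits the CPUs in proportion to cpu-shares, and then applies any --cpus cap without handing the leftover to anyone else. The function name and the "other" docker are made up; the 32 CPUs come from 8 being 25% of my system.

```python
def estimate_cpus_under_contention(shares, total_cpus, caps=None):
    """Rough estimate of CPUs each busy container gets.

    shares: {name: cpu-shares weight} for containers competing for CPU
    total_cpus: number of logical CPUs on the host
    caps: optional {name: --cpus limit}
    Simplification: capacity freed by a cap is not redistributed.
    """
    caps = caps or {}
    total_weight = sum(shares.values())
    estimate = {}
    for name, weight in shares.items():
        # Under full contention, CPU time is proportional to the weight.
        fair = total_cpus * weight / total_weight
        # --cpus is a hard ceiling regardless of the weight.
        estimate[name] = min(fair, caps.get(name, total_cpus))
    return estimate


# tdarr (256 shares, capped at 8) competing with one default-weight docker
busy = estimate_cpus_under_contention(
    {"tdarr": 256, "other": 1024}, total_cpus=32, caps={"tdarr": 8})

# tdarr alone on an otherwise idle box: only the --cpus cap applies
idle = estimate_cpus_under_contention(
    {"tdarr": 256}, total_cpus=32, caps={"tdarr": 8})
```

So the weight only matters when something else wants the CPU at the same time, while the cap always applies, even on an idle box.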

-

tiwing started following [6.10.3] GUI flash/reload when clicking on unmountable cache drive

-

Bump again? Am I doing something wrong on these forums?

-

tiwing started following Help please- hardware error. diagnostics attached and hardware error - help please.

-

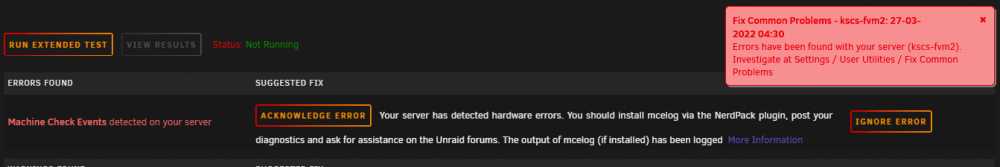

3rd time's a charm? I've posted diagnostics twice before for a hardware error found during a Fix Common Problems scan. nerdpack & mcelog have been installed for years. Is anyone here able to help determine what the issue is, please??? thank you !! tiwing kscs-fvm2-diagnostics-20220327-2000.zip

-

is anyone on these forums anymore??

-

Hi all, plex goes unhealthy. Diagnostics attached. Extra parameters are as follows: --log-opt max-size=100m --log-opt max-file=1 I installed linuxserver's plex docker, which stays up fine, and all other dockers are fine. I've been struggling with a machine error and posted here a number of months ago with no response... hopefully there is a link between this and that... kscs-fvm2-diagnostics-20211102-1511.zip thanks in advance

-

As title. MCElog has been installed for a long time... I just don't know where to look. I had a memory error a while back and replaced the stick, which I thought had solved that issue. I know my VGA port does not work due to broken solder on the motherboard... Hopefully it's just one of those two things... ? thanks for your help! kscs-fvm2-diagnostics-20210803-0753.zip