tiwing

Everything posted by tiwing

-

Hi, thanks for the great work maintaining this. I had to use restore recently and you saved my a$$. Is this the right spot for feature requests? If not, please feel free to move or delete this. Ideally, I'm looking for an option (or even default behaviour?) to shut down a docker only when its backup is about to start, then restart it immediately once that backup is complete (this assumes the user has selected separate .tar backups). My use case is that I run Agent-DVR and ZoneMinder, which I'd love to have backed up, but I don't want to wait for the massive, time-consuming Plex backup to finish before the DVR dockers restart. For now they are excluded and set not to stop. Thank you!!

-

Been using this plugin for years, and more than once it's saved my ass. Thank you for taking this on and keeping such a great piece of work alive and progressing. One question/suggestion: I have "create separate archives" set to Yes, but the plugin still stops all dockers at the start and then starts them at the end. Would it be possible to shut down a docker only while that docker's appdata is being backed up instead? In other words, I don't think Plex needs to be stopped while Krusader is being backed up...? Thank you!
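A minimal Python sketch of the ordering being requested, for illustration only. It assumes (hypothetically) that each container's data lives in a folder named after the container under one appdata root, and it uses plain docker stop / tar / docker start commands rather than anything the plugin actually does internally. The function and parameter names are made up. Each container is down only while its own archive is written:

```python
import subprocess
from pathlib import Path
from typing import Callable, Iterable, Sequence

# A runner executes one command; swapping it out lets the ordering be checked without Docker.
Runner = Callable[[Sequence[str]], object]


def run_command(cmd: Sequence[str]) -> None:
    """Run a command and raise CalledProcessError if it exits non-zero."""
    subprocess.run(list(cmd), check=True)


def backup_one_at_a_time(
    containers: Iterable[str],
    appdata_root: str,
    dest_dir: str,
    runner: Runner = run_command,
) -> None:
    """Stop each container only while its own appdata archive is written.

    Hypothetical layout: container <name> keeps its data in appdata_root/<name>.
    """
    for name in containers:
        archive = Path(dest_dir) / f"{name}.tar"
        runner(["docker", "stop", name])
        try:
            # -C switches into appdata_root so the archive holds a relative "<name>/" tree.
            runner(["tar", "-cf", str(archive), "-C", appdata_root, name])
        finally:
            # Restart even if tar fails, so one bad archive doesn't leave the container down.
            runner(["docker", "start", name])
```

With Plex and Krusader in the list, Plex is started again before Krusader is stopped, so Plex is never down during Krusader's backup. The trade-off is that the containers' archives are no longer taken at the same instant.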

-

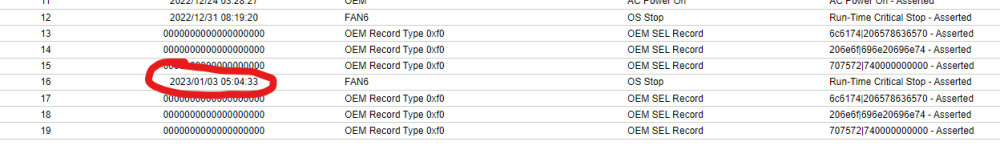

New syslog from the other server and diagnostics attached. The server is still going completely offline every few days, with the same machine event logged by IPMI (screenshot above). The syslog shows a gap on Jan 3 between 5:00am and 8:30am, and nothing else shows up in it. Edit: is there any way to put v6.9 back on the box for testing purposes? I don't remember having this issue with 6.9.x... syslog-192.168.13.50-20230103_0928xx.log kscs-fvm2-diagnostics-20230103-0934.zip
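To spot gaps like the Jan 3 one without scrolling through the whole file, here is a minimal Python sketch that reports every stretch longer than a threshold between consecutive syslog lines. It assumes the classic "Mon DD HH:MM:SS host ..." line format and that you supply the year yourself (the stamp doesn't contain one); it does not handle a Dec-to-Jan rollover. The function names, the 30-minute threshold and the sample lines are illustrative only:

```python
from datetime import datetime, timedelta
from typing import Iterable, List, Optional, Tuple


def parse_syslog_time(line: str, year: int) -> Optional[datetime]:
    """Parse the leading 'Mon DD HH:MM:SS' stamp of a classic syslog line."""
    stamp = line[:15]  # e.g. "Jan  3 05:00:01" is always 15 characters
    try:
        # The stamp has no year, so it is prepended explicitly.
        return datetime.strptime(f"{year} {stamp}", "%Y %b %d %H:%M:%S")
    except ValueError:
        return None  # blank lines, continuation lines, other formats


def find_gaps(
    lines: Iterable[str], year: int, threshold: timedelta
) -> List[Tuple[datetime, datetime]]:
    """Return (last stamp before silence, first stamp after it) for each long gap."""
    gaps = []
    prev = None
    for line in lines:
        ts = parse_syslog_time(line, year)
        if ts is None:
            continue
        if prev is not None and ts - prev > threshold:
            gaps.append((prev, ts))
        prev = ts
    return gaps
```

Fed the lines of the attached log (for example via open(path).readlines()), each tuple brackets one silent period, which makes it easy to line the gaps up against the IPMI event times.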

-

Hi, just experienced another one. Diagnostics and syslog attached. The server became completely non-responsive at 4:00:26 on Dec 19, and I powered it off and back on at 6:45am the same day. There's nothing in the syslog immediately prior. I did look at the diagnostics but... I don't know what to look for. It would be awesome if some of you smart folks could say what kinds of things you go to first, so I can help contribute back to the group rather than just use your brains...!! kscs-fvm2-diagnostics-20221219-0735.zip syslog-192.168.13.50.log Thanks much! tiwing Edit: I just realized... I set up the syslog server on the same box that keeps failing, so when it goes offline, lines may not be recorded in the syslog properly. I'll spin up my backup box, leave it running, and repoint the syslog to it. Whoops??!

-

Bringing this topic back from the dead. I just started getting the exact same error 3 weeks ago. I get about 3 days before having to hard stop and restart, which of course triggers a parity check. Not a great way to live. X9DR3-LN4+ in a 36-bay Supermicro chassis. Did anyone figure this out?? I threw a new motherboard at it, but after reading this it seems more likely to be an issue with my Unraid install?

-

[6.10.3] GUI flash/reload when clicking on unmountable cache drive

tiwing commented on tiwing's report in Stable Releases

It doesn't happen when accessing the cache drive page from my other server either, whether I click a link from a drive in a pool of multiple SSDs or from a single-SSD pool. What do you mean by whitelisting the server: in Chrome, or something else?? If it's unique to me and can't be replicated elsewhere, feel free to close this bug! If it's just me, I can accept "can't do that from that server" and hope I don't need to make changes. -

Update: I have replaced the cache disk with a good one, but the issue remains, so it's not related to the unmountable status. (Browser cache and history have all been cleared, and Chrome was closed and relaunched.) -

Consider adding some Extra Parameters so the docker can't completely max out your resources. For my tdarr I have the following in the docker's Extra Parameters: --cpu-shares=256 --cpus=8. The cpu-shares value is a relative weight (Docker's default is 1024), so 256 puts the docker at lower priority than pretty much everything else running at the default whenever the CPUs are contended, and cpus caps the total CPU it can use at any point in time. Personally I prefer this over pinning CPUs, as Unraid just uses whatever cores it wants, up to a maximum equivalent of 8 CPUs in total. On my system that's 25% of what's available, so the system always has resources to do its thing.

The other thing I found, once I hit a certain threshold in the number of dockers, reads and writes into them, etc., is that having appdata and the docker image on the same SSD actually caused me a world of issues. Splitting appdata and system (docker) onto separate physical disks using separate cache pools completely eliminated my system going unresponsive.
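A rough Python sketch of what those two flags mean in numbers. It assumes a 32-thread host (dual 8-core Xeons with Hyper-Threading, matching the 25% figure above) and, for the contention case, a single competing container at Docker's default weight of 1024. The proportional formula is a simplification of how the kernel divides CPU time by weight, and the function names are made up:

```python
from typing import Sequence


def contended_share(my_shares: int, other_shares: Sequence[int]) -> float:
    """Approximate CPU fraction when every competitor wants all the CPU it can get.

    cpu-shares is only a relative weight, so it has no effect while CPUs are idle.
    """
    return my_shares / (my_shares + sum(other_shares))


def cpus_cap(cpus: float, host_threads: int) -> float:
    """Hard ceiling that --cpus imposes, as a fraction of the host's logical CPUs."""
    return cpus / host_threads


def extra_parameters(shares: int, cpus: float) -> str:
    """Build the string pasted into the docker template's Extra Parameters field."""
    return f"--cpu-shares={shares} --cpus={cpus:g}"
```

Under full contention against one default-weight container, 256 works out to roughly 20% of the CPU, while --cpus=8 caps the docker at 25% of a 32-thread box even when nothing else is running. The two settings are complementary: one yields under load, the other is a fixed ceiling.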

-

Bump again? Am I doing something wrong on these forums?

-

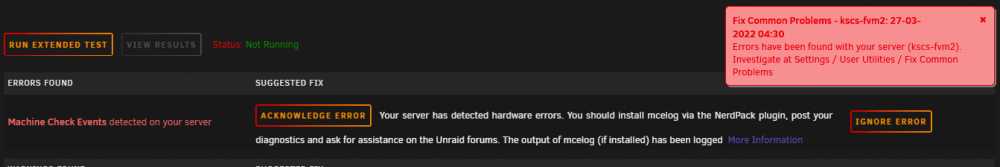

3rd time's a charm? I've posted diagnostics twice before for a hardware error found during a Fix Common Problems scan. NerdPack and mcelog have been installed for years. Is anyone here able to help determine what the issue is, please??? Thank you!! tiwing kscs-fvm2-diagnostics-20220327-2000.zip

-

Is anyone on these forums anymore??

-

Hi all, Plex goes unhealthy. Diagnostics attached. Extra Parameters are as follows:
--log-opt max-size=100m --log-opt max-file=1
I installed linuxserver's Plex docker, which stays up fine, and all other dockers are fine. I've been struggling with a machine error and posted here a number of months ago with no response... hopefully there is a link between this and that... kscs-fvm2-diagnostics-20211102-1511.zip Thanks in advance

-

As title. mcelog has been installed for a long time... I just don't know where to look. I had a memory error a while back and replaced the stick, which I thought had solved that issue. I know my VGA port does not work due to broken solder on the motherboard... Hopefully it's just one of those two things...? Thanks for your help! kscs-fvm2-diagnostics-20210803-0753.zip

-

SSD cache pool - large writes causing connectivity issues

tiwing replied to tiwing's topic in General Support

Correct. After leaving system and appdata on the "cache" pool and moving all other shares to a different pool, I had no connection loss to my dockers and no GUI interruption. I probably copied several TB in various tests last night, including Krusader disk-to-pool directly, Krusader share-to-share, Windows share-to-share, and having Tdarr do a bunch of work on a test folder. I'm at work now, but I'll watch "top" tonight and see what happens there. I also have the Netdata docker installed; I'll watch that tonight too, see if anything looks bizarre, and report back here. -

That's interesting, looks like I fall into the "few users" camp! I just tested my old machine (diagnostics attached), which actually shows the same processor-usage behaviour when writing to the BTRFS pool; in this case the "pool" is one SSD, as I don't care about redundancy in this box. I never noticed it when it was my primary machine, but I think I know why. This box alternates between full speed and almost zero write speed. Always has. Still does. It seems that when the processors get maxed, the write speed drops to "slow"; then things calm down, the processors return to normal levels and write speed increases to full; then the processors ramp up and write speed drops again. I think this behaviour meant I never lost connectivity to my dockers or the GUI, which is why I never noticed it there. It doesn't happen on a regular schedule: sometimes copying is fine for a minute, then it goes into the cycle described above every 10-15 seconds, then it's fine for a bit. It's also impossible for me to tell whether the processors ramp up before the write speed drops... in other words, I don't know if high processor usage is causing slow writes, or if slow writes are causing high processor usage. I'm happy to help test different scenarios since I have two different Unraid boxes at home; just shoot me a PM if you want me to dig into anything. Cheers kscs-bu-diagnostics-20210416-0807.zip -
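One way to answer the "which comes first" question is to log CPU load and write throughput side by side, once per second, and see which one moves first. Below is a minimal Python sketch that reads the standard Linux /proc/stat and /proc/diskstats files. The device name you pass in (for example "sdb", one member of the pool) is a placeholder, and iowait is reported separately because a CPU stuck waiting on the disk is not the same as a CPU doing work:

```python
import time
from pathlib import Path
from typing import Tuple

SECTOR_BYTES = 512  # /proc/diskstats counts 512-byte sectors regardless of the disk


def read_cpu(stat_text: str) -> Tuple[int, int, int]:
    """Return (total, idle, iowait) jiffies from the aggregate 'cpu' line of /proc/stat."""
    # Fields: user nice system idle iowait irq softirq steal [guest guest_nice].
    # Guest time is already counted in user/nice, so only the first 8 are summed.
    fields = [int(x) for x in stat_text.splitlines()[0].split()[1:9]]
    return sum(fields), fields[3], fields[4]


def cpu_fractions(prev: Tuple[int, int, int], cur: Tuple[int, int, int]) -> Tuple[float, float]:
    """(busy, iowait) as fractions of all CPUs between two read_cpu() samples."""
    total = cur[0] - prev[0]
    if total <= 0:
        return 0.0, 0.0
    idle = cur[1] - prev[1]
    iowait = cur[2] - prev[2]
    return (total - idle - iowait) / total, iowait / total


def sectors_written(diskstats_text: str, device: str) -> int:
    """Cumulative sectors written by one device (10th whitespace-separated field)."""
    for line in diskstats_text.splitlines():
        parts = line.split()
        if len(parts) > 9 and parts[2] == device:
            return int(parts[9])
    raise KeyError(device)


def monitor(device: str, interval: float = 1.0, samples: int = 60) -> None:
    """Print CPU busy/iowait next to write throughput, one line per interval."""
    stat, disks = Path("/proc/stat"), Path("/proc/diskstats")
    prev_cpu = read_cpu(stat.read_text())
    prev_sec = sectors_written(disks.read_text(), device)
    prev_t = time.monotonic()
    for _ in range(samples):
        time.sleep(interval)
        cur_cpu = read_cpu(stat.read_text())
        cur_sec = sectors_written(disks.read_text(), device)
        now = time.monotonic()
        busy, iowait = cpu_fractions(prev_cpu, cur_cpu)
        mb_per_s = (cur_sec - prev_sec) * SECTOR_BYTES / (now - prev_t) / 1e6
        print(f"{time.strftime('%H:%M:%S')} busy={busy:6.1%} "
              f"iowait={iowait:6.1%} write={mb_per_s:8.1f} MB/s")
        prev_cpu, prev_sec, prev_t = cur_cpu, cur_sec, now
```

Running monitor("sdb") during a large copy prints one line per second. If "busy" climbs a second or two before "write" collapses, CPU load is the likelier cause. If "write" drops first and "iowait" climbs with it, the disks are the likelier bottleneck.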

Update: I created a new pool, put the 250GB EVOs back into the box and moved docker and appdata back to them. Once I started writing large files to cache, the same issue recurred: the processors maxed out, and it looks like disk IO caused connection issues, including dropping out Plex, I assume because Plex couldn't get the IO to access the docker image or appdata. Or maybe it's the networking within dockers... I have no idea. So I've left the twin 480s in a separate pool and switched file writes to that pool, while leaving system and appdata on the smaller pool. It works perfectly: although large writes still max out many of the threads, they did not take down Plex and I didn't lose access to the Unraid GUI. I don't remember this happening in any previous Unraid version, and unfortunately the upgrade to 6.9.0 happened within a day of moving to the new server. For now I guess I'll have to keep separate pools or turn off cache usage for all file shares except system and appdata. I'm not impressed that I have to do this. Is this a new bug introduced in a recent version of Unraid? Is anyone else experiencing this, or able to replicate it? I'll mess around a bit with my backup Unraid server, but I don't have twin cache drives on it, and I don't have parity... it's just a JBOD box for daily backup. -

Also, thinking about the best way to switch back to the 250 EVOs: would it be to create a new cache pool (pool2) of 2x250GB SSDs, move/copy over the appdata and system folders, repoint the shares to "pool2", then remove the 480s? Far better, I think, than moving everything to the array and then back again (large Plex library). -

Damn... well, that's why I'm seeing it now, then. My 250s are EVOs and I never noticed a problem with them. Do you think that's largely the cause of the high processor usage?? Maybe I'll put the EVOs back in there; the return window is still open for one of the 480s. Edit: follow-up Q: would you recommend using separate cache pools for data writes versus appdata/system? It makes a difference to the sizes I'm buying. -

Hi all, I'm having an issue where any large writes to the cache pool cause what seem to be connectivity issues across the entire Unraid server, including dropped connections to dockers and interruptions of the GUI in a browser. I've been able to isolate two scenarios where this happens, and how I can prevent it:
1) copying large files to a cache:yes share using Krusader
2) processing files with Tdarr from a cache:yes share. NOTE: the Tdarr cache space is on an SSD in Unassigned Devices, not on the cache pool itself.
Setting the shares to cache:no prevents the issue.

During sustained writes to the cache pool, processor usage keeps increasing: it starts fine, then over a period of minutes the number of maxed-out CPU threads progressively climbs to a pretty high number, at which point I lose connectivity via the web and dockers such as Plex go offline. appdata and system (docker, libvirt) are all on the same cache pool.

I had an "unbalanced" pool previously (1x480GB and 2x250GB) and thought that might be the issue. I since pulled the 2x250s and replaced them with an additional 480GB for a "proper" btrfs RAID1 *cough* cache pool. I have not yet tested removing one of the drives to see if a single-disk pool still has the issue, but that is one of my next troubleshooting steps. I could also create a second cache pool of either 1 or 2 disks (I'd prefer 2 for real-world redundancy), since I still have my 250GB twins available, and isolate share writes from the appdata and system folders. But I'd prefer not to use more disks for cache than I need to, and I just spent a bunch of money on 480GB SSDs. I AM willing to test, though, if it seems like a good next step.

I'm not short on CPU or memory in this machine, as it's a dual 8-core Xeon with 128GB RAM. All drives are on twin LSI HBA cards through SAS2 backplanes.

For now I've left the shares where I normally do my large writes set to cache:no, but that defeats the point of having a somewhat large cache and is not how I'd prefer to use the server. Additionally, there are a number of smaller writes to these same shares all day long, and I'd rather make use of the cache pool. Any help and comments are appreciated.

kscs-fvm2-diagnostics-20210415-1243.zip

-

Thanks!! Sounds like a great mid way solution.

-

Thanks for that. The mirror I was suggesting would be in Proxmox on a separate PC, probably with Proxmox itself booting off a spinner, and probably ZFS RAID 1 for the VMs. I did my original install of pfSense on bare metal with RAID 1 across 2 SSDs. A waste of space, since pf runs mostly from memory, but I had them lying around. Nothing broke... Are you saying to rip the guts out of the Supermicro and put an Intel iGPU system in the server chassis, or go with a separate Intel unit? I hear your comments about 24/7. I've thought a lot about it both ways... I'm not challenging you. I just find it so hard to wrap my head around moderate heat cycles and 500 start/stops a year causing failures on modern drives. I only switched to doing this because I don't want my wife on me about the electric bill, and the Supermicro draws 200 W at idle where my old box draws 120 W. So having the backup off most of the time balances that. We'll see... Lol, but yeah. Hear you. Thanks

-

Within the last 3 months I purchased a new-to-me (and non-returnable) Supermicro server to act as my primary Unraid server, with some pretty great specs. Or so I thought. It's a 36-bay Supermicro (X9DRi-LN4+ motherboard) with SAS2 backplanes and dual Xeon E5-2670 processors, for a total of 32 threads. I thought I needed it. Then about 2 weeks later I learned about Intel Quick Sync.

I have two primary uses for the server: Plex, and data storage. Related to Plex are things like Tdarr, which handles all my re-encodes for better streaming, plus Radarr, Sonarr and Bazarr. I share media with family and close friends via Plex over the internet on a slow upload connection, so transcoding outbound streams is quite common. So now I have a power-hungry 32-thread monster that can't do hardware video encoding. Sure, the chassis is awesome, and redundant power supplies are great. And the fact that it takes a real server 5 minutes to boot... oh wait, not so great.

I currently have a second Unraid box acting as a backup server, using a Xeon W-series processor, that automatically starts every day at 7am, runs an incremental backup of the primary box (Syncthing dockers), and then shuts down. I have a third box that's a dedicated pfSense firewall.

I really want hardware encoding, especially as I start to move into more 4K source material (and I don't want to store an extra copy pre-encoded to 1080p). So I think I have a few options:

1) Add an Nvidia low-profile card to the Supermicro. This would be ideal if Quadro P2000 cards weren't so expensive. Even the "cheap" GTX 1050 Ti is expensive. It would keep my primary box as my primary in all respects, and give local access to the data rather than over the network. I'm not even sure you can get a low-profile P2000, and I don't want to be limited in the number of transcodes running at any given point.

2) Upgrade my backup server to a modern i3/i5 (7th gen or newer for HEVC), leave it running 24/7 too, and accept that my "backup" is my Plex (and Tautulli) "primary" while all my primary data stays on the Supermicro. This feels clunky, and I'm not sure Plex can watch for file changes over the network. That's not a deal breaker, just a nice-to-have, and it would be an issue with most of the other options here, so I won't repeat it each time.

3) Build a small, dedicated Plex server, likely running Plex as a docker on Ubuntu or Alpine, whose sole purpose in life is being a Plex server.

4) Buy a modern i3/i5 and leave it running 24/7, but install Proxmox, move pfSense into a VM there with the quad-port network card passed through, install Ubuntu or Alpine, and run Plex from that box with Quick Sync passed through to the Plex host. There are many benefits to this option: an i3 with modest memory (8 GB?) should easily handle pfSense (2 or 3 GB is fine for it) and Plex (and Tautulli) in dockers; it would give me a "production" server that doesn't mix backup and primary purposes; it would not add yet another 24/7 computer; and it would let me learn a bit about type 1 hypervisors, which I know nothing about. Plus both pfSense and Ubuntu/Alpine have small installation sizes, and I assume Proxmox would let me install to a pair of mirrored 256GB SSDs for redundancy... (??) (way more research required).

5) Rip the guts out of my Supermicro and "downgrade" it to an i3/i5-based system with a motherboard that can take at least a 4-port NIC plus two HBA cards, to keep handling the 36 drive bays (14 are currently occupied). That way I keep the chassis, gain hardware encoding (and lose the noise of the high-pressure fans needed for the passively cooled CPUs), and reduce my electric bill. From a purely objective perspective this seems like the right thing to do, but it's hard to rip apart something I just spent a lot of money on. Over the next 10 years, though, the electricity savings would probably more than pay for the additional hardware. I don't see myself choosing this option now, but I may revisit it a few years from now as I grow tired of wasted power. Maybe I'll find another use for the server too.

6) Sell the Supermicro (probably at a loss) and build what I should have built in the first place: an i3/i5 in a 24-bay server chassis.

My gut tells me option 4. But what am I NOT thinking about? Cheers.
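A back-of-the-envelope check of the 10-year electricity claim, as a small Python sketch. It borrows the 200 W (Supermicro) and 120 W (old box) idle figures from the Proxmox reply above as stand-ins. The real idle draw of a rebuilt i3/i5 system isn't known here, and the $0.15/kWh price is a made-up example, so plug in your own numbers:

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used in a year by a constant load running the given number of hours."""
    return watts * hours / 1000


def idle_savings(high_w: float, low_w: float, price_per_kwh: float, years: float) -> float:
    """Money saved by replacing an always-on high_w idle load with a low_w one."""
    return annual_kwh(high_w - low_w) * price_per_kwh * years
```

With those example inputs, the 80 W difference is about 701 kWh a year. That works out to roughly $105 a year, or about $1,050 over 10 years. Whether that covers the new hardware depends mostly on the real idle draw and your local electricity price.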

-

*VIDEO GUIDE* A comprehensive guide to pfSense both unRAID VM and physical

tiwing replied to SpaceInvaderOne's topic in VMs

I've been playing with pfSense for well over a year now, and based on all my research and personal experience so far, I would NEVER, NEVER, NEVER set up a firewall as a VM on Unraid if you rely on it as your ONLY firewall. The simple reason is that if something happens and you need to take Unraid down, you also lose your network. I've done it, but my VM on Unraid acts as a secondary node that is used if I take down the primary. IF you think that one day you MIGHT want to play with primary and secondary boxes in a high-availability setup, you'll need THREE network ports: one for WAN, one for LAN, and one for sync between the two boxes. I'd recommend a 4-port card based on the Intel i350. It's not that much more expensive, and it gives you lots of flexibility. I found a 2-pack on Amazon, so I'm running identical 4-port cards in my physical machine and in Unraid. It works so well I'd encourage that setup for everyone. And if you go with a good-quality i350 or equivalent, I'd skip the onboard RJ45 unless you need it for something else.