Runaround

Member | Posts: 20 | Gender: Undisclosed

Reputation: 2

Any chance we can get a Node.js update for the homebridge-gui Docker container? Several of my plugins now report an incompatible engine, and there are a few other warnings as well:

    npm WARN EBADENGINE Unsupported engine {
    npm WARN EBADENGINE   package: '[email protected]',
    npm WARN EBADENGINE   required: { homebridge: '>=1.6.0', node: '>=18' },
    npm WARN EBADENGINE   current: { node: 'v16.20.2', npm: '8.19.4' }
    npm WARN EBADENGINE }
    npm WARN EBADENGINE Unsupported engine {
    npm WARN EBADENGINE   package: '[email protected]',
    npm WARN EBADENGINE   required: { node: '>=18' },
    npm WARN EBADENGINE   current: { node: 'v16.20.2', npm: '8.19.4' }
    npm WARN EBADENGINE }
    npm WARN deprecated [email protected]: this library is no longer supported
    npm WARN deprecated [email protected]: Please upgrade to version 7 or higher. Older versions may use Math.random() in certain circumstances, which is known to be problematic. See https://v8.dev/blog/math-random for details.
    npm WARN deprecated [email protected]: request has been deprecated, see https://github.com/request/request/issues/3142
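The EBADENGINE lines come from npm comparing each package's `engines` requirement (here `node: '>=18'`) against the Node.js version actually running in the container (`v16.20.2`). A minimal Python sketch of that comparison, assuming only simple `>=X[.Y[.Z]]` ranges; npm itself uses the full semver range grammar, which this does not reproduce, and the helper names are hypothetical:

```python
from typing import Tuple


def parse_version(text: str) -> Tuple[int, int, int]:
    """Turn 'v16.20.2', '18' or '1.6.0' into a (major, minor, patch) tuple."""
    parts = text.strip().lstrip("v").split(".")
    # Pad missing minor/patch components with zeros, e.g. '18' -> (18, 0, 0).
    numbers = [int(p) for p in parts] + [0] * (3 - len(parts))
    return (numbers[0], numbers[1], numbers[2])


def satisfies_min(current: str, required: str) -> bool:
    """Check a simple '>=X.Y.Z' requirement; other range forms are rejected."""
    if not required.startswith(">="):
        raise ValueError(f"unsupported range: {required!r}")
    return parse_version(current) >= parse_version(required[2:])


if __name__ == "__main__":
    # Values taken from the npm warning above.
    print(satisfies_min("v16.20.2", ">=18"))  # False: 16.20.2 is below 18.0.0
    print(satisfies_min("v18.0.0", ">=18"))   # True
```

Tuples compare element by element, so `(16, 20, 2) >= (18, 0, 0)` is false on the major version alone; that is why every plugin requiring Node 18 warns until the container's Node.js is upgraded.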

Server keeps powering off since 6.11 update

Runaround replied to Runaround's topic in General Support

Thank you, JorgeB, for your quick reply. I actually had to go through all of that checking when I had an issue previously, and the server had been running very well since. The CPU and the memory are both at stock speeds, and I also reduced from 4 DIMMs to 2. A little while ago I got a kernel warning in the syslog, which is below. I found a previous thread suggesting changing the Docker custom network type to ipvlan. Do you think that is still worth trying?

    Nov 14 16:53:56 NAS4 kernel: ------------[ cut here ]------------
    Nov 14 16:53:56 NAS4 kernel: WARNING: CPU: 12 PID: 1260 at net/netfilter/nf_conntrack_core.c:1208 __nf_conntrack_confirm+0xa5/0x2cb [nf_conntrack]
    Nov 14 16:53:56 NAS4 kernel: Modules linked in: macvlan nvidia_uvm(PO) veth xt_nat xt_tcpudp xt_conntrack xt_MASQUERADE nf_conntrack_netlink nfnetlink xfrm_user xfrm_algo xt_addrtype iptable_nat nf_nat nf_conntrack nf_defrag_ipv6 nf_defrag_ipv4 br_netfilter xfs md_mod ipmi_devintf ip6table_filter ip6_tables iptable_filter ip_tables x_tables bridge stp llc nvidia_drm(PO) nvidia_modeset(PO) nvidia(PO) drm_kms_helper drm edac_mce_amd edac_core kvm_amd kvm wmi_bmof crct10dif_pclmul mxm_wmi crc32_pclmul asus_wmi_sensors crc32c_intel ghash_clmulni_intel aesni_intel crypto_simd igb mpt3sas cryptd rapl backlight i2c_piix4 nvme i2c_algo_bit k10temp syscopyarea i2c_core nvme_core ccp sysfillrect ahci raid_class sysimgblt fb_sys_fops scsi_transport_sas libahci wmi button acpi_cpufreq unix
    Nov 14 16:53:56 NAS4 kernel: CPU: 12 PID: 1260 Comm: kworker/12:1 Tainted: P O 5.19.17-Unraid #2
    Nov 14 16:53:56 NAS4 kernel: Hardware name: System manufacturer System Product Name/ROG STRIX X470-F GAMING, BIOS 5809 12/03/2020
    Nov 14 16:53:56 NAS4 kernel: Workqueue: events macvlan_process_broadcast [macvlan]
    Nov 14 16:53:56 NAS4 kernel: RIP: 0010:__nf_conntrack_confirm+0xa5/0x2cb [nf_conntrack]
    Nov 14 16:53:56 NAS4 kernel: Code: c6 48 89 44 24 10 e8 dd e2 ff ff 8b 7c 24 04 89 da 89 c6 89 04 24 e8 56 e6 ff ff 84 c0 75 a2 48 8b 85 80 00 00 00 a8 08 74 18 <0f> 0b 8b 34 24 8b 7c 24 04 e8 16 de ff ff e8 2c e3 ff ff e9 7e 01
    Nov 14 16:53:56 NAS4 kernel: RSP: 0018:ffffc90000468cf0 EFLAGS: 00010202
    Nov 14 16:53:56 NAS4 kernel: RAX: 0000000000000188 RBX: 0000000000000000 RCX: 04b1607232711a15
    Nov 14 16:53:56 NAS4 kernel: RDX: 0000000000000000 RSI: 0000000000000328 RDI: ffffffffa046709c
    Nov 14 16:53:56 NAS4 kernel: RBP: ffff888105c3f600 R08: bcbcf0f29609d337 R09: e535f8438e9bc070
    Nov 14 16:53:56 NAS4 kernel: R10: e038729afc21c74d R11: 74f7e7d7c2846d05 R12: ffffffff82909480
    Nov 14 16:53:56 NAS4 kernel: R13: 000000000002bb97 R14: ffff888095ea2400 R15: 0000000000000000
    Nov 14 16:53:56 NAS4 kernel: FS: 0000000000000000(0000) GS:ffff88841eb00000(0000) knlGS:0000000000000000
    Nov 14 16:53:56 NAS4 kernel: CS: 0010 DS: 0000 ES: 0000 CR0: 0000000080050033
    Nov 14 16:53:56 NAS4 kernel: CR2: 00000ab78cdaf002 CR3: 0000000273362000 CR4: 00000000003506e0
    Nov 14 16:53:56 NAS4 kernel: Call Trace:
    Nov 14 16:53:56 NAS4 kernel: <IRQ>
    Nov 14 16:53:56 NAS4 kernel: nf_conntrack_confirm+0x25/0x54 [nf_conntrack]
    Nov 14 16:53:56 NAS4 kernel: nf_hook_slow+0x3d/0x96
    Nov 14 16:53:56 NAS4 kernel: ? ip_protocol_deliver_rcu+0x164/0x164
    Nov 14 16:53:56 NAS4 kernel: NF_HOOK.constprop.0+0x79/0xd9
    Nov 14 16:53:56 NAS4 kernel: ? ip_protocol_deliver_rcu+0x164/0x164
    Nov 14 16:53:56 NAS4 kernel: ip_sabotage_in+0x4a/0x58 [br_netfilter]
    Nov 14 16:53:56 NAS4 kernel: nf_hook_slow+0x3d/0x96
    Nov 14 16:53:56 NAS4 kernel: ? ip_rcv_finish_core.constprop.0+0x3b7/0x3b7
    Nov 14 16:53:56 NAS4 kernel: NF_HOOK.constprop.0+0x79/0xd9
    Nov 14 16:53:56 NAS4 kernel: ? ip_rcv_finish_core.constprop.0+0x3b7/0x3b7
    Nov 14 16:53:56 NAS4 kernel: __netif_receive_skb_one_core+0x77/0x9c
    Nov 14 16:53:56 NAS4 kernel: process_backlog+0x8c/0x116
    Nov 14 16:53:56 NAS4 kernel: __napi_poll.constprop.0+0x2b/0x124
    Nov 14 16:53:56 NAS4 kernel: net_rx_action+0x159/0x24f
    Nov 14 16:53:56 NAS4 kernel: __do_softirq+0x129/0x288
    Nov 14 16:53:56 NAS4 kernel: do_softirq+0x7f/0xab
    Nov 14 16:53:56 NAS4 kernel: </IRQ>
    Nov 14 16:53:56 NAS4 kernel: <TASK>
    Nov 14 16:53:56 NAS4 kernel: __local_bh_enable_ip+0x4c/0x6b
    Nov 14 16:53:56 NAS4 kernel: netif_rx+0x52/0x5a
    Nov 14 16:53:56 NAS4 kernel: macvlan_broadcast+0x10a/0x150 [macvlan]
    Nov 14 16:53:56 NAS4 kernel: macvlan_process_broadcast+0xbc/0x12f [macvlan]
    Nov 14 16:53:56 NAS4 kernel: process_one_work+0x1ab/0x295
    Nov 14 16:53:56 NAS4 kernel: worker_thread+0x18b/0x244
    Nov 14 16:53:56 NAS4 kernel: ? rescuer_thread+0x281/0x281
    Nov 14 16:53:56 NAS4 kernel: kthread+0xe7/0xef
    Nov 14 16:53:56 NAS4 kernel: ? kthread_complete_and_exit+0x1b/0x1b
    Nov 14 16:53:56 NAS4 kernel: ret_from_fork+0x22/0x30
    Nov 14 16:53:56 NAS4 kernel: </TASK>
    Nov 14 16:53:56 NAS4 kernel: ---[ end trace 0000000000000000 ]---
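The warning above has the shape that Unraid forum threads commonly associate with Docker containers on a macvlan custom network: a `WARNING` in `__nf_conntrack_confirm`, reached from the `macvlan_process_broadcast` workqueue. To check whether every logged warning looks like this, here is a rough Python sketch that splits a saved syslog into blocks running from `[ cut here ]` to `end trace` and keeps those containing a `[macvlan]` frame. It assumes the `Mon DD HH:MM:SS host kernel:` line format shown above; the function names and the script name in the usage comment are hypothetical, and a match is only a hint, not a diagnosis.

```python
import re
import sys
from typing import Iterable, List, Optional

# Matches the syslog prefix shown above, e.g. "Nov 14 16:53:56 NAS4 kernel: ".
PREFIX = re.compile(r"^\w{3}\s+\d+\s+[\d:]{8}\s+\S+\s+kernel:\s?")


def kernel_traces(lines: Iterable[str]) -> List[List[str]]:
    """Collect the lines between each '[ cut here ]' and '---[ end trace' marker."""
    traces: List[List[str]] = []
    current: Optional[List[str]] = None
    for raw in lines:
        msg = PREFIX.sub("", raw.rstrip("\n"))
        if "[ cut here ]" in msg:
            current = []  # start a new block; the marker itself is not kept
        elif current is not None:
            if msg.startswith("---[ end trace"):
                traces.append(current)
                current = None
            else:
                current.append(msg)
    return traces


def macvlan_traces(lines: Iterable[str]) -> List[List[str]]:
    """Keep blocks with a '[macvlan]' frame; 'Modules linked in: macvlan' alone does not count."""
    return [t for t in kernel_traces(lines) if any("[macvlan]" in m for m in t)]


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python find_macvlan_traces.py /path/to/saved/syslog
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for trace in macvlan_traces(fh):
            print(trace[0])  # first line of the block, normally the WARNING line
```

Matching on the bracketed `[macvlan]` module tag rather than the bare word avoids flagging every trace, since `macvlan` also appears in the "Modules linked in" list whenever the module is loaded.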

Howdy. My server had been working really well until I updated it to 6.11. Since then, it has been randomly powering off at night, and uptime is usually only a couple of days. If I don't start the array, it seems to keep running. I'm really at a loss as to the cause. I've looked through the syslog a little, but it doesn't show any warning before the server turns off. I've attached the diagnostics bundle. I'm currently running 6.11.3. Any assistance would be greatly appreciated.
nas4-diagnostics-20221114-0943.zip
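Because the in-memory syslog is lost when the server powers off, the useful evidence is whatever reached a persistent copy just before each restart. A rough Python sketch for pulling those lines out, assuming a syslog file that survives reboots (for example one mirrored to the flash drive or sent to a remote syslog server; the path is yours to supply) and assuming each boot is marked by the kernel's `Linux version` banner line. The function name and the `context` parameter are hypothetical:

```python
import sys
from collections import deque
from typing import Deque, Iterable, List

# Assumption: each boot is marked by the kernel's "Linux version ..." banner.
BOOT_MARKER = "kernel: Linux version"


def lines_before_boots(lines: Iterable[str], context: int = 20) -> List[List[str]]:
    """Return up to `context` lines logged just before each boot marker that has earlier lines."""
    recent: Deque[str] = deque(maxlen=context)  # keeps only the newest `context` lines
    results: List[List[str]] = []
    for raw in lines:
        line = raw.rstrip("\n")
        if BOOT_MARKER in line and recent:
            # Everything in `recent` was written before this reboot.
            results.append(list(recent))
            recent.clear()
        recent.append(line)
    return results


if __name__ == "__main__" and len(sys.argv) > 1:
    with open(sys.argv[1], encoding="utf-8", errors="replace") as fh:
        for block in lines_before_boots(fh):
            print("\n".join(block))
            print("-" * 40)
```

If the last lines before every unexpected boot are routine messages with no warning at all, that points toward an abrupt hardware-level power loss rather than a logged kernel failure, though it cannot prove it.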

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

To hurry along the 12 TB drive issue, I confirmed a single stick was good with MemTest and tried a pre-clear of a 12 TB disk with only that stick installed. It completed yesterday for the first time. Thanks again @JorgeB!

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

I really appreciate your assistance with this. I have already corrected a lot of configuration issues that I didn't know about. I ran MemTest and it reported an error, so I'll test each of the DIMMs to figure out what's wrong. For now, I tested one stick and it passed. I have it installed alone and I'm trying to pre-clear one of these 12 TB disks. It's so odd to me that I only see an issue when dealing with these 12 TB disks, though.

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

Here is the syslog from the flash drive and a fresh copy of the diagnostics. I didn't really see anything in the syslog, though. It's really strange that this only happens during the cache drive copy; I was able to rebuild a disk just fine.
syslog
nas4-diagnostics-20220327-1118.zip

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

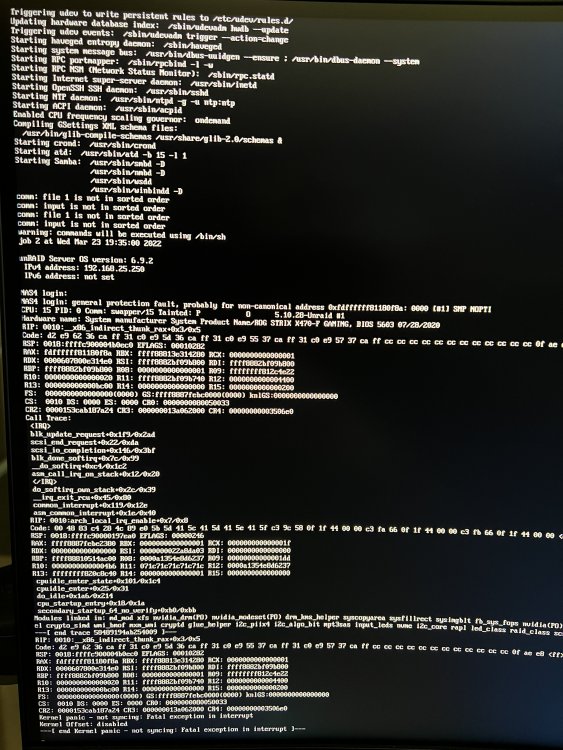

Well, I thought things were better, but the server crashed again last night while I was doing another parity copy. The last status I saw was 96%. I’ve attached what was on the screen this morning. Any ideas?

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

The server was stable yesterday, and I've started a drive replacement. It's about 60% complete, so hopefully it finishes. Once it does, I'll try to swap out the parity drive again.

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

Next update: I powered off the server, took out the flash drive, ran a check disk on it from my PC, and booted the server back up. There are no more flash drive errors in the log, and the server is no longer crashing within an hour. After booting, though, a surprising number of settings had been changed or reverted to old values; I've corrected them all now. I also changed the idle control power setting (I think that's what it's called; it's named a little oddly). I picked up a smaller drive so I can try to replace my bad disk without having to copy parity first. It's clearing now, so hopefully I'll have more progress tomorrow. Thanks again for the help so far.

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

I set the CPU and memory speeds to "auto" in the BIOS, and the memory is now running at the speeds you linked to earlier. The BTRFS errors are gone from the logs. Sadly, the server is now even less stable. I see read errors on sda, so maybe the flash drive hasn't liked the lockups and power cycles? I had the syslog viewer open on screen when it crashed, so I saved it, and there is also a screenshot from a monitor I've connected. Not sure if it's related, but a pre-clear was running every time it crashed, in the writing-zeros phase.
System Log 2.html

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

Thanks for the info and the detailed post you linked. I'll be adjusting the memory timings today. I moved my old desktop CPU and motherboard over 4 months ago and didn't think about the memory timings. I just noticed this round of btrfs issues today. The cache got corrupted last week; I was able to recover the data and reformat the disk, and I thought it was working normally after that. I've had a bit of a history of issues with this particular SSD I'm using for cache: I was getting tons of CRC errors from the firmware on my HBA card when I first installed it, but it had been working well until its issue last week. I'm thinking of replacing it just in case. Do you think it is also contributing to the server locking up during the parity copy? This server hadn't locked up in several years.

(SOLVED) Server locks up on parity swap copy

Runaround replied to Runaround's topic in General Support

Thanks. I added the diagnostics to the initial post to make them easier to find. I also started a preclear pass on the disk; it's currently 94% through the pre-read phase.

Hello, I'm trying to replace my existing 10 TB parity drive with a 12 TB drive while moving the 10 TB drive down to replace a smaller drive. I've done this a few times before (to get up to 10 TB) and I'm following the guide. Once I swap the drives around in the GUI and start the copy, the server locks up. I tracked the progress this time, and the last report I saw in the GUI was 86% done. I have attempted the copy 3 times now. Any ideas on what I should look at to find the cause?
nas4-diagnostics-20220322-0829.zip

It's under the appdata/unifi/sites/default folder.