Posts posted by francrouge

-

Hi guys,

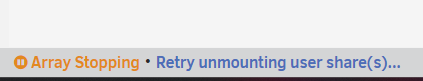

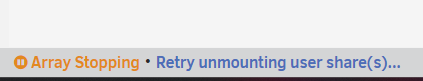

I have a problem: every time I try to stop the array, my Unraid seems to freeze, or at least it never completes the stop.

It's always staying in

Is there any way to solve this? I always need to reboot to get it working again.

thx

-

On 4/6/2023 at 8:23 AM, Kaizac said:

You need -vv in your rclone command

Thank you so much
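For anyone landing here with the same problem, a minimal sketch of where the verbose flag goes. The paths and remote name are copied from the upload script's settings in this thread; the array name `rclone_args` is only illustrative and not part of the original script. The sketch prints the command instead of running it.

```shell
#!/bin/bash
# Values taken from the upload script's settings; adjust to your setup.
RcloneCommand="move"
LocalFilesLocation="/mnt/user/mount_rclone_upload/gdrive_media_vfs"
RcloneUploadRemoteName="gdrive_media_vfs"

# Keep the arguments in an array (hypothetical name) so flags are easy to add.
# -vv turns on rclone's debug-level logging, so the log shows what is happening.
rclone_args=(
  "$RcloneCommand"
  "$LocalFilesLocation"
  "$RcloneUploadRemoteName:"
  -vv
  --min-age 15m
)

# Print the command for review. To actually run it, use:
#   rclone "${rclone_args[@]}"
echo "rclone ${rclone_args[*]}"
```

In the full script, the same `-vv` would sit on one of the continuation lines of the existing `rclone $RcloneCommand ...` call.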

-

Hi guys, quick question: I'm not able to see live what my upload script is uploading.

Is that normal? It was working before. Can someone tell me if my script is OK?

#!/bin/bash

######################
### Upload Script ####
######################
### Version 0.95.5 ###
######################

####### EDIT ONLY THESE SETTINGS #######

# INSTRUCTIONS
# 1. Edit the settings below to match your setup
# 2. NOTE: enter RcloneRemoteName WITHOUT ':'
# 3. Optional: Add additional commands or filters
# 4. Optional: Use bind mount settings for potential traffic shaping/monitoring
# 5. Optional: Use service accounts in your upload remote
# 6. Optional: Use backup directory for rclone sync jobs

# REQUIRED SETTINGS
RcloneCommand="move" # choose your rclone command e.g. move, copy, sync
RcloneRemoteName="gdrive_media_vfs" # Name of rclone remote mount WITHOUT ':'.
RcloneUploadRemoteName="gdrive_media_vfs" # If you have a second remote created for uploads put it here. Otherwise use the same remote as RcloneRemoteName.
LocalFilesShare="/mnt/user/mount_rclone_upload" # location of the local files without trailing slash you want to rclone to use
RcloneMountShare="/mnt/user/mount_rclone" # where your rclone mount is located without trailing slash e.g. /mnt/user/mount_rclone
MinimumAge="15m" # sync files suffix ms|s|m|h|d|w|M|y
ModSort="ascending" # "ascending" oldest files first, "descending" newest files first

# Note: Again - remember to NOT use ':' in your remote name above

# Bandwidth limits: specify the desired bandwidth in kBytes/s, or use a suffix b|k|M|G. Or 'off' or '0' for unlimited. The script uses --drive-stop-on-upload-limit which stops the script if the 750GB/day limit is achieved, so you no longer have to slow 'trickle' your files all day if you don't want to e.g. could just do an unlimited job overnight.
BWLimit1Time="01:00"
BWLimit1="10M"
BWLimit2Time="08:00"
BWLimit2="10M"
BWLimit3Time="16:00"
BWLimit3="10M"

# OPTIONAL SETTINGS

# Add name to upload job
JobName="_daily_upload" # Adds custom string to end of checker file. Useful if you're running multiple jobs against the same remote.

# Add extra commands or filters
Command1="--exclude downloads/**"
Command2=""
Command3=""
Command4=""
Command5=""
Command6=""
Command7=""
Command8=""

# Bind the mount to an IP address
CreateBindMount="N" # Y/N. Choose whether or not to bind traffic to a network adapter.
RCloneMountIP="192.168.1.253" # Choose IP to bind upload to.
NetworkAdapter="eth0" # choose your network adapter. eth0 recommended.
VirtualIPNumber="1" # creates eth0:x e.g. eth0:1.

# Use Service Accounts. Instructions: https://github.com/xyou365/AutoRclone
UseServiceAccountUpload="N" # Y/N. Choose whether to use Service Accounts.
ServiceAccountDirectory="/mnt/user/appdata/other/rclone/service_accounts" # Path to your Service Account's .json files.
ServiceAccountFile="sa_gdrive_upload" # Enter characters before counter in your json files e.g. for sa_gdrive_upload1.json -->sa_gdrive_upload100.json, enter "sa_gdrive_upload".
CountServiceAccounts="5" # Integer number of service accounts to use.

# Is this a backup job
BackupJob="N" # Y/N. Syncs or Copies files from LocalFilesLocation to BackupRemoteLocation, rather than moving from LocalFilesLocation/RcloneRemoteName
BackupRemoteLocation="backup" # choose location on mount for deleted sync files
BackupRemoteDeletedLocation="backup_deleted" # choose location on mount for deleted sync files
BackupRetention="90d" # How long to keep deleted sync files suffix ms|s|m|h|d|w|M|y

####### END SETTINGS #######

###############################################################################
##### DO NOT EDIT BELOW THIS LINE UNLESS YOU KNOW WHAT YOU ARE DOING #####
###############################################################################

####### Preparing mount location variables #######
if [[ $BackupJob == 'Y' ]]; then
    LocalFilesLocation="$LocalFilesShare"
    echo "$(date "+%d.%m.%Y %T") INFO: *** Backup selected. Files will be copied or synced from ${LocalFilesLocation} for ${RcloneUploadRemoteName} ***"
else
    LocalFilesLocation="$LocalFilesShare/$RcloneRemoteName"
    echo "$(date "+%d.%m.%Y %T") INFO: *** Rclone move selected. Files will be moved from ${LocalFilesLocation} for ${RcloneUploadRemoteName} ***"
fi

RcloneMountLocation="$RcloneMountShare/$RcloneRemoteName" # Location of rclone mount

####### create directory for script files #######
mkdir -p /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName #for script files

####### Check if script already running ##########
echo "$(date "+%d.%m.%Y %T") INFO: *** Starting rclone_upload script for ${RcloneUploadRemoteName} ***"
if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Exiting as script already running."
    exit
else
    echo "$(date "+%d.%m.%Y %T") INFO: Script not running - proceeding."
    touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName
fi

####### check if rclone installed ##########
echo "$(date "+%d.%m.%Y %T") INFO: Checking if rclone installed successfully."
if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: rclone installed successfully - proceeding with upload."
else
    echo "$(date "+%d.%m.%Y %T") INFO: rclone not installed - will try again later."
    rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName
    exit
fi

####### Rotating serviceaccount.json file if using Service Accounts #######
if [[ $UseServiceAccountUpload == 'Y' ]]; then
    cd /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/
    CounterNumber=$(find -name 'counter*' | cut -c 11,12)
    CounterCheck="1"
    if [[ "$CounterNumber" -ge "$CounterCheck" ]];then
        echo "$(date "+%d.%m.%Y %T") INFO: Counter file found for ${RcloneUploadRemoteName}."
    else
        echo "$(date "+%d.%m.%Y %T") INFO: No counter file found for ${RcloneUploadRemoteName}. Creating counter_1."
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_1
        CounterNumber="1"
    fi
    ServiceAccount="--drive-service-account-file=$ServiceAccountDirectory/$ServiceAccountFile$CounterNumber.json"
    echo "$(date "+%d.%m.%Y %T") INFO: Adjusted service_account_file for upload remote ${RcloneUploadRemoteName} to ${ServiceAccountFile}${CounterNumber}.json based on counter ${CounterNumber}."
else
    echo "$(date "+%d.%m.%Y %T") INFO: Uploading using upload remote ${RcloneUploadRemoteName}"
    ServiceAccount=""
fi

####### Upload files ##########

# Check bind option
if [[ $CreateBindMount == 'Y' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if IP address ${RCloneMountIP} already created for upload to remote ${RcloneUploadRemoteName}"
    ping -q -c2 $RCloneMountIP > /dev/null # -q quiet, -c number of pings to perform
    if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
        echo "$(date "+%d.%m.%Y %T") INFO: *** IP address ${RCloneMountIP} already created for upload to remote ${RcloneUploadRemoteName}"
    else
        echo "$(date "+%d.%m.%Y %T") INFO: *** Creating IP address ${RCloneMountIP} for upload to remote ${RcloneUploadRemoteName}"
        ip addr add $RCloneMountIP/24 dev $NetworkAdapter label $NetworkAdapter:$VirtualIPNumber
    fi
else
    RCloneMountIP=""
fi

# Remove --delete-empty-src-dirs if rclone sync or copy
if [[ $RcloneCommand == 'move' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Using rclone move - will add --delete-empty-src-dirs to upload."
    DeleteEmpty="--delete-empty-src-dirs "
else
    echo "$(date "+%d.%m.%Y %T") INFO: *** Not using rclone move - will remove --delete-empty-src-dirs to upload."
    DeleteEmpty=""
fi

# Check --backup-directory
if [[ $BackupJob == 'Y' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Will backup to ${BackupRemoteLocation} and use ${BackupRemoteDeletedLocation} as --backup-directory with ${BackupRetention} retention for ${RcloneUploadRemoteName}."
    LocalFilesLocation="$LocalFilesShare"
    BackupDir="--backup-dir $RcloneUploadRemoteName:$BackupRemoteDeletedLocation"
else
    BackupRemoteLocation=""
    BackupRemoteDeletedLocation=""
    BackupRetention=""
    BackupDir=""
fi

# process files
rclone $RcloneCommand $LocalFilesLocation $RcloneUploadRemoteName:$BackupRemoteLocation $ServiceAccount $BackupDir \
    --user-agent="$RcloneUploadRemoteName" \
    --buffer-size 512M \
    --drive-chunk-size 512M \
    --max-transfer 725G --tpslimit 3 \
    --checkers 3 \
    --transfers 3 \
    -L/--copy-links --order-by modtime,$ModSort \
    --min-age $MinimumAge \
    $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
    --exclude *fuse_hidden* \
    --exclude *_HIDDEN \
    --exclude .recycle** \
    --exclude .Recycle.Bin/** \
    --exclude *.backup~* \
    --exclude *.partial~* \
    --bwlimit "${BWLimit1Time},${BWLimit1} ${BWLimit2Time},${BWLimit2} ${BWLimit3Time},${BWLimit3}" \
    --bind=$RCloneMountIP $DeleteEmpty --delete-empty-src-dirs

# Delete old files from mount
if [[ $BackupJob == 'Y' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Removing files older than ${BackupRetention} from $BackupRemoteLocation for ${RcloneUploadRemoteName}."
    rclone delete --min-age $BackupRetention $RcloneUploadRemoteName:$BackupRemoteDeletedLocation
fi

####### Remove Control Files ##########

# update counter and remove other control files
if [[ $UseServiceAccountUpload == 'Y' ]]; then
    if [[ "$CounterNumber" == "$CountServiceAccounts" ]];then
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_*
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_1
        echo "$(date "+%d.%m.%Y %T") INFO: Final counter used - resetting loop and created counter_1."
    else
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_*
        CounterNumber=$((CounterNumber+1))
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_$CounterNumber
        echo "$(date "+%d.%m.%Y %T") INFO: Created counter_${CounterNumber} for next upload run."
    fi
else
    echo "$(date "+%d.%m.%Y %T") INFO: Not utilising service accounts."
fi

# remove dummy file
rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName
echo "$(date "+%d.%m.%Y %T") INFO: Script complete"

exit

-

hi guys

I haven't been able to add an RSS feed to the binhex qBittorrent container for the past 3 weeks.

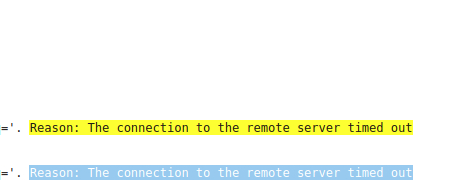

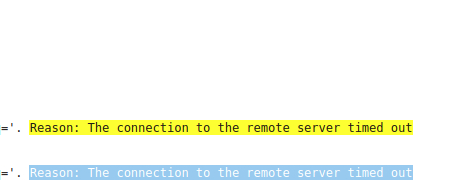

If I look at the qBittorrent logs, it says the connection to the remote timed out.

I tried another RSS feed from Jackett on my seedbox and it worked, so there is something going on between Unraid and the binhex qBittorrent and Jackett containers.

-

Hi

I'm unable to add an RSS feed to qBittorrent.

I tried deleting everything, and it still never works with a Jackett RSS feed.

Can anyone help, please?

-

11 hours ago, francrouge said:

Hello guys

It's been 2 weeks and I can't add any Jackett RSS feed to qBittorrent. It loads and loads and nothing happens.

I tried flushing the Docker container and restarting Unraid; nothing works.

If I paste the Jackett RSS link into Chrome, the feed shows up.

Example: http://10.0.0.22:9117/api/v2.0/indexers/gktorrent/results/torznab/api?apikey=fdvdvfdvfdvfdvfdvd&t=search&cat=&q=

Can someone help me?

Has anyone experienced this?

thx

-

Hello guys

It's been 2 weeks and I can't add any Jackett RSS feed to qBittorrent. It loads and loads and nothing happens.

I tried flushing the Docker container and restarting Unraid; nothing works.

If I paste the Jackett RSS link into Chrome, the feed shows up.

Example: http://10.0.0.22:9117/api/v2.0/indexers/gktorrent/results/torznab/api?apikey=fdvdvfdvfdvfdvfdvd&t=search&cat=&q=

Can someone help me?

-

Hello,

My server stopped working yesterday; I don't know why.

The web GUI was not working, SSH was not working, and the server did not respond to ping.

It also has 2 RJ45 ports, but they did not seem to be working.

So I had to reboot it.

Now it starts. I'm running a parity check right now.

I was able to get the diagnostics after the reboot.

If anyone sees something, please tell me.

-

I'm getting errors on an HDD and I need help deciding whether I need to replace it.

When I plugged it into my other PC, it seemed to be healthy.

disk #2

-

4 hours ago, trurl said:

lost+found is where filesystem repair puts anything it can't figure out. Files without folders, files and folders without names. You might be able to figure what type of data is in a particular file with the linux 'file' command.

OK, so I should go through all the data in that folder and move it back to the right place, then?

Thx again for all the help
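A minimal sketch of the `file` approach mentioned in the quote, for anyone sorting through a large lost+found. The function name `describe_recovered` and the example path are assumptions for illustration; only the `file` and `find` commands themselves are standard.

```shell
#!/bin/bash
# Print each recovered file followed by the type that `file` detects from its content.
# Names in lost+found are usually meaningless, so the detected type is the main hint.
describe_recovered() {
  local dir="$1"
  find "$dir" -type f -print0 | while IFS= read -r -d '' f; do
    printf '%s\t%s\n' "$f" "$(file -b "$f")"
  done
}

# Example (path is an assumption, adjust to the disk that was repaired):
#   describe_recovered "/mnt/disk1/lost+found" > /tmp/lost_found_types.txt
```

Writing the result to a text file makes it easier to search for, say, video containers before moving anything back by hand.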

-

I ran it through the GUI on disk 1.

Also, I now have a lost+found folder with about 270 GB in it.

What should I do with that?

thx

-

4 minutes ago, JorgeB said:

Don't know a -d option, run without any flags or with just -v.

here you go

-

1 minute ago, JorgeB said:

Run xfs_repair again without -n, if it fails post new diags.

Do I need to run it again with the -d option?

thx
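For reference, a rough sketch of the check-then-repair sequence discussed here. The device path `/dev/md1` is an assumption for disk 1 with the array started in maintenance mode (it can differ between Unraid versions), and the `run` helper with its `DRY_RUN` switch is hypothetical, added only so the commands can be reviewed before anything touches the disk.

```shell
#!/bin/bash
# DRY_RUN=1 (the default) only prints the commands; set DRY_RUN=0 to execute them.
run() {
  if [[ "${DRY_RUN:-1}" == "1" ]]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Assumed device for disk 1; verify it for your own system before running.
DEVICE="/dev/md1"

# -n: check only, reports problems without modifying the filesystem.
run xfs_repair -n "$DEVICE"

# No -n: actual repair; -v adds verbose output.
run xfs_repair -v "$DEVICE"
```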

-

7 hours ago, JorgeB said:

Post new diags please.

-

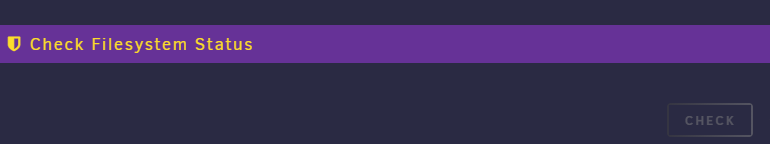

On 1/7/2023 at 6:08 AM, JorgeB said:

Check filesystem on disk1.

Hi, after doing a repair I got this:

resetting inode 4801177989 nlinks from 10 to 9
Metadata corruption detected at 0x451c80, xfs_bmbt block 0x381ddff20/0x1000
libxfs_bwrite: write verifier failed on xfs_bmbt bno 0x381ddff20/0x8
Metadata corruption detected at 0x451c80, xfs_bmbt block 0x303f8eb00/0x1000
libxfs_bwrite: write verifier failed on xfs_bmbt bno 0x303f8eb00/0x8
Metadata corruption detected at 0x451c80, xfs_bmbt block 0x304204810/0x1000
libxfs_bwrite: write verifier failed on xfs_bmbt bno 0x304204810/0x8
Metadata corruption detected at 0x451c80, xfs_bmbt block 0x30dcb27f8/0x1000
libxfs_bwrite: write verifier failed on xfs_bmbt bno 0x30dcb27f8/0x8
Maximum metadata LSN (1713531926:523524561) is ahead of log (8:3564918).
Format log to cycle 1713531929.
xfs_repair: Releasing dirty buffer to free list!
xfs_repair: Releasing dirty buffer to free list!
xfs_repair: Releasing dirty buffer to free list!
xfs_repair: Releasing dirty buffer to free list!
xfs_repair: Refusing to write a corrupt buffer to the data device!
xfs_repair: Lost a write to the data device!

fatal error -- File system metadata writeout failed, err=117. Re-run xfs_repair.

What should I do?

thx

-

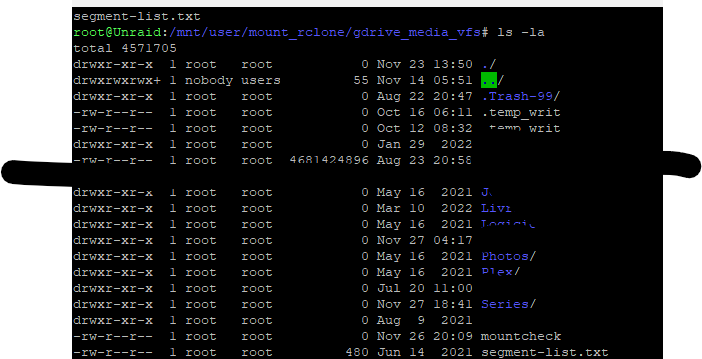

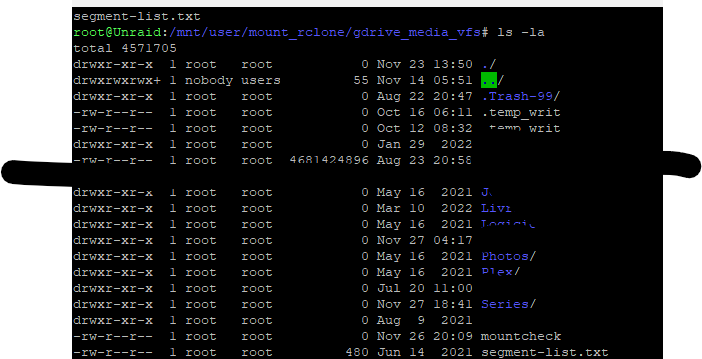

Hi guys

Some of my files (movies, etc.) are not visible in the Unraid file manager, Krusader, etc.

But I can see that the space is still in use: my array is 68% full.

Plex is still able to play the files that are invisible.

Can someone help me, please? I don't know what to do.

-

7 hours ago, Ronan C said:

Hello everyone, which drive provider is the best option today in terms of cost-benefit ratio?

I'm thinking of ordering the OneDrive Business Plan 2; they say it's unlimited and the price is fine. Is anyone using this option? How is it?

Thanks

Google, I would say, but it depends.

-

On 11/29/2022 at 4:34 AM, Kaizac said:

Well, we've discussed this a couple of times already in this topic, and it seems there is not one fix for everyone.

What I've done is added to my mount script:

--uid 99

--gid 100

For --umask I use 002 (I think DZMM uses 000, which allows read and write for everyone and which I find too insecure, but that's your own decision).

I've rebooted my server without the mount script active, so just a plain boot without mounting. Then I ran the fix permissions on both my mount_rclone and local folders. Then you can check again whether the permissions of these folders are properly set. If that is the case, you can run the mount script. And then check again.

After I did this once, I never had the issue again.

Hi

Just to let you know that I tried what you told me on my main and backup servers and it seems to be working: the owner was changed to nobody:users.

Thanks a lot
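For anyone following along, a small sketch of how the three flags from the quote fit into an rclone mount command. The remote name and mount point are taken from the upload script posted in this thread; the array name `mount_args` is only illustrative, and a real mount script would carry many more options. The command is printed, not executed.

```shell
#!/bin/bash
# Remote name and mount point as used in the upload script from this thread.
RcloneRemoteName="gdrive_media_vfs"
RcloneMountLocation="/mnt/user/mount_rclone/$RcloneRemoteName"

# Ownership and permission flags from the quoted post.
# 99:100 is what nobody:users usually maps to on Unraid.
mount_args=(
  mount "$RcloneRemoteName:" "$RcloneMountLocation"
  --uid 99
  --gid 100
  --umask 002
)

# Print for review. To actually mount, use: rclone "${mount_args[@]}"
echo "rclone ${mount_args[*]}"
```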

-

1 minute ago, Kaizac said:

Ah, I forgot, also the mount_mergerfs or mount_unionfs folder should be fixed with permissions.

I don't know whether the problem lies with the script of DZMM. I think the script creates the union/merger folders as root, which causes the problem. So I just kept my union/merger folders and also fixed those permissions. But maybe letting the script recreate them will fix it. You can test it with a simple mount script to see the difference, of course.

It's sometimes difficult for me to advise, because I'm not using the mount script from DZMM but my own one, so it's easier for me to troubleshoot my own system.

I understand. I will try both options and see. Thanks a lot, I will keep you updated.

-

9 minutes ago, Kaizac said:

Well, we've discussed this a couple of times already in this topic, and it seems there is not one fix for everyone.

What I've done is added to my mount script:

--uid 99

--gid 100

For --umask I use 002 (I think DZMM uses 000, which allows read and write for everyone and which I find too insecure, but that's your own decision).

I've rebooted my server without the mount script active, so just a plain boot without mounting. Then I ran the fix permissions on both my mount_rclone and local folders. Then you can check again whether the permissions of these folders are properly set. If that is the case, you can run the mount script. And then check again.

After I did this once, I never had the issue again.

Cool, I will try that. Thanks a lot.

Would it be faster to just delete the mount folders directly, with no script loaded, and let the script create them again? 🤔

-

52 minutes ago, Kaizac said:

Shouldn't be root, this caused problems. It should be:

user: nobody

group: users

Often this is 99/100.

Here is my folder

What should I do?

thx
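A quick way to check whether a folder matches the 99/100 ownership from the quote, sketched with GNU `stat`. The function names and the example folder paths are assumptions for illustration, not part of any script in this thread.

```shell
#!/bin/bash
# Print "uid:gid" for a path, using GNU stat's numeric owner and group fields.
owner_of() {
  stat -c '%u:%g' "$1"
}

# Succeed when the path is owned by the expected uid:gid (default 99:100).
check_owner() {
  local path="$1" expected="${2:-99:100}"
  local actual
  actual="$(owner_of "$path")"
  if [[ "$actual" == "$expected" ]]; then
    echo "OK    $path ($actual)"
  else
    echo "FIX   $path is $actual, expected $expected"
    return 1
  fi
}

# Example (folder names are assumptions based on the ones mentioned in this thread):
#   for d in /mnt/user/mount_rclone /mnt/user/mount_mergerfs /mnt/user/local; do
#     check_owner "$d"
#   done
```

A folder reported as `0:0` is owned by root, which is the situation described in the quote.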

-

@DZMM Hi

Can you tell me which user your script should create the folders as, root or nobody?

My folders are owned by root and that seems to cause a lot of glitches with the script. Is that normal?

thx

-

Hi guys

Is there a way to incorporate symlinks in the upload script?

thx
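One possible approach, sketched under assumptions: rclone has a `--copy-links` flag (short form `-L`) that follows symlinks and uploads the file they point to, and the upload script already has empty `CommandN` settings for extra flags. The array name `upload_args` is hypothetical, and the command is only printed here. Note that the flag is written as either `-L` or `--copy-links`, not both joined with a slash.

```shell
#!/bin/bash
# Put the extra flag into one of the script's unused CommandN settings.
Command2="--copy-links"

# Source and remote as used in the upload script from this thread.
upload_args=(move "/mnt/user/mount_rclone_upload/gdrive_media_vfs" "gdrive_media_vfs:")

# Append the extra command only when it is non-empty.
if [[ -n "$Command2" ]]; then
  upload_args+=("$Command2")
fi

# Print for review. To actually run it, use: rclone "${upload_args[@]}"
echo "rclone ${upload_args[*]}"
```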

-

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

in Plugins and Apps

Posted

I'm looking into it too, but the Google Drive notification seems to go out only to certain types of accounts. I have 2 accounts with the same setup under different enterprises and have only received 1 email so far.

Sent from my Pixel 2 XL using Tapatalk