
Posts: 450


Everything posted by francrouge

-

Attachments: unraid-smart-20221115-0937.zip, unraid-smart-20221115-0937 (1).zip

-

Hi guys, I'm getting a lot of errors from two HDDs: one in the array (disk 2) and one in parity (parity disk 2). Can anyone tell me if they are dying? The SMART short self-tests pass. Thanks.

Attachments: tower-diagnostics-20221114-0418.zip, unraid-smart-20221115-0937 (1).zip, unraid-smart-20221115-0937.zip
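A short self-test only covers part of the disk, so it can pass on a drive that is developing problems. A common next step is an extended test (`smartctl -t long /dev/sdX`, where `sdX` is a placeholder for the real device) plus a look at the few attributes usually tied to failing media or cabling. The sketch below (the function name `smart_watchlist` is hypothetical) filters `smartctl -A` output down to attributes 5, 187, 197, 198 and 199; attribute IDs and their meaning can vary by vendor.

```bash
#!/usr/bin/env bash
# Sketch: keep only the SMART attributes most often checked when a disk
# throws errors. Reads the output of `smartctl -A /dev/sdX` on stdin.
# Assumption: standard ATA attribute table layout (RAW_VALUE in column 10).
smart_watchlist() {
  awk '
    # Attribute rows start with a numeric ID; the header row ("ID#") is skipped.
    $1 ~ /^[0-9]+$/ && ($1 == 5 || $1 == 187 || $1 == 197 || $1 == 198 || $1 == 199) {
      # Column 2 is the attribute name, column 10 the first token of the raw value.
      printf "%s %s\n", $2, $10
    }
  '
}
```

Usage: `smartctl -A /dev/sdX | smart_watchlist`. Non-zero and rising raw values in 5, 187, 197 or 198 are commonly treated as a warning sign, while a rising 199 (UDMA CRC errors) more often points at a cable or connection than at the disk itself.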


Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Did you find a solution? I'm getting the same thing.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Hi guys, quick question: when you choose the "move" command, is it supposed to keep the folders in the rclone upload share? My script uploads the files together with their folders, but doesn't delete the empty folders locally afterwards. Here is my script:

```bash
#!/bin/bash

######################
### Upload Script ####
######################
### Version 0.95.5 ###
######################

####### EDIT ONLY THESE SETTINGS #######

# INSTRUCTIONS
# 1. Edit the settings below to match your setup
# 2. NOTE: enter RcloneRemoteName WITHOUT ':'
# 3. Optional: Add additional commands or filters
# 4. Optional: Use bind mount settings for potential traffic shaping/monitoring
# 5. Optional: Use service accounts in your upload remote
# 6. Optional: Use backup directory for rclone sync jobs

# REQUIRED SETTINGS
RcloneCommand="move" # choose your rclone command e.g. move, copy, sync
RcloneRemoteName="gdrive_media_vfs" # Name of rclone remote mount WITHOUT ':'.
RcloneUploadRemoteName="gdrive_media_vfs" # If you have a second remote created for uploads put it here. Otherwise use the same remote as RcloneRemoteName.
LocalFilesShare="/mnt/user/mount_rclone_upload" # location of the local files without trailing slash you want to rclone to use
RcloneMountShare="/mnt/user/mount_rclone" # where your rclone mount is located without trailing slash e.g. /mnt/user/mount_rclone
MinimumAge="15m" # sync files suffix ms|s|m|h|d|w|M|y
ModSort="ascending" # "ascending" oldest files first, "descending" newest files first

# Note: Again - remember to NOT use ':' in your remote name above

# Bandwidth limits: specify the desired bandwidth in kBytes/s, or use a suffix b|k|M|G. Or 'off' or '0' for unlimited. The script uses --drive-stop-on-upload-limit which stops the script if the 750GB/day limit is achieved, so you no longer have to slow 'trickle' your files all day if you don't want to e.g. could just do an unlimited job overnight.
BWLimit1Time="01:00"
BWLimit1="10M"
BWLimit2Time="08:00"
BWLimit2="10M"
BWLimit3Time="16:00"
BWLimit3="10M"

# OPTIONAL SETTINGS

# Add name to upload job
JobName="_daily_upload" # Adds custom string to end of checker file. Useful if you're running multiple jobs against the same remote.

# Add extra commands or filters
Command1="--exclude downloads/**"
Command2=""
Command3=""
Command4=""
Command5=""
Command6=""
Command7=""
Command8=""

# Bind the mount to an IP address
CreateBindMount="N" # Y/N. Choose whether or not to bind traffic to a network adapter.
RCloneMountIP="192.168.1.253" # Choose IP to bind upload to.
NetworkAdapter="eth0" # choose your network adapter. eth0 recommended.
VirtualIPNumber="1" # creates eth0:x e.g. eth0:1.

# Use Service Accounts. Instructions: https://github.com/xyou365/AutoRclone
UseServiceAccountUpload="N" # Y/N. Choose whether to use Service Accounts.
ServiceAccountDirectory="/mnt/user/appdata/other/rclone/service_accounts" # Path to your Service Account's .json files.
ServiceAccountFile="sa_gdrive_upload" # Enter characters before counter in your json files e.g. for sa_gdrive_upload1.json -->sa_gdrive_upload100.json, enter "sa_gdrive_upload".
CountServiceAccounts="5" # Integer number of service accounts to use.

# Is this a backup job
BackupJob="N" # Y/N. Syncs or Copies files from LocalFilesLocation to BackupRemoteLocation, rather than moving from LocalFilesLocation/RcloneRemoteName
BackupRemoteLocation="backup" # choose location on mount for backup files
BackupRemoteDeletedLocation="backup_deleted" # choose location on mount for deleted sync files
BackupRetention="90d" # How long to keep deleted sync files suffix ms|s|m|h|d|w|M|y

####### END SETTINGS #######

###############################################################################
#####   DO NOT EDIT BELOW THIS LINE UNLESS YOU KNOW WHAT YOU ARE DOING   #####
###############################################################################

####### Preparing mount location variables #######
if [[ $BackupJob == 'Y' ]]; then
    LocalFilesLocation="$LocalFilesShare"
    echo "$(date "+%d.%m.%Y %T") INFO: *** Backup selected. Files will be copied or synced from ${LocalFilesLocation} for ${RcloneUploadRemoteName} ***"
else
    LocalFilesLocation="$LocalFilesShare/$RcloneRemoteName"
    echo "$(date "+%d.%m.%Y %T") INFO: *** Rclone move selected. Files will be moved from ${LocalFilesLocation} for ${RcloneUploadRemoteName} ***"
fi

RcloneMountLocation="$RcloneMountShare/$RcloneRemoteName" # Location of rclone mount

####### create directory for script files #######
mkdir -p /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName # for script files

####### Check if script already running ##########
echo "$(date "+%d.%m.%Y %T") INFO: *** Starting rclone_upload script for ${RcloneUploadRemoteName} ***"
if [[ -f "/mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: Exiting as script already running."
    exit
else
    echo "$(date "+%d.%m.%Y %T") INFO: Script not running - proceeding."
    touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName
fi

####### check if rclone installed ##########
echo "$(date "+%d.%m.%Y %T") INFO: Checking if rclone installed successfully."
if [[ -f "$RcloneMountLocation/mountcheck" ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: rclone installed successfully - proceeding with upload."
else
    echo "$(date "+%d.%m.%Y %T") INFO: rclone not installed - will try again later."
    rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName
    exit
fi

####### Rotating serviceaccount.json file if using Service Accounts #######
if [[ $UseServiceAccountUpload == 'Y' ]]; then
    cd /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/
    CounterNumber=$(find -name 'counter*' | cut -c 11,12)
    CounterCheck="1"
    if [[ "$CounterNumber" -ge "$CounterCheck" ]]; then
        echo "$(date "+%d.%m.%Y %T") INFO: Counter file found for ${RcloneUploadRemoteName}."
    else
        echo "$(date "+%d.%m.%Y %T") INFO: No counter file found for ${RcloneUploadRemoteName}. Creating counter_1."
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_1
        CounterNumber="1"
    fi
    ServiceAccount="--drive-service-account-file=$ServiceAccountDirectory/$ServiceAccountFile$CounterNumber.json"
    echo "$(date "+%d.%m.%Y %T") INFO: Adjusted service_account_file for upload remote ${RcloneUploadRemoteName} to ${ServiceAccountFile}${CounterNumber}.json based on counter ${CounterNumber}."
else
    echo "$(date "+%d.%m.%Y %T") INFO: Uploading using upload remote ${RcloneUploadRemoteName}"
    ServiceAccount=""
fi

####### Upload files ##########

# Check bind option
if [[ $CreateBindMount == 'Y' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Checking if IP address ${RCloneMountIP} already created for upload to remote ${RcloneUploadRemoteName}"
    ping -q -c2 $RCloneMountIP > /dev/null # -q quiet, -c number of pings to perform
    if [ $? -eq 0 ]; then # ping returns exit status 0 if successful
        echo "$(date "+%d.%m.%Y %T") INFO: *** IP address ${RCloneMountIP} already created for upload to remote ${RcloneUploadRemoteName}"
    else
        echo "$(date "+%d.%m.%Y %T") INFO: *** Creating IP address ${RCloneMountIP} for upload to remote ${RcloneUploadRemoteName}"
        ip addr add $RCloneMountIP/24 dev $NetworkAdapter label $NetworkAdapter:$VirtualIPNumber
    fi
else
    RCloneMountIP=""
fi

# Remove --delete-empty-src-dirs if rclone sync or copy
if [[ $RcloneCommand == 'move' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Using rclone move - will add --delete-empty-src-dirs to upload."
    DeleteEmpty="--delete-empty-src-dirs "
else
    echo "$(date "+%d.%m.%Y %T") INFO: *** Not using rclone move - will remove --delete-empty-src-dirs to upload."
    DeleteEmpty=""
fi

# Check --backup-directory
if [[ $BackupJob == 'Y' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Will backup to ${BackupRemoteLocation} and use ${BackupRemoteDeletedLocation} as --backup-directory with ${BackupRetention} retention for ${RcloneUploadRemoteName}."
    LocalFilesLocation="$LocalFilesShare"
    BackupDir="--backup-dir $RcloneUploadRemoteName:$BackupRemoteDeletedLocation"
else
    BackupRemoteLocation=""
    BackupRemoteDeletedLocation=""
    BackupRetention=""
    BackupDir=""
fi

# process files (exclude patterns are quoted so the shell passes them to rclone unexpanded)
rclone $RcloneCommand $LocalFilesLocation $RcloneUploadRemoteName:$BackupRemoteLocation $ServiceAccount $BackupDir \
    --user-agent="$RcloneUploadRemoteName" \
    -vv \
    --buffer-size 512M \
    --drive-chunk-size 512M \
    --max-transfer 725G --tpslimit 3 \
    --checkers 3 \
    --transfers 3 \
    --order-by modtime,$ModSort \
    --min-age $MinimumAge \
    $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
    --exclude "*fuse_hidden*" \
    --exclude "*_HIDDEN" \
    --exclude ".recycle**" \
    --exclude ".Recycle.Bin/**" \
    --exclude "*.backup~*" \
    --exclude "*.partial~*" \
    --drive-stop-on-upload-limit \
    --bwlimit "${BWLimit1Time},${BWLimit1} ${BWLimit2Time},${BWLimit2} ${BWLimit3Time},${BWLimit3}" \
    --bind=$RCloneMountIP $DeleteEmpty

# Delete old files from mount
if [[ $BackupJob == 'Y' ]]; then
    echo "$(date "+%d.%m.%Y %T") INFO: *** Removing files older than ${BackupRetention} from $BackupRemoteLocation for ${RcloneUploadRemoteName}."
    rclone delete --min-age $BackupRetention $RcloneUploadRemoteName:$BackupRemoteDeletedLocation
fi

####### Remove Control Files ##########

# update counter and remove other control files
if [[ $UseServiceAccountUpload == 'Y' ]]; then
    if [[ "$CounterNumber" == "$CountServiceAccounts" ]]; then
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_*
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_1
        echo "$(date "+%d.%m.%Y %T") INFO: Final counter used - resetting loop and created counter_1."
    else
        rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_*
        CounterNumber=$((CounterNumber+1))
        touch /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/counter_$CounterNumber
        echo "$(date "+%d.%m.%Y %T") INFO: Created counter_${CounterNumber} for next upload run."
    fi
else
    echo "$(date "+%d.%m.%Y %T") INFO: Not utilising service accounts."
fi

# remove dummy file
rm /mnt/user/appdata/other/rclone/remotes/$RcloneUploadRemoteName/upload_running$JobName
echo "$(date "+%d.%m.%Y %T") INFO: Script complete"

exit
```
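For reference, the upload script above only adds `--delete-empty-src-dirs` when `RcloneCommand` is `move`. That flag removes source folders that are empty after the move; a folder that still holds a file skipped by a filter (such as `--exclude downloads/**`) or by `--min-age` is not empty and stays. If a separate cleanup pass is wanted, one option is a plain `find` over the local upload folder. A minimal sketch, assuming nothing else (a download client, for example) relies on those empty folders existing; the function name `prune_empty_dirs` is hypothetical:

```bash
#!/usr/bin/env bash
# Sketch: remove empty sub-folders left behind under a local upload folder.
# Assumption: no other application expects those empty folders to exist.
prune_empty_dirs() {
  local root=$1
  # -mindepth 1 keeps the root folder itself; -delete implies -depth, so
  # nested empty folders are removed bottom-up in a single pass.
  find "$root" -mindepth 1 -type d -empty -delete
}
```

Usage, with the paths from the script's settings (`LocalFilesShare`/`RcloneRemoteName`): `prune_empty_dirs /mnt/user/mount_rclone_upload/gdrive_media_vfs`. Folders that still contain any file are left untouched.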

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

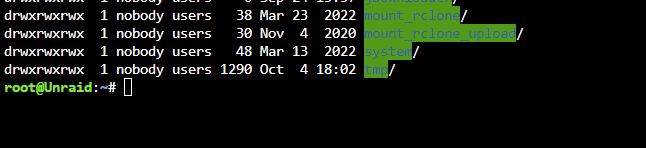


Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

#1 Does anyone have hints for getting files to start playing faster? Trying to play a 1080p file at 10 Mbit/s takes about a minute. My mount command:

```bash
# create rclone mount
rclone mount \
    $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
    --allow-other \
    --umask 000 \
    --uid 99 \
    --gid 100 \
    --dir-cache-time $RcloneMountDirCacheTime \
    --attr-timeout $RcloneMountDirCacheTime \
    --log-level INFO \
    --poll-interval 10s \
    --cache-dir=$RcloneCacheShare/cache/$RcloneRemoteName \
    --drive-pacer-min-sleep 10ms \
    --drive-pacer-burst 1000 \
    --vfs-cache-mode full \
    --vfs-cache-max-size $RcloneCacheMaxSize \
    --vfs-cache-max-age $RcloneCacheMaxAge \
    --vfs-read-ahead 500m \
    --bind=$RCloneMountIP \
    $RcloneRemoteName: $RcloneMountLocation &
```

#2 Also, do you know if it's possible to direct play through Plex, or is it always transcoding? 🤔
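For scale: a 10 Mbit/s stream needs about 1.25 MB per second of playback. The helper below (the name `buffer_seconds` is hypothetical) converts a buffer size in MiB, such as the `500m` given to `--vfs-read-ahead` (rclone reads the `M` size suffix as MiB), into seconds of video at a given average bitrate. It is plain arithmetic and says nothing about how rclone actually schedules its reads.

```bash
#!/usr/bin/env bash
# Sketch: seconds of video held by a buffer of a given size at a given
# average bitrate. Integer arithmetic, result rounded down.
#   $1 = buffer size in MiB (e.g. 500 for --vfs-read-ahead 500m)
#   $2 = video bitrate in Mbit/s (decimal megabits)
buffer_seconds() {
  local mib=$1 mbit=$2
  # MiB -> bits, divided by bits per second
  echo $(( mib * 1024 * 1024 * 8 / (mbit * 1000 * 1000) ))
}
```

`buffer_seconds 500 10` prints 419, i.e. a 500 MiB read-ahead corresponds to roughly seven minutes of a 10 Mbit/s file.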

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Unraid 6.11.1, rclone 1.60.0-beta.6481.6654b6611

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

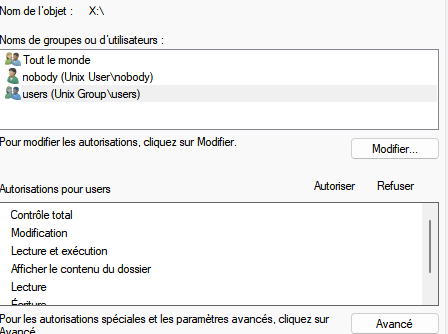

I just tried it, and it doesn't seem to help. I can create files and folders, but I can't delete or rename them, and only in my mount_rclone folder. Thanks.

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps


Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Hi all, another question about the upload script. I'm now getting this:

```
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 90.241631ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 143.701353ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 222.186098ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 305.125972ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 402.588316ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 499.64329ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 589.545348ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 676.822802ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 680.141577ms
2022/10/06 05:21:57 DEBUG : pacer: Reducing sleep to 694.895337ms
2022/10/06 05:21:58 DEBUG : pacer: Reducing sleep to 307.907209ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 0s
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 78.586386ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 159.649286ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 198.168036ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 245.411694ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 330.517403ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 429.05441ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 523.306138ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 609.645869ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 690.942129ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 681.587878ms
2022/10/06 05:21:59 DEBUG : pacer: Reducing sleep to 639.166177ms
2022/10/06 05:22:00 DEBUG : pacer: Reducing sleep to 66.904708ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 0s
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 87.721382ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 187.616721ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 186.994169ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 285.041735ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 352.336246ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 449.015128ms
2022/10/06 05:22:01 DEBUG : pacer: Reducing sleep to 547.412525ms
2022/10/06 05:22:01 INFO : Transferred: 0 B / 0 B, -, 0 B/s, ETA -
Elapsed time: 1h22m0.4s
```

Do I need to worry? I've been seeing this for the last week. I've attached my upload script to this post. Thanks, all.

Attachment: upload.txt
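These DEBUG lines come from rclone's pacer, which spaces out Google Drive API calls; they show up because the upload script runs rclone with `-vv` (debug level). The stats line reports `0 B / 0 B` transferred, i.e. nothing had been uploaded at that point in the run. To condense a long saved log, a small awk filter like the one below (the function name `rclone_log_summary` is hypothetical) counts the pacer lines and keeps the most recent `Transferred:` line:

```bash
#!/usr/bin/env bash
# Sketch: summarise a verbose rclone log read on stdin.
# Prints how many "pacer: Reducing sleep" lines it contains and the most
# recent line mentioning "Transferred:" (if any).
rclone_log_summary() {
  awk '
    /pacer: Reducing sleep to/ { pacer++ }
    /Transferred:/             { last = $0 }
    END {
      printf "pacer lines: %d\n", pacer
      if (last != "") print "last stats: " last
    }
  '
}
```

Usage: `rclone_log_summary < upload.log`, where `upload.log` stands for wherever the script's output was saved.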

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Yes, Unraid 6.11 and Windows 11. I'll check, thanks a lot!

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

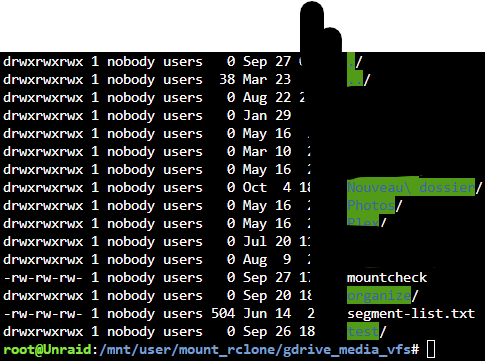

Hi, yes: in Krusader I have no problem, but on Windows over the network shares it's not working anymore. I can't edit, rename, delete, etc. on the Gdrive mount. On Windows, my local shares are OK. I'll also add my mount and upload scripts; maybe I'm missing something. Do you think I should try the new permissions feature? Thanks.

Attachments: upload.txt, mount.txt, unraid-diagnostics-20221004-1818.zip

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Is anyone else having permission issues? I can't edit any file or folder from Windows over the network share.
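The mount commands posted in this thread pass `--umask 000` to `rclone mount`. As we understand the rclone mount documentation, the permission bits it reports start from `--file-perms` (default 0666) and `--dir-perms` (default 0777) with the umask masked off, so `--umask 000` leaves files at 666 and folders at 777. The sketch below only does that bit arithmetic (`mode & ~umask`); the helper name `effective_mode` is made up for illustration. Note that on POSIX filesystems, renaming or deleting an entry depends on write and execute permission on its parent folder, not on the file's own mode bits.

```bash
#!/usr/bin/env bash
# Sketch: permission bits left after a umask is applied (mode & ~umask).
# Arguments are octal strings with a leading 0 (e.g. 0666 0000) so that
# bash arithmetic treats them as octal numbers.
effective_mode() {
  local perms=$1 mask=$2
  # Clear every bit set in the mask, then print the result in octal.
  printf '%o\n' $(( perms & ~mask ))
}
```

`effective_mode 0666 0000` prints `666`, while a more common `effective_mode 0777 0022` prints `755`.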

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Hi guys, I'm having a problem with file permissions inside my share. Every folder that was created a long time ago (years ago) is fine, but every new one I create at the root of my main folder is blocked: files only get 666 permissions and I can't rename them, etc. Can anyone help me, please? Unraid 6.10.3. My mount command:

```bash
# create rclone mount
rclone mount \
    $Command1 $Command2 $Command3 $Command4 $Command5 $Command6 $Command7 $Command8 \
    --allow-other \
    --umask 000 \
    --dir-cache-time $RcloneMountDirCacheTime \
    --attr-timeout $RcloneMountDirCacheTime \
    --log-level INFO \
    --poll-interval 10s \
    --cache-dir=$RcloneCacheShare/cache/$RcloneRemoteName \
    --drive-pacer-min-sleep 10ms \
    --drive-pacer-burst 1000 \
    --vfs-cache-mode full \
    --vfs-cache-max-size $RcloneCacheMaxSize \
    --vfs-cache-max-age $RcloneCacheMaxAge \
    --vfs-read-ahead 500m \
    --bind=$RCloneMountIP \
    $RcloneRemoteName: $RcloneMountLocation &
```

Thanks.

-

I upgraded my Unraid to 6.11 this morning, and I keep getting "array undefined". I can't get the diagnostics tool to work either.


Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Hi DZMM, just to be sure: you transfer the files from your seedbox directly to Gdrive to encrypt them? Thanks a lot!

[Support] Linuxserver.io - Plex Media Server

francrouge replied to linuxserver.io's topic in Docker Containers

Mmm, interesting. So I've set mine to "never"; before, it was all on scheduled tasks. I'll check whether it helps. What you're telling me about the API ban also makes sense. Thanks!

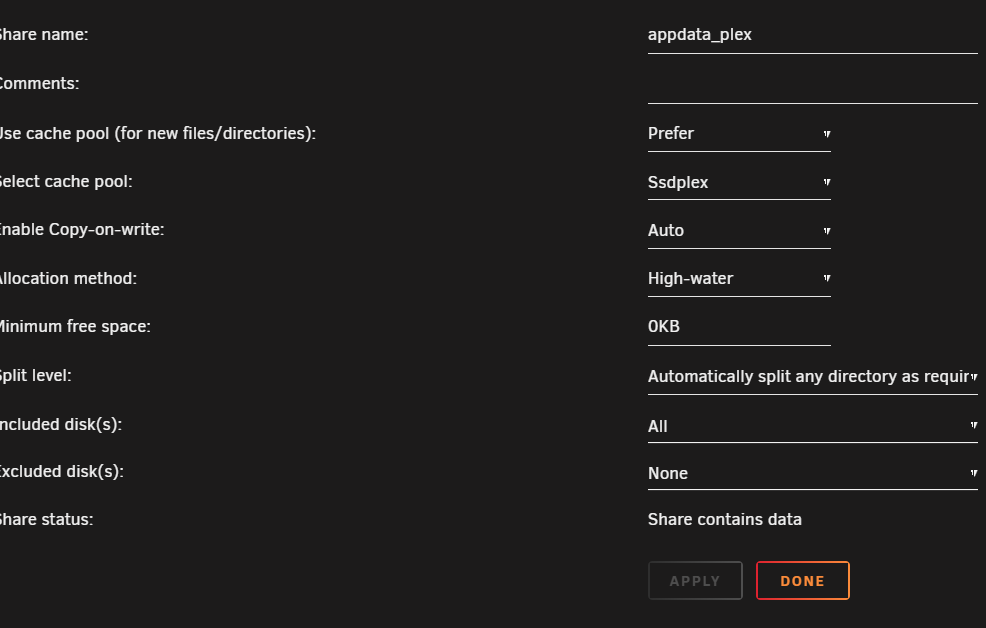

[Support] Linuxserver.io - Plex Media Server

francrouge replied to linuxserver.io's topic in Docker Containers

Hi guys, I need help with "Sqlite3: Sleeping for 200ms to retry busy DB". I currently have an SSD just for appdata. The appdata folder seems to be creating a share with the folder. Any idea how to fix this? I've already run Optimize Library, etc. The log keeps repeating this line over and over, and ends with a libusb error:

```
Sqlite3: Sleeping for 200ms to retry busy DB.
Sqlite3: Sleeping for 200ms to retry busy DB.
Sqlite3: Sleeping for 200ms to retry busy DB.
Critical: libusb_init failed
```

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Do you have any documentation on service accounts? I found some, but it's not up to date. Same question for team drives. Thanks!
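The upload script quoted earlier in this thread rotates service accounts like this: each run passes `--drive-service-account-file=<ServiceAccountDirectory>/<ServiceAccountFile><counter>.json` to rclone, then replaces its `counter_N` control file with the next number, wrapping back to 1 after `CountServiceAccounts`. A minimal sketch of just that rotation arithmetic (function names `next_counter` and `sa_file` are hypothetical):

```bash
#!/usr/bin/env bash
# Sketch of the service-account rotation done by the upload script:
# a run uses <dir>/<prefix><counter>.json, then the counter advances,
# wrapping back to 1 after the last account.
next_counter() {
  local counter=$1 count=$2
  if (( counter >= count )); then
    echo 1
  else
    echo $(( counter + 1 ))
  fi
}

# Build the JSON path the script hands to --drive-service-account-file.
sa_file() {
  local dir=$1 prefix=$2 counter=$3
  printf '%s/%s%s.json\n' "$dir" "$prefix" "$counter"
}
```

`next_counter` uses `>=` instead of the script's string equality, so a counter that somehow exceeds the configured count also wraps to 1.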

Guide: How To Use Rclone To Mount Cloud Drives And Play Files

francrouge replied to DZMM's topic in Plugins and Apps

Hi guys, I was wondering whether any of you map your download Docker container directly onto Gdrive and seed from it. Questions:

#1 Do you encrypt the files?

#2 Do you use hardlinks?

Any tutorial or additional info on how to use it? Thanks.

-

Hi guys, I'm on the latest version available, and my downloads keep reaching 99% and then stalling, but if I check the downloaded percentage of the file, it's at 0%. I've never experienced anything like this. Any ideas? Thanks.

-

I just asked the question; now waiting for someone to answer. Let's see.

-

Hi guys, I'm having an issue with the Docker container. I've tried stopping it and rebooting; nothing works:

```
2022-06-21 11:50:33,058 DEBG fd 11 closed, stopped monitoring <POutputDispatcher at 23442495733232 for <Subprocess at 23442495732560 with name start-script in state RUNNING> (stdout)>
2022-06-21 11:50:33,058 DEBG fd 15 closed, stopped monitoring <POutputDispatcher at 23442495733280 for <Subprocess at 23442495732560 with name start-script in state RUNNING> (stderr)>
2022-06-21 11:50:33,058 INFO exited: start-script (exit status 1; not expected)
2022-06-21 11:50:33,058 DEBG received SIGCHLD indicating a child quit
```

Any ideas? The web GUI is not working. Thanks.
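The log format matches supervisord, which this container appears to use as its process manager: `exited: start-script (exit status 1; not expected)` means the container's start script ended with a failure status, so any message explaining why would appear earlier in the same log. A small filter (the function name `supervisor_exits` is hypothetical) to pull those exit lines out of a long log:

```bash
#!/usr/bin/env bash
# Sketch: extract "<program> <exit status>" from supervisord "exited:" lines
# in a container log read on stdin. Lines that don't match are dropped.
supervisor_exits() {
  sed -n 's/.* INFO exited: \([^ ]*\) (exit status \([0-9]*\);.*/\1 \2/p'
}
```

Usage: `docker logs <container-name> 2>&1 | supervisor_exits`, where `<container-name>` is a placeholder for the actual container.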


Cannot connect to unRaid shares from Windows 10

francrouge replied to StrandedPirate's topic in General Support

I will check your link. And no, no computers are able to access it.

Cannot connect to unRaid shares from Windows 10

francrouge replied to StrandedPirate's topic in General Support

I added the info, sorry.