Niklas

Posts: 323

Posts posted by Niklas

4 minutes ago, bigbangus said:

Anybody? I'm new to dockers and just don't understand why duplicati is now plagued with access restrictions.

You could try setting both PUID and PGID to 0.

Please note that this runs the container as root and gives it full access to the file system (everything).

-

1 minute ago, L0rdRaiden said:

Can someone help me with this? how can I backup UnraidOS USB (/boot)?

Set both "Container Variable: PUID" and "Container Variable: PGID" to 0.

-

latest is v3 for me. I changed from preview to latest a couple of minutes after the docker build and page was updated. Sonarr was on version 3.0.5.1143 for preview and upgraded to 3.0.5.1144 when changed to latest. I did not remove the tag, I changed linuxserver/sonarr:preview to linuxserver/sonarr:latest and updated.

-

Strange. I switched from preview to latest without any problems.

-

It's fixed now. Update the container.

-

On 3/3/2021 at 12:24 PM, Squid said:

Doesn't for me. The "du" of /var/lib/docker under both an image and as a folder are roughly the same

There must be some problem here.

My /mnt/cache/system/docker/dockerdata (docker in a folder) is about 72-79GB if I check with du. My old docker.img had a max size of 30GB and was nearly half full.

If I check using the Unraid GUI and click Calculate for the system share, I get about 80GB used, but when I use df -h I see that my cache drive only has 19GB used.

-

20 minutes ago, maxstevens2 said:

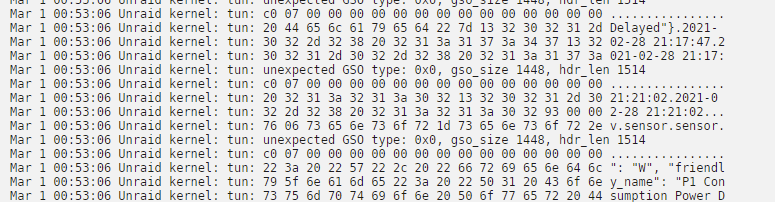

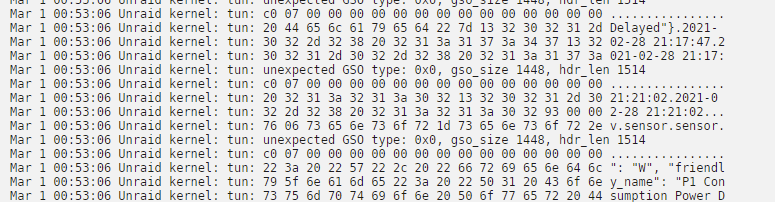

Maybe a bit off topic, but do you use dockers for Home Assistant MQTT @Niklas? My power usage is now literally showing in a kind of encrypted style in the log. lol

Only when I am not on the website it won't show anything..

Not using MQTT (yet).

I think it has something to do with networking. I don't know if this bug report could help

-

16 minutes ago, Squid said:

Rather than try and save the docker.img, just recreate it. Less hassles.

Also, rather than using Add Container to add them back in one at a time, it is far easier to go to Apps, Previous Apps, check off what you want, then hit Install.

Far easier, yes. I knew I had that information somewhere locked away in my brain. Thanks for pointing that out!

I just moved from docker.img to directory and added my 20 containers back using the add container button. Wasted some time there.

-

8 minutes ago, maxstevens2 said:

I might have found out that Home Assistant doesn't actually 'abuse' the docker image file as its storage location. So I am more hopeful now. Will now shut dockers down and back up the image. Then I need to have 1 docker online at 00:00 CET. After that, upgrade time. I'll keep you updated lol.

Thanks for the help so far though!!

Also running Home Assistant. The most abuse I saw was writing to home-assistant_v2.db (recorder/history). I moved home-assistant_v2.db from the btrfs cache to my unassigned HDD in 6.8.3. With the multiple cache pool support in 6.9, I removed unassigned devices and added the HDD as a second cache pool formatted as XFS. It is just for stuff that writes lots of data, to save some SSD life. No critical stuff.

-

1 minute ago, maxstevens2 said:

Here it's now 23:29. So I am trying to get this fixed before Monday morning. I will update Unraid asap.

I will of course back up the image and such.

Is there a way to transport/move the dockers from the img to the 'new location'? Or is what you said the way to get the docker back with its settings?

Same time zone as me.

Yes, that is what I said. You can try the "add container" button now, before upgrading, and you will probably see what I mean. Your dockers with their settings will be in the "Template:" dropdown list (under "User templates").

-

2 minutes ago, maxstevens2 said:

Has 6.9 been stable for you? Been waiting for the new big release, but if it stable and the docker can be fixed, that would be perfect!

57 days uptime without any problems. I just moved from docker.img to a directory about three hours ago, and it is running fine.

-

5 minutes ago, jay010101 said:

Hey guys. I had this issue and moved to the newest beta and it was fixed. You can also use the command below, but it's not persistent after boot.

mount -o remount -o space_cache=v2 /mnt/cache

I hope this helps

As I understand it, that command is not needed anymore with 6.9-rc2. It only mitigates some of the write amplification, but it's a start.

-

6 minutes ago, maxstevens2 said:

I do worry a bit about the solution as this guy 'lost' his dockers. Which would in my case mean I lose most of the Home Assistant configuration (not configuration files, but things like built-in integrations, internal user settings etc.)

You don't need the solution mentioned there if running 6.9-rc2. In 6.9 you can select a dir to store your docker data instead of the docker.img (in the gui).

You will lose all of your dockers but you can easily add them back with all your settings intact by using the "ADD CONTAINER" button. Select your docker from the "Template:" dropdown box and click apply.

-

10 minutes ago, maxstevens2 said:

It's been a long time since the start of this post. I am actually having the same issue right here. While checking the report, I scrolled through the pages and found only 1 solution, from @S1dney (listed on page 2). This heavy writing started when I started using the MariaDB docker, but I had seen before that the cache has always been very busy.

My SSDs are running RAID 5 unencrypted with a total of 300 million writes in 41 days (I started using the MariaDB docker 5 days ago). Any suggestion how to fix this? Or is the method listed on page 2 still the workaround to go with?

Look in this report thread:

-

16 hours ago, saarg said:

The rebase was forced by not being able to compile domoticz anymore on alpine. Unfortunately we can't do anything with custom scripts.

If the packages you add would benefit others also, you can make a docker mod so more people can benefit from it. There should be more info about docker mods in the readme on GitHub.

I will add a script that fixes permissions on USB devices in the container. If anyone has devices named other than ttyACMx, then please tell me so I can add them to the script.

I also have one ttyUSB0.

Edit: And three ttyACMx.

-

1 minute ago, dariusz65 said:

My domoticz was updated to 2020.2 today and it broke my zwave. I'm getting 'Failed to open serial port /dev/ttyACM0'. This was working fine until this morning update.

Same here. Broke all connections to my USB devices. You could try doing chmod 777 /dev/ttyACM0 in the server terminal and restart Domoticz. If it works, add the command to your go file.
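A minimal sketch of the "add the command to your go file" step, assuming the go file lives at /boot/config/go (the path used elsewhere in these posts). The helper name add_to_go_file is made up for illustration. It appends the line only if it is not already there, so running it twice does not duplicate the command.

```shell
# Hypothetical helper: append a command to the go file once.
# $1 = command line to add
# $2 = go file path (assumption: defaults to /boot/config/go)
add_to_go_file() {
    line="$1"
    go_file="${2:-/boot/config/go}"
    # -x matches whole lines only, -F treats the command as a fixed string
    if ! grep -qxF -- "$line" "$go_file" 2>/dev/null; then
        printf '%s\n' "$line" >> "$go_file"
    fi
}
# Example: add_to_go_file 'chmod 777 /dev/ttyACM0'
```

The go file runs at boot, so this probably only helps if the device is already plugged in at that point.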

-

On 10/17/2020 at 8:44 PM, Ben de Vette said:

How can I update to the latest version of Domoticz?

The built-in update functionality doesn't work.

Anybody have any ideas?

Wondering the same thing.

The version is getting quite old.

-

Just want to say that I used this script and it worked as expected. Thanks!

-

I add some trackers using "Automatically add these trackers to new downloads", but both the setting and the field reset when the container restarts.

In qBittorrent.conf:

Bittorrent\AddTrackers=true

Bittorrent\TrackersList=udp://tracker.example.com:669/announce\nudp://tracker2.example.com:699/announce

After container restart:

Bittorrent\AddTrackers=false

Bittorrent\TrackersList=

Edit:

This could of course be a problem with qBittorrent itself. Hmm.

-

I would guess this container is missing the required dependencies to make the built in version work. Also guessing it has something to do with Alpine.

-

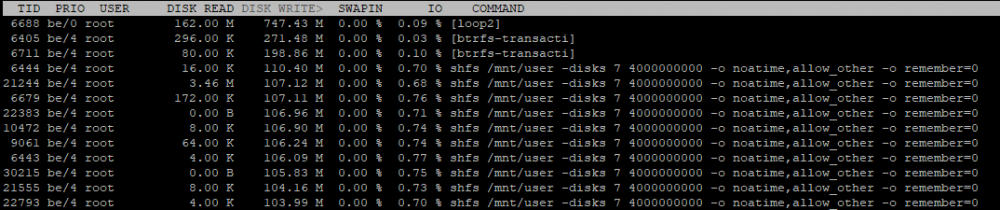

1 hour ago, S1dney said:

You're saying you're moving the mariadb location between the array, the cache location (/mnt/user/appdata) and directly onto the mountpoint (/mnt/cache).

The screenshots seem to point out that the loop device your docker image is using is still the source of a lot of writes though (loop2)?

Yes. I tried three different locations: /mnt/cache/appdata, /mnt/user/appdata (set to cache only) and /mnt/user/arraydata (array only). The first two locations generate that crazy 12-20GB/h. On the array, there was 10x+ less writing.

The loop device also does some writing that I find strange, yes. I wrote about this before noticing the high mariadb usage.

I will read your bug report and answers.

Edit: this is just from keeping an eye on mariadb specifically. Other containers writing to the appdata dir will probably also generate lots of waste data, including the loopback docker.img.

-

1 minute ago, S1dney said:

You're saying you're moving the mariadb location between the array, the cache location (/mnt/user/appdata) and directly onto the mountpoint (/mnt/cache).

The screenshots seem to point out that the loop device your docker image is using is still the source of a lot of writes though (loop2)?

One way to avoid this behavior would be to have docker mount on the cache directly, bypassing the loop device approach.

I had similar issues and I'm running directly on the BTRFS cache now for quite some time. Still really happy with it.

I wrote about my approach in the bug report I did here:

Note that in order to have it working on boot automatically I modified the start_docker() function and copied the entire /etc/rc.d/rc.docker file to /boot/config/docker-service-mod/rc.docker. My go file copies that back over the original rc.docker file so that when the docker daemon is started, the script sets up docker to use the cache directly.

Haven't got any issues so far 🙂

My /boot/config/go file looks like this now (just the cp command to rc.docker is relevant here, the other lines before are for hardware acceleration on Plex)

    #!/bin/bash
    # Load the i915 driver and set the right permissions on the Quick Sync device so Plex can use it
    modprobe i915
    chmod -R 777 /dev/dri
    # Put the modified docker service file over the original one to make it not use the docker.img
    cp /boot/config/docker-service-mod/rc.docker /etc/rc.d/rc.docker
    # Start the Management Utility
    /usr/local/sbin/emhttp &

Cheers.

loop2 is the mounted docker.img, right? I have it pointed to /mnt/cache/system/docker/docker.img

What did you change in your rc.docker?

-

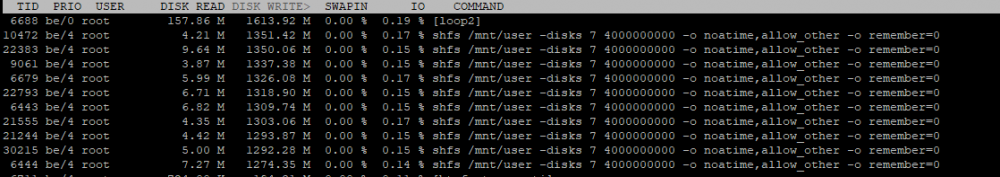

Running the MariaDB docker pointed to /mnt/cache/appdata/mariadb or /mnt/user/appdata/mariadb (Use cache: Only) generates LOTS of writes to the cache drive(s), between 15 and 20GB/h. iotop shows almost all of that writing coming from mariadb. After moving the databases to the array, the writes go down a lot (measured with "iotop -ao"). I use MariaDB for light use of Nextcloud and Home Assistant. Nothing else.

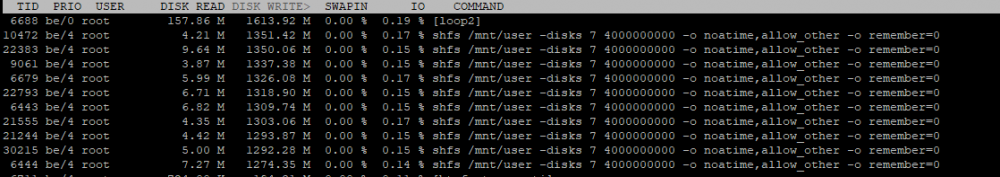

This is iotop for an hour with mariadb databases on cache drive. /mnt/cache/appdata or /mnt/user/appdata with cache only:

When /mnt/cache/appdata is used, the shfs-processes will show as mysql(d?). Missing screenshot.

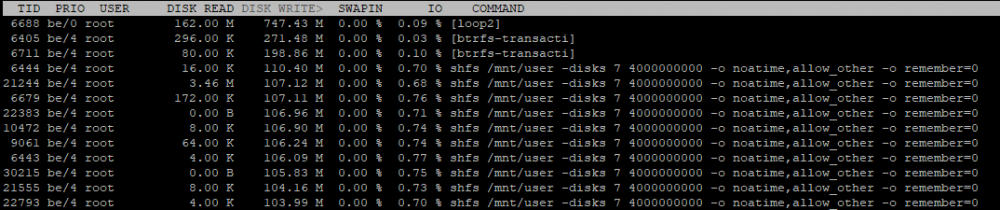

This is iotop for about an hour with the databases on the array, /mnt/user/arraydata:

Still a bit much (given my light usage) but nowhere near the writing when on cache.

I don't know if this is a bug in Unraid, btrfs or something else, but I will keep my databases on the array to save some SSD life. I will lose speed but, as I said, this is with very light use of mariadb.

I checked three different ways to enter the path to the location for the databases (/config) and let each sit for an hour, with a freshly started iotop for each path. To calculate data written, I checked and compared the SMART value "233 Lifetime wts to flsh GB" for the SSD(s). Running mirrored drives. I guess other stuff writing to the cache drive, or to a share with cache set to Only, will also have unnecessarily high writes.

Sorry for my rambling. I get like that when I'm interested in a specific area.

Not a native English speaker. Please just ask if anything is unclear.

Edit: My docs

On /mnt/cache/appdata/mariadb (direct cache drive)

2020-02-08, 22:02-23:04. 15 (!) GB written.

On /mnt/user/arraydata/mariadb (user share on array only)

2020-02-08, 23:04-00:02. 2 GB written.

On /mnt/user/appdata/mariadb (Use cache: Only)

2020-02-09, 00:02-01:02. 22 GB (!) written.

Just ran this again to really see the difference, and look at it.

On /mnt/user/arraydata/mariadb (array only, spinning rust)

2020-02-09, 01:02-02:02. 4 GB written.
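The runs are not all exactly an hour long, so it may help to normalize the figures to GB per hour. A rough sketch, assuming the SMART counter is read before and after each run. Both function names are made up, and the device path passed to smartctl is a placeholder. smartctl -A prints the raw value in the tenth column of the attribute table.

```shell
# Hypothetical helper: print the raw value of SMART attribute 233
# ("Lifetime wts to flsh GB" on the SSDs in this post).
# $1 = device, e.g. /dev/sdX (placeholder)
read_attr_233() {
    smartctl -A "$1" | awk '$1 == 233 { print $10 }'
}

# Hypothetical helper: GB written per hour.
# $1 = counter before (GB), $2 = counter after (GB), $3 = elapsed minutes
gb_per_hour() {
    awk -v before="$1" -v after="$2" -v minutes="$3" \
        'BEGIN { printf "%.1f\n", (after - before) * 60 / minutes }'
}
```

For the first run (15 GB between 22:02 and 23:04, so 62 minutes), `gb_per_hour 0 15 62` prints 14.5.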

-

On 1/29/2020 at 4:03 AM, Moussekateer said:

Hi there,

I've been experiencing an issue with backing up my unraid flash drive since updating to 6.8.1 from 6.7.x. In my Docker config for this container I have `/boot` mapped to the container path `/flash` and previously this worked fine with Duplicati able to access the entire contents of the USB stick and back them up. But now Duplicati is showing signs of being unable to access the path, with backups failing with a "[Warning-Duplicati.Library.Main.Operation.Backup.FileEnumerationProcess-FileAccessError]: Error reported while accessing file: /flash/" message.

Is there a setting I need to change or something?

8 minutes ago, mwells said:

I'm having the same issue. Is there a fix for this?

Change both PUID and PGID to 0 in the settings for the container.
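On Unraid these values are set in the container template, but the equivalent docker run call may make it clearer what changes. This is a sketch only: the container name, the appdata path and the port mapping are assumptions, and the /boot to /flash mapping is the one from the quoted post.

```shell
# Sketch: start Duplicati with PUID/PGID 0 so it runs as root and can read /boot.
# Assumptions: container name, /config host path and port mapping.
start_duplicati_as_root() {
    docker run -d \
        --name duplicati \
        -e PUID=0 \
        -e PGID=0 \
        -v /boot:/flash \
        -v /mnt/user/appdata/duplicati:/config \
        -p 8200:8200 \
        linuxserver/duplicati
}
```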

-

7 minutes ago, Vesko said:

When I try this I get an error:

sudo: /config/www/nextcloud/occ: command not found

I don't know what is wrong.

"php7" is missing from the command.

sudo -u abc php7 /config/www/nextcloud/occ db:convert-filecache-bigint
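If the command is run from the Unraid terminal rather than from a shell inside the container, it can be wrapped in docker exec. A sketch, assuming the container is named nextcloud; the function name nextcloud_occ and the NEXTCLOUD_CONTAINER variable are made up for illustration.

```shell
# Hypothetical wrapper: run occ inside the Nextcloud container as user abc.
# Assumption: the container is named "nextcloud" unless NEXTCLOUD_CONTAINER is set.
nextcloud_occ() {
    docker exec -it "${NEXTCLOUD_CONTAINER:-nextcloud}" \
        sudo -u abc php7 /config/www/nextcloud/occ "$@"
}
# Example: nextcloud_occ db:convert-filecache-bigint
```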

-

[Support] Linuxserver.io - Duplicati

in Docker Containers

Something changed with permissions when Unraid got updated a while back.